It’s ridiculously easy to take a bad photograph. Your brain is a far better Photoshop than Photoshop, and the amount of editing it does on the scenes your eyes capture often results in marked and disappointing differences between what you saw and what you shot.

Taking your brain out of the photography loop is the goal of [Peter Buczkowski]’s “prosthetic photographer.” The idea is to use a neural network to constantly analyze a scene until maximal aesthetic value is achieved, at which point the user unconsciously takes the photograph.

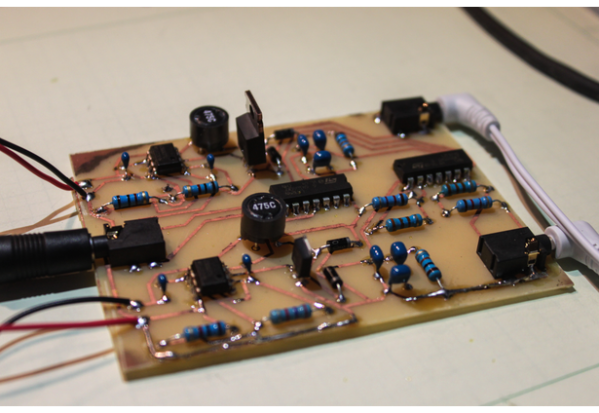

But the human-computer interface is the interesting bit — the device uses a transcutaneous electrical nerve stimulator (TENS) wired to electrodes in the handgrip to involuntarily contract the user’s finger muscles and squeeze the trigger. (Editor’s Note: This project is about as sci-fi as it gets — the computer brain is pulling the strings of the meat puppet. Whoah.)

Meanwhile, back in reality, it’s not too strange a project. A Raspberry Pi watches the scene through a Pi Cam and uses a TensorFlow neural net trained against a set of high-quality photos to determine when to trip the shutter. The video below shows it in action, and [Peter]’s blog has some of the photos taken with it.

We’re not sure this is exactly the next “must have” camera accessory, and it probably won’t help with snapshots and selfies, but it’s an interesting take on the human-device interface. And if you’re thinking about the possibilities of a neural net inside your camera to prompt you when to take a picture, you might want to check out our primer on TensorFlow to get started.

Continue reading “Neural Network Zaps You To Take Better Photographs”