By now, large language models (LLMs) like OpenAI’s ChatGPT are old news. While not perfect, they can assist with all kinds of tasks like creating efficient Excel spreadsheets, writing cover letters, asking for music references, and putting together functional computer programs in a variety of languages. One thing these LLMs don’t do yet though is integrate well with existing app interfaces. However, that’s where the TypeChat library comes in, bridging the gap between LLMs and programming.

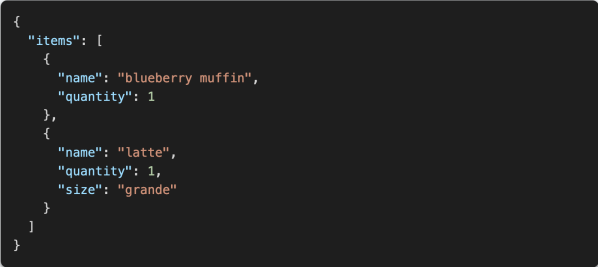

TypeChat is an experimental MIT-licensed library from Microsoft which sits in between a user and a LLM and formats responses from the AI that are type-safe so that they can easily be plugged back in to the original interface. It does this by generating JSON responses based on user input, making it easier to take the user input directly, run it through the LLM, and then use the output directly in another piece of code. It can be used for things like prototyping prompts, validating responses, and handling errors. It’s also not limited to a single LLM and can be fairly easily modified to work with many of the existing models.

The software is still in its infancy but does hope to make it somewhat easier to work between user inputs within existing pieces of software and LLMs which have quickly become all the rage in the computer science world. We expect to see plenty more tools like this become available as more people take up using these new tools, which have plenty of applications beyond just writing code.