By now, large language models (LLMs) like OpenAI’s ChatGPT are old news. While not perfect, they can assist with all kinds of tasks, like creating efficient Excel spreadsheets, writing cover letters, recommending music, and putting together functional computer programs in a variety of languages. One thing these LLMs don’t do well yet, though, is integrate with existing app interfaces. That’s where the TypeChat library comes in, bridging the gap between LLMs and programming.

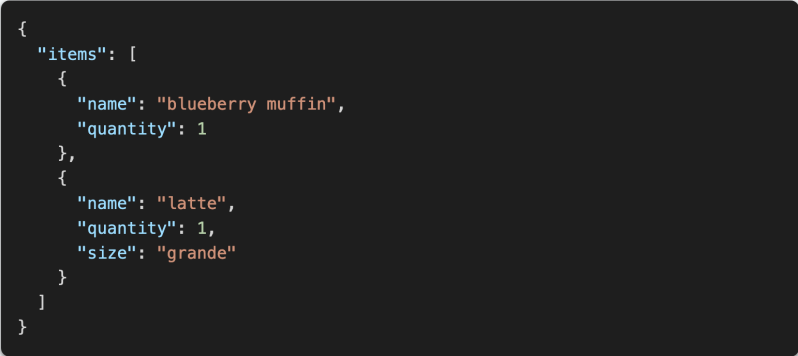

TypeChat is an experimental, MIT-licensed library from Microsoft that sits between a user and an LLM and turns the AI’s responses into type-safe output that can easily be plugged back into the original interface. It does this by having the model return JSON that matches a TypeScript type defined by the developer, so user input can be run through the LLM and the output used directly in another piece of code. It can be used for things like prototyping prompts, validating responses, and handling errors. It’s also not limited to a single LLM and can be adapted fairly easily to work with many of the existing models.
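To make the validate-and-repair idea concrete, here is a minimal, dependency-free TypeScript sketch. It does not use TypeChat’s actual API. It assumes a hypothetical `LanguageModel` function standing in for a real model call, and it uses a hand-written type guard in place of TypeChat’s schema-based validation. All names (`SentimentResponse`, `validateSentiment`, `parseAndValidate`, `translate`) are illustrative only.

```typescript
// Target type the application wants back (hypothetical example schema).
interface SentimentResponse {
  sentiment: "negative" | "neutral" | "positive";
}

// HYPOTHETICAL stand-in for an LLM call: prompt in, raw text out.
// A real app would call a model API here.
type LanguageModel = (prompt: string) => string;

type Result<T> = { success: true; data: T } | { success: false; message: string };

// Hand-written runtime check. This is a simplified substitute for
// TypeChat's own validation, not a copy of it.
function validateSentiment(value: unknown): Result<SentimentResponse> {
  if (typeof value !== "object" || value === null) {
    return { success: false, message: "Response is not a JSON object" };
  }
  const s = (value as { sentiment?: unknown }).sentiment;
  if (s === "negative" || s === "neutral" || s === "positive") {
    return { success: true, data: { sentiment: s } };
  }
  return { success: false, message: `Invalid "sentiment" value: ${JSON.stringify(s)}` };
}

// Parse the raw model output, then check it against the expected shape.
function parseAndValidate(raw: string): Result<SentimentResponse> {
  let value: unknown;
  try {
    value = JSON.parse(raw);
  } catch {
    return { success: false, message: "Response is not valid JSON" };
  }
  return validateSentiment(value);
}

// Ask for JSON; if validation fails, feed the error back as a repair prompt.
function translate(model: LanguageModel, request: string, maxAttempts = 2): Result<SentimentResponse> {
  let prompt =
    `Reply only with JSON matching: { "sentiment": "negative" | "neutral" | "positive" }\n` +
    `Input: ${request}`;
  let last: Result<SentimentResponse> = { success: false, message: "No attempts made" };
  for (let attempt = 0; attempt < maxAttempts; attempt++) {
    last = parseAndValidate(model(prompt));
    if (last.success) return last;
    prompt += `\nYour last reply was rejected: ${last.message}. Reply with corrected JSON only.`;
  }
  return last;
}

// Usage: a scripted fake model that gets it wrong once, then corrects itself.
const replies = ['{"sentiment": "happy"}', '{"sentiment": "positive"}'];
const prompts: string[] = [];
const fakeModel: LanguageModel = (prompt) => {
  prompts.push(prompt);
  return replies[prompts.length - 1] ?? "{}";
};
const result = translate(fakeModel, "I love this library!");
console.log(result); // { success: true, data: { sentiment: 'positive' } }
```

The design choice worth noting is that the application code only ever receives a value that passed validation (or an explicit failure), which is what lets the output be used directly in another piece of code.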

The software is still in its infancy, but it aims to make it somewhat easier to connect user input in existing software with LLMs, which have quickly become all the rage in the computer science world. We expect to see plenty more tools like this become available as more people take up LLMs, which have plenty of applications beyond just writing code.

Why am I left thinking this is going to lead to a world of pain in times to come? With AIs just spewing out all sorts of human-unmanageable code, we’re going to need more AIs to patch that code, and so on and so on.

We’re in the uncanny valley of AI code.

If you’ve ever worked with legacy software, you’d know that we do not need AI to write human-unmanageable code.

Similarly, “AI” (aka LLMs) learns only from what humans have already done and mixes in random exploration in an attempt to generalize. If prompted by someone with proper software knowledge, you get beautiful and understandable code.

Thus I think that the AI is not the problem; it is once again the human agent in front of the computer committing stupid actions. No AI inception is required, because no Programmer-AI can fix human stupidity.

Still better than interns and graduates of coding bootcamps spewing out all sorts of unmanageable code.

Please. As if you popped out of your mom a fully fledged programmer who writes amazing code. Remember when you were an intern or graduate writing shit code, and go thank the mentors who made you into a hopefully competent person.

Autodidactic.

I learned C in high school and college and then taught myself Python in a weekend because I needed to scrape leads for my telemarketing room.

For some of us arranging algebra sequences isn’t challenging.

If you find algebra challenging computer programming is not for you.

It’s more that bootcamps make people believe they can become a “rockstar” in a short period of time, and they actively try to make experience seem like something bad.

They mostly exist to increase competition, not to really teach.

There’s a huge difference between learning and actually writing production code. Learners should not do the latter. Learner civil engineers should not build crappy bridges, right? Learner neurosurgeons should not slaughter unlucky patients. Learner programmers should not cause immense suffering to their users. They should all learn in well-isolated learning environments, and only when they’re ready should they be unleashed into the wild.

So why is programming perceived so differently, with learners allowed to screw up production code?

Great point. More care should be taken in selecting competent employees, consultants, code farms, etc.

I don’t know if this will ever happen, or, even if it does, whether we as customers/clients will ever be able to distinguish which products take adequate care. So much is hidden from the public unless an outsider reveals it or a developer is FORCED to disclose a bug.

Unfortunately, programmers are viewed as a commodity by many execs and hiring agents — fungible, with tepid disregard for level of experience or cleanliness/security of their code. There are some execs who even measure productivity solely in bytes/lines of code produced per unit time, with disregard for quality as long as it meets some low-standards minimum of success in a project.

Clients and purchasers are left blind by NDAs (or just silence by the software company, out of fear of losing $$) to the failures and bugs that the software producer’s other clients have experienced.

Your point is that programmers are not fungible. There is a consequence — a risk — to hiring or farming out coding to less-than-competent coders. Agree completely: Frustration, cyber security, info breach … ultimately lost revenue for clients/consumers.

Meanwhile, hold-harmless clauses and NDAs prevent any liability by the crap-code producer.

The execs and bean counters producing said crap can justify via overall cost… via their iterative “agile” approach (as if this can justify release of buggy code, but I’ve heard it used), and by their airtight NDA and hold-harmless legal agreements that clients unwittingly (or because no alternative, knowingly) agree to.

Our standards as users have also sunk so low. We no longer care when our PI (personal information) is disclosed in a breach, because it has been disclosed so many times by so many software providers. Occasional BSOD or crash? NP. Snack break while reboot.

It may be ultimately up to clients (esp. larger ones) to protect themselves by negotiating reasonable SLAs in order to hit the providers where it hurts. Doubtful larger monopolies will bend much, though.

This, unfortunately, also leaves smaller clients and individuals completely powerless, absent laws that require redress despite any hold-harmless agreement.

Some progress on this front has been made regarding InfoSec, but so far this hasn’t had a great impact on preventing loss. A year of free identity protection software is useless if you already have it. Likewise, such “punishments” amount to mere pennies for the offenders.

Such software bugs have a real impact on the economy of every nation. Heck, the abuse of such bugs IS the economy of some bad-actor nations, or a significant part of it.

Perhaps there needs to be better laws, especially as software companies become even bigger monopolies.

Perhaps software quality should be regulated by a government office, perhaps the FTC. The current fraud-prevention laws enforced by the FTC don’t suffice.

Guidelines specific to software bug prevention could be created. Complaints could be received by a special department, and a special court convened to mete out significant fines to offenders (similar to the FCC, created in 1934 in the wake of new technologies and shortcomings/abuse by their providers, especially as monopolies formed). Cutting costs and want to spend less on QA? A large company will have to consider the risk as part of its bottom line, and possibly revise the release date. No more crap being shoveled out the door.

Or rather, perhaps less shoveled out the door.

Government is rarely a real solution.

What you’re trying to say is this is a prompt-based interface for interacting with a data set.

Computers are just calculators.

They cannot learn, they cannot think, the only thing they can do is process algebra sequences.

The reason you’re seeing this sort of disinformation is, as I pointed out on Quora and as a Vox reporter proved, that no AI enthusiast understands computer programming.

I’m a robotics engineer.

I had to master computer programming before moving on to robotics.

I can assure you every single learning script is written in executable algebra, one line at a time, by a man. No exceptions.

It is insulting to real engineers like me, who build the real tools that you really depend on, to pretend that a calculator can learn.

Calculators only do as I command; they do not do anything else.

Full agreement as a professional programmer.

Unfortunately we have a majority of our society that seems to go gaga over what they don’t understand and actually will ‘believe’ the spewing. Going to cause all kinds of pain, not just coding. A skewing of reality.

Yes, but everything must become its opposite, so the pain of growing up will become the pleasure of maturity.

Twilight for the Intelligent Calculators

You create robots and program for an _effect_, i.e. a product. I.e. code.

Everyone is getting swept up by the recent and obvious improvement in the _effect_ of AI.

If LLMs can be considered (as they wishfully are, by some….) to “think”, then they do so differently than we do. Agreed.

If you are saying that their effect/product cannot and will not improve to meet or exceed the abilities of a human programmer (especially an entry-level one), though, then I suggest that you are mistaken.

Ironic, it is, that a declared “robot engineer” would declare that AI could never replace a human. Doing so would put one in the same group as Michigan assembly-line workers from 1979, all but replaced by robotics (and cheap labor; not like that will ever happen to programmers or roboticists, anywhere!!!)

Science fiction has proven itself an accurate oracle, as our imaginations have always outpaced the technological limits of the time. Star Trek communicators? Robot surgery? Bionics? They’re all here.

More are coming. Faster, and with greater frequency — as our crude tools improve.

It is logical to conclude that absent any real and defined knowledge of the limits of LLMs, we should assume that their effect will increase and improve without limits.

Oh, there are certainly limits for the moment (based on cost and time, at very least). We just don’t know what those limits ultimately will be.

It would be unwise to declare any such ultimate limits, absent the research that will eventually provide anything beyond mere conjecture. This especially if such declaration comes from the hubris of human nature.

Doing so would place us in that dangerous position where history repeats itself and technology leaves us in its wake. Sails past us. Rolls over us. Demolishes us, along with tons of rock, and moves on to the next mountain.

For, alas, even if the Legend of John Henry was based on some bit of fact, and a human being actually won the day, the fact remains: given time, the steam engine won the battle in the long run.

Proving how awful AI enthusiasts are at programming.

Genius is making the complex simple.

You made the simple complex.

What does that mean?

Kind of ironic, too, how man makes more ‘complex’ things to make life more convenient. A simple example is paying a bill. Now a lot of people use a complex handheld device (costs a lot of money to buy) that has to make many connections over the internet to get from here to there, encrypting the data to make it ‘safe’, doing checks and balances on the other end to make sure it is ‘you’, etc. Before, you simply wrote a check, drove up to the water company, and paid your bill in person. You’d even tip your hat to the lady and say ‘howdy’. Nothing is simpler or more reliable than working with a person face to face. Have a problem? Drive up, go in, and hash it out in person. Everyone knows how it is done today: Press 1 for Spanish, Press 2 for…. Step, Step, Step, then … Sorry, no person available today, or on hold for a few hours, and IF you do get a person, a lot of times that person speaks ‘garbled’ English in another country … Gotta love it.

If the cell towers ever lost power for more than a few days …. Chaos. People would be lost. Yet, again ironically, renewable energy is the buzzword, and it adds much complexity and reduces reliability in the transmission system that keeps those cell towers operating and cell phones charged…. Living in interesting days!

So a programmed AI to help program being most helpful? Doubtful. Just make it more complex.

The computer program output is long-winded and nonlinear.

Very poor quality.

Wouldn’t fool a child.

When I was explaining how computers work on Reddit, they tried the same thing of turning ChatGPT on me, but when I pointed out that it was an output and not a person, they stopped after the first one.

What level of hubris does it take to continue to fail in front of me?

It’s kind of impressive that hackaday was the forum that couldn’t stop after the ruse was identified.

That’s more of an issue of cost reduction, though. And trying to push away clients more and more, together with patronizing and “guiding” people to what’s “best” for them.

Windows is a “good” example. With each version, more and more customization options are gone, because they know what you need.

It already starts with a bad choice of colors that sucks for anyone with non-standard color vision. Same for many modern graphs; they suck regarding contrast, but the designers know what’s good for you… even when it’s not distinguishable.

It means that someone didn’t appreciate my message, which served to warn others of oversimplifying — especially without the perspective gained by history.

So let me summarize and simplify my comment for the readers who admire (and/or require) simplicity… The TL;DR :

The issue is grey. The unknowns far outweigh the knowns. Anyone who purports to have an answer regarding the future capabilities and limitations of AI, most certainly does not.

Btw, been following AI since age 16. Coding since 8 years old (BASIC, then 6502 ML and assembly). LISP was next, since I read it was required in 1st year at MIT at the time, and as a prerequisite for AI studies. Obviously moved on since then. Understand your point about simplification.

The issue is complex, though, and deserving of proper consideration, at least by others who can acknowledge what we don’t know.

This especially since many human beings’ life-pursuits are involved in the matter and likely hang in the balance. The actual outcome will determine how and to what extent their life changes. There is a lot of fear and emotion involved. It gets touchy. Case in point.

At some point in my career, I learned to see grey — not just black and white. This especially where technology meets human emotion. Grey is never as simple as black and white .. as 1 and 0. Sorry.

Hilarious

This is your 3rd attempt at using a chatbot to reply.

Now hackaday looks 3x less sophisticated than Reddit.

Not good.

Here’s something that I understand that no one else on Earth does.

What is the nature of existence?

Wow. We now have a new test — the obverse of the Turing Test (and distinct from Swirski’s “Ultimatest”). One human wishes to distinguish in conversation whether the other participant is also human, and is responding without assistance from AI. Its eponymous first subject just flunked. How awesomely dystopian.

No chatbot here. No AI-assist, either. Just thoughtful replies.

Regardless, the point of original post was just proven, as you seem to have just admitted:

the _effect_ of a chatbot can meet or exceed that of a human being, to the point that the two products can be indistinguishable.

They’ve been working on better chatbots for a long, long time in order to automate customer service and other jobs (and we can all agree with the other poster about frustrating mixed results along the way). Freelance copywriters are literally already out of work because of LLMs.

Chat is not code, though. However, we can all count on continued attempts to automate a programmer’s job.

Beyond that, let’s agree to disagree on the potential limits of those attempts, and end this thread.

No, no one is out of work because of a computer program.

All LLM or AI output is a rearrangement of existing human content and therefore Plagiarism or Theft of Royalties from the artist’s perspective.

You prove your low comprehension by your lack of word economy.

Those who understand can speak it simply.

Low comprehension? You continue to spew direct (yet oh so admirably economical) insults, just as in your posts to other users.

Never said programmers are out of work. Was clear comments pertained to future.

Also made clear issue is complex and requires finesse in thought and expression.

Apologies not done in way worthy of your respect … and capable of your comprehension. Purposefully left subjects and pronouns out of sentences. Just for you.

We teach others how to treat ourselves.

Good luck. This is toxic, and NOT what Hackaday was ever about. I’m out.

You are spreading the lie that calculators can possess intelligence.

This lie makes people wait for tech solutions instead of solving the problems they’re capable of.

It is fear-mongering that retards us collectively.

Genius is making the complex simple.

You just made the simple complex.

What do you call that?

“Robot surgery? Bionics? They’re all here.”

No, they are not. First attempts exist, but it’s all still prohibitively expensive. You have robot assistance for surgery (mostly robots like da Vinci that allow for more precise motion or teleoperation), not robots that DO surgery. That’s a very different and much more difficult task.

Same for bionics, they are nowhere near close to what you see in science fiction, still very expensive and limited in ability.

I don’t doubt it is doable, but we are still FAR from it.

Fake hype is not helping anybody, except maybe convincing investors…

The same robotic appliance I used to invent the Sandwich Artist robot could be used to do brain surgery, and it’s only 19k.

I’m the first man on earth to deliver a robot at a price meant to sell not to steal investment money like everyone else.

You’re welcome America.

Not hype. I had a recent hernia repair. Doc sent a link to the manufacturer’s demo. Older robot-assisted surgery was to stabilize/scale motion and magnify vision. For some time now, most major hospitals have had robots in the OR that assist with sub-processes of procedures. Doc just checks and adjusts if needed, then tells the robot to do the work. This one placed mesh and stitched it to muscle. I was out of the OR in less than HALF the time; he told me so. Also less risk with more sensitive procedures. Example at https://youtu.be/cybRmhsvOss . $$ for the hospital. Hire fewer surgeons. Similar should AI ever assist programmers (like that’s not happening already). Or copywriters, for that matter.

Cybernetics? Already being done. Seriously. You wouldn’t know it unless you met a recipient, since insurance likely won’t cover such surgery (and don’t hold your breath). Look it up. The VA is funding bespoke surgery for vets to continue experimenting with different limbs and levels of loss. All prostheses are bespoke to begin with, but these especially, since a neuro-anatomical study must be performed, plus brain surgery/implant. In the end, they try to move the part of the limb they no longer have, and the new synthetic limb responds. Not atomic-powered like the Six Million Dollar Man, but rather Li-ion batteries. Yes, it is expensive. More docs, fMRI, brain surgery, implants, and tech are involved. As more prosthetic manufacturers produce them, and more docs train, it’ll be common. After lawsuits against insurance companies that would otherwise prefer to give people a lump of plastic.

Heard of human neurons on glass that were artificially trained to play Pong? Yeah, the present day is that weird. That sci-fi. Look it up. PoC in Dec ’21. Was it 6 neurons? Now 100s of K. Published in the journal Neuron last Oct. Reputable.

Awaiting peer review last I looked. So Frankenstein. But calculator? No.

How about 1.5 petabit/s over a single strand of multimode fiber, tested to 25.5 km? Common SM cladding used. Yup. Already been produced. The 4-, 15-, or 55-mode transceivers are working in the lab, but it’s a matter of time before a standards body adopts them and/or someone buys and produces them. 100×100 MIMO to sort the scatter, and 16QAM, IIRC.

Again, the point was regarding the FUTURE potential of AI. Until we know of some real limit, we should assume all will continue to advance.

Same week this Hackaday article posted, a new LLM called Gorilla was released by UCB/Microsoft. Purports great accuracy with API calls.

Whether one calls it a calculator or not, it is getting better as more money and time spent. That’s not a promise. (Never promised anything). It’s an observation.

You’ve never written a computer program before.

Each line is written in executable algebra one at a time by a man.

At the end of converting each one of your method steps to algebra, you then bunch all that algebra together, execute it, and resolve the errors until it is generally functioning.

Those require problem-solving skills that only high-comprehension human minds are capable of.

The presence of entropy makes ai / agi / llm impossible.

Sounds like you worked out the math, and got your robot to roll the wrapper on that sandwich… and then used it to roll the “data” you picked up from info entropy into a big fat celebratory submarine!

I like the new, mellower, you.

Keep it rollin’! =)

LLMs aside (or augmented)… For those interested in more than a few words regarding calculator vs. thinking machine, ref. Turing vs Lovelace (1950):

COMPUTING MACHINERY AND INTELLIGENCE

A. M. Turing

Published: 01 October 1950

https://academic.oup.com/mind/article/LIX/236/433/986238?login=false

I’m the only one who’s applied it to reality.

Hypothetical white paper possibilities don’t matter in the real world.

What I can assure you is real, from the thousands of questions I answer on Quora, is that people are genuinely delaying their individual progress waiting for LLM / AI / AGI solutions.

That is detrimental to humanity on the face of it.

You are spreading a lie that forces others to achieve less in life.

And all you offer are distractions.

Zero personal accountability for your actions.

I am eating a sandwich.

Well, if you like eating sandwiches and don’t want anything human and disgusting on your food, there’s the Sandwich Artist Robot on eBay.