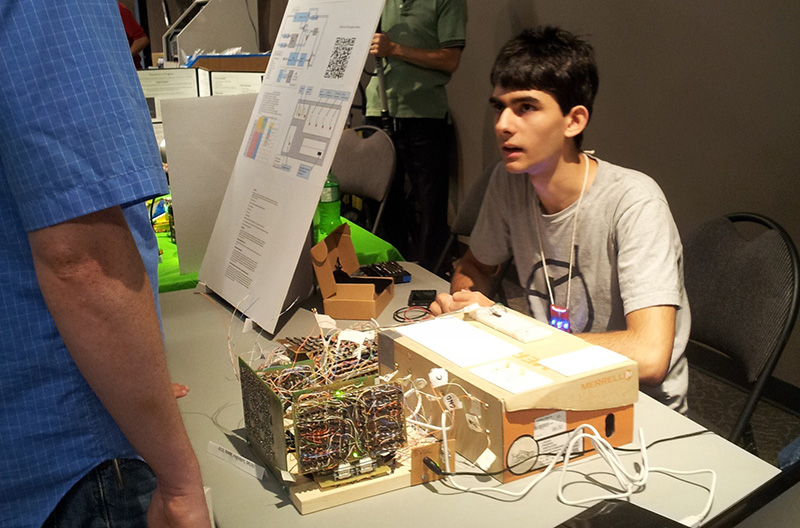

Anyone reading this uses computers, and a few very cool people have built their own computers out of chips. [zaphod] is doing something even cooler over on hackaday.io: he’s building a computer from discrete transistors.

Building a computer from individual components without chips isn’t something new – Minecraft players who aren’t into cheaty command blocks do it all the time, and there have been a few real-life builds that have rocked our socks. [zaphod] is following in this hallowed tradition by building a four-bit computer, complete with CPU, RAM, and ROM from transistors, diodes, resistors, wire, and a lot of solder.

The ROM for the computer is just 16 DIP switches and 128 diodes, giving this computer 128 bits of storage. The RAM for this project is a bit of a hack – it’s an Arduino, but only because [zaphod] doesn’t want to solder 640 transistors just yet. This setup does have its advantages, though: the entire contents of memory can be dumped to a computer through a serial monitor. The ALU is a 4-bit ripple-carry adder/subtractor, with plans for a comparison unit that will be responsible for JMP.
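For readers who haven’t met a ripple-carry adder/subtractor before, here is a minimal behavioral sketch in Python. It is not [zaphod]’s schematic; it assumes the common textbook arrangement where subtraction is done in two’s complement by XORing every B bit with a SUB control line and feeding SUB in as the first carry.

```python
def full_adder(a: int, b: int, cin: int) -> tuple[int, int]:
    """One-bit full adder: returns (sum, carry_out)."""
    s = a ^ b ^ cin
    cout = (a & b) | (cin & (a ^ b))
    return s, cout


def add_sub_4bit(a: int, b: int, subtract: bool = False) -> tuple[int, int]:
    """4-bit ripple-carry adder/subtractor (assumed two's-complement design).

    For subtraction each B bit passes through an XOR with SUB = 1 (inverting it)
    and SUB is also the initial carry, so the circuit computes a + ~b + 1.
    Returns (4-bit result, final carry out).
    """
    sub = 1 if subtract else 0
    carry = sub
    result = 0
    for i in range(4):  # the carry "ripples" from bit 0 up to bit 3
        a_bit = (a >> i) & 1
        b_bit = ((b >> i) & 1) ^ sub
        s, carry = full_adder(a_bit, b_bit, carry)
        result |= s << i
    return result, carry


print(add_sub_4bit(7, 2, subtract=True))  # (5, 1)
```

The carry out doubles as a “no borrow” flag when subtracting, which is one plausible input to a comparison unit, though how [zaphod] plans to wire his is not stated.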

The project hasn’t been without its problems – the first design of the demux for the ROM access logic resulted in a jungle of wires, gates, and connections that [zaphod] couldn’t get a usable signal out of because of the limited fan-out of his gates. After looking at the problem, [zaphod] studied how real demuxes are constructed, and eventually hit upon the correct way of doing things – inverters and ANDs.
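The inverters-and-ANDs approach can be modeled in a few lines. This sketch assumes a 4-bit address selecting one of 16 outputs (for example one per DIP-switch bank, which is an assumption about how the ROM is wired). It also makes the fan-out problem concrete: each true address line drives 8 AND inputs plus its inverter, and each inverted line drives 8 AND inputs.

```python
def demux_4to16(addr: int) -> list[int]:
    """Model a 4-to-16 decoder built from 4 inverters and 16 four-input ANDs.

    The inverters turn A0..A3 into eight signals (A0, /A0, ... A3, /A3).
    Output n is an AND of, for each bit i, the true line if bit i of n is 1
    or the inverted line if it is 0, so exactly one output is high.
    """
    bits = [(addr >> i) & 1 for i in range(4)]
    inverted = [1 - b for b in bits]  # the four inverters
    outputs = []
    for n in range(16):
        gate = 1
        for i in range(4):
            gate &= bits[i] if (n >> i) & 1 else inverted[i]
        outputs.append(gate)
    return outputs


print(demux_4to16(5).index(1))  # 5
```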

It’s a beautiful project, and something that [zaphod] has been working on for months. He’s getting close to completion, if you don’t count soldering up the RAM, and already has a crude Larson scanner worked out.

8-bits @ https://www.youtube.com/watch?v=bCVT1BtlZn0

It seems that he never finished it, though.

I saw this a while back. It takes a lot of dedication to finish something like this.

I am curious what an exercise in minimalism would look like: a Turing-complete machine, with no regard for speed, that is as simple as possible while still having a usefully large address space. Anyone know of such a project?

It’s certainly possible to build a very small CPU, but the downside is that you’ll need much more room for program storage. The big question is what kind of technology you allow yourself for this program storage. If you insist on individual diodes, transistors and resistors, the program storage will get very big (depending on the program of course).
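One well-known illustration of this trade-off is SUBLEQ, a one-instruction design that nobody in this thread mentioned; it is offered here only as an example. Each instruction is three words `a, b, c`: subtract `mem[a]` from `mem[b]`, and jump to `c` if the result is zero or negative. The sketch below is a Python interpreter plus a tiny program that adds two numbers; the halting convention (jump to a negative address) is an assumption, as conventions vary.

```python
def run_subleq(mem: list[int], pc: int = 0, max_steps: int = 10_000) -> list[int]:
    """Run a SUBLEQ program in place and return the memory.

    Instruction at pc is (a, b, c): mem[b] -= mem[a]; if mem[b] <= 0 jump to c,
    otherwise continue at pc + 3. Jumping to a negative address halts.
    """
    steps = 0
    while pc >= 0 and steps < max_steps:
        a, b, c = mem[pc], mem[pc + 1], mem[pc + 2]
        mem[b] -= mem[a]
        pc = c if mem[b] <= 0 else pc + 3
        steps += 1
    return mem


# Y = Y + X using a scratch word Z (addresses: X=9, Y=10, Z=11)
program = [
    9, 11, 3,    # Z -= X   -> Z = -X
    11, 10, 6,   # Y -= Z   -> Y = Y + X
    11, 11, -1,  # Z -= Z   -> Z = 0, then halt
    7, 5, 0,     # data: X = 7, Y = 5, Z = 0
]
print(run_subleq(program)[10])  # 12
```

The “CPU” is just a subtractor and a sign test, but a single addition already costs nine words of program memory, which is exactly the storage problem described above.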

If you’re really serious about it, use core memory as program memory, bootloaded from an external source such as a computer’s parallel port, punched cards or paper tape, or magnetic tape.

The bootloader is only a few bytes, which could be stored in a diode matrix.

If discrete 2T/capacitor or even 6T SRAM cells are used, the machine will indeed get really big, so flip-flops should only be used for registers and clocked logic.
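To put a number on “really big”, here is a back-of-the-envelope count of the transistors in the bit cells alone, using the two cell types mentioned above. The 256-byte size is a hypothetical example, and the count deliberately leaves out decoding, sensing, and drive circuitry.

```python
# Transistors per bit cell for the two cell types mentioned above.
CELL_TRANSISTORS = {"6T SRAM": 6, "2T + capacitor": 2}


def storage_transistors(words: int, bits_per_word: int, per_cell: int) -> int:
    """Transistors in the bit cells alone.

    Address decoding, sense amplifiers, write drivers and, for the 2T cell,
    the capacitors and refresh logic all come on top of this.
    """
    return words * bits_per_word * per_cell


# Hypothetical example: a modest 256-byte memory.
for name, per_cell in CELL_TRANSISTORS.items():
    print(f"{name}: {storage_transistors(256, 8, per_cell)} transistors")
```

Even the smaller figure is several thousand hand-soldered parts before any support circuitry.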

While researching my own project I stumbled across this one: http://www.ostracodfiles.com/compactpage/menu.html

This is very close to what I was expecting. Thanks, it is very interesting and the project is complete with an assembler.

Theoretically we’re about 7 gigapeople, so each person could contribute one transistor to build the thing.

Although it’s not a new idea, it’s a nice project, and a good way for a young person to build knowledge of transistors, computers, and digital logic. Keep it up!

In the long run I want to build a computer from scratch, but I’m having a hard time figuring out where to start with something like a (relatively speaking) simple Z80 breadboard build. I wish there were a resource that offered hand-holding every step of the way. It’s hard work finding, collating, and then retaining the foundations of such an endeavor.

Back in the days when it was popular, people figured it out on their own. It was before the web, so there were no YouTube videos or Instructables back then.

Look at the Zilog datasheets and application notes, and look at existing projects. That’s sufficient for an individual to do a proper design; skills and experience are required to fill the gap, and there are tons of textbooks and digital design courses for that. There won’t be a step-by-step, hand-holding guide, just as there isn’t one for climbing Mt. Everest. Just like in a video game, you have to grind and learn the basic skills before attempting it, and that will take time.

The shortcut, requiring no skills other than soldering, would be to look for kits that come with some documentation and just solder the whole thing together. You won’t learn anything except soldering and assembly.

I have a book at home from the 80s about making a computer based on the 8080 chip. It’s about as step-by-step as any website I have seen; actually, it’s probably more so. I also have a series of old Popular Electronics magazines from the 70s that detail building a very complete and usable computer around a Z80. If you built all the optional modules, it’s pretty much a primitive PC with a TV terminal, keyboard, cassette tape storage and everything!

So... I don’t have any way of knowing what percentage of the ‘old timers’ used written materials and what percentage just used a handful of parts, wire-wrap boards, and their heads, but don’t try to give us that uphill-both-ways-in-the-snow story!

I too have had a hard time finding information about how this kind of thing works; as tekkienneet said, you have to figure it out on your own. However, a great resource is the book _The Elements of Computing Systems_ (http://www.nand2tetris.org/). It teaches how computers function by giving step-by-step instructions on how to build and program one from first principles.
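The book’s starting point is the NAND gate, from which the other gates are then built. As a taste of that first-principles approach, here is a Python sketch that derives NOT, AND, OR, and XOR from a single NAND function. The function names are just illustrative; the book itself uses its own hardware description language rather than Python.

```python
def nand(a: int, b: int) -> int:
    """The one primitive gate: output is 0 only when both inputs are 1."""
    return 1 - (a & b)


def not_(a: int) -> int:
    return nand(a, a)  # tie both inputs together


def and_(a: int, b: int) -> int:
    return not_(nand(a, b))


def or_(a: int, b: int) -> int:
    # De Morgan: a OR b = NOT(NOT a AND NOT b) = NAND(NOT a, NOT b)
    return nand(not_(a), not_(b))


def xor(a: int, b: int) -> int:
    # Classic four-NAND XOR
    n = nand(a, b)
    return nand(nand(a, n), nand(b, n))


for a in (0, 1):
    for b in (0, 1):
        print(a, b, and_(a, b), or_(a, b), xor(a, b))
```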

Another resource would be undergraduate electrical engineering textbooks on Amazon.

For example, _Logic and Computer Design Fundamentals_ by Mano.

While these are a little expensive, they generally provide a good low-level understanding of what you need to do. If you are looking for a kit, don’t bother with this, but if you actually want to learn, this is a good idea.

$10 on abebooks.com, thanks for the recommendation!

Thanks everyone for the info and advice.

Have you seen Quinn Dunki’s Veronica site? Reasonably handholdy, very informative. Otherwise, nand2tetris as suggested, or in the old school, _Digital Computer Electronics_ by Malvino. Trouble is, if you’re at the level of breadboarding a CPU, any hand-holding completely glosses over a lot of actual learning, which is why resources tend to be at the deeper electronics level.

Best is to just start the project. I never ended up building my Z80; I still have the chips somewhere, too. It was all the little questions I didn’t know the answers to that kept me from doing it, but in reality I probably could have just found the answers one at a time along the way. In fact, you learn more when it doesn’t work than when it does. Now I don’t have much time for projects.

TL;DR: just start at the beginning and don’t worry if you don’t know where the end is.

That’s a lot of soldering. We built an 8-bit adder out of transistors back in college, and that alone was over 200 transistors. Hard to imagine a complete system.

The best part of that story is that I chose resistor values that were too small for the gates, and at 12 V it ended up requiring a 10 A power supply to run it.
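The arithmetic behind that 10 A is just Ohm’s law multiplied by the number of gates. This sketch assumes a resistor-transistor style of gate where each conducting transistor pulls its resistor to ground and draws roughly V/R; the commenter’s actual logic family and resistor values aren’t given, so the numbers below are hypothetical.

```python
def supply_current(volts: float, pullup_ohms: float, gates_on: int) -> float:
    """Approximate static supply current in amps.

    Assumes each gate whose transistor is conducting draws V / R through
    its pull-up resistor (transistor saturation voltage ignored).
    """
    return volts / pullup_ohms * gates_on


# Hypothetical: 200 gates on a 12 V supply
print(supply_current(12, 1000, 200))  # with 1 kOhm pull-ups
print(supply_current(12, 220, 200))   # with 220 Ohm pull-ups
```

With 1 kΩ pull-ups that is 2.4 A, while dropping to 220 Ω pushes it to about 10.9 A, so undersized resistors alone can plausibly explain a supply in the 10 A range.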

What logic family did you use? My adder (4 bits, of course) took ~70 transistors, so an 8-bit version would have been ~140 transistors, meaning that you must have had more complex gates.

Solder 640 transistors?

I think he had better try making core memory instead!

I think core memory is even trickier. Not as many solder joints, but very finicky circuitry, and wiring the core plane itself is probably as much work as wiring 640 transistors.

Memory has always been the tricky part, which is why the most important steps in the evolution of computers were in memory technology. Both magnetic core and transistor memory (either static flip-flops or dynamic cells) require separate discrete circuits for each bit, which is why many early computers used some sort of serial system such as a spinning drum, acoustic delay line, or moving tape (magnetic or punched paper). Discrete memory cells also need a lot of support circuitry to select a particular word, which is a bigger challenge than building the bit cells themselves. Even when random-access memory made its debut, the storage medium was the face of a CRT rather than individual storage cells: data was stored as electrostatic charges on the faceplate of the tube, which greatly reduced the complexity of the circuitry required to access individual bits. I think this is still a little outside the “scope” of hobbyist-accessible technologies.

I would think the easiest to implement would be delay-line memory. Yes, it’s slow, because the data is always circulating and you only have one moment in the whole cycle to read or write a particular bit. Traditionally these used mercury as the medium and quartz crystals as the read and write transducers, or solid chunks of quartz for the medium, but I’ve seen hobbyists implement delay-line memory in air and water in recent years.
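The access-timing problem is easy to see in a software model. This Python sketch treats the delay line as a ring of bits that advances one position per bit-time; a bit can only be read or rewritten while it is at the transducer. It models the timing only, not any particular physical medium, and the one-bit-per-tick granularity is a simplifying assumption.

```python
from collections import deque


class DelayLineMemory:
    """Circulating-bit memory: only the bit at the transducer is accessible."""

    def __init__(self, n_bits: int):
        self.line = deque([0] * n_bits)  # line[0] is the bit at the transducer
        self.n_bits = n_bits
        self.position = 0  # logical address currently at the transducer
        self.ticks = 0     # bit-times elapsed

    def tick(self) -> None:
        # One bit-time: the bit leaving the line is re-injected at the far end.
        self.line.rotate(-1)
        self.position = (self.position + 1) % self.n_bits
        self.ticks += 1

    def _wait_for(self, addr: int) -> None:
        while self.position != addr:
            self.tick()

    def read(self, addr: int) -> int:
        self._wait_for(addr)
        return self.line[0]

    def write(self, addr: int, bit: int) -> None:
        self._wait_for(addr)
        self.line[0] = bit


mem = DelayLineMemory(16)
mem.write(5, 1)      # waits 5 bit-times for address 5 to arrive
mem.read(4)          # just missed it: waits 15 more bit-times
print(mem.ticks)     # 20
```

The worst-case wait is one bit-time short of a full circulation, which is why programs for delay-line machines were arranged around the timing of the loop.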

A common thing I see in computers people build from the ground up is that they attempt to use “modern” (i.e., post-1960) architectures, which assume the existence of random-access memory devices. In the real world, computers built from discrete transistors generally used memory devices of the same era, which resulted in radically different system architectures.

For example, I once had the opportunity to learn hands-on about 1950s computer technology on a Philco AN/FYQ-9 computer, which had a graphical display and a trackball and numerical keyboard for input. This system was used for tracking aircraft in real time for parts of the North American air defense system. The FYQ-9 used discrete transistors everywhere, and a 3600 RPM magnetic drum of twenty-something tracks for its main memory, and discrete flip-flops for registers. Being a rotating memory, trying to use this like random-access memory would have been ridiculously slow, so instead of arranging the instructions sequentially around the drum, each instruction included the address of the following instruction, which was placed according to where the drum was expected to be positioned by the time the instruction was finished executing. This meant that memory load and store instructions (used to transfer data between the drum and registers) would be located physically close to the data they were reading or writing, wherever possible. It also meant that modern programming languages wouldn’t have worked at all without specific optimizations for the architecture. But the important thing was that it was POSSIBLE to create a real-time data processor this way, using technologies that today would seem hopeless.
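Here is a rough Python model of why the next-instruction address mattered. At 3600 RPM the drum turns 60 times a second, about 16.7 ms per revolution. The track size of 64 words and the execution times are hypothetical, and the model ignores operand fetches and the case where two instructions would want the same drum word, both of which a real assembler for such a machine would have to handle.

```python
RPM = 3600
REV_MS = 60_000 / RPM      # about 16.7 ms per revolution
WORDS_PER_TRACK = 64       # hypothetical track size, for illustration only


def wait_word_times(head: int, target: int, words: int = WORDS_PER_TRACK) -> int:
    """Word-times spent waiting for `target` to rotate under the read head."""
    return (target - head) % words


def total_wait(exec_times: list[int], optimized: bool, words: int = WORDS_PER_TRACK) -> int:
    """Total rotational wait (in word-times) for a straight-line program.

    exec_times[i] is how many word-times instruction i takes to execute;
    fetching an instruction takes one word-time as it passes the head.
    """
    addr, head, waited = 0, 0, 0
    for t in exec_times:
        waited += wait_word_times(head, addr, words)
        head = (addr + 1 + t) % words  # drum position when this instruction finishes
        if optimized:
            addr = head                # next instruction placed right under the head
        else:
            addr = (addr + 1) % words  # conventional sequential layout
    return waited


program = [3, 5, 2, 4]  # hypothetical execution times in word-times
for opt in (False, True):
    w = total_wait(program, opt)
    label = "optimized" if opt else "sequential"
    print(label, w, "word-times,", round(w * REV_MS / WORDS_PER_TRACK, 2), "ms")
```

With a sequential layout, every instruction that takes any time to execute lets its successor slip past the head, costing almost a full revolution; placing each successor where the drum will be brings the wait to zero in this simplified model.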

The bottom line here is that there’s a reason things evolved in the way they did, and with each new advance in technology, old technologies became utterly impractical and therefore obsolete. One of these obsolete technologies is discrete transistor logic, because you just couldn’t DO random-access memory of any useful size with it. There was some overlap – some discrete transistor computers used really advanced technologies like magnetic core memory, but I know of NO example of a computer with solid state memory that didn’t use integrated circuits for almost everything, including the memory.

So here’s a word of advice to those who would build computers from discrete transistors or other low-tech devices: consider the memory FIRST, because that’s the hard part, and it will dictate many aspects of the overall system design.