A while ago, [Paul Stoffregen], the creator of the Teensy family of microcontrollers dug into the most popular Arduino library for driving TFT LCDs. The Teensy isn’t an Arduino – it’s much faster – but [Paul]’s library does everything more efficiently.

Even when using a standard Arduino, there are still speed and efficiency gains to be made when driving a TFT. [Xark] recently released his re-mix of the Adafruit GFX library and LCD drivers. It’s several times faster than the Adafruit library, so just in case you haven’t moved on the Teensy platform yet, this is the way to use one of these repurposed cell phone displays.

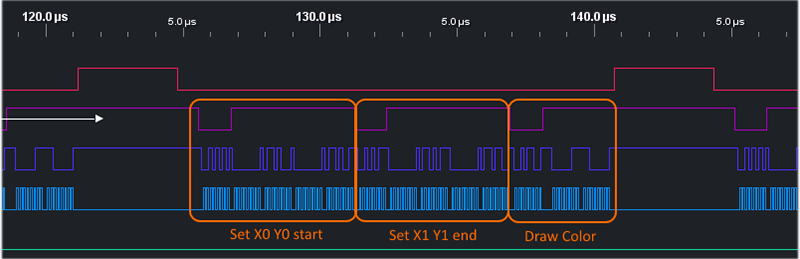

After reading about [Paul]’s experience with improving the TFT library for the Teensy, [Xark] grabbed an Arduino, an LCD, and an Open Workbench Logic Sniffer to see where the inefficiencies in the Adafruit library were. These displays are driven via SPI, where the clock signal goes low for every byte shifted out over the data line. With the Adafruit library, there was a lot of wasted time in between each clock signal, and with the right code the performance could be improved dramatically.

The writeup on how [Xark] improved the code for these displays is fantastic, and the results are impressive; he can fill a screen with pixels at about 13FPS, making games that don’t redraw too much of the screen at any one time a real possibility.

What is the relevance of or benefit of this over http://ugfx.org/? How many different raw display boards and output boards can this package support?

uGFX provides both output *and* input for full-fledged GUIs. It’s a large piece of software, though it can be stripped down. It’s also complex, and porting isn’t exactly trivial. Want Teensy support? This thread requesting it ran six months, and it’s still questionable whether it was actually fully ported or not:

https://forum.pjrc.com/threads/26535-uGFX-Port-for-Teensy-3-ChibiOS-available

Adafruit’s GFX is far simpler, supporting only basic drawing primitives – points, lines, rectangles, circles, text. Smaller. Easier to get working on the intended hardware. Easier to port. Better for someone just starting out and wanting to push some pixels to their new LCD.

This is due to the fact that we don’t / didn’t work on the port for the Teensy ourself. It is a userwh is / was working on that.

When we’re provided with hardware we port our library to any system.

Many companies, manufacturers and DIY people send us boards to get a proper port. If adequate we add the ports to the library in order to list them as officially supported.

Please excuse my drooling…

if fast spi is needed why not just choose a microcontroller that supports dma?

Where is the fun in optimizing that? :-) I already had a Teensy 3.1 if I just wanted faster LCD use. The purpose was to see just how fast an 8-bit AVR could run this code as an optimization “exercise” (and also provide a “drop-in” software library that can speed up existing user’s sketches on the same hardware).

Did you see the assembly “game” + somewhat optimized LCD screen software written the other day?

http://petenpaja.blogspot.com/2013/11/toorums-quest-ii-retro-video-game.html

and a video of it in “action”

https://www.youtube.com/watch?v=sLvgW_zb6bQ

Nice! reminds me of Conan on the Apple][

Montezuma’s Revenge!

Yes! I have seen that project. Very impressive. I especially love the way he got color on 16MHz AVR.

I have done a some work with “bit-banged” video on AVR (with a “re-mixed” TVout that uses tiled characters), but so far it has all been black and white (and mostly tech-demo, no real playable game yet). https://imgur.com/IgsmEY3 https://i.imgur.com/gNyTb6u.jpg and a sprite test https://www.youtube.com/watch?v=Imk5ony8JHI .

I’ll have to ponder adding color…

Andre Lamothe added colour to one of his consoles, I think the smallest PIC-based one, by using a selectable delay line chip. He feeds the colour burst into the input, then just by selecting how far along the delay line you want to use as the output, he can give the necessary phases for colour on composite video. Seems a quick and easy way of doing it. Same sort of colour Atari used for their 8-bit computers, and the 2600 console.

Of course it only works with composite displays, NTSC or PAL needing some software tweaks. But it works! The info’s all on the web to look up, and I think in the free downloadable part of his E-book.

While the hardware supports DMA, Paul’s library didn’t use it. (at least when I looked at it last year.) Freescale had a brain dead design in their DMA that required interleaving each *single* transfer with SPI setup making it a moot point for block transfer. Paul’s Arduino is pretty fast as it tries to keep the FIFO filled. Yes, someone did made a DMA SPI driver by using software chip select.

I was involved in porting DMA SPI over to ChibiOS for the K22 (mostly testing/tweaking). It was difficult to use DMA to block fill the FIFO, so ended up using single DMA transfer. There was quite a bit of DMA setup overhead for each request though, but yes the SPI was fully utilized and leaving the CPU to do something else. In my case, it is a RTOS so the cycles can get utilized by other tasks. Not so much for Arduino unless you coded it that way.

I don’t quite understand what you mean by this. Can you explain it in another way please?

They talked about issues here:

——————————————————————

https://forum.pjrc.com/threads/23253-teensy-3-0-SPI-with-DMA-nice-try?s=a81b7ff451e696251c55395a0cbe5418

>Major DMA Limitation

There’s a bug in the silicon that makes the Scatter-Gather feature of the DMA useless. So we’re limited to one Major loop per SPI “transfer” (that is a transfer of multiple words) unless some ISR reloads the TCD or channel linking is used

>SPI Limitations

* The SPI hardware has chip select signals, but they need to be activated by asserting the appropriate bits in the upper half-word of PUSHR.

* Short writes to PUSHR are filled with zeros, i.e. they will clear previously set bits in the upper half-word of PUSHR. It would be cool if the SPI kept these bits during short writes.

* The above two points are also true for the transfer attribute select bits and other control bits in PUSHR.

>This boils down to this thing can’t do much more than an AVR SPI if you want DMA. In fact, the resulting code I’ve come up with looks pretty much like my AVR code that handles SPI transfers in the background. On the plus side, as we can’t use the hardware CS signals, we are forced to implement our own CS scheme, which in turn can be anything we like. We can even place the chip selects on an I²C port expander, if such madness is necessary.

—————–

So if you were to want to use their hardware chip select line, you would have to alternate each data byte/word with an upper half word for the setting in your buffer. Fine for a single transfer, but you are really using DMA for a block transfer (e.g. SD memory) or you won’t bother in the first place.

The short write fills the upper half word is not documented, meaning that they do not intend people to use it. So by design they want people to fill a large buffer with alternating data and setting – if that’s not brain dead, I don’t know what is.

The DMA SPI is now available for LC. :) find crteensy/DmaSpi on github

Seems the TLDR is just to comment out the SPI-wait operation? I’ve done similar with driving 2-channel WS2811 from SPI ram on a small AVR (at no timing consequence over standard single channel), the 2811 protocol is much slower than the SPI operations so why waste cycles in between…

Yes, that will indeed save you a few cycles per SPI operation, and can be an easy optimization (as long as you can guarantee you won’t try to send “too fast”). However, just doing that would, I believe, not have squeezed nearly as many cycles from this library. Removing the “this” pointer overhead (both passing and accessing) and virtual calling overhead was pretty significant also. Also toggling /CS and DC “too often”, careful inlining etc. It all adds up.

And this is why driver writing is a skill in itself. Well done in writing a driver as it should be – always with an eye on the overhead. The best drivers are the ones you don’t even know are there…

Interesting writeup – well constructed and presented. I’ve limited interest in software development but I still read the whole thing. I also have some of those displays that I’ve been using with the Adafruit library so some definite practical value for me.

I know it can be a bit of a task, but good technical writing that follows a story from recognition of a problem through to solution is a great learning aid for people outside that field.

Gcc 5.1 does devirtualization now for optimization. Even 4.9.2 is noticably better than 4.8.1, especially when it comes to link-time optimization.

Another thing to watch out in the Adafruit libraries is that their bit-bang SPI is not well optimized. I wrote an implementation that is 3x faster.

http://nerdralph.blogspot.ca/2015/03/fastest-avr-software-spi-in-west.html

That’s…. actually pretty amazing.

And instead of fastpin you can go back to Wiring (what Arduino was forked from) to get a fast digitalWrite.

http://www.codeproject.com/Articles/589299/Why-is-the-digital-I-O-in-Arduino-slow-and-what-ca

Download it from wiring.org.co

Another reason yet why I’ve stayed away from arduino – wonderful for knocking together some hardware for a quick proof of concept, but libraries are a crutch that no-one ever seems to understand, they just borrow someone else’s code and make simple calls to it, never realizing how poorly a lot of it is written. If it works for what you need, great. But often times the is a far simpler, easier, and better way of doing things that people never knew was there because they only know how to put together a sketch.

Now the monster is so huge and out of control, you could spend a lifetime re-writing the libraries to optimize them and get the most bang for your buck. it comes full circle when you start writing your stuff from the floor up because its more efficient and you know what’s going on.

We’re raising a whole generation of “coders” who don’t actually know how to “code”! how is this a good thing?

I don’t think it’s an issue of them not knowing how to code. I think it’s more an issue of high-level programming vs. low level programming.

For most high-level programmers, you look for a library to do it for you instead of rolling your own because you don’t want to expend the effort. However, for coders like you and me, working low-level can be fun and more satisfying than using what someone else wrote. So the high-level coders rely on us to find those mistakes and fix them.

Thus GitHub.

(whispering) …Cosa…

https://github.com/mikaelpatel/Cosa

it’s interesting, you see this loop of coding skills and techniques repeating over time. I see the same optimisations here as i’ve seen happen in the past with different areas. what is old is new again. people will say, keeping it understandable is part of the goal but you can do both. there are a lot of people who’ve only used scripting languages and not really caring.

partially its just part of the current mantra, take whats out there, or bang up something that works and let other people make it better and call that part of the system, just make it good enough to be able to sell it and pass the buck on.

there is a misguided notion that it doesn’t have to be that fast for people learning, it is just not true since there are a lot of things you wouldn’t be able to achieve with mediocre performance and a lot of people won’t have the same ability, time or simply want to be able to make it better.. not being able to get the end result due to a lack of say refresh speed can be frustrating for someone only wanting to dip the toes in.

i don’t think its as much of raising a generation of coders who can’t code, i think its more raising a lot of people who don’t want to be coders, but do want to be involved in hacking stuff together to do neat things. like using a hammer doesn’t mean you need to know about how to make one.

decent core libraries are great, but these companies aren’t in it for that, they’re electronics box shifters getting it out to as many as possible. the next gen radio shack.

luckily there are people around that enjoy making pastries out of wheat. (insert favourite food/ingredient here)

“like using a hammer doesn’t mean you need to know about how to make one”

That’s a bad analogy. A hammer is not an abstraction, whereas code libraries are.

If you don’t understand how electronics works, then putting something together that works reliably is difficult, and debugging problems is near impossible.

its a good enough analogy to show you do not need to understand underlying mechanisms to do what a considerable amount of what the people buying things like this are doing, and they are doing. feel free to choose a different tool to use for analogy. maybe a car is better, its got lots of electronics, code and complex interactions but its abstracted down to a few easy to use controls.

because we can do this things we tend to look at it from that perspective, but these things are mostly toys with interfaces that have gotten much simpler. i’ve seen people who know nothing about electronics or much coding getting things together. a good abstraction allows you to hide the innards well.

cheers

The first time I drove an LCD, I did it on a PIC. Starting with a simple example library provided with it, that was written for the MSP430, and commented mostly in Chinese.

Within an hour, I had it running. Within a day, I had it running 300% faster. In two days, I had it completely rewritten as a protothread, where drawing ops are pushed into a ring buffer rather than immediately drawn. The actual drawing routine can be called when the CPU has spare time, and it checks a global flag every few pixels that causes it to exit and release the SPI bus; with the checks performed almost exclusively while waiting for SPI transfers to complete, so there’s little speed penalty. An “interrupt” can occur even in the middle of an op, like drawing a line/rect/circle/char, it resumes the op when next called. This allows it to be “interrupted” by things running in non-interrupt context, and multitask fluidly regardless of whether a preemptive multitasking kernel is used.

Regardless of how cool that may be to me, I would NEVER release that as example code, or even as a general-purpose library. Should someone want to port that, or add features to it, it would be significantly harder for them to understand well enough to do so. In fact I bet that if my own, current code was somehow sent back in time, and provided to me as an example when I was just starting out with a LCD, if it didn’t work on the first try I would probably reject it and look for something simpler.

Simple non-optimized code has its place.

“Simple non-optimized code has its place.”

And I’ll add that complex, cut/paste spaghetti code has no place. On many occasions I’ve “optimized” other people’s mostly by deleting superfluous code, streamlining what is left so it flows well. The resulting code runs faster, takes less space, and is easier to maintain.