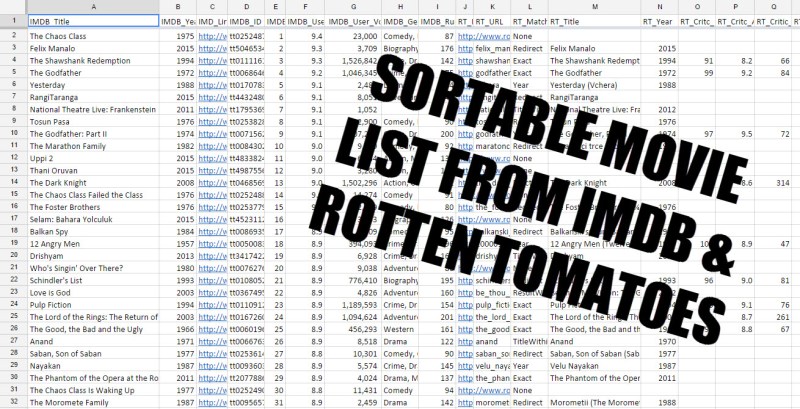

Well, it’s the holiday season! Which means two things: long, sometimes uncomfortable gatherings with family, and our favorite, free time. So if you’re looking to catch up on some movies, [Rajesh Verma] has you covered. He’s compiled a database that aggregates data from both Rotten Tomatoes and IMDB!

That means you can very easily sort by critics’ favorite, audience favorite, IMDB favorite, underrated, overrated, year, etc. He started by dabbling with aggregation scripts for just Rotten Tomatoes, and after he released one showing both Audience and Critic scores, Rotten Tomatoes updated their site to include that sorting method! Coincidence? Maybe.

Either way, the site update broke his original script, so he’s come back with something even better: a list covering both IMDB and Rotten Tomatoes.

He started the project thanks to [Michael] at Meta Film List, who had the idea of making movie databases more accessible. Without further ado, you can check out the list on Google Docs.

I was looking to use IMDB data for another purpose, but ran into restrictions in the terms of use:

From IMDB’s web site:

“The data must be taken only from the plain text data made available from our FTP sites (see alternative interfaces for more details and for links to our FTP servers). You may not use data mining, robots, screen scraping, or similar online data gathering and extraction tools on our website. If the information/data you want is not present in the data files available from our FTP sites, it means it’s not available for non-commercial usage.”

“The data can only be used for personal and non-commercial use and must not be altered/republished/resold/repurposed to create any kind of online/offline database of movie information (except for individual personal use). Please refer to the copyright/license information enclosed in each file for further instructions and limitations on allowed usage.”

Rajesh Verma violates both of these clauses by screen scraping and republishing.

Until it happens, I can’t draw a bright line between abuse of terms of service and removal of a useful search feature on IMDB, or the withdrawal of free movie data that *is* allowed for personal use via FTP by IMDB. I think it’s plausible that abuse could precipitate or hasten such a reduction in usefulness. I might still be able to use IMDB’s data without violating their terms of use, and it’ll affect me if that data is withdrawn.

If they didn’t want him to have the information, they shouldn’t have given it away so easily. ;)

Cool and useful! As long as this is for personal use I hope IMDB/RT does not go to war against it. I wonder if the database sheet can be copied over to an Airtable ( https://airtable.com/ ) sheet, which has very handy filtering/sorting tools.

Being uncomfortable with your family will be the end of civilization.

The problem with reviews is that they are very often written by people who work for the film company, their friends, etc. You see glowing reviews for complete trash productions, or for movies that haven’t even been released yet, which is ridiculous. Amazon has the same problem.

If everybody likes a movie, then you can easily discover it using either IMDB or RT. The hard to find “diamonds in the rough” are usually those that are divisive, with strong reactions of either love or hate.

I suppose you could find a few of them by comparing ratings from two sites and looking for disparities. But that relies on the demographics of the two sites being different enough to reliably cause a noticeable disparity. And of course, the biggest disparities will show up when the script fails to properly match a movie across the two sites, so any attempt at such a search will flood you with those. I think any useful signal will be buried in the noise, so to speak.
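To make the matching concern concrete, here is a rough Python sketch of comparing ratings across two sites. Everything in it is an assumption for illustration: the row layout (`title`, `year`, `rating`, `score`), the helper names, and the choice to match on a normalized title plus release year. It is not how [Rajesh]'s script works. Rows without an exact match are skipped instead of being guessed at, since bad matches are exactly what would produce the largest bogus disparities.

```python
import re


def normalize_title(title: str) -> str:
    """Lowercase a title and collapse punctuation/whitespace (hypothetical helper)."""
    return re.sub(r"[^a-z0-9]+", " ", title.lower()).strip()


def rating_gaps(imdb_rows, rt_rows):
    """Return (title, year, gap) tuples sorted by absolute gap, largest first.

    Assumed inputs: imdb_rows have a 0..10 'rating', rt_rows have a 0..100 'score'.
    gap = IMDB rating scaled to 0..100 minus the RT score.
    """
    # Index RT rows by (normalized title, year) so remakes with the same
    # title but a different year are not confused with each other.
    rt_by_key = {(normalize_title(r["title"]), r["year"]): r for r in rt_rows}

    gaps = []
    for row in imdb_rows:
        key = (normalize_title(row["title"]), row["year"])
        match = rt_by_key.get(key)
        if match is None:
            continue  # unmatched: skip rather than risk a false disparity
        gaps.append((row["title"], row["year"], row["rating"] * 10 - match["score"]))

    gaps.sort(key=lambda g: abs(g[2]), reverse=True)
    return gaps
```

Keying on the year as well as the title is a cheap way to cut down on mismatches, though it will still miss films whose titles or release years differ between the two sites.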

Using RT data you can easily make up a score based on divisive votes by critics.

I used the following empirical formula to get a disparity rating (0..1):

rt_critic_disparity = 2*min(rt_critic_fresh, rt_critic_rotten)/rt_critic_total
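
As a minimal Python sketch of the formula above: the function name, the assumption that the total is simply fresh plus rotten reviews, and the handling of movies with no reviews are mine, not part of the original formula.

```python
def rt_critic_disparity(fresh: int, rotten: int) -> float:
    """Return 0.0 when critics are unanimous and 1.0 when they are evenly split."""
    total = fresh + rotten  # assumption: rt_critic_total = fresh + rotten
    if total == 0:
        return 0.0  # assumption: no reviews means no measurable disparity
    return 2 * min(fresh, rotten) / total
```

For example, 30 fresh and 10 rotten reviews give 2 * 10 / 40 = 0.5, while a 50/50 split gives 1.0.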

I also made up a critic-to-public score ratio to see how much higher or lower the critics rated a movie compared to the public. Finally, I combine the two scores in a weighted average that increases the overall movie rating, trying to identify movies the critics were more polarized about and the public didn’t like quite as much. I believe that is usually the case for good movies that some people don’t get or find boring and that critics find somewhat controversial, according to my taste, obviously.

Adjusting the weights I also managed to make “so bad it’s so good” or fairly unknown movies rank higher.
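
The exact ratio, weights, and way of boosting the rating aren't spelled out here, so the following Python sketch is only one hypothetical reading of the combination described above. The function names, the cap on the ratio, the default weights, and the multiplicative boost are all assumptions chosen for illustration.

```python
def critic_disparity(fresh: int, rotten: int) -> float:
    """Disparity rating in 0..1, as in the formula given earlier."""
    total = fresh + rotten
    return 2 * min(fresh, rotten) / total if total else 0.0


def critic_public_ratio(critic_score: float, audience_score: float) -> float:
    """Critic score divided by audience score (both assumed to be 0..100)."""
    # Assumption: clamp the denominator to avoid dividing by zero.
    return critic_score / max(audience_score, 1.0)


def adjusted_rating(base_rating, disparity, ratio, w_disparity=0.5, w_ratio=0.5):
    """Boost base_rating by a weighted average of the two scores (hypothetical)."""
    # Assumption: cap the ratio at 2.0 and rescale it to 0..1 so that both
    # inputs to the weighted average live on the same scale.
    ratio_score = min(ratio, 2.0) / 2.0
    boost = (w_disparity * disparity + w_ratio * ratio_score) / (w_disparity + w_ratio)
    return base_rating * (1.0 + boost)
```

Raising `w_disparity` relative to `w_ratio` favors movies that split the critics, while raising `w_ratio` favors movies the critics liked more than the public did. Which setting surfaces "so bad it's so good" titles would need to be found by experiment.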

Still, RT scores give you quite some room to work with using simple maths, so legal matters and terms of service aside, I find this scraping work very useful and thank [Rajesh] for it.