According to this article in Nature, Moore’s Law is officially done. And bears poop in the woods.

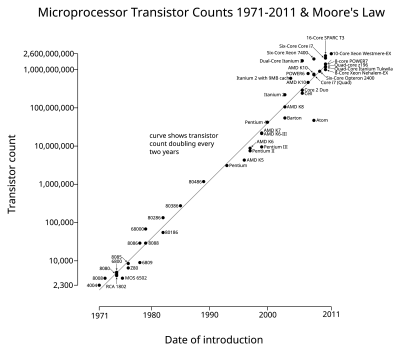

There was a time, a few years back, when the constant exponential growth rate of the number of transistors packed into an IC was taken for granted: every two years, a doubling in density. After all, it was a “law” proposed by Gordon E. Moore, co-founder of Intel. Less a law than a production goal for a silicon manufacturer, it proved to be a very useful marketing gimmick.

Rumors of the death of Moore’s law usually stir up every couple of years, and then Intel figures out a way to pack things even more densely. But lately, even Intel has admitted that the pace of miniaturization has to slow down. And now we have confirmation in Nature: the cost of Intel continuing its rate of miniaturization is less than the benefit.

We’ve already gotten used to CPU speed increases slowing way down in the name of energy efficiency, so this isn’t totally new territory. Do we even care if the Moore’s-law rate slows down by 50%? How small do our ICs need to be?

Graph by [Wgsimon] via Wikipedia.
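To put rough numbers on the “do we even care?” question, the Python sketch below compounds a doubling in transistor count over a few time spans. It assumes that “slows down by 50%” means the doubling period stretches from two years to four, which is only one reading of the phrase; `growth_factor` is an illustrative name, not anything from the Nature article.

```python
# Sketch: how much a longer doubling period changes transistor-count growth.
# Assumption: "slows down by 50%" is read as the doubling period going
# from 2 years to 4 years.


def growth_factor(years: float, doubling_period: float) -> float:
    """Return N(t) / N(0) for exponential growth with the given doubling period (years)."""
    return 2 ** (years / doubling_period)


if __name__ == "__main__":
    for years in (2, 10, 20):
        fast = growth_factor(years, doubling_period=2.0)
        slow = growth_factor(years, doubling_period=4.0)
        print(f"after {years:>2} years: 2-year doubling -> {fast:,.0f}x, "
              f"4-year doubling -> {slow:,.1f}x")
```

Over twenty years, that is the difference between a 1024x and a 32x increase.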

This post, which ends with “How small do our ICs need to be?”, is followed by an ad for tiny 8- and 14-pin MCUs. I don’t know if it’s advertisers targeting the posts or just chance. Either way, the picture of an ant holding a chip made me smile after reading the post.

Digital will continue on into whatever technology ends up replacing silicon, and will probably expand into 3D structures as well. Analog is a different story: device mismatch at small feature sizes, along with other problems like short-channel effects, already prevents analog circuits from shrinking further.

The mismatch is actually that “Moore’s law” isn’t Moore’s law. It was distorted into Intel’s marketing spin around the ’90s.

Moore’s law is about the number of transistors per chip at the optimum cost. Take the picture above, for example, and you’ll notice there’s no control for price: just a bunch of CPUs that fall on a straight line on a logarithmic plot, regardless of the cost per transistor. That’s why “Moore’s law” has appeared to hold over the decades: you can always put more transistors on a chip by making a larger chip, which also makes it more expensive, but we’ll just pretend Moore didn’t care about that because it makes for nice infographics for the futurists.

The real Moore’s law stopped almost 20 years ago, because ever finer processing nodes require exponentially higher investments and exponentially more chips to be sold to offset the cost. But the market for CPUs and chips is not expanding exponentially at the same rate. The market is slowly maturing, so it’s taking ever longer to recoup the investment and gain enough capital for the next step.

Why couldn’t it be Murphy’s laws that end?

It was about to, but something went wrong

+1

+ 1000. Truly laughed out loud.

They tried getting rid of Murphy’s law, but completely screwed it up.

+- damnrit +1

Well, we don’t see the Intel vs. AMD war anymore…

On the other hand Murphy’s law is still alive! Lol!

“Do we even care if the Moore’s-law rate slows down by 50%?”

Yes? Yes we do?

On the desktop, SSDs have masked the lack of improvement in CPUs for a few years now but even if they get higher capacity and cheaper, they’re not going to boost computer speeds much beyond what they already have.

After 40 years of new application areas opening up due to faster silicon and higher transistor counts I think we run the risk of technology starting to stagnate, relatively speaking. And to the extent that our economy has benefitted from those 40 years of growth, the hit from a technological slowdown will probably be uncomfortable at the very least.

So maybe this generation of tech workers isn’t going to be laid off suddenly?

Developers and tool-builders will have to relearn how to use their resources more efficiently. It’s happened before, it will happen again.

No matter the effort, nine women can’t produce one baby in one month. The bottlenecks are real, and a significant subset of problems have strong serial dependencies that make parallel execution impossible in practice. Trying to use speculation to break those dependencies is complicated, expensive (in hardware and power usage) and of no help for many problems.
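The limit this comment describes is usually formalized as Amdahl’s law: if only a fraction p of a program’s runtime can be parallelized, then n cores can speed it up by at most 1 / ((1 - p) + p/n), which never exceeds 1 / (1 - p) no matter how many cores you add. A minimal Python sketch (the function name and the sample fractions are illustrative):

```python
def amdahl_speedup(parallel_fraction: float, n_workers: int) -> float:
    """Amdahl's law: overall speedup when only `parallel_fraction` of the
    runtime can be spread across `n_workers`; the remainder stays serial."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be in [0, 1]")
    if n_workers < 1:
        raise ValueError("n_workers must be >= 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / n_workers)


if __name__ == "__main__":
    for p in (0.5, 0.9, 0.99):
        row = ", ".join(f"{n} cores: {amdahl_speedup(p, n):.2f}x" for n in (2, 8, 64))
        # The ceiling is the speedup with infinitely many cores: 1 / (1 - p).
        print(f"p = {p}: {row} (ceiling {1 / (1 - p):.0f}x)")
```

Even at p = 0.9, going from 8 to 64 cores only takes the speedup from roughly 4.7x to roughly 8.8x, against a hard ceiling of 10x.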

On the other hand, how much developer productivity over the previous decades has been sucked up by churn, by people reinventing the wheel, again, and again, badly, because they don’t learn from the past, enabled by the fact that the market was growing quickly and hardware was growing more capable?

How much computing efficiency has been soaked up by layers of work-arounds for bad designs that squandered the chance to start over by not taking time to learn from the past? How much has been soaked up by layers upon layers of abstractions when people tried to build on flawed foundations?

I’m really interested to see what happens when the chip engineers are no longer able to save software “engineering” from its many sins. It could be a great opportunity, or maybe it’s already too late.

For most commercial or common purposes, there’s still plenty of room to go parallel, especially rendering and GUI stuff. Programmers have risen to the challenge: computer games, which make high demands on CPUs, have all gone parallel. Chips can still get cheaper, and more parallel. Supercomputers have been massively parallel since the start.

There are only very few things that need to be done one after the other, and there might even be ways of doing them differently by re-addressing the problem. A lot of artificial intelligence is just database work. Machine vision is a parallel task. We’ll be fine.

The rise in speed hasn’t come from doing anything more useful; businesses haven’t become 1000x more profitable just because CPUs have become 1000x faster. The race for faster CPUs was just how Intel, AMD, and the rest chose to compete with each other, as well as with their own older products. There was lots of profit at the high end. Software got more bloated to match the available power, without gaining anything like as much in proportion.

Now they’ll just have to compete on something else. It’ll be fine!

Rendering went parallel a long time ago. But graphics rendering, together with a small subset of normal computing tasks, is suited to parallel execution.

The current CPU design isn’t suited to extracting thread-level parallelism (creating, destroying and synchronizing threads is orders of magnitude too expensive to execute many known parallel algorithms effectively), and there are many cases that can’t be parallelized _or_ where parallel execution isn’t better than scalar execution. There’s no way around that.

The reason code gets bloated isn’t to use the computer to its maximum (with a few exceptions like games, demoscene productions etc.) but changed development practices, users’ expectation of having a lot of features, etc. Code reuse alone has contributed a lot to the current bloat, and adaptive code is another significant bloat factor. Then there are people not caring because the code runs fast enough on their machine, or just being stupid (things like storing configuration data in text and rereading all of it each time a configuration setting is requested).
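The configuration example in this comment is easy to make concrete. Below is a minimal Python sketch, assuming a JSON settings file (the file name, the keys, `get_setting_slow` and the `Config` class are all hypothetical): the first function re-reads and re-parses the whole file on every lookup, while `Config` parses it once and answers later lookups from an in-memory dictionary.

```python
import json
import tempfile
from pathlib import Path


def get_setting_slow(path, key, default=None):
    """Anti-pattern: re-read and re-parse the entire file on every lookup."""
    data = json.loads(Path(path).read_text(encoding="utf-8"))
    return data.get(key, default)


class Config:
    """Parse the settings file once, then answer lookups from memory."""

    def __init__(self, path):
        self._data = json.loads(Path(path).read_text(encoding="utf-8"))

    def get(self, key, default=None):
        return self._data.get(key, default)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        cfg_path = Path(tmp) / "settings.json"  # hypothetical settings file
        cfg_path.write_text(json.dumps({"theme": "dark", "retries": 3}), encoding="utf-8")

        cfg = Config(cfg_path)  # one read + parse
        for _ in range(1000):
            cfg.get("retries")  # dictionary lookups only, no file I/O

        # Same answer either way; only the amount of repeated work differs.
        print(get_setting_slow(cfg_path, "theme"), cfg.get("theme"))
```

The trade-off is that `Config` won’t notice edits to the file until it is rebuilt; an application that needs live reloading could check the file’s modification time before re-parsing.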

I like that you hit on code reuse. To expand on that: there are a lot of development practices designed for, e.g., weaker coupling between components, easier automated testing, or greater tolerance of network issues rather than (and in fact often at the cost of) raw, brute efficiency. People who talk about “bloat” tend to insinuate (or sometimes explicitly claim) it’s a matter of incompetence or even planned obsolescence. Some software is…ponderous, to be sure, but the fact is that there’s more to software development than computational efficiency and minimal memory footprint.

It always seems like embedded programmer types complaining about bloat, but that may just be confirmation bias on my part.

umm, I think you have a typo…

“the cost of Intel continuing its rate of miniaturization is less than the benefit”

would seem to imply that miniaturization would till be cost effective…

I till can’t believe he wrote that. Till, at least he tried. I hope he till has another good article in him.

“Do we even care if the Moore’s-law rate slows down by 50%? How small do our ICs need to be?”

That is the right question if you are buying 7400 TTL parts. But the question which is more interesting is how many transistors can you buy for $X? Right now I can get an ARM-based microcontroller with 256KB of RAM for $10 (say). It would be great if I could get it with 1MB of RAM for the same price. It isn’t a matter of making it smaller.

If the cost is less than the benefit, Intel would be continuing to miniaturize. I think you mean the cost is greater than the benefit.

They have moved from 2D chip designs to several layers which is more like 3D.

I believe there must be some speed-up left as they move from a single-digit number of layers into double-digit and then triple-digit layer counts. Yes, they have to be clocked slower to keep the heat dissipation down. Maybe take two steps back and make the chips bigger, so that failures due to tight tolerances become less frequent and this allows a doubling or tripling of the number of layers. More like skyscrapers than farmland.

The problem I see with 3D is not just registration of the layers on each other; there are other issues, like getting the heat out of the core of the silicon stack, and a new subset of EMI issues from layer transfers (vias) and from circuits sandwiched between other circuits all around. I think these problems can be solved, or at least properly addressed, in the future, but it takes time to learn and adapt silicon designs to them.

First, I realise this is a pipe dream, but what if it led to more efficient code that actually pushes the limits of what the processors can do? Most people don’t do tasks on their PCs that require substantially more power than we have at the minute.

People doing CAD and simulations can just add more CPUs and GPUs. Hell, if Intel/AMD/whoever just made a really stable chip at the point where cost/benefit hits the sweet spot, it’d pretty much become ubiquitous, wouldn’t it?

That would make them pretty cheap too. Plus, mainstream support for legacy hardware could be tailed off; I bet that’d cut codebases. Only having to optimize for the 3 or 4 chips offered by the different vendors would probably bring some benefits too.

Meanwhile the R&D budgets could be spent replacing silicon with whatever superMaterial we’ll need for when we actually do hit the limits of current tech.

The masses just don’t have a need for more than what a MacBook from 4 yrs. ago has to offer…

Heck, I know quite a bunch of people who would LOVE to keep using their MacBook 13″ (the white/black plastic ones), but they just start falling apart… While still working… Also those LED screens tend to get rather dark after almost a decade…

That said: The new ones they buy now will most likely last at least that long…

Apart from my MBPr that I use for videography and web/app design, I mostly use a 2009 Lenovo ThinkPad W500 (Core 2 Duo), which does everything most of today’s low- to mid-range laptops do, and more (I will admit to upgrading to a 120GB SSD). Yes, 7 years and running strong.

I believe that quality and durability would benefit the end user much more than miniaturization, although the MacBook (the new thin one) is a great example of design and of what we can achieve in minimum weight and size. Long-lasting products certainly aren’t a good business model if you expect profit growth, especially in the low and mid price range.

The increase in single-threaded performance used to be about 50% a year and leveled off at about 21% a year sometime around 2005. It used to feel stupid to buy a mid-to-high-end machine, only to see it outperformed twice over within 2-3 years. Now that computers age more slowly, it makes more sense to go for a model with higher mechanical quality. After all, it might well serve you 6 years or more!
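The compounding behind these figures is easy to check. The sketch below converts a constant annual improvement rate into a doubling time and a cumulative gain; the 50% and 21% rates are the commenter’s, and the function names are illustrative.

```python
import math


def doubling_time(annual_rate: float) -> float:
    """Years needed to double at a constant compound annual rate (0.5 means 50%)."""
    return math.log(2) / math.log(1 + annual_rate)


def speedup_after(years: float, annual_rate: float) -> float:
    """Cumulative speedup after `years` of compounding at `annual_rate`."""
    return (1 + annual_rate) ** years


if __name__ == "__main__":
    for label, rate in (("pre-2005, ~50%/yr", 0.50), ("post-2005, ~21%/yr", 0.21)):
        print(f"{label}: doubles every {doubling_time(rate):.1f} years, "
              f"3-year gain {speedup_after(3, rate):.2f}x")
```

At 50% a year a new machine is overtaken by a factor of two in under two years; at 21% a year the same doubling takes more than three and a half years.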

The refurb market is booming as well. It feels great to buy a Precision workstation 2.5 years after it was manufactured for one sixth of the original price, while the performance and build quality are still really mighty.

I have an MPC TransPort T2500 (or Samsung X65 if you’re in Europe). Made in 2008, it has an Intel 2.5 GHz Core 2 Duo CPU and 4 GB of DDR2 that it can fully use, even with the 32-bit XP or Vista that were factory options along with Vista x64. It has an absolutely gorgeous 1680×1050 15.4″ LCD, driven by a discrete nVidia GeForce 8600M GS GPU with 256 MB of dedicated RAM. Should I need to see more pixels, it has an HDMI port for 1920×1080. With its magnesium chassis it’s very lightweight. It runs Windows 10 very nicely.

With the exception of not having Blu-Ray, USB 3.0 or ExpressCard (seriously, Samsung? Still using Cardbus in 2008?!) its specs stand up to or even beat many current laptops.

How well does it stand up to current stuff? With the Strongene OpenCL HEVC decoder, LAV filters, and the latest CCCP pack it can play highly detailed 10bit 1080p H.265 video without the least hint of problems.

After you install Strongene and LAV Filters, go to LAV Filters > LAV Video Configuration. Under Hardware Acceleration, select NVIDIA CUVID. Check the MP4 and HEVC boxes. Click Apply and your “doesn’t have hardware H.265 decoding” nVidia GPU suddenly… does. You still need CCCP or something else with an H.265 codec, of course.

I’ve also used that same software setup on an old dual-core AMD Socket 939 machine with 2 GB of DDR1 and an nVidia 8800GT, running XP Pro. It plays 720p HEVC perfectly, but can’t quite handle 1080p. Dunno if it’s XP, or if, even with the software making the GPU pick up some of the video decoding effort, there’s just a speed bottleneck in the hardware…

At any rate, if you just want to keep up with the latest video codec without having to spend big $ on a new GPU with built in official support, a cheap older nVidia GPU and a couple pieces of free software could save you a bunch.

I think it’s time for a new technology, like using light instead of electrons. Or perhaps quantum computing.

It seems that the producers keep clinging to the current technology because they’ve invested too much in it, or because they don’t want to invest more in new technologies. It’s the same story with gas/petrol.

The problem with introducing any radically new technology in this domain is that it is going to have to hit the ground running, that is it is going to have to be a significant improvement over current silicon technology in its first iteration, and that is not going to be easy.

For clock rate, I thought Moore’s law ended over 10 years ago? The only “improvements” since the early 2000s have just been adding more cores.

Modern processors are significantly more powerful even core-for-core than ones ten years ago, while using less electricity per unit of processing power and producing less heat. I’m not qualified to go into the details of why, but this is absolutely the case.

https://www.nasa.gov/ames-partnerships/technology/technology-opportunity-nanostructure-based-vacuum-channel-transistor

How small? Small enough that your contact lenses will have a giant popup saying: “Please renew your Wolfram-Alpha abstract visualizations and math module or be downgraded to our basic Crayola Friends ™ and Abacus module. Brought to you by Apple.”

https://coubsecure-a.akamaihd.net/get/b29/p/coub/simple/cw_timeline_pic/8a5bb5f408c/6c414ef0b8e35b08de66b/med_1421107726_image.jpg

Perhaps while I am driving in heavy traffic? – No thanks!

I don’t have a TV, because I find ads annoying.

And do you sit in silence during heavy traffic? Ads are everywhere my friend. FM radio, Pandora, billboards….

Can we finally expect a return of the great tradition of optimization?

Yes… And No.

Seriously, the order of magnitude involved makes it very unlikely that one person could single-handedly solve something like this. Before you report this comment, let me just extrapolate and contribute.

The prime example is our “humble” Linux kernel. If you delve into the mailing list archives (and strip out all the extraneous crap… including SystemD{ipsh1t_code}…), the kernel can run PERFECTLY… BUT it won’t run a userspace interface.

Even more far-fetched: somehow they got a GA (genetic algorithm) to do ML (machine learning) on the compiler, then kept compiling that until it reached full optimization… The math, constructs and code were completely illegible to humans.

You may have heard of the “silicon lottery”: basically, a part that isn’t up to spec goes into a lower bin. The compiler *ATE through that*… and custom-made new algorithms based on areas previously unused by the microcode (“defective/locked areas”).

At which point the Ph.D. white papers were bought up and swept under the rug, some 10 to 20 years ago.

Optimization is like trying to grow *the most perfect goldfish*, one that is as perfect a rectangle as the tank it is living in. Get air in, get air out, get food in, get waste out, Don’t.Crack.The.Tank! Notice the person feeding it, don’t break fins, gills perfectly cycle… Then get it to do a ‘triple axel somersault’ and land back in the tank flawlessly without knocking out the water (while taking into account H2O, oxygen levels, magnetic and EM sources AND initial origin meta-states: was it raised upside down? Right side up? Check 16 axes).

Remember there are “Software Engineers” and there are “System Administrators”.

Optimization doesn’t make money. Most people won’t upgrade for a small bump in performance. Graphene is just beginning to take off, and it looks like it will be the next leap in technology. They will be able to make things smaller, faster, and more energy efficient. Intel has also been working on building up rather than out. Building in one dimension means trying to make things smaller in the same footprint, which leads to an increase in part count and speed. Building up means they can instantly double the speed for every layer. If graphene makes its way into the equation, at one atom thick, Intel could build several parallel layers in the same footprint as one silicon layer, using approximately the same energy. It could lead to a 2-3x increase in speed for the same power consumption. Imagine a Raspberry Pi operating at 3 GHz, or a single-layer version that requires only a quarter of the energy.

Moore is Over (If You Want It)

“Man, why the hell did you invite him or open the door… Weirdo Beardo keeps going on about 555 timer chips and drinks ALL our beer. Worse yet, he will talk for 10 min with the spliff and then talk crap if you hold it more than 20 seconds.”

It’s the beginning of the age of quantum computing.

The end of peak silicon is the end of bloated programming. Back to coding instructions in hex. Coders are suddenly in short supply again and wages skyrocket. No need to push updates constantly, because every release is a complete rewrite, so it only happens once every ten or twenty years.

You jest, but now I’m wondering if there really are people out there convinced that hand-writing hex is more efficient than assembly commands that are literally just mnemonics for the same thing. Like the programmer version of audiophile goobers who buy magic rocks to Crystallize their Soundstage.
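To make the point about mnemonics concrete: for simple instructions, an assembler is little more than a lookup table from mnemonic to opcode bytes. The toy Python sketch below handles only a few operand-free x86 instructions (real x86 encoding, with operands, prefixes and addressing modes, is far more involved); `assemble`, `disassemble` and the `OPCODES` table are illustrative, not any real tool’s API.

```python
# A handful of operand-free x86 instructions and their one-byte opcodes.
OPCODES = {
    "nop": 0x90,
    "ret": 0xC3,   # near return
    "int3": 0xCC,  # breakpoint trap
    "hlt": 0xF4,
    "clc": 0xF8,   # clear carry flag
    "stc": 0xF9,   # set carry flag
}


def assemble(source: str) -> bytes:
    """Translate one mnemonic per line into machine-code bytes."""
    out = bytearray()
    for line in source.splitlines():
        mnemonic = line.split(";", 1)[0].strip().lower()  # drop ';' comments
        if not mnemonic:
            continue
        if mnemonic not in OPCODES:
            raise ValueError(f"unsupported mnemonic: {mnemonic!r}")
        out.append(OPCODES[mnemonic])
    return bytes(out)


def disassemble(code: bytes) -> list:
    """Map each byte back to its mnemonic (only for bytes in the table)."""
    reverse = {value: name for name, value in OPCODES.items()}
    return [reverse[b] for b in code]


if __name__ == "__main__":
    print(assemble("nop\nstc\nret").hex(" "))
```

`assemble("nop\nstc\nret")` yields the bytes `90 f9 c3`, exactly what someone writing hex by hand would have typed, just with the lookup done by the tool instead of the programmer.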

If Intel wants to slow down on Moore’s law, I’m sure AMD and others are more than happy to pick up the slack.

By making CPUs more efficient (less heat), we could end up running them faster, and we might get larger CPU dies. There are still a few tricks they can pull before stagnation. Let’s face it: Moore’s law isn’t stopping anytime soon, just slowing down.

>How small do our ICs need to be?

This may not be a need, but my company is re-releasing a ton of our four-year-old chips, which are still selling well, on new, significantly smaller processes, because we can get almost 2.5 times as many chips per wafer. Our old chips weren’t cost-competitive. Our new ones undercut our low-cost competitors.
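A rough way to see where a figure like “almost 2.5 times as many chips per wafer” comes from is the common gross-dies-per-wafer approximation, DPW ≈ πr²/A - πd/√(2A), for a wafer of diameter d = 2r and a die of area A. The 300 mm wafer and the two die areas below are hypothetical, and the estimate ignores yield, scribe lines and edge exclusion.

```python
import math


def dies_per_wafer(wafer_diameter_mm: float, die_area_mm2: float) -> int:
    """Gross dies per wafer via the approximation pi*r^2/A - pi*d/sqrt(2*A).

    Ignores yield loss, scribe lines and edge exclusion.
    """
    r = wafer_diameter_mm / 2
    gross = (math.pi * r ** 2 / die_area_mm2
             - math.pi * wafer_diameter_mm / math.sqrt(2 * die_area_mm2))
    return max(0, math.floor(gross))


old = dies_per_wafer(300, 100.0)  # hypothetical 100 mm^2 die on the old process
new = dies_per_wafer(300, 40.0)   # hypothetical 40 mm^2 die after the shrink
print(old, new, f"{new / old:.2f}x")
```

Shrinking the die area to 40% of the original gives slightly more than the 2.5x area ratio in gross dies, because smaller dies also waste less area at the wafer edge.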

Sort of the same as when Quantum did their Bigfoot hard drives by applying newer coating and head technology to the old 5.25″ platter size, except I assume your company’s version doesn’t suck and break down a lot. ;)

It is interesting to note that 99.99% of the IC market isn’t even fabricated at 22 nm or below, and might not have access to it at all. So it’s not that the law is broken (it is over for the CPU market, but who cares): there are millions of ICs that can benefit from the newer fabs, and indeed we CAN expect the law to amaze us once again. I wish some microcontrollers would catch up to this level of size and speed (cost would be cut as well, thanks to the smaller die size). A 1 GHz Arduino for $0.50 a piece would be a fair price.

The next big step will happen when we move away from silicon to a new material capable of better heat dissipation and higher conductivity, probably carbon.

So the power users will cry that future CPUs won’t be able to run their crap programs or 3D games in 4K with their stupid gadgets in front of their eyes. The crappy programmers relying on Moore’s law to fix their crappy programs. My eyes are wet now. Microdollar and Mac dollar, who can’t sell their crap OSes since they are resource-hungry and their programmers can’t write efficient programs that run concurrently on 2 to 8 CPUs; people who don’t understand Amdahl’s law: all your f……….g big dollars won’t help you. What worked perfectly in BeOS 20 years ago, they cannot grasp. My tears have become a flood by now. Look up BeOS, concurrency, assembler, C, C++, multithreading, multicore and multiprocessing, and don’t think you can do multiprocessing in very high-level programming languages.