Over at [Truthlabs], a 30-year-old pinball machine was diagnosed with a major flaw in its game design: it could only entertain one person at a time. [Dan] and his colleagues set out to change this, transforming the ol’ pinball legend “Firepower” into a spectacular, immersive gaming experience worthy of the 21st century.

A major limitation they wanted to overcome was screen size. A ceiling-mounted projector turns the entire wall behind the machine into a massive 15-foot playfield for anyone in the room to enjoy.

With so much space to fill, the team assembled a visual concept tailored to blend seamlessly with the original storyline of the arcade classic, studying the machine’s artwork and digging deep into the sci-fi archives. They then translated their ideas into 3D graphics using Cinema 4D and WebGL along with the usual designer’s toolbox. Lasers and explosions were added, ready to be triggered by game events on the machine.

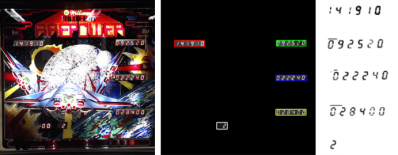

To hook the augmentation into the pinball machine’s own game progress, they devised an elegant solution that combines OpenCV and OCR to read all five of the machine’s 7-segment displays from a single webcam. An Arduino inside the machine taps into the numerous mechanical switches and indicator lamps, keeping a Node.js server updated about pressed buttons, hits, the “Lane Change” and plunged balls.
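To give a feel for what reading a 7-segment display involves, here is a minimal, hypothetical Python sketch of the final step: once a single digit has been cropped and thresholded, check which of the seven segments (conventionally labelled a to g) are lit and look the pattern up in a table. The project itself hands this job to the existing SSOCR tool; the `decode_digit` helper, the idealized sample-point layout and the 0/1 image representation are our own assumptions for illustration.

```python
# Segment order in each key: a (top), b (upper right), c (lower right),
# d (bottom), e (lower left), f (upper left), g (middle). 1 = segment lit.
SEGMENT_PATTERNS = {
    (1, 1, 1, 1, 1, 1, 0): 0,
    (0, 1, 1, 0, 0, 0, 0): 1,
    (1, 1, 0, 1, 1, 0, 1): 2,
    (1, 1, 1, 1, 0, 0, 1): 3,
    (0, 1, 1, 0, 0, 1, 1): 4,
    (1, 0, 1, 1, 0, 1, 1): 5,
    (1, 0, 1, 1, 1, 1, 1): 6,
    (1, 1, 1, 0, 0, 0, 0): 7,
    (1, 1, 1, 1, 1, 1, 1): 8,
    (1, 1, 1, 1, 0, 1, 1): 9,
}

# Relative (row, col) sample point in the middle of each segment a..g, as
# fractions of the digit's height and width (an assumed, idealized layout).
SAMPLE_POINTS = [
    (0.0, 0.5),   # a
    (0.25, 1.0),  # b
    (0.75, 1.0),  # c
    (1.0, 0.5),   # d
    (0.75, 0.0),  # e
    (0.25, 0.0),  # f
    (0.5, 0.5),   # g
]


def decode_digit(img):
    """Decode one thresholded digit image.

    img is a 2D list of 0/1 pixels (1 = lit) cropped tightly around a single
    digit. Returns the digit 0-9, or None if the lit segments don't form a
    valid digit (e.g. a glare spot or a half-refreshed display).
    """
    h, w = len(img), len(img[0])
    state = tuple(
        img[round(r * (h - 1))][round(c * (w - 1))] for r, c in SAMPLE_POINTS
    )
    return SEGMENT_PATTERNS.get(state)
```

Returning `None` for unknown patterns, rather than guessing the nearest digit, leaves it to a later stage to throw away bad reads.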

The result is the impressive demonstration of both passion and skill you can see in the video below. We really like the custom shader effects. How could we ever play pinball without them?

Pinball’s pretty awesome, but I’ve never exactly been great at it (digital tables with infinite quarters helped somewhat). This, though, I could get hooked on.

As an exercise in making something more fun to watch, I don’t consider this a success.

Watching the ball ping around the table and rooting for (or against) the player is probably more entertaining than watching things fly about on a big screen.

We wanted to explore ways to enhance the experience for the audience behind the current player. As the effects are triggered by the action on the table, the narrative plays out in the projection.

Additionally, we were constantly rolling the high score, so the things flying about in the current player’s peripheral vision make it more challenging.

What... huh? Whaaaa? Where am I? OMG, I think I had a seizure just watching the video of it.

Optically reading the score?? Can’t decide if it’s neat or lame.

We decided to do it this way for several reasons:

1. The ‘correct’ way would be to use a logic analyzer or some interface to interpret the digital data as it’s being sent to the displays. However, the way this data is encoded is slightly complex (http://www.pinball4you.ch/okaegi/pro_d7f.html), the displays run at over 100 V (they’re plasma displays), and original replacements are hard to find in case something gets shorted.

2. We have experience with OpenCV, so it wasn’t difficult to adapt that knowledge to this scenario.

3. The technique can ‘easily’ be applied non-invasively to other machines. You can see the code here: https://github.com/truthlabs/SuperNova-OCR

I’m very impressed you got the OCR working so well, even with the projector going. I failed even with totally controlled lighting (https://github.com/mattvenn/minim-reader), and I’d found the same 7-segment software you used. How critical is the positioning of the game/camera: can it cope with the machine moving around a bit?

I love to see that you had a similar idea (just for a different piece of hardware).

I think you identified the crucial issue with OpenCV – the input has to be clean and controlled.

Our process is illustrated here: https://cdn-images-1.medium.com/max/2000/0*l6bIW7vQ_DgQNV2Z.png. The two methods we had identified to cope with this were:

1. Maximize contrast. You will need to keep tweaking the values until you have output that best resembles black digits on a white background.

2. Isolate input. I do this by masking out all extraneous pixels: https://raw.githubusercontent.com/truthlabs/SuperNova-OCR/master/server/img/firepower-1player-mask.png.
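The two steps above can be illustrated with a small, dependency-free Python sketch that works on a grayscale frame stored as nested lists. In practice you would do this on real camera frames with OpenCV (for example `cv2.threshold` plus a mask image); the `preprocess` name, the 0 to 255 pixel range and the default threshold of 128 are our assumptions, not values taken from the SuperNova-OCR repository.

```python
def preprocess(gray, mask, threshold=128):
    """Turn a grayscale frame into black digits on a white background.

    gray: 2D list of 0-255 grayscale pixels from the camera.
    mask: 2D list of the same shape; 1 = pixel belongs to a display, 0 = ignore.
    """
    # Only pixels inside the mask take part in the contrast stretch, so stray
    # light elsewhere in the frame (e.g. from the projector) can't skew it.
    inside = [p for row, mrow in zip(gray, mask) for p, m in zip(row, mrow) if m]
    if not inside:
        return [[255] * len(row) for row in gray]
    lo, hi = min(inside), max(inside)
    span = max(hi - lo, 1)  # avoid dividing by zero on a flat image

    out = []
    for row, mrow in zip(gray, mask):
        out_row = []
        for p, m in zip(row, mrow):
            if not m:
                out_row.append(255)  # isolate input: masked pixels become background
                continue
            stretched = (p - lo) * 255 // span  # maximize contrast: rescale to 0..255
            # Lit segments are bright, so they become black (0) digit pixels.
            out_row.append(0 if stretched >= threshold else 255)
        out.append(out_row)
    return out
```

Computing the stretch only over the masked pixels is a deliberate choice here: a bright projector spill outside the displays would otherwise compress the range of the digits themselves.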

Once we had the camera positioned, there was a lot of tweaking to get the contrast values correct, and then adjusting the masks to provide only the pixels needed by SSOCR. SSOCR also has a ton of useful parameters, including crop: https://www.unix-ag.uni-kl.de/~auerswal/ssocr/ssocr-manpage.html#sect25

We’re also refreshing CONSTANTLY, discarding invalid values, and only accepting values that consistently appear.
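One way to implement the "only accept values that consistently appear" rule is a small stability filter: a score is trusted only once it has been read identically several times in a row. This is our own hypothetical sketch; the `StableReading` class, the window of 3 readings and the use of `None` to represent an invalid OCR read are assumptions, not taken from the project’s code.

```python
from collections import deque


class StableReading:
    """Accept an OCR'd score only once it has been read identically
    `required` times in a row."""

    def __init__(self, required=3):
        self.required = required
        self.recent = deque(maxlen=required)  # last few valid readings
        self.accepted = None                  # last score we trust

    def update(self, reading):
        """Feed one raw OCR result: an int, or None if the read was invalid.

        Returns the currently accepted score (possibly unchanged).
        """
        if reading is None:
            return self.accepted  # discard invalid values outright
        self.recent.append(reading)
        if len(self.recent) == self.required and len(set(self.recent)) == 1:
            self.accepted = reading  # it appeared consistently: accept it
        return self.accepted
```

Because the camera refreshes constantly, a short window costs little latency while filtering out one-off misreads such as a digit caught mid-update.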

Reading the displays should be done before the high-voltage drivers, and it is very simple. Personally, I’d probably have gone this route, or put the RAM chip(s) on a daughter board (with a custom ROM) and read the score and switch positions from memory.

5-axis FDM printers are already in use in the industry.

https://www.youtube.com/watch?feature=player_embedded&v=L37IhrkVX04

https://www.youtube.com/watch?v=w8Fl8L4yk8M&feature=player_embedded

I’ve a suspicion you’ve commented on the wrong article; I think you may have intended to post that here: http://hackaday.com/2016/04/07/a-look-into-the-future-of-slicing/

Cool videos, though; there’s someone at my uni who’s been programming a couple of robotic arms to do 3D printing, but so far they’ve just been using standard planar slicing methods. It’d be pretty cool if they could get it going with some of these techniques and make better use of the hardware.

Not mapping out the pinball machine, so the projection spills over onto it? He really needs to tweak this a bit more, and also needs to switch to a short-throw projector, as everyone standing near the machine seems to be casting shadows.

Too much of a hack for you?

Not immersive at all. As a pinball guy, I find this pretty useless. When you play pinball you are staring at the playfield the entire time. Animations on the DMD, or the LCD on a couple of newer games, are shown during periods of downtime (i.e. ball captures). The only people who will be looking at that wall are the spectators, and it will get boring for them because there is literally nothing on that wall that shows what the player is doing during his game. This looks like a pinball machine with a projector playing space-fighter 3D scenes. He says the animations are triggered by the actual playfield, and that the score is read optically, but I don’t see any evidence of that in his video.

While my IFPA rank isn’t great: http://www.ifpapinball.com/player.php?p=26404, I also consider myself a Pinball guy. Take a look at my comment here: http://hackaday.com/2016/04/08/the-most-immersive-pinball-machine-project-supernova/#comment-2982346 regarding the intent of the project.

For more evidence of how the OpenCV / OCR process works, take a look at our code here: https://github.com/truthlabs/SuperNova-OCR, which is referenced by a more detailed write-up here: https://medium.com/truth-labs/making-pinball-fun-to-watch-and-play-47943467d7f5

Yeah, I got the intent, but you need to rewatch your own video. Your spectators are watching the playfield, not the giant screen. From the video it looks like random clips are playing, and they start when the plunger is shot. Sorry to criticize; it looks cool and pretty, but it does not enhance the experience, and I thought that was the point. Again, this is from your video: there are no audio or visual cues for mode changes or shot completion. Think about what would happen if that were projected into another room without the pinball machine in it. Would the viewer even be able to tell it is being controlled by a pinball machine? Would it be obvious that the projection is not just a loop of video? If it would be obvious, you need to make a better video showing this, because from what I see there is too big a disconnect between the wall and the machine.

For a project like this to work you need to start from the beginning, get rid of system 6, and rewrite the game code.

The stupid shit people comment never ceases to amaze me.

Really, buddy? Maybe you should expand on your comment. What is stupid?

According to the video posted.

Where is there a correlation between the game and video?

How does a video loop enhance the viewers experience?

I asked valid questions about things that were not shown in the video. Stupid is posting one-liner ad hominem attacks. I’m sorry I hurt your feelings about your project, as I assume you must be one of the makers, but maybe you should have asked yourself these questions.

I’m not on the project team. I labeled your comment as “stupid shit” because that’s what it was. This is a cool project, a fun project, and you’re approaching it as though it’s a commercial product or a doctoral thesis that lacks cohesion. Get over yourself.

I get it: stupid is now applying critical thinking skills to a project. I didn’t approach it as a thesis or a commercial product.

I looked at the maker’s intent and think it failed; I see no meaningful integration with the pinball machine. If the maker said his intent was a video loop behind a pinball machine to make it more cinematic, then yay, great project. So yeah, cool project; it looks really nice and pretty, as I stated before. I would just like to see more of the integration the maker is talking about. He posted this whole thing about OCR and the score. Cool, yeah, but let’s see some fruits from that labor; as of right now I don’t see it. Think about how much cooler this would be if that translated to something meaningful on the screen.

Oh, I see. You were offering constructive criticism. I was thrown by the phrases “Not immersive at all … useless … boring.” So that the entirety of the [HaD] community can reap the benefits of your invaluable feedback during the design and build phases of our projects, rather than after they have already been featured on [HaD], please leave your contact information on this thread. An IP address would be great.

Okay, I’m done feeding the Gr3mlin — err — troll. Feel free to reply with whatever snarky lunacy you have saved up. The last word is yours for the taking.

If we are only allowed to post ‘cool hack’ then maybe we should get rid of the comments section entirely.

I’m grateful for posts like this, so I know I’m not the only one completely bewildered by projects that nobody seems to notice have missed their objective.

Am I the only one who’s reminded of Willy Wonka’s infamous “Scary Tunnel” by the animation in the gif?

“The rowers keep on ROWING!”

There’s no earthly way of knowing

Which direction they are going!

When I play my pinball machines, I spend my time looking at the playfield, not at the wall behind it.

Neat hack, but stupid idea.

The title may be misleading but the very first sentence of the article lays out the true intentions behind the project.

Looks like fun to play, and fun to make. Great hack.

I see no meaningful correlation between the pinball game and the projection. Maybe I am missing something?

I like it. I used to play a pinball game that had a huge LED matrix that would display “graphics” while you played. You hardly ever had time to look up at it, but when you did there was often some red LED face laughing at you, or crazy patterns scrolling by. I can imagine it getting very immersive with the addition of sensors on every bumper, spinner, etc. Even cooler would be to mount cameras in the table and show their feeds on the projector during play. A “ball’s eye view” of the game would be very entertaining. I like the idea of the video loops, but I was really hoping that they were procedurally generated depending on what was happening in the game. Neat project. I hope it grows, because the possibilities are endless.

It would be much better if the projection showed a real-time point of view of the ball instead of what is shown here. Achieving that would require high-speed hardware (FPGAs, fast motion sensors, a high-speed camera, a low-latency projector). All doable, but orders of magnitude more expensive and complex. Even state-of-the-art hardware like the Oculus Rift is plagued by lag, which can make some people seasick (myself included). At the moment, “real” real-time is one of those areas reserved for the defence industry and military applications.