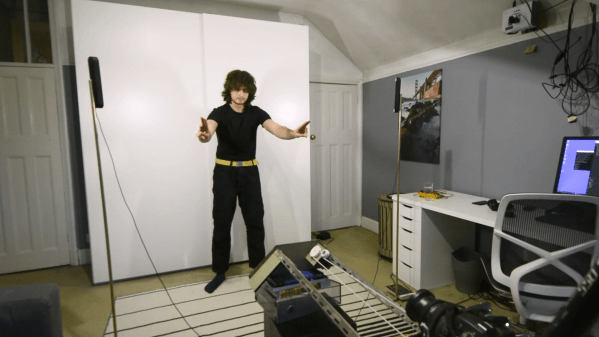

Inspired by the over-the-top stage lighting and pyrotechnics used during e-sport events, [Hans Peter] set out to develop a scaled-down version (minus the flames) for his personal Counter-Strike: Global Offensive sessions. It might seem like pulling something like this off would involve hacking the game engine, but as it turns out, Valve was kind enough to implement a game state API that made it relatively easy.

According to the documentation, the CS:GO client can be configured to send out state information to an HTTP server at regular intervals. The documentation even provides example code for implementing a simple state server in Node.js, which [Hans] adapted for this project by adding some conditional statements that analyze the status of the current game.
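
To give a rough idea of what those conditional statements might look like, here is a minimal sketch and not [Hans]'s actual code. It assumes the client POSTs a JSON body, and the field names (`round.phase`, `round.bomb`, `round.win_team`, `player.team`) are assumptions from memory of Valve's game state documentation that should be checked against a real payload. The function names and the event labels (`BOMB`, `WIN` and so on) are invented for illustration.

```javascript
// Hypothetical helper: map one game state payload to a lighting event name.
// The field names used here are assumptions; verify them against a real
// payload from the game client before relying on them.
function pickLightingEvent(state) {
  const round = (state && state.round) || {};
  const player = (state && state.player) || {};

  // Bomb states take priority over the plain win/loss check.
  if (round.bomb === "planted") return "BOMB";
  if (round.bomb === "exploded") return "EXPLODED";
  if (round.bomb === "defused") return "DEFUSED";

  // Round finished without a bomb event: compare winner to the player's team.
  if (round.phase === "over" && round.win_team) {
    return round.win_team === player.team ? "WIN" : "LOSS";
  }
  return "IDLE";
}

// Wrap a `send` callback (for example, something that writes to a serial
// port) so that an event is only forwarded when it differs from the last one.
function makePayloadHandler(send) {
  let lastEvent = null;
  return function handle(body) {
    let state;
    try {
      state = JSON.parse(body);
    } catch (err) {
      return null; // ignore malformed bodies
    }
    const event = pickLightingEvent(state);
    if (event !== lastEvent) {
      lastEvent = event;
      send(event);
    }
    return event;
  };
}
```

Since the client reports at regular intervals, the same state tends to arrive many times in a row. Forwarding only the changes keeps whatever sits at the other end of `send` from restarting its animation on every update.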

These checks fire off serial commands to the attached Arduino, which in turn controls the WS2812B LEDs. The Arduino code takes the information provided by the HTTP server and breaks it down into various lighting routines for different conditions such as wins and losses. But things really kick into gear when a bomb is active.

[Hans] wanted to synchronize the flashing LEDs with the beeping sound the bomb makes in the game, but the API doesn’t provide granular enough data. So he recorded the audio of the bomb arming sequence, used Audacity to precisely time the beeps, and implemented the sequence in his Arduino code. In the video after the break you can see that the synchronization isn’t perfect, but it’s certainly close enough to get the point across in the heat of battle.
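
The timing-table idea can be sketched in a few lines. This is only an illustration of the approach, written in JavaScript to keep it easy to try out. The beep onsets below are made-up placeholders and not measurements from the game, and `isFlashOn` and the 100 ms flash length are hypothetical. An Arduino port would presumably store the same table and compare it against `millis()`.

```javascript
// Placeholder beep onsets, in milliseconds since the bomb was planted.
// These numbers are invented for illustration; real values would come
// from timing the beeps in a recording, as described above.
const BEEP_ONSETS_MS = [0, 1000, 1950, 2850, 3700];

// Hypothetical length of each LED flash.
const FLASH_MS = 100;

// Return true if the LEDs should be lit `elapsedMs` after the plant.
function isFlashOn(elapsedMs, onsets = BEEP_ONSETS_MS, flashMs = FLASH_MS) {
  // Binary search for the most recent onset at or before elapsedMs.
  let lo = 0;
  let hi = onsets.length - 1;
  let idx = -1;
  while (lo <= hi) {
    const mid = (lo + hi) >> 1;
    if (onsets[mid] <= elapsedMs) {
      idx = mid;
      lo = mid + 1;
    } else {
      hi = mid - 1;
    }
  }
  // Lit only while we are still inside that beep's flash window.
  return idx >= 0 && elapsedMs - onsets[idx] < flashMs;
}
```

Because the schedule is played back from a local clock started when the "bomb planted" state arrives, any delay in that first update shifts every later flash by the same amount, which would be one plausible reason for sync that is close but not perfect.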

With the special place that Counter-Strike occupies in the hearts of hackers and gamers alike, it’s little surprise people are still finding unique ways to experience the game.
