An SD card is surely not an enterprise grade storage solution, but single board computers also aren’t just toys anymore. You find them in applications far beyond the educational purpose they have emerged from, and the line between non-critical and critical applications keeps getting blurred.

Laundry notification hacks and arcade machines fail without causing harm. But how about electronic access control, or an automatic pet feeder? Would you rely on the data integrity of a plain micro SD card stuffed into a single board computer to keep your pet fed when you’re on vacation and you back in afterward? After all, SD card corruption is a well-discussed topic in the Raspberry Pi community. What can we do to keep our favorite single board computers from failing at random, and is there a better solution to the problem of storage than a stack of SD cards?

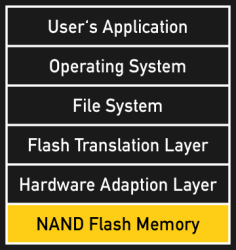

Understanding Flash Memory

The special properties of Flash memory reach deep down to the silicon, where individual memory cells (floating gates) are grouped in pages (areas that are programmed simultaneously), and pages are grouped in blocks (areas that are erased simultaneously). Because entire blocks have to be erased before new data can be written to them, adding data to an existing block is a complex task: At a given block size (i.e. 16 kB), storing a smaller amount of data (i.e. 1 kB), requires reading the existing block, modifying it in cache, erasing the physical block, and writing back the cached version.

This behavior makes Flash memory (including SSDs, SD-cards, eMMCs, and USB thumb drives) slightly more susceptible to data corruption than other read-write media: There is always a short moment of free fall between erasing a block and restoring its content.

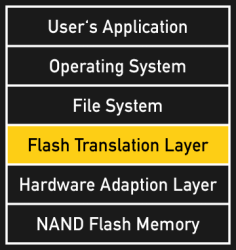

The Flash Translation Layer

The Flash Translation Layer (FTL) is a legacy interface that maps physical memory blocks to a logical block address space for the file system. Both SSDs and removable Flash media typically contain a dedicated Flash memory controller to take care of this task. Because individual Flash memory blocks wear out with every write cycle, this mapping usually happens dynamically. Referred to as wear-leveling, this technique causes physical memory blocks wander around in the logical address space over time, thus spreading the wear across all available physical blocks. The current map of logical block addresses (LBAs) is stored in a protected region of the Flash memory and updated as necessary. Flash memory controllers in SSDs typically use more effective wear-leveling strategies than SD-cards and therefore live significantly longer. During their regular lifetime, however, they may perform just as reliably.

The Flash Translation Layer (FTL) is a legacy interface that maps physical memory blocks to a logical block address space for the file system. Both SSDs and removable Flash media typically contain a dedicated Flash memory controller to take care of this task. Because individual Flash memory blocks wear out with every write cycle, this mapping usually happens dynamically. Referred to as wear-leveling, this technique causes physical memory blocks wander around in the logical address space over time, thus spreading the wear across all available physical blocks. The current map of logical block addresses (LBAs) is stored in a protected region of the Flash memory and updated as necessary. Flash memory controllers in SSDs typically use more effective wear-leveling strategies than SD-cards and therefore live significantly longer. During their regular lifetime, however, they may perform just as reliably.

Retroactive Data Corruption

A write operation on Flash typically includes caching, erasing and reprogramming previously written data. Therefore, in the case of a write abort, data corruption on Flash memory can retroactively corrupt existing data entirely unrelated to the data being written.

The amount of corrupted data depends on the device-dependent block size, which can vary from 16 kB to up to 3 MB. This is bad, but the risk of encountering retroactive data corruption is also relatively low. After all, it requires a highly unusual event to slice right in between the erasing and the reprogramming cycle of a block. It is mostly ignored outside of data centers, but it is certainly a threat to critical applications that rely on data integrity.

Unexpected Power Loss

The most likely cause of write abort related data corruption are unexpected power losses, and especially Flash memory does not take them very well. Neither consumer grade SSDs nor SD cards are built to maintain data integrity in an environment that is plagued with an unsteady power supply. The more often power losses occur, the higher is the chance of data corruption. Industrial SSDs, preferably found in UPS powered server racks, additionally contain military grade fairy dust impressive banks of Tantalum capacitors (or even batteries), which buy them enough time to flush their large caches to physical memory in case of a power loss.

While laptops, tablets and smartphones don’t particularly have to fear running out of juice before they can safely shut down, SBCs are often left quite vulnerable to power losses. Looking at the wiggly micro USB jack and the absence of a shutdown button on my Pi, the power loss is effectively built in. In conjunction with Flash memory, this is indeed an obstacle in achieving data integrity.

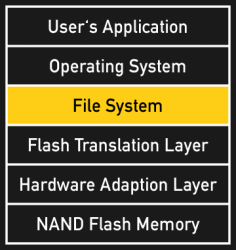

The Role Of The File System

File systems provide a file-based structure on top of the logical block address space and also implement mechanisms to detect and repair corruptions of their own. If something goes wrong, a repair program will scan the entire file system and do it’s best to restore its integrity. Additionally, most modern file systems offer journaling, a technique where write operations are logged before they are executed. In the case of a write abort, the journal can be used to either restore the before state or to complete the write operation. This speeds up filesystem repairs and increases the chance that an error can actually be fixed.

File systems provide a file-based structure on top of the logical block address space and also implement mechanisms to detect and repair corruptions of their own. If something goes wrong, a repair program will scan the entire file system and do it’s best to restore its integrity. Additionally, most modern file systems offer journaling, a technique where write operations are logged before they are executed. In the case of a write abort, the journal can be used to either restore the before state or to complete the write operation. This speeds up filesystem repairs and increases the chance that an error can actually be fixed.

Unfortunately, journaling is not free. If every write operation was written to the journal first, the effective write speed would be cut into half while the Flash memory wear would be doubled. Therefore, commonly used file systems like HFS+ and ext4 only keep stripped down journals, mostly covering metadata. It is this practical tradeoff, that makes file systems a particularly bad candidate to stand in for data integrity after a failure in the underlying storage medium. They can restore integrity, but they can also fail. And they can’t restore lost data.

In the age of Flash memory, the role of the file system is changing, and it’s about to absorb the functions of the FTL. The file system JFFS2 is prepared to directly manage raw NAND Flash memory, resulting in more effective wear-leveling techniques and the avoidance of unnecessary write cycles. JFFS2 is commonly used on many OpenWRT devices, but despite its advantages, SBCs that run on Flash media with FTL (SD cards, USB thumb drives, eMMCs) will not benefit from such a file system. It is worth mentioning, that the Beaglebone Black Beagleboard actually features a 512 MB portion of raw accessible NAND flash, which invites for experiments with JFFS2.

The Pi Of Steel

To answer the initial question of how to effectively prevent data corruption on single board computers: the physical layer matters. Especially for the use in single board computers, high-quality SD cards happen to perform better and live longer. Employing a larger SD card than the absolute minimum adds an additional margin to make up for suboptimal wear-leveling.

The next step on the way to the Pi Of Steel should deal with unexpected power losses. Adopting a battery-based UPS will reduce them to homeopathic doses, and over at hackaday.io, Patrick Van Oosterwijck has worked out a great UPS solution to keep a Raspberry Pi alive at all times.

For some applications, this may still not be enough and for others, the added cost and weight of a battery pack may not be practical. In those cases, there is actually only one thing you can do: Set the Pi’s root partition to read-only. This practically gives you the power of an SBC and the reliability and longevity of a microcontroller.

Eventually, a single Flash cell in read-write mode can only be so reliable, and just by looking at the facts, I would think twice before employing SD-card based single board computers in certain applications. But what do our readers you think? What’s your strategy to keep your SD cards sane? Let us know in the comments!

High scores and configuration changes aside, an arcade machine certainly should be able to run from a read-only SD card.

What about my high score of 50,000? :(

will stay in memory until the machine is powercycled, like a large number of arcade machines of olde.

I want the power source LOCKED DOWN. I WILL NOT LOSE MY SCORE

Try using core memory. :D

If data integrity was REALLY important you could use a small I2C eprom for high scores. No risk to the main system then.

or use a thumbdrive…data corruption would only affect the high scores.

NAND flash also suffers from read disturb errors – very large numbers of reads of the same block can cause data deterioration. A few years ago I did some testing on a system that stored video frames in NAND chips with no error corection – the effect varied a lot between different makes of chip, but some strted droppijng bits after a few hundred thousand reads, others were fine with 100x that.

The onboard ECC in an SD card should take care of it, but at some point there is scope for failure. Caching data that will be read very frequently is a good idea ( the filesystem wll sometimes do that for you).

correct me if I’m wrong but a few years ago= 4-5 NAND flash generations ago … flash has come a long way transfer speed and data retention is dramatically increased in later generations. i remember the very first SSD those were expensive slow and not very durable compared to even the cheapest SSD out on the market now….

afaiu it the issue is that as the features shrink the risk that reading one row might affect a neighboring row enough to change bits increases

“data retention is dramatically increased in later generations” : not so. ECC and wear levelling makes it *look* better, but the underlying physical devices are much *reduced* in reliability. Cell write endurance has gone from thousands/tens of thousands of writes per cell down to the hundreds. This is mostly due to cell sizes (transistor sizes) shrinking, and because manufacturers are now using triple-layer-cells (TLC) as opposed to the single layer when flash first appeared. Note the use of “layer” here is not physical layers, it refers to how many bits can be stored per cell : it was originally 1 bit per cell, moved to two, currently three. To my knowledge no-one has yet commercialised four as it is simply too un-reliable.

Actually, it has. Sandisk had SD cards with X4 technology (4 bits per cell) a while ago.

Read disturb issues are the dirty little secret of SD cards. I had large numbers of SD cards dying in a media player product I developed and eventually tracked it down to this. The SD card manufacture advised that there needed to be periodic pauses in the card reads so internal firmware could rewrite cells to prevent failures. However they refused to say how often or how long we needed to pause for.

ZFS copy on write (COW) semantics would solve the problem. The original data would not be overwritten until much later when the space needed to be freed. Full blown ZFS would have lots of other benefits for data integrity.

Isn’t the big disadvantage of ZFS how much memory and processing power it takes?

I have a freeNass at home running ZFS and they recommend 1 gig memory for every TB.

Are there any slimier embedded solutions?

btrfs?

The NAS I’m setting up right now is using btrs and the overhead (costs) of ZFS (EEC memory, freeNAS) is why I’m not using it, plus the ability to easily expand the storage pool. But this isn’t a discussion about FS benefits.

PXE boot your raspberry. Then the uSD cards are all uniform and disposable. Store data on NFS/samba shares on spinning media which is backed up. Raspberry’s are not mission critical.

That number is just on this side of ridiculously over cautious.

I have a VM NAS/SAN running ZFS on Arch, and so I can see it’s memory usage. I have 6 4TB drives in zraid2 and I rarely see that VM use more than 1GB even during regular use. The VM averages just shy of 200mb memory use at idle. It’s max memory use since last restart is 820mb. That VM also runs deluge in a separate network namespace, is a member server of ad domain, nfs, and sometimes gerrit. I am not sure how much SAN stuff I have done recently but likely some activity.

I think for the tiny amount of storage and simplistic configurations likely commonly in play for a single board ZFS makes sense and will not strain the system. The worst case scenario is degraded IO performance if it ran out of memory, and I highly doubt that memory would be more of a bottle neck than the processor.

ZFS is a crazy RAM-hog only if you’re doing deduplication. You don’t need nearly that much memory for normal use.

Question: Does shutting down the SBC (“sudo shutdown -h now”) guarantee that powering off won’t corrupt data, or might the SD card still be holding uncommitted writes in some internal cache?

I am not an expert, but I would not expect caches in an SD card to be held any more than absolutely necessary. SD cards are in things like cameras that might be powered down at any moment in order to have the card ejected.

The main reason to shut down Linux computers normally is to flush the SOFTWARE caches in the OS itself.

shutdown invokes sync, which is supposed to write out any cached data…. And now I’m reading linux system calls. So the sync command (http://linux.die.net/man/8/sync) actually just calls the kernel sync call (http://linux.die.net/man/2/sync) and in the bus section down at the bottom it says this: According to the standard specification (e.g., POSIX.1-2001), sync() schedules the writes, but may return before the actual writing is done. However, since version 1.3.20 Linux does actually wait. (This still does not guarantee data integrity: modern disks have large caches.)

So that’s interesting.

Stupidly included links and got caught in the spam filter, so here’s the short version. From the kernel sync call man page bugs section: According to the standard specification (e.g., POSIX.1-2001), sync() schedules the writes, but may return before the actual writing is done. However, since version 1.3.20 Linux does actually wait. (This still does not guarantee data integrity: modern disks have large caches.)

No, a regular power off won’t be a problem. SD card don’t have large RAM caches like SSDs.

But wear leveling operating may still be taking place (moving blocks, updating tables, …) after the write command has finished. IIRC there is a command that you can use to power down the card, when that is done you may switch off the supply without any risk.

There is never a totally safe time to power off an SD card that has bugs when it comes to unexpected power loss. The card could be performing garbage collection at any time.

When you power down RPi with shutdown command, it shuts down only the system and halts the processor, IIRC. SD card is still powered, until you unplug the RPi. Also SD card goes to sleep, when there is no clock signal…

Maybe use small SD card to store a start-up image and then load the system from HDD connected to the USB port of RPi?

There are adapters that convert SD card into PATA or SATA HDD, why not make something that converts HDD to SD? The problem of different sizes can be fixed with piece of cable and thin PCB that is cut to fit in microSD slot…

Most pi boot selection tools let you start the tool from an SD card and then start the actual os from other attached storage. Adapting a full sata drive to SD would be pretty interesting. I’m seeing 10TB digital cameras in my head…

I know about these tools, I just wondered why people don’t use them more often, if SD corruption on RPi is such a big issue.

I don’t think that connecting external HDD to camera will work in some cases. I have a camcorder in which SD card is next to battery and without battery cover battery falls out. For those situations alternative might be to use SD to WiFi bridge and WiFi-enabled external HDD. But on the other hand speed might be an issue. Also there were cameras and camcorders with build-in hard drives…

This is precisely what I do on pis and odroids. Only the MBR and /boot partitions are on the sd card. The rest is on a HD of some sort. I use a USB3SATA converter, any laptop drive (or SSD) and good to go. For somewhat lesser systems, a USB stick can take the place of the HD. I mount the HD (and some network shares) via /etc/ftsab.

On pis, there’s also a mountpoint for root in boot.ini that has to match. Done. And now you can get total backup with a simple file copy from the big guy on some other machine, and a dinky dd for the SD card.

My employer makes embedded scientific instruments that use SD cards. We have data corruption issues. Nothing anywhere near the Rasberry Pi level of drama (interestingly). Our FreeRTOS based instruments never experiance data corruption. Our NETMF based instruments rarely experiance corruption. Our Linux based instruments are probably about as bad as the Rasberry Pi. Honestly, it seems like the Penguins are doing something wrong. I suspect they don’t flush their write streams (for performance reasons) for as long as 1 minute.

When I said we had no PI like drama, and then that our Linux based instruments were about as bad as the PI, I left out that we stopped using Linux.

Another thing I’d like to mention, a problem I don’t understand yet, sometimes (rarely) our instruments can corrupt the card to the point that it cannot be formatted using Windows/OSX/etc. We have to use the official SDFormatter utility. Any idea of what causes this lower level of corruption? Is this possibly caused by those Write Aborts mentioned above?

I have this issue too. There are several SD cards on my desk that used to work well, and I know are not old enough or used hard enough to be “dead”. I’ve brought one back before by getting in a quick dd command between device resets, but that was luck. What’s the right way to zero these things?

Sometimes it’s just a partition table corruption that some OSes (in my experience Android and Windows) can’t handle. In those cases, dd’ing over the first few megs of the block device puts it in a state where those OSes will see it as a blank disk.

But the hard failures are almost always because of firmware bugs. The controller on an SD card allocates a significant chunk of each block of NAND for metadata: wear leveling, error detection and correction, and relocation. Often bugs in the controller firmware can cause the controller to lock up if the metadata is corrupt in just the wrong way (see some of the bugs here: https://wiki.cyanogenmod.org/w/EMMC_Bugs#Samsung_eMMC_secure_erase_bug ) And most controllers use a region in the NAND it’s controlling for its own firmware, opening up the possibility of completely bricking the card if a firmware bug stomps on the wrong block.

Most likely one of the tables for the FTL is corrupt. On some SD card controllers you can fix this using the OEM tool (reinit the NAND flash). You can almost reliably trigger this kind of corruption by doing a write to the card, and then switching off power when the write is in progress or just after it. Do this a few tens of times with a random delay and the card is likely nuked.

It shouldn’t corrupt the SD card if the OS doesn’t flush before a power loss. Data would be lost, but the FS would be fine. Frequent flushing and other unlucky write patterns in your application would actually be more likely wear out the SD card prematurely. It’s always a tradeoff with SD cards.

> for as long as 1 minute.

Depends on the file system used. For ext3/4 it’s 30s for data and 5s for metadata, I believe. Check the mount options used. There are various sync calls which (to varying degree, also depending on the kernel) ensure data makes it to the media.

The risk of data loss is inherent with caching; this isn’t specific to flash storage or Linux by any means but a general, well understood problem, with many solution strategies (with varying trade-offs and no silver bullet). You could do worse than to browse the documentation and e-mail list of the DB folks, e.g. PostgreSQL.

You said: “scientific instruments that use SD cards”

I doubt that those instruments use SD cards to actually store an OS. Much more likely for that application is sequential data writes for acquired data, which are relatively gentle on the cards.

Plus, even if there WAS data corruption, you might loose a little data, but that will not cause the entire instrument to be bricked.

I think that data corruption is not just a problem of sd cards. it is a fundamental problem of our current data driven society.

everything relies on data these days. and detecting data corruption should be the next big step for all sorts of computers. big servers have ECC memory to detect errors. consumer computers do not. everyone who has dealt with broken ram memory modules knows that these are a pain. it requieres extensive testing. and the only way to notice it in every day life is to hope for a bluescreen in windows, because otherwise the memory might corrupt your files or things you are currently working on and you wouldn’t even notice until it is too late.

same is for hdd and ssd. how can you tell if it is physically broken if only a few bits are damaged. of course there is SMART, but can you trust this system to guarantee the integrity of every single bit ?

i would prefer a filesystem with integraded checksums. storage space is cheap these days. the filesystem should have small checksums for every block or so. data corruption would be instantly detectable. this filesystem would also detect corruption in sd cards. if the block is small enough, the checksum could also be used to restore the corrupted data. like in a raid array, even if one drive fails you can restore it with the data from the others.

Backups with file hashes solve this problem for long term storage. Corruption in short term storage is not so easy to work around, so you generally accept that you will have to go back to your last backup and start over from there with your work.

Party on.

i am especially carefull ever since i had the problem with the USB3 on my computer, that would corrupt large files when i copied them over to an external drive. not much, but i changed a few bit. this was most noticable on video files. some players could not handle the errors in the videostream.

since this time i am using teracopy. this tool creates a checksum from the file it copies and then reads it back for comparision. i ultimately fixed the error with a new usb driver and usb firmware for the 3.0 controller chip, but how can i trust any file copy operation without checksums in the future ?

Hard drives and SSDs have built in error detection/correction for every sector. SSDs actually require error correction to function since the flash has many bad cells on such bleeding edge lithography.

You should definitely look into the “next gen” file systems like ZFS, btrfs, etc. which periodically recheck your data for consistency and avoid silent bit rot.

I would pay more for an SD card (or hard disk) that contains firmware built in to run *extra* ECC (HDDs and solid state devices both have low level ECC) while loosing capacity. Unfortunately I’m in the *VAST* minority – and manufacturers won’t cater for that 0.000….01%.

Fortunately CD’s, DVD’s and BD’s can take advantage of a program called “DVdisaster” that puts more ECC onto the disk than the low level ECC does. Regrettably there is no solution (that I’ve found) that works transparently on other storage media. There are “solutions”, but they mess horribly with, well actually they don’t actually even try, to be transparent.

JFFS2 was deprecated long ago in favor UBIFS. UBIFS scales to much larger flash sizes than JFFS2 ever will.

It’s not exactly ‘deprecated.’ JFFS2 is more space-efficient, so for small partitions it’s fine.

“Small” here means “under 16 MB,” so obviously the BeagleBone Black example of 512 MB would be horrible with JFFS2. It’d be nice if JFFS2 was named “Small Flash Filesystem” and UBIFS was “Large Flash Filesystem.”

Yeah, and for example anyone with a C.H.I.P is using UBIFS because it has a large raw NAND flash chip on board. If you want some idea of how scary modern flash memory is, the UBIFS docs are worth a read: http://www.linux-mtd.infradead.org/doc/ubifs.html The “unstable bits” issue is particularly, errm, interesting: basically, if there’s a power failure then the data may appear to have been erased or written successfully, but then spontaneously corrupt itself beyond what the error correction can handle.

“In the age of Flash memory, the role of the file system is changing, and it’s about to absorb the functions of the FTL. The file system JFFS2 is prepared to directly manage raw NAND Flash memory, resulting in more effective wear-leveling techniques and the avoidance of unnecessary write cycles.”

JFFS2 is 10 years old. It doesn’t scale to GB+ flash memories. That’s why it gets used on OpenWRT devices, where the flash is tiny. Raw NAND flash filesystems on Linux are a fairly active area of development, so there’s a fair amount of options out there, but UBIFS is the one that’s got a fair amount of traction at this point.

See here for a comparison on JFFS2/UBIFS. The “money plot” there is on slide 22, where you can see the scaling issue: up to 2 minutes (!!) from mount to usable (see slide 23) for JFFS2.

raspbian is Debian based, so I install flashhybrid on all my Pis. The one I’m using as ‘desktop’ computer (well, more like an ‘intelligent’ terminal), I rarely shut down, but simply unplug at the end of the day.

But for embedded systems, a RAM based file system (like OpenWRT uses) is the much more reliable (and simpler) solution.

Many routers that run OpenWRT have slow flash, so running entirely out of ramdisk is fine. But if your system is using sufficiently fast flash, read only root + unionfs ramdisk or a few tmpfs mounts is a better use of RAM.

My router has 16MiB of RAM (as much as a Sun serving as the mail hub for the cs department of a large university in the early nineties ;-}

My Pis have 32 to 64 times as much. Thankfully, I don’t need to count bits anymore …

The nature of any Linux is that is pretty much half baked by default. It’s no wonder it struggles with data corruption during a less than graceful shutdown. Utopias of everyone working towards a common goal sound wonderful on paper. In the case of Linux, it works to some degree. But you also have a lot of choices when building a distro, and you can end up with some real garbage in the works. Especially when it comes to file system error protection. Then you take those problems and put them on media that is already flakey enough on its own, you’re going to lose data at some point.

PI’s as wonderful as they are, are not real computers and should not be trusted as such. They come at such a low, low price for a reason.

People hate spending money, but walled gardens offer a little more in the way of protection. It’s just the way it is.

Clearly you’ve never performed SD stress testing. The issue of SD card corruption and even total death due to sudden loss of power is OS agnostic and due to bugs within the SD card firmware.

^^

I have yet to corrupt a modern (ext4?) Raspbian system by pulling the plug, but I have had a few microSD cards brick themselves after a power cycle.

I just had microSD corruption on my Pi two days ago while installing lots of packages… this speaks more about the quality of power supply I was using than the Pi, though : )

Always wondered why people weren’t mounting their root/boot/et c. partitions as read-only. In developing custom distros for embedded hardware (routers and process control mostly) that’s sort of standard practice. Even with modern storage where write endurance isn’t the issue it used to be, everything but /var gets mounted read-only, and often /var is a ramdisk that gets synced to Flash every now and then.

Read-only filesystems being safe is *so* ten years ago.

NAND flash needs writes, even if the filesystem doesn’t *do* any writes. NAND flash develops single-bit errors over time, and if the FTL detects those errors, it’ll fix them (through ECC), and write back that data in an empty block, marking the old one as empty. In addition, MLC or TLC flash have ‘read disturb’ errors: reading bits from a shared cell can corrupt the level enough to affect the other bit: conceptually, reading from one block can cause errors in another block. Again, an FTL fixes that by detecting the error, and rewriting the block elsewhere.

If a power cut happens during those sequences, you can still lose data – in the worst case the FTL’s map of what blocks are OK and mapped where can get screwed up.

Most FTL-type devices are protected against that (they’ve got enough backup power that they can finish things) but not all.

Read-only root partition sounds like a good thing to try, but has anyone here looked into the NARD SDK? (https://sourceforge.net/projects/nard/)

So I guess that I get to be the annoying person who complains about word choice.

“i.e.” means “that is” which would imply that all blocks are 16kB sized and all small reads are 1kB. What you were looking for is “e.g.” which means “for example”.

I thought “i.e.” meant “in example” like “e.g.” means “exemplum gratia”

you are correct:

“i.e.” => “id est” => “that is”

“e.g.” => “exempli gratia” => “for example”

If you leave your pet behind when going on vacation and rely on an automated feeder to keep them alive… Well, instead of saying what I’m really thinking I’ll just say that you shouldn’t have pets.

I used a lego tread loaded with food and a web button to turn on the lego motor for a moment. worked great. spock lived.

This article should certainly mention F2FS, Flash-Friendly File System. It’s much less demanding on flash memory firmware and typically higher performing.

the assertion that SBC evolved from educational purposes is a bit uncomfortable to me…

Unless you incorporate a safe shutdown circuit into your UPS you’ve only postponed the inevitable. You still face the same SD corruption issue when the battery runs out of power.

The Beaglebone Black doesn’t have any onboard NAND. The link you posted is a wiki page for the BeagleBoard, an entirely different beast.

Corrected, you’re totally right, thanks!

When Using FatFs and a micro SD card:

I can detect main power loss.

Interrupt the microcontroller.

Then finish writes and close the file system.

How long do I have to hold up bower to the micro SD card to finish writing?

That is the spec I wish was published. ;)

You won’t find that answer anywhere because the answer depends on how much is going on with the PI when power is interrupted and the write speed of the SD card.

SD-Card Raid ?

I could imagine, that it could make sense to build a SD-Card RAID controller. Put two or four sd-card slots and one sd-card connector with a controlling chip in between on a little pcb. Maybe with a FullyBatterybackedWriteCache, and litte selector for Raid-mode 0,1,10… and leds for card-ok/card-bad//card-rebuilding/card-activity. I know some of you could do that.

Similar products already exists but they all offer SATA or USB on the host-side:

http://www.running-system.com/microsd-card-raid-1-vmware-esxi-boot-device/

http://www.cypress.com/file/96431/download

It’ll probably be cheaper and better to use an SSD then, but just because why not: 10x microSD array FTW

Found here: https://usb.brando.com/10-x-micro-sd-to-sata-ssd-adapter-raid-quad-2-5-sata-converter_p13939c0046d015.html

Holy camoly! Please tell me those 10 SD cards can be run as 8 parallel bits + 2 parity bits, for increased throughput and reliability!

Nope, only Raid 0. You know, for applications where a single SD card is not unreliable enough.

Funny thing with SD RAID – HP has some blade servers like BL495c without HDD, but with dual SD RAID, only for boot partition. But problem is that, in several years we have seen no problems with SD cards, but controllers failed almost every two-three months. Only power off sequence “fixes” problem. So, sometimes workaround problem creates more problem that problem itself :)

What we need is mram or 3D xpoint based sd cards..

and sata compatible sdio interface (using the 4 bit sd lanes as rx+- and tx+-)

In fstab mount your flash based partitions with the additional setting:

commit=300

The default setting in Linux is to flush write buffers every 5 seconds. If you have a battery backup (I want to stress this point) you can increase your write commit interval for DRAMATICALLY longer lasting flash memory. Adding commit=300 will write to the SD card every 5 minutes, instead of every 5 seconds. Now a program can always ask to flush the write buffer, or you can run a sync, but unless that’s happening all the time that one setting should be enough to increase the useful life of an SD card beyond what will probably be expected of the single board computer itself.

Or just use a board like the Olimex Lime which has a SATA port and on board LiPo battery connector and charge circuit.

Producnt my company make load either from an industrial USB flash device or an embedded SATA device like what you’ll find here: http://www.smartm.com/products/storage/embedded.asp

MTTF is the key here, how long does your app need to live? With Linux, the file system tends the be the thing that will corrupt first, but I thought EXT3 did awy with those issues.

A sywtem with 2 micro SD cards might be the way to go. It would be nice if the SBCs could support some sort of IPL from a NOR flash device with a second stage load from a suffiecently large NAND device or a network boot with a third device for data storage.

At my current employer we produce boxes that sit for decades in power plants, protecting some functions. Different generations have had different sorts of flashes, but in the latest one it is normal sd-cards. Previously it has both been soldered flash and compact flash.

We test all new cards via a re-boot sequence for one, since our reboots are very hard on the cards. The thing is though, a regular sd-card bought at a store chokes almost right away. The industrial cards we use are a lot better, but even they are not always great, hence the testing. Currently I think we are using Swissbit, although its a BOM-locked one so might not be the same consumers can buy, I don’t know. And they are not cheap.

We also use industrial rated microSD cards. They’ve survived serious abuse, and >50k boot cycles during initial testing (boot fully, drop power rather than shut down). Our Linux filesystem is read only – which really helps according to our testing. We do have a RW partition for storing data & logs, and do not cache writes. We have >10K units out there and only a few per year come back with corrupt microSD.

We tryed to build serial-dataloggers for QA on our product (in house long term testing). First, we went with SD cards to write the logs on, but after around 50 consumer cards had died, we went ahead and got the industrial cards. They hold up a bit longer, but price is high, and eventually they still fail. We now went ahead and redesigned our datalogger to use USB-OTG and accept USB-sticks, but again, they die just as fast as the cards.

We are still not shure what to try next for reliable and cheap storage options for our logger. It’s based on an STM32 microcontroller running fatFS and we added a 16MB buffer RAM to the controller so we can log to RAM first and reduce the amount of flash writes.

That sounds surprisingly bad. If you are doing enough volume you should be able to reach out to your SD card supplier and have them analyse the actual cause of the card failures and give advise on how to avoid that particular failure. If you are buying premium cards they should be more receptive to helping you.

One issue I see with you set up is FAT is likely to writing a lot to the same areas where as new file systems like EXT4 are better behaved in this respect.

The cards have built in load leveling so you are not writing to the same physical address each time.

The problem is if you only write a few bytes at a time the controller is reading a 32KB+ page from the flash into a cache modifying it then writing it back to the flash. You are wearing out space that you are not even storing data on. Or if you write across page boundaries… You need to do writes that are a multiple of the pagesize for your SD card. Not sure how you do this when using a filesystem.

Does anyone have any experience with MTBF for sequential writes?? Not using a filesystem. Any recommended “industrial” cards that can be bought in small quantities?

With SD cards you never know what you are going to get until you buy and test it in your system. I’ve got a Samsung pro+ that comes out of idle in 10 ms and a sandisk extreme that takes 200ms. Once initialized the sandisk goes into a busy state less than the samsung during writes so its faster.

“Any recommended “industrial” cards that can be bought in small quantities?”

Seconded.

Really want a reliable card I can abuse on a project. (R/W cycle-wise.)

Swissbit make some nice ones, which are available in onesies from the usual suspects (Digikey, Mouser), but you’ll pay for the reliability. Search for SLC cards with industrial/extended temperature range. I have also found these to have less variable write latencies (I.e. “hiccups”), which can matter for embedded projects sampling lots of data with limited buffer RAM.

Thanks! I’ll seriously consider those.

No problems here with my core memory. ABSOLUTELY none. Zed. Nada.

How many K do you have?

My first (shared) computer, IBM 1401, had 16K core.

Making your FILESYSTEM partition readonly will not protect you from power down corruption on these devices when writes are occurring on other partitions. The wear-leveling is happening at the device level. And from the sounds of it, even making the device readonly (can that even be done?) doesn’t protect you due to the read degradation effect. At least that’s my understanding from reading all of this.

Thanks for the tip about Swissbit.

Yes, we tried making the file system mount read only and it did not help as the ‘read disturb’ failures occur purely from reading too much without a pause in access.

I’m surprised to not hear another possible solution that hasn’t been mentioned… Worried about using a cheap single board computer with unreliable SD storage for mission critical applications? If the application supports it, just use two!

At $DAYJOB we got in a sticky situation where a physical office needed a device to serve as a recursive DNS server with custom configurations that the fancy appliance it was usurping couldn’t do. The easy solution was a pair of raspberry pis.

Resilient cloud computing depends on redundancy. You’d never depend on a singly commodity VM for high availability. RAID was invented around INEXPENSIVE storage – something many people forget.

Reducing the price of a thing until you can afford two, regardless of quality, can be a great strategy.

I have a problem while using fram with I2c bus the data suddenly got corrupted after some tests. is there any way to get a solution for this.

I’ve had issues with data corruption in the past with the Raspberry Pi. I mitigated my problem by switching over to BTRFS which performs CRC32 by default for data integrity to prevent silent data corruption, giving very obvious IO errors when bad data is detected instead of silently using it. I also perform regular scrubs to preemtively check for errors.

This doesn’t totally prevent corruption on consumer SD cards, but it helps me sleep at night knowing that using corrupted data from the disk is highly unlikely.