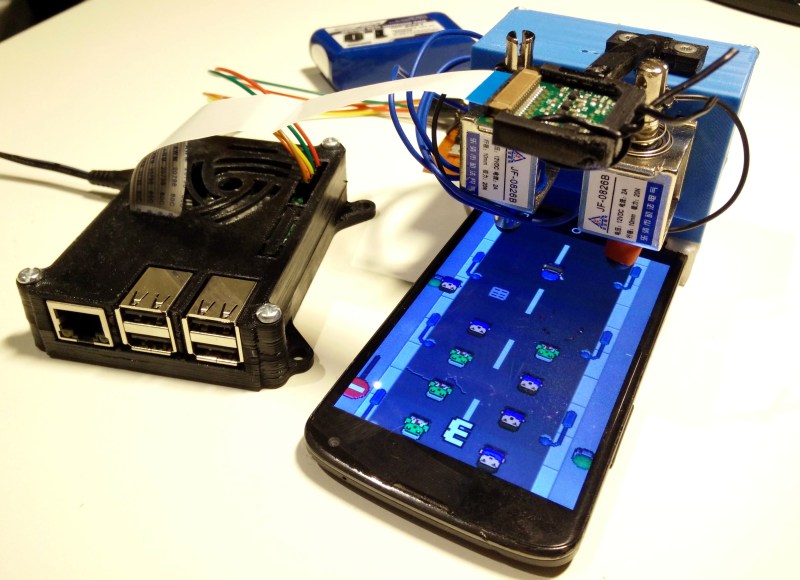

This Raspberry Pi 2 with computer vision and two solenoid “fingers” was getting absurdly high scores on a mobile game as of late 2015, but only recently has [Kristian] finished fleshing the project out with detailed documentation.

Developed for a course in image analysis and computer vision, this project wasn’t really about cheating at a mobile game. It wasn’t even about a robotic interface to a smartphone screen; it was a platform for developing and demonstrating the image analysis theory he was learning, and the computer vision portion is no hack job. OpenCV was used as a foundation for accessing the camera, but none of the built-in filters are used. All of the image analysis is implemented from scratch.

The game is simple. Humans and zombies move downward in two columns. Zombies (green) should get a screen tap, but humans should not. The Raspberry Pi camera takes pictures of the smartphone’s screen, and an HSV filter is applied to remove everything except green objects (zombies). That alone would be enough to get some basic results, but not nearly good enough to be truly reliable and repeatable. Therefore, after picking out the green objects comes a whole chain of additional filtering. An overview is in [Kristian]’s blog post, but the final report for the project (PDF) is where the real detail is.
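To make the first step concrete, here is a minimal Python sketch of an HSV green filter written without any OpenCV filter calls. It is not [Kristian]’s code: the threshold values, the function names, and the pixel-count check at the end are all assumptions for illustration, and real thresholds would have to be tuned against actual camera frames.

```python
import colorsys

# Assumed thresholds (hypothetical, not taken from the project).
# colorsys reports hue as a fraction of the colour wheel, so pure green is 1/3.
GREEN_HUE_RANGE = (0.22, 0.45)
MIN_SATURATION = 0.4   # reject greys and washed-out colours
MIN_VALUE = 0.2        # reject near-black pixels


def is_green(r, g, b):
    """Return True if an 8-bit RGB pixel falls inside the assumed green band."""
    h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
    in_band = GREEN_HUE_RANGE[0] <= h <= GREEN_HUE_RANGE[1]
    return in_band and s >= MIN_SATURATION and v >= MIN_VALUE


def green_mask(image):
    """Turn a 2D list of (r, g, b) tuples into a 2D list of booleans."""
    return [[is_green(*pixel) for pixel in row] for row in image]


def column_has_zombie(mask, col_start, col_end, min_pixels):
    """Count green pixels in one vertical strip of the mask (a crude detector)."""
    count = sum(1 for row in mask for hit in row[col_start:col_end] if hit)
    return count >= min_pixels
```

A mask like this is only the starting point; the article notes that a whole chain of further filtering follows it before the result is reliable.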

If you’re interested mainly in seeing a machine pound out flawless victories, the video below shows everything running smoothly. The pounding sounds make it seem like the screen is taking a lot of abuse, but [Kristian] mentions that’s actually noise from the solenoids and not a product of them battling the touchscreen. This setup can be easily adapted to test out apps on different models of phones — something that has historically cost quite a bit of dough.

If you’re interested in the nitty-gritty details of the reasons and methods used for the computer vision portions, be sure to go through [Kristian]’s GitHub repository, where everything about the project lives (including the aforementioned final report).

We have also seen projects like this LEGO robot playing an iPad and this Arduino using a servo to hit a button at just the right time, which show that the goal isn’t always research. Sometimes the goal is just to have a machine grind out a repetitive task while you sleep.

Whether or not it counts as “cheating”, it’s still a very interesting feat, especially with all the image processing done from scratch.

There’s been a rise in projects involving robot fingers, CV and mobile games, particularly in Japan, where mobile games are usually more popular than console/PC ones. Some examples:

https://www.youtube.com/watch?v=iRyNR3vLvK8

https://www.youtube.com/watch?v=0uzP6t-YuH4

Instead of using a physical phone-touching thing (and the associated solenoids), could you just put a metal thing close to the screen and, when you want to register a touch, run a current through it? Or put an electric charge on it? I’m not super up to date on exactly how capacitive touchscreens work (I know they detect capacitance, but is it possible to vary that without moving things physically?), but if you can do it without movement it seems like it would be much better, for both the phone and the high score.

I have looked but have not seen an easy way of doing this electrically instead of mechanically. If there is one I’d like to give it a try as well.

A pair of RF PIN diodes in series will do the trick, with a contact pad in between them as close to the diodes themselves as possible. Then add a series resistor and drive it off a pair of GPIOs from an Arduino or whatever. For best results, use at least 5V for the reverse bias mode. (That is trivial to implement with a single transistor and a few resistors.)

The report concludes that the robot only beat his own scores by a disappointingly small margin, hardly “absurdly high”.

If it wasn’t a school report, a lot of that processing could have been dumped by using a lower-resolution camera.

Don’t even need to do image analysis. Two simple optical sensors looking for that green color are enough: align the sensors at the right location and it will always win, no matter how fast it runs.

I used to do that with a video camera counting pins on bowling alleys: place the camera at the right spot and it can see all the pins in two alleys. Look at one scan line for the bright pips and you have your information.

The same could be done here. Heck with image processing: take one pixel line at the right location and look for that green. Really simple to process now.
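For anyone who wants to try the single-scan-line idea, here is a rough Python sketch. It assumes the row arrives as a list of 8-bit (r, g, b) tuples and that the two game columns split at the middle of the row; the function names, the green margin, and the minimum run length are all made up for illustration.

```python
def green_dominant(pixel, margin=40):
    """Crude RGB test: green must exceed both red and blue by `margin`."""
    r, g, b = pixel
    return g - max(r, b) >= margin


def green_runs(scanline, min_run=3):
    """Return (start, end) index pairs for runs of green pixels in one row."""
    runs = []
    start = None
    for i, pixel in enumerate(scanline):
        if green_dominant(pixel):
            if start is None:
                start = i              # a new run begins here
        elif start is not None:
            if i - start >= min_run:   # ignore specks shorter than min_run
                runs.append((start, i))
            start = None
    # Close a run that reaches the end of the row.
    if start is not None and len(scanline) - start >= min_run:
        runs.append((start, len(scanline)))
    return runs


def columns_to_tap(scanline):
    """Map each green run to 'left' or 'right' by the centre of the run."""
    midpoint = len(scanline) // 2
    sides = set()
    for start, end in green_runs(scanline):
        centre = (start + end) // 2
        sides.add("left" if centre < midpoint else "right")
    return sorted(sides)
```

The minimum run length is there so a single noisy pixel does not trigger a tap. A plain RGB comparison like this is more sensitive to lighting than an HSV test, which is the trade-off raised further down the thread.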

I’m not a CV expert, but I wonder why he used the HSV color space in an automated system. What’s the advantage over a plain RGB implementation?

HSV handles shadowing and inconsistently lit environments far better, as it separates out the colour and brightness components of the pixel. RGB is a pretty terrible colour space for a lot of CV applications.

HSV separates out the colour and brightness components of a pixel. This is far better for inconsistently lit environments and anything with shadows.
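A quick way to see the point being made in these replies is to darken a pixel and compare the two representations. The sketch below uses Python’s standard `colorsys` module; the sample colour and the 0.5 shading factor are arbitrary values picked for illustration, and a uniform scale is only an idealised model of a shadow.

```python
import colorsys


def shade(pixel, factor):
    """Simulate a shadow by scaling every RGB channel by the same factor."""
    return tuple(round(channel * factor) for channel in pixel)


def to_hsv(pixel):
    """Convert an 8-bit (r, g, b) tuple to (h, s, v), each in the range 0..1."""
    r, g, b = pixel
    return colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)


lit = (40, 200, 60)          # an arbitrary "zombie green"
shadowed = shade(lit, 0.5)   # the same surface at half the brightness

h_lit, s_lit, v_lit = to_hsv(lit)
h_dark, s_dark, v_dark = to_hsv(shadowed)

# All three RGB channels change, but hue and saturation stay (almost
# exactly) where they were; only the value channel drops.
print("RGB lit / shadowed:", lit, shadowed)
print("hue        lit / shadowed:", h_lit, h_dark)
print("saturation lit / shadowed:", s_lit, s_dark)
print("value      lit / shadowed:", v_lit, v_dark)
```

A threshold on hue therefore keeps matching the shadowed pixel, while a fixed RGB threshold would have to be loosened on all three channels to do the same.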

So did anyone consider the philosophical implications of computers playing computer games? Nah, me neither.