If you are interested in how a computer works at the hardware grass-roots level, past all the hardware and software abstractions intended to make them easier to use, you can sometimes find yourself frustrated in your investigations. Desktop and laptop computers are black boxes both physically and figuratively, and microcontrollers have retreated into their packages behind all the built-in peripherals that make them into systems-on-chips.

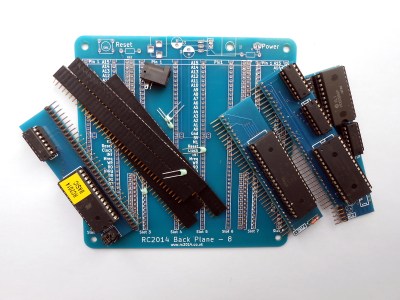

Maybe you’d like to return to a time when this was not the case. In the 8-bit era your computer had very accessible components: a microprocessor, RAM, ROM, and I/O chips all hanging from an exposed bus. If you wanted to find out how one of these computers worked, you could do so readily. If this is something you hanker for, then happily there are still machines that will allow you to pursue these experiments, in the form of retro computers for hobbyists. Most of the popular architectures from the 1970s and 1980s have been packaged up and made into kits, returning home computing to its 1970s roots. Breathe easy; you won’t have to deal with toggle-switch programming unless you are really hard-core.

When you have built your retro computer the chances are you’ll turn it on and be faced with a BASIC interpreter prompt. This was the standard interface for home computers of the 8-bit era, one from which very few products deviated. If you were a teenager plugging your family’s first ever computer into the living-room TV then your first port of call after getting bored with the cassette of free educational games that came with it would have been to open the manual and immerse yourself in programming.

Every school had similar machines (at least, they did where this is being written), and a percentage of kids would have run with it and become BASIC wizards before moving seamlessly into machine code. If you have a software colleague in their 40s, that’s probably where they started. Pity the youth of the 1990s for whom a home computer was more likely to be a PlayStation, and school computing meant Microsoft Word.

The trouble is, in the several decades since, 8-bit BASIC skills have waned a little. Most people under 40 will have rarely if ever encountered it, and the generation who were there on the living room carpet with their Commodore 64s (or whatever) would probably not care to admit that this is the sum total of their remembered BASIC knowledge.

10 PRINT "Hello World"

20 GOTO 10

If you have built a retro-computer then clearly this is a listing whose appeal will quickly wane, so where can you brush up your 8-bit BASIC skills several decades after the demise of 8-bit home computers?

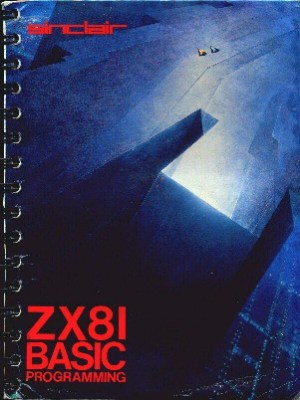

A good place to start would be a period manual for one of the popular machines of the day, particularly if it’s a machine you once owned. These are all abandonware products, many of which were produced by now-defunct manufacturers, so you’ll have to use your favorite search engine. In this you are unlikely to be disappointed, for the global community of 8-bit enthusiasts has preserved the documentation that came with almost all machines of the day on multiple sites. It’s easy to be diverted into this particular world as you search.

These manuals usually have a step-by-step BASIC programming course, as well as an in-depth reference. They will often also have information on the architecture of the machine in question, and its memory layout. It’s worth remembering that each machine would have had its own BASIC dialect, so there may be minor quirks peculiar to each one. But on the whole, outside some of the graphics and sound commands, the differences are fairly insignificant. It is, after all, as the name suggests, basic.

One thing worth commenting on is the iconography of these manuals. It’s striking, with nearly four decades of hindsight, that these were exciting and forward-looking products. Computers had not become boring yet, so their covers are full of gems of early-80s futuristic artwork. Our colleague [Joe Kim] is carrying on a rich tradition.

When 8-bit machines were current, it’s important to remember that for the vast majority of computer users there was no Internet. The WWW was but a gleam in [Tim Berners-Lee’s] eye, and vanishingly small numbers of parents would have bought their kids a modem, let alone allowed them to dial a BBS. Thus the chief source of technical information still came from printed books, and the publishing industry rose enthusiastically to the challenge with a raft of titles teaching every possible facet of the new technology, including plenty of BASIC manuals.

Just as with the computer manuals mentioned above, these can often be found online by those prepared to search for them. Better still in the case of the iconic computer books produced by the British children’s publisher Usborne, some of them can even be legitimately downloaded from the publisher’s website (scroll down past the current books for links). Nowadays their computing titles teach kids Scratch on their Raspberry Pi boards, however for the 1980s generation these were the 8-bit equivalent of holy texts. In no other era could a title like “Machine Code For Beginners” have been a children’s book.

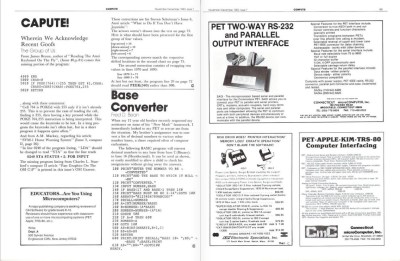

There is a further period source of BASIC programming information from the 8-bit era. Computer magazines didn’t come with a cover disc — or cassette — until later in the decade, so they had a distinctly low-tech approach to software distribution. They printed listings in the magazines, and enthusiasts would laboriously type them line by line into their machines. The print quality of listings reproduced in print from photocopies of dot-matrix printouts was frequently awful, so the chances are once you’d typed in the listing it wouldn’t run. It was an annoying experience, but one which taught software debugging at an early age.

Thanks to the Internet Archive we have a comprehensive set of period computer magazines freely available online, so finding listings is simply a case of selecting a title and browsing early 1980s issues. They often have a mix of code, from articles explaining algorithms to reader-contributed listings. It’s easy yet again to get sidetracked into the world of period peripherals and first-look reviews of now-retro machines. The cover art is still futuristic in the same way as the programming manuals; it would be a few more years before pictures of grey-box PCs became the norm.

If you’ve managed to tear yourself away from a wallow in 8-bit nostalgia to get this far then we hope you’ve been inspired to try a little BASIC on your retro computer board. It’s a language that gets a bad press because it’s not exactly the most accomplished way to program a computer and it certainly isn’t the fastest, but it does have the advantage of being very accessible and quick to deliver tangible results. When your computer is as much a toy as the home computers of the 1980s were, tangible results are the feature that is most important.

[Header image: Sinclair ZX Spectrum keys with BASIC keywords: Bill Bertram CC-BY-SA 2.5, via Wikimedia Commons.]

PiPlay comes with the C64 emulator, BASIC prompt included.

I encourage all to check out the EXCELLENT Nand2Tetris course which teaches you online how to build a computer from NAND gates up to chips up to an operating system up to a programming language and compiler and finally up to a working game. Simply an amazing gift to the online community.

http://www.nand2tetris.org

Excellent, Thanks CMH62

You “forgot” to mention that it is a Coursera course and, as such, it costs money.

It appears quite free to me, on their site. Also, Coursera only used to (haven’t been on Coursera for over a year) ask for money for certificates of completion and such, not for the actual knowledge.

One thing about the books, they were reasonably priced. It was before the “textbook mentality” came to the field, so the books were affordable, but also not bloated.

Later, they all went to a larger size, and got thick, and rarely cost as little as ten dollars. I guess that also reflected a larger market.

The good thing was there were lots of remainders, so you could get them at a more reasonable price. But so many would have overlap, trying to be part of a template, and they took up space, and were heavy.

Michael

Sybex, if I recall correctly. It was amazing how quickly they could fill and publish a thousand-page book.

I have a Commodore 64, two 1541 drives and a 1604 monitor. I have lots of floppy disks too. Amazingly, they all work. There are retro computing communities online and even hardware made to connect your C=64 to the internet. I built a simple interface to connect a 1541 to an older PC. There’s a TON of software made in the 1980s that you can actually download and then save to a floppy on the 1541 as a real PRG file. It’s awesome!

As a member of more than one of said communities, we are quite active, and there’s software being released almost daily for the C64. Tons of indie titles, and most of them very good. PondSoft UK / Privy software has already released 7 this year, and have about 8 more in the works.

Also, if you liked the flash game Canabalt, there is a port of that for the 64. It plays great, with an amazing soundtrack.

Talking about BASIC: In 1974 I ordered a copy of Tom Pittman’s TINY BASIC for the 6502 based system I was building in Wirewrap. Internet? CDs? NO: it came as folded punched paper tape in the US mail. I took the tape down to SUNY where I taught electronics part-time and ran it through a model 33ASR Teletype and printed it out. My son and I laboriously typed the Motorola Hex code into our machine on our model 33 (No tape reader) Teletype and saved it on cassette tape at 110 baud with the homebrew “Modem” I had built for amateur radio RTTY. Worked! The BASIC prompt came up and my kids spent weeks writing some text mode Lunar Lander and Spacewar games. These days they are designing Wind Energy systems hardware and firmware, designing high-end communications chips that run above 10 GHz.

Started with BASIC…

Regards, Terry King …In The Woods In Vermont

Terry, was that maybe the 6800? Or maybe it was a different year? The 6502 was introduced in late ’75. BTW, since you mention 10GHz, Bill Mensch, the owner of Western Design Center which licenses the 65c02 intellectual property said in an interview last year that he estimated that if the ’02 were made in the latest deep-submicron geometry, it would do 10GHz.

10 gHz? My word!

A 10GHz 6502? Unlikely as (1) you still have to get data on and off chip and (2) it’s not in any way pipelined. Modern CPUs have large amounts of on-chip cache, and suffer large penalties for a cache miss. That’s why hyperthreading was invented, so that when a core stalls due to a miss an alternate register set can be swapped in to keep it busy while the cache is being loaded.

The second part missing is pipelining. Without this, signals have to propagate completely through the logic in one clock period. With pipelining part of the work can be done in one clock, then passed on to the next stage. That way the latencies can be fitted inside of a short clock period while still pushing out one instruction per clock cycle.
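The clock-period argument above can be put into numbers with a toy model. The stage delays below are invented purely for illustration, and the model ignores latch overhead between stages, so treat it as a sketch of the principle rather than a description of any real chip.

```python
# Toy model of why pipelining permits a shorter clock period.
# Assumption: these per-stage logic delays (in picoseconds) are made up.
STAGE_DELAYS_PS = [400, 300, 500, 300]

def min_period_unpipelined(delays):
    """Signals must cross all the logic within a single clock period."""
    return sum(delays)

def min_period_pipelined(delays):
    """Each stage is latched, so the slowest stage alone sets the period."""
    return max(delays)

unpipelined = min_period_unpipelined(STAGE_DELAYS_PS)
pipelined = min_period_pipelined(STAGE_DELAYS_PS)
print(unpipelined, pipelined)
```

With these made-up figures the pipelined design could be clocked three times as fast, while still retiring one instruction per clock once the pipeline is full.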

Sweeney, I’ll try to find it later when I have time and give the link, cued up to the right part of the interview. According to WDC (the IP holder and licenser), the 65c02 is still selling in quantities of over 100,000,000 (a hundred million) units per year; but they’re rather invisible, because they’re at the heart of custom ICs going into automotive, industrial, appliance, toy, and even life-support applications. The memory is always onboard (as is the I/O, timers, etc.). It’s like it’s _all_ cache. There is no off-chip memory in these. The fastest ones today are running over 200MHz. They’re not being made in the latest deep-submicron geometries though, which I think is under 20nm today. The 10GHz figure was Bill Mensch’s estimate of how fast they could go if they were made in such geometry.

Regarding Bill Mensch’s claim about a 10GHz 65c02 possibility, I wrote, “Sweeney, I’ll try to find it later when I have time and give the link, cued up to the right part of the interview.” It’s in this podcast and I don’t think there’s a way to give a URL with the cue-up extension; but you can go to http://ataripodcast.libsyn.com/webpage/antic-interview-96-bill-mensch-6502-chip and you only have to listen for a minute or two starting at 54:50 into it. I was wrong though. Yes, it was 10GHz, but it was not the 65c02. Even better, it was the 65816, which is the 16-bit upgrade of the ’02 with a lot of added capabilities. My ‘816 Forth runs two to three times as fast as my ’02 Forth at a given clock speed; so 10GHz would make it about 25,000 times as fast as the 1MHz 6502’s from 35 years ago would run it. He does indeed mention that all the memory would be on-chip though, like it’s all cache. I imagine the boot loader would have to be loaded into this fast RAM from flash upon power-up, before letting the processor run full speed.
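The 25,000 figure is the clock ratio multiplied by the per-clock advantage. A quick check, assuming 2.5 as the midpoint of the “two to three times” range (the midpoint is an assumption made here, not a number from the interview):

```python
# Back-of-envelope check of the speedup quoted above.
CLOCK_OLD_HZ = 1_000_000          # 1 MHz 6502
CLOCK_NEW_HZ = 10_000_000_000     # the estimated 10 GHz 65816
PER_CLOCK_GAIN = 2.5              # assumed midpoint of "two to three times"

def estimated_speedup(old_hz, new_hz, per_clock_gain):
    """Clock ratio multiplied by the per-clock advantage."""
    return (new_hz / old_hz) * per_clock_gain

print(estimated_speedup(CLOCK_OLD_HZ, CLOCK_NEW_HZ, PER_CLOCK_GAIN))
```

Using the ends of the range instead gives somewhere between 20,000 and 30,000 times.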

I am no longer in my 40s but I guess I started earlier. The book I used, and would still recommend if you can find it is “Instant Basic” by Dilithium Press. Yes it was the ’70s. https://books.google.com/books?id=Ad5WAAAAMAAJ&focus=searchwithinvolume&q=goto

I couldn’t find a site with a better copy than google’s scan.

I am well past my 40’s – far closer to 60 and an 8-bit basic was the second language I learned. I’m actually getting to the age where I’ve forgotten more coding languages and dialects than some “millennials” have ever learned.

I have been building projects since I was in 6th grade. I cringe when I see proto-boards used in finished “Maker” projects; I made my own PCB’s when I was in High School (photo-lith) and mastered point-to-point wiring about the same time. I also learned to work with sheet metal, how to use wrinkle finish spray paint to cover my lack of sheet metal skills and use rub-on lettering for more professional looking projects. Can you even get rub-on lettering any more? Prior to that I was an anti-Maker, taking apart everything I could get my hands on.

I started programming with Fortran IV in High School and later moved to Radio Shack Basic running on TRS-80 Model I running TRS-DOS (MS-DOS) and I picked up Z-80 assembly soon after (basic, as stated, was not enough). The IEEE offered an assembly language course for the i8085 when I was a sophomore in college and after I took that course, I was hooked. I used basic, including an old version of Power Basic, for various analog design tools (filter calculations mostly) until the early 2000’s. Somewhere in the early 80’s (that’s 1980’s) I picked up C and even toyed with Pascal. That’s an era when a company called Borland made some awesome programming tools.

I moved out of a design role to a parts role around 2000, and as the analytical nature of my work turned to data management and text manipulation I fell back on Perl and barely enough SQL knowledge to stay alive. I am not a professional coder; I use programming as a tool to simplify my engineering work.

My experience as an engineer is that it is rare to find people born in the 1940’s that have lots of 8-bit BASIC language experience – but that is not to say they can’t code circles around me with C, C++ or more modern OO languages.

A little respect for the aging in the community is in order. I suspect quite a few of your followers on Hackaday are a LOT older than you may think! By all means refer to the past, but please use common dates, not age brackets. Any 50-something or older looking for a job knows why I say that. Age discrimination is illegal, but so is speeding.

My first computer was a ZX-81 (bought the kit), still have it as well as the book in the photo. I got a Radio Shack book on the Pocket PC and converted all the programs to Sinclair Basic. Did the same when I got a C-64. I was in the Air Force at the time, we ended up with a HP-85 and wrote a program to calculate bore-sight constants for F-4Gs. The most complex program I wrote was to solve differential equations, as part of a class. Everyone else had FORTRAN, I was stuck using Basic on my new XT clone. In “turbo mode” it took an hour.

In some way I miss those days, but the new generation of SBCs have helped bring back that same feeling.

Another way to go with older CPU’s is what I call an Olduino. An arduino work-alike with a retro-cpu. My latest is a Z80 that can attach arduino shields and talk ethernet!

https://hackaday.io/project/10626-olduinoz

Oh, I forgot why I was posting! One of the best things I’ve done with the olduino’s is adapting the basic language bagels game from “101 Basic Computer Games”. There are versions in C for the 1802 and Z80 Olduinos speaking both HTTP and Telnet.

https://olduino.wordpress.com/2015/03/13/in-which-i-plan-to-serve-doughnuts-instead-of-bagels/

“If you are interested in how a computer works at the hardware grass-roots level, past all the hardware and software abstractions intended to make them easier to use, you can sometimes find yourself frustrated in your investigations.”

Remember the days of building one out of gates and bit-slice.

I’m almost out of my 40s and the first computer I used was a Commodore PET2001, at a friend’s house. It must have been one of the first ones in my country (The Netherlands) at the time. When I was 12, my school had a PET2001 and a few other CBM computers, and later on a C64. I learned a lot about programming from magazine articles and books such as “101 BASIC computer games” by David Ahl, and those were pretty much the only source of information. The public library in the village where I lived had exactly one book about Basic.

Nowadays, information about these computers is so easy to find on the Internet. I’m working on a project (https://hackaday.io/project/3620) that can probably emulate many of the early 6502 computers that came out before everyone started using ULA’s and custom chips, and I feel like I’m as smart as Woz when he designed the Apple 1, but really those computers are just a lot simpler than they are today and if you were at the right place at the right time with the right data people around you or the right company (Woz could get chips from HP where he worked), you could make something that would change the world. Nowadays the vast majority of computers are PC’s based on a 1982 design by IBM, and the small embedded computers everyone talks about are Raspberry Pis and Arduinos.

When I left high school, I was optimistic that schools would start teaching (at least some) people how to program (I never had any programming classes, that all happened after I had already left school). People would learn programming languages, buy computers to program and computers would get cheaper and the world would be a smarter place. That partially became true but instead of people getting smarter because of computers, computers simply became dumber to accommodate people who can’t program and don’t want to. I have to say I have mixed feelings about that.

We must have attended the same school! Those CBM’s were really fabulous at the time and we even had a double disk drive at our school. Good times

The same school? Possible… Was your school’s double CBM floppy drive also shared between two computers, and could it also store an entire MEGABYTE per disk, something that IBM PC’s didn’t accomplish until 5 years later or so?

A megabyte was a lot of space those days. I owned a single floppy disk that I got for free from 3M by mailing in a coupon, and I never filled it up.

I went to school in Eersel, a village south of Eindhoven. IIRC we had about 100 or 120 Kb storage on a single-sided floppy? A box of 10 floppies was > 100 guilders back then so a free disc was quite a gift.

Hey Brabo, It’s a small world… I grew up in Eindhoven but learned about computers while I was living in Goirle. I (used to) know people in Eersel but they moved to Oirschot :-)

OH MY GOD typing in MACHINE CODE from one of those mags was a nightmare. All the while keeping my fingers crossed the code was correct. Assembly would have been luxurious.

My little brother and I spent an entire day once typing in hex code from Compute! Magazine but by golly by that night we had a working spreadsheet program on our C64! Good times.

I had an S-100 machine with cassette tape storage, but little software except a clunky version of BASIC. Borrowed a schoolfriend’s copy of ‘TRS-80 Level II BASIC Decoded’ (I think it was called) and using the hex editor typed in the 12k of machine code over a week or so. Then manually patched the keyboard routine in the binary to use my machine’s EPROM monitor keyboard routine. The screen address also needed adjusting but my S-100 video card had the same 64×16 format with the addition of a Programmable Character Generator board, so I made up the TRS-80 graphics PCG font. It worked really well! I patched the cassette loading and saving to use my Monitor’s routines.

Well, I’m in my early 60’s and played around with small BASIC interpreters. My first computer was a SWTPC M6800. This powerful 8 bit machine used the Motorola 6800 running at an astounding 200KHz clock speed. Knowing the standard 2K of RAM was not enough I bought an additional 2K when I bought the kit in 1976.

Mostly I programmed in assembly, but one day some computer magazine I subscribed to contained a thin plastic 45 RPM record. It had a BASIC interpreter on it. It took me dozens of attempts with my stereo turntable and level settings to finally get a clean load. Then I stored it on my “mass storage” cassette tape system. It only took about 7 minutes to load every time I wanted to run BASIC!

My next few computers, a CPM machine, Commodore C64 and a PC clone came with more powerful BASICs.

Actually Commodore’s BASIC was pretty bad. Atari’s was worse, way worse! If it wasn’t for the BBC Micro and its exemplary BASIC, I’d think it was impossible to implement on a 6502.

C64 BASIC had nothing for controlling sprites or sound. IE the reason people bought the damn thing! If you have to enter POKEs to get anything done, it’s not really BASIC.

I agree BBC BASIC is excellent, particularly in having structured programming support. It’s too bad the graphics and sound on the BBC Micro weren’t comparable with the Commodore 64 and Atari 800’s. Hardware scrolling, sprites, and high quality sound aren’t necessary for fun gaming, but they help.

The core of Commodore’s BASIC was very good as it was Microsoft BASIC. When you say it is bad, I suspect you are referring to the fact that the version on the Commodore 64 had no commands for graphics or sound, and sometimes even for disk commands. I agree that’s bad. Many people bought Simons’ BASIC, which not only added graphics and sound commands, but dozens of others, and made Commodore BASIC faster as well.

Commodore later put out computers called the 16, Plus/4, and 128 which have excellent BASICs. They’re still Microsoft BASICs but add all kinds of graphics, sound, disk, and other commands.

Atari BASIC is also actually very good in many ways as it does have commands for graphics and sound, and is very user friendly. The problem with Atari BASIC is that it is slow, mostly due to it having poorly optimized floating point routines in the operating system, thus it is not exactly the fault of the BASIC. Third party replacement floating point ROMs are available which speed things up. Additionally, it wasn’t difficult to replace Atari BASIC with another language cartridge – just plug it in.

Another common complaint with Atari BASIC is that it doesn’t support arrays of strings. This isn’t as bad as it initially sounds, but merely means you have to do arrays of strings much like they are done in C.
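For anyone wondering what “much like they are done in C” looks like in practice, the usual trick is one long buffer carved into fixed-width slots. Here is a minimal sketch of that idea; `SLOT_WIDTH` and the helper names are hypothetical, picked for this example, and the code illustrates the technique rather than any Atari BASIC syntax.

```python
# Sketch: an "array of strings" stored in one flat buffer, the way a
# C char[count][width] table works. Names and sizes are hypothetical.
SLOT_WIDTH = 8

def make_table(count, width=SLOT_WIDTH):
    """One flat, space-filled buffer holding `count` fixed-width slots."""
    return bytearray(b" " * (count * width))

def put(table, index, text, width=SLOT_WIDTH):
    """Store `text` in slot `index`, truncated or space-padded to `width`."""
    data = text.encode("ascii")[:width].ljust(width)
    start = index * width
    table[start:start + width] = data

def get(table, index, width=SLOT_WIDTH):
    """Read slot `index` back, dropping the trailing padding."""
    start = index * width
    return table[start:start + width].decode("ascii").rstrip()

names = make_table(3)
put(names, 0, "ATARI")
put(names, 2, "COMMODORE")   # longer than a slot, so it gets truncated
print(get(names, 0), get(names, 2))
```

The cost is the one the thread is arguing about: every entry takes the full slot width, and anything longer is cut off.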

Nah, the stuff missing in C64 and Atari BASICs was stuff that needed to be there. Graphics and sound, whatever the computer could do. That’s what gives you your reward of programming. And not being able to do string arrays is pathetic. It’s not like it needs to be particularly fast.

I’m comparing them to other 8-bit BASICs of the time. They were much more comprehensive. And as I remember, easier to use, with better error messages. Did Ataris even have error messages? I forget, but I half-remember it was just some cryptic code or something.

Simons’ BASIC sounds OK, but almost nobody’s going to use it. The BASIC that comes with the machine has to be good. You’d only buy a new BASIC if you were serious about programming, and if you were, you’d pretty much just learn machine code.

There were a few compiled BASICs on 8-bits, sometimes with a few restrictions on what you could do, a few BASIC commands or functions weren’t suitable for compiling to machine code, they needed interpreting at runtime. Or possibly, were too much work to implement. They could get a decent speed compared to interpreted standard BASIC. But generally only mediocre programs were written in them, because talented programmers just went straight to machine code. At first, without even an assembler! READ X, POKE Y,X, DATA 1,2,3,etc.
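The READ/POKE/DATA pattern mentioned above amounts to a loop that copies a list of byte values into consecutive memory addresses. A rough simulation of it on a plain byte array follows; the load address and the byte values are placeholders taken from the comment’s “1,2,3”, not a real routine for any machine.

```python
# Sketch of the READ/POKE/DATA loader pattern, simulated on a bytearray.
# Assumptions: LOAD_ADDRESS is a hypothetical free RAM area and DATA holds
# placeholder bytes, not working machine code.
MEMORY = bytearray(65536)         # 64 KB address space of an 8-bit micro
LOAD_ADDRESS = 49152
DATA = [1, 2, 3]

def poke(address, value):
    """Store one byte, rejecting values that do not fit in 8 bits."""
    if not 0 <= value <= 255:
        raise ValueError("byte out of range")
    MEMORY[address] = value

def load(data, start):
    """READ each value in turn and POKE it to consecutive addresses."""
    for offset, value in enumerate(data):
        poke(start + offset, value)
    return start + len(data)      # first address after the loaded bytes

end = load(DATA, LOAD_ADDRESS)
print(end)
```

On the real machines the last step was a SYS or USR call to jump to the loaded bytes, which is where a single mistyped DATA value would crash everything.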

Avoid any language with mandatory statement numbers.

This was a solid rule 30 years ago and all the more these days.

Ah bollocks, they’re for what they’re for. BASIC is an educational language. That some insane fuck decided people should write actual commercial code with it isn’t BASIC’s fault.

When you start taking away features from BASIC and “modernising” others, you pretty quickly end up with C. But with different keywords. Pascal then. All that stuff takes time and brainpower to learn. BASIC probably didn’t add compulsory line numbering for didactic reasons, presumably it helped the interpreter, and owes something to FORTRAN. But it was a good feature.

BASIC isn’t meant for performance or reusability or style or, gods help us, object orientation. I’d sooner make babies into handbags than force that into BASIC. It’s a simple, powerful, but deliberately limited tool. Lets you do what you want, and usually there’s only one way, the way that works. Important when learning, completely wrong when you’re actually producing.

But without line numbers, how would you ever make a simple one-line modification to your program?

(I somehow expect the answer you’ll give is to use a non-existent text editor. Kids these days, so spoiled.)

Bullshark. In the 1980s, we didn’t need no fancy editors that would let us scroll up and down in our listings. Most computers could do a LIST command and you had to insert or edit lines by using line numbers. Commodores were the one exception: you could move the cursor over the screen and edit an existing line and hit Return to update it in memory. They even designed the machine language monitor in such a way that you could move the cursor up and edit hex values and hit Return to store them. That was more than enough.

Atari BASIC also had a screen editor, and it worked virtually identically to the Commodore one. At least I haven’t noticed any difference.

ZX basic scrolled up and down like a more modern editor. The environment alternated between an edit screen and an execution screen. The edit screen showed a full page of code including the current line (the last line edited), which was marked with an inverse ‘>’ . Pressing the up or down cursor keys would move the current line pointer to the previous or next line (i.e. Line number) and scrolled the screen to make it visible if it was off the top or bottom.

Lines however were not edited in-place. You would hit the edit key and the current line would be copied into the bottom edit area to be edited. Here you would use the left and right arrow keys to move through the line, typing would insert at the cursor; delete would backspace and overtyping wasn’t possible.

In addition, the editor supplied basic keywords in context just by pressing a single key and would not allow syntactically wrong lines to be entered. In some ways this is more advanced than today’s IDEs.

I remember when I first tried to type in a BASIC program on my Spectrum I was bewildered by that inverse “>”. I couldn’t work out why it appeared in the listing, and why, when I edited the line to delete it, it didn’t appear in the edit area. It was all in the manual, of course…

Worried about line numbers? Nah, you could always buy one of the Line Number Re-numberer programs advertised in computer magazines of the day :)

Line numbers might have been a small nuisance, but the bigger problem with most BASICs was not the re-numbering issue, but rather that a line number has no human significance. My HP-71 BASIC (and probably many others) allowed meaningful branch labels (and they didn’t even have to be at the beginning of a line), so you could GOTO the label instead of a line number. With a set of language extensions from the Paris users’ group, you could have multi-line structures whose flow was controlled by the structure words rather than having to refer to any labels or line numbers. Most other high-level languages allow this. I used to write programs in text with indentations, white space, vertical alignment, etc. which most BASICs did not allow, then transform them into BASIC files before running them. Since it was easier to see what I was doing, it resulted in fewer bugs and better maintainability.
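The “write it as laid-out text, then transform it into BASIC” workflow can be sketched in a few lines. This is not the commenter’s actual tool: the `@name:` label syntax and the function name are invented for this example, and it naively rewrites any `@word`, including one inside a string literal.

```python
import re

def to_numbered_basic(source, start=10, step=10):
    """Turn indented, labelled text into line-numbered BASIC."""
    labels = {}
    statements = []
    for raw in source.splitlines():
        line = raw.strip()
        if not line:
            continue                      # blank lines are layout only
        if line.startswith("@") and line.endswith(":"):
            # A label stands for the line number of the next statement.
            labels[line[1:-1]] = start + step * len(statements)
            continue
        statements.append(line)
    numbered = []
    for i, stmt in enumerate(statements):
        # Replace each @label reference with the number it stands for.
        stmt = re.sub(r"@(\w+)", lambda m: str(labels[m.group(1)]), stmt)
        numbered.append(f"{start + step * i} {stmt}")
    return "\n".join(numbered)

listing = to_numbered_basic('''
@loop:
    PRINT "Hello World"
    GOTO @loop
''')
print(listing)
```

Fed the labelled source shown, it produces the two-line Hello World loop from the article, with the label resolved to line 10.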

You had REM to make things meaningful! Generally though BASIC programs were small enough you could keep track in your head. It’s not the sort of thing you’d write Linux in.

If you want sophisticated C-style structures, you’re probably better off with C. That’s not what BASIC was intended for. It’s for learning, keeping the difficult bits out of sight. A simplified model of a computer, with a smiley face!

REM statements helped some, but they take more memory than labels, and I seem to recall that in some BASICs, they required their own line and line number. All REMs took up memory in the final program, unlike the situation in assembled or compiled languages; so you had to be extremely frugal with them.

Simplified model, for sure. “Beginner’s All-purpose Symbolic Instruction Code” (BASIC) was an appropriate name, but I’m not sure the language itself was ever truly appropriate for much of anything.

BASIC is what I taught myself coding with, as a kid from about the age of 7. I’ve since learned a few other languages as an adult, and I know I’d have got nowhere with any of them (C, COBOL, Java, a bit of Visual Basic, and some other minor stuff). An unattended kid, teaching himself, needs a suitable language, and BASIC, in its simple, rigid, limited, 8-bit form was it. Or STOS on the Atari ST / AMOS on Amiga. They were more powerful, had lots of game-creation tools built in, the language was designed (“STOS: The Game Creator”) for that, and shipped with Atari STs after the first couple of years.

Earlier ones came with Metacomco BASIC which was AWFUL! If it’s got fucking DWORD in it, it’s not BASIC it’s assembler!

At school, around age 9 – 12, we had compulsory lessons in LOGO on 6502-based BBC Micros. Which ironically had a very good BASIC, but Logo it was. Logo is good too, for many of the same reasons BASIC is. It gives immediate results, with graphics. It’s easy to understand, and write code, knowing only half a dozen commands, and you can quickly add others as you go, experimenting and reading the manual. Logo has lots of good features for introducing coding, recursion, functions, etc. And once a kid knows there’s 360 degrees in a circle, they’re most of the way there. You draw a square, then pick up REPEAT, then bingo! It’s all there!
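The square-then-REPEAT step above works because the turns add up to 360 degrees, which brings the turtle back to where it started. A small sketch that traces the path without any graphics (the function name and the choice of “heading 0 means straight up” are assumptions for this example):

```python
import math

def polygon_path(sides, length):
    """Trace REPEAT sides [FORWARD length RIGHT 360/sides]; return the points."""
    x, y, heading = 0.0, 0.0, 0.0        # heading in degrees, 0 = straight up
    points = [(x, y)]
    for _ in range(sides):
        # FORWARD: move `length` units along the current heading.
        x += length * math.sin(math.radians(heading))
        y += length * math.cos(math.radians(heading))
        # RIGHT: turn clockwise by an equal share of the full circle.
        heading += 360 / sides
        points.append((round(x, 6), round(y, 6)))
    return points

square = polygon_path(4, 100)
print(square)
```

Changing `sides` from 4 to 3 or 6 gives a triangle or hexagon with no other edits, which is much the same discovery the REPEAT command hands to a kid.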

Logo too was invented as an educational language. More purely educational than BASIC was, Seymour Papert was interested specifically in getting young kids into programming. On its negative side, it’s not much like other languages, so transferring to non-turtle-based languages is a big step. BASIC is much easier to step on to new shores from. Perhaps Logo would be a start for younger kids, then BASIC. Then if they start to advance, either Pascal, or just cut the shit out and go straight to C. Pascal’s just C with a couple of niceifying bits added on anyway.

But currently we have those things with jigsaw blocks. And some embedded stuff, that has some potential. But I still think graphics are the way to go. Writing their own computer games would be a huge attraction for kids, and now computers are so fast the slowness of interpreted BASIC is meaningless. Seriously, I’d look to STOS, maybe get Lionet and Sotiropoulos together with some educators. Practical educators. Then go from there with something for the PC.

STOS came with example programs, quality games that ran at playable speed (on an 8MHz 68000). A good book, starting from scratch, would be good. I wouldn’t literally use STOS, I’d do something more modern, but the basic principle of line-numbered fixed-type no-messing machine-abstracted BASIC, I’d carve in stone.

I wonder who’s in charge of kids’ education with programming, now this micro-bit fad’s starting up? I can only guess it’s some half-politician half-headteacher who has his secretary print out his email each morning. That’s a problem, “those who can’t, teach” is so, so true. Not all the time of course, but we all remember the “good” teacher who was enthusiastic, really knew his stuff, and knew how to get it across to kids. Because the others were mediocre time-servers who’d forgotten most of the stuff they learned in high school. I remember school well, the standard really wasn’t high.

Just to ramble more, many schools in the UK used RM Nimbus machines, in a network, for their students (1 or 2 per classroom) and staff, including all school admin. Nimbuses were underpowered (80186 in the era of 386s), horribly overpriced (by a factor of 4 or so compared to commercial stuff) garbage. They were mostly-PC compatible, back in the DOS days.

They were garbage. But their market was education, and they knew how to talk to schools, how to sell to them. They’d come and set the network up for the school, and had tech support that teachers could communicate with. They basically took advantage of the utter ignorance and laziness of teachers regarding PCs.

At one school I went to, the science teacher fucked all that off, and instead bought 386 PCs, for I’m sure a similar or cheaper price than the 80186 Nimbuses. Because he knew what he was doing. Because he made the effort to find out.

This was all in the late 1980s, early 1990s. I’m pretty sure things haven’t changed. As I said, it’s not just a computer issue, teachers and school staff are lazy and ignorant. Perhaps no worse than other middle-class office-type employees, but we don’t send kids to offices all day.

This means a lack of support, and the required knowledge, for the kids, regarding things like Micro-bit and any other plans. Above the level of lazy teachers and admin staff who are answerable to nobody, it turns into politics. Even better!

If you go on Facebook you’ll see people who passed exams in English, maths, and science. And they can’t spell, have terrible grammar, and don’t understand the most basic scientific principles. Sort of makes the whole thing look like a waste of time.

Even in BASIC, writing programs at age seven sounds great. I’m sure I had not heard of a computer yet when I was seven. When I did perhaps a few years later, most people had never seen a computer yet, and the few who worked with them seemed next to God or something. I remember a math book in grade school that mentioned computers and had a picture of core memory (with tiny wires threaded through all the tiny donuts). I remember the first time I heard the word “software,” at about age 16. It seemed like a joke or something, except this woman who worked with computers said she did software only, and she seemed serious and professional.

My first experience programming was a light brush with Fortran my first year of college, followed later by my getting a TI-58c programmable calculator, and soon after that, a TI-59 which had twice the memory (nearly a whole KB!). The 59’s memory forgot everything every time you turned it off, but at least it had a magnetic card reader. I wrote tons of programs for those, mostly making circuit-design calculations for my audio and amateur-radio hobbies. No games. I’m not a games person, but I cannot deny that games were a major factor in rocketing the 8-bit home-computer market forward and getting kids interested in programming too.

First computer was a ZX80. 1K RAM and the CPU could either run your code or display the screen, not both at the same time. LoL. I was 12 and failing at school because they thought Dyslexia was not real and that I was just lazy. (their words to my parents). Learning to code in 1981 was the start of a new life for me, I’ve been a software engineer for over 20 years and it has so far been the best job ever! :)

Thank you 8 Bit, you saved my life.

And the one-touch keyword entry saved you having to remember how to spell them! Thoughtful old Sir Clive.

Did not consider that but yes you’re right. By the time I moved to the 16 bit world the key words were baked into my head. And ASM did not need any spelling skills. :)

Nearing the end of a degree now, something that when I left school @15 and literate was an impossible goal. If it was not the advent of computing I would still be the read sweeper I was before I got my first break.

I would not be surprised if Clive did it because he knew how bad spelling can be with engineers. :) Dyslexia is very common in my line of work and studies have shown we have an edge in computing.

https://www.scientificamerican.com/article/the-advantages-of-dyslexia/

Road sweeper, not read sweeper. Unfortunate typo LoL.

I had a Sinclair ZX1000 I played with briefly. (I think that was the model number anyway.) I figured the one-touch keyword entry was there because the membrane-switch keyboard with no tactile or other feel was impossible to type on quickly.

A couple of years later I got a handheld HP-71 computer whose BASIC was a thousand times as good. The high-quality 2/3-size keyboard was suitable for typing about 30wpm, a little over half of what I can type on a full-sized one. It had one-touch keywords too, but you could also re-define keys, so if there were things you used more, you could assign those to keys, overriding the ones printed above the keys. You could have multiple key-assignment files. Then you’d have various keyboard overlays which could be changed in a second, and you write your own key assignments on the overlays. Very convenient.

You mean Timex-Sinclair TS-1000, AKA Sinclair ZX81. 3D Monster Maze was amazing on that! Really showed what imagination and programming talent can do with a computer that contains only 4 chips! CPU, ULA, ROM and RAM. There were quite a few bits of inspired genius on the ZX81, one of them being “hi res” mode. On a text-only computer.

The “hi res” was done by altering the contents of each line of text, per scanline. So you could use the bit patterns from any of the characters, a scanline at a time. Of course it was limited to bit patterns that were actually in the character set, not every combination was available. Some art software would improvise, and do you a closest fit. There were a few hi-res games, which were a bit weird looking, but still amazing for the hardware. Sir Clive himself said hi-res on the ZX81 was impossible!
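The "closest fit" trick that art software used can be sketched in a few lines of Python. This is only an illustration of the idea as described above: the `ROM_ROWS` table is a made-up handful of bit patterns, not the real ZX81 character set, and the function names are hypothetical.

```python
def closest_pattern(wanted, available):
    """Pick the available 8-bit row that differs from `wanted` in the fewest pixels."""
    return min(available, key=lambda row: bin(row ^ wanted).count("1"))

# Hypothetical 8-pixel rows standing in for what a character ROM might offer.
ROM_ROWS = [0b00000000, 0b11110000, 0b00001111,
            0b11111111, 0b00111100, 0b01000010]

def fit_scanline(wanted_rows, available=ROM_ROWS):
    """Approximate each 8-pixel chunk of one scanline with the nearest ROM row."""
    return [closest_pattern(w, available) for w in wanted_rows]

# Two chunks have no exact match and get the nearest pattern; the third is exact.
approx = fit_scanline([0b11100000, 0b00111110, 0b01000010])
```

XOR marks the pixels that differ, and counting the set bits gives the number of wrong pixels, so the picture comes out as close as the available patterns allow.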

It’s true, kids are missing out a lot on having something as simple, as ultimately ownable, as an 8-bit. They ran no other software, no stupid OS to contend with and compromise with. The ROM had BASIC and a library of functions for text and basic I/O, basic machine-code system management stuff. There was an OS, but it was really more of a library, and you could ignore as much of it as you wanted, or work along if you wanted to extend BASIC or use its tools.

Microcontrollers are OK, in that you own the machine completely. But they don’t have graphics, they don’t have interactive interpreters. An 80s 8-bit with BASIC was really a treasure for the people of the time, and the programmers they grew into. The ZX Spectrum alone is thought responsible for Britain’s standing in the world software market, punching much above our weight, compared to our size.

There’s a book called something like “Spectrum Machine Code For The Absolute Beginner” by William Tang. Starts off with the idea of memory as a series of cardboard boxes with numbers in them (you know that metaphor if you were into computers as a kid). Then pretty quickly moves into actual machine code, writing little device drivers, using graphics and sound, everything possible really. From a beginner to an actual machine coder, actually possible in one book.

Even if you don’t have a Spectrum (and you should!) it will teach you so much of what goes on between logic gates and running software. A great start in how computers REALLY work!

I wonder if there’s some way to reproduce that for modern kids. Maybe a modern BASIC aimed at games. Something simple, logical, consistent, bombproof, and that quickly produces fun results, with a learning curve that produces more interesting stuff the more you learn. You can figure out the theory yourself as you’re working and playing.

I think being interactive, an interpreter, with no compilation step is important. It makes the code more “live”, being able to key a line in and have it work right in front of you. Lets you do little tests, experiments, to hone your assumptions.

The move from programming in BASIC (or LOGO at school) to MS fucking Word is a tragedy in modern civilisation.

One sad cause of that is that teachers are generally clueless about computers, and don’t care. That thwarts children’s learning instinct, because there’s nobody they can ask, nobody to help them with their experiments.

The “programming” stuff aimed at kids, with its little jigsaw blocks, is OK for the very simplest of principles, but you’re not gonna put Space Invaders together like that. There needs to be some simple, clear code. With very little punctuation in the syntax! STOS was good back on the Atari ST. Something like that. STOS’s authors are still producing similar stuff, Multimedia Fusion is the latest, and it can create games. I think it’s code-free though, and code is important. Partly because adult programmers have to use it. Partly because it encourages logical, algebraic thought. Partly because I don’t think there’s a denser or quicker way of getting a programmer’s complex intentions into a machine.

And Usborne! The hours and hours of my childhood, reading those books. There were dozens! Where’s my home robot? And my satellite TV and pocket computer information terminal? Two out of three ain’t bad.

Check out FIGnition.

Check out Micro Python.

All good points.

How many Dick Smith VZ200 / VZ300’s (Aussie/NZ) or VTech Laser 210 / Laser310’s are still out there?

Wanting fans to join our VZ email list.

vzemu@yahoogroups.com

Ahem-how about fast forward to 2016 ? BASIC (in assorted flavours) is still much used for programming such popular & dirt cheap micros as the PICAXE & 32 bit Micromite families! See => http://www.picaxe.com and => http://geoffg.net/micromite.html

I’ve used PICAXEs and I’ve used Commodore 64s (and a few other systems of similar vintage). Two very different worlds.

At least on the C64 I can do some string manipulation (IIRC, it’s been a while) — sure, it’s not up to making say a proper text adventure, but it’s a sight better than what I can do with those dinky chips.

Whoops, wrong handle… and wrong button. Don’t tell nobody :P

My school library had “Machine Code For Beginners” in 1988. When they asked for it back after I’d had it for a few months, I paid 10p per sheet to photocopy it. Thank-you Usborne and my librarian for being awesome.

I feel blessed I’ve grown up with such a variety of 8 bit computers, apple II, msx-1 and 2, atari 800xl, c64. I did some basic on most of them, and some assembly, pascal and c on msx2. Then moved on to Amiga 500 with Amos, which was a basic variant with a proper editor. Then C on the Amiga 1200, of course it was 32 bits. Ironically I got a pc with a pentium 75 but running Windows 3.11 which was basically 16 bit :) I also remember using gw-basic on the pc, and Quick basic. Somehow you feel the need for a basic interpreter on every new platform to learn it from scratch, basic feels familiar (except visual basic, never got used to that).

Since the pc and Windows I never went to a different platform (apart from moving to win95, win98, 2000, xp and finally 7) which is quite extraordinary if you think about it. Moving from platform to platform was quite normal from the 70s right through to the 90s.

I can’t imagine going through the pain and struggle to go to linux or x os for example, I’d sorely miss my huge number of windows applications and tools. I’ve played around with ubuntu and raspbian but I’ve been very underwhelmed by all the conflicts of modules, lengthy make scripts and conflicting x terminal software with other applications. I never realized I was so spoiled with windows, how most of the apps just work without complaint or diving into config files and kernel recompilations. But I’ve wandered off topic now ;)

That’s a list of some of the greatest 8-bit systems. You need a BBC Micro (or BBC Master) in there though. The Commodore 128 is also great.

How I migrated to Linux from Windows… spent a couple years idly tinkering with a distro called Puppy. Broke a lot, but the way it works, you can recover in a half hour flat. Switched in full-time about two, three years ago… the Puppy community unfortunately has gotten a little toxic — bad forum moderation practices, in a nutshell. I’m with Mint now and I hardly need help unless I do something under the hood (I probably ought to know better by now, I’m no dev).

If you’re used to W7, switch to something with the XFCE desktop environment. Things will be very familiar, trust me.

I’m about to give a friend a netbook of mine that I’ve had since I graduated college in mid-2009. It’s a solid system and has a fresh install of Linux Mint on it… this will be her first computer of her own — she’s used Windows a little at work, but that’s it. I know I can explain to her what she needs to know in less than an hour, and she’ll be fine.

My argument against Windows, by the way, has very little to do with raw usability (you get used to what’s in front of you) or licensing and legal crap. It’s actually simpler than that.

Linux has and does more where it matters. Windows does not come with an office suite built in. Mint has LibreOffice baked in. Windows requires third-party programs to make it work — antivirus/antimalware software at the very least, and then there’s whatever the heck you want to actually do with it. (Yes, you can get these from Microsoft for additional fees, if you actually want to… you are better off, I find, not doing that — and I’m not the only one who says that.) Windows when you get it is essentially a blank slate. Linux Mint when you get it is more like a toolbox waiting to be opened. It’s ready to go OOTB, because it’s got a lot of additional programs right there at one second past installation, and the ability to install yet more stuff with a few clicks. Windows inherently cannot make the same claim with any real degree of honesty.

Yes, if you are a hardcore gamer, Linux has little for you unless you install WINE (ugh) — but that is more a failure of Linux on the whole to properly market itself in a world of money, rather than anything else. It also doesn’t affect me, since I don’t really game any more. I will admit that I have a dedicated non-networked XP box for exactly two programs that won’t work well on Linux and don’t have meaningful equivalents — my CD of MYST (the original one, but for PC) and my copy of CorelDRAW X3 — both treasured programs. That box gets used about once every two or three months, tops… I just don’t need it all that often.

I’m not a great fan of Windows, but your reasoning is bogus. Windows machines often include a copy of MS Office shipped with them. Windows Security Essentials gives you Anti-Virus direct from the box. Windows Store lets you install stuff with a few clicks. There are plenty of free tools and packages that run just fine on Windows, and let you use it as a “blank canvas” also.

Windows didn’t get to where it is today by having no software available, or being difficult to tailor to the needs of users. Now if you want to talk about the horrors of the new UI or the amount of reporting back to base that MS want these days then I might agree with you.

There’s some free utils from The Windows Club site that make it quick and easy to nuke all the annoying things and remove Apps you’ll never ever use. If you pay attention during install you can select options to shut off the majority of the phoning home BS before it ever gets to the first full boot into Windows 10.

As for the default UI. Dull, flat, boring. Harks back to Windows 3.0 from 1990, minus the stolen from Macintosh round cornered buttons. At least with the 1607 update you don’t have to resort to a hack to be able to change the titlebar colors from white. (Stupid thing to have active and background titlebars both white!) Of course Firefox has to do their own thing, you get a grey titlebar no matter what.

I don’t like the Modern/Metro UI. Don’t use any of those new “Apps”. That UI looks like it’s inspired by Windows 1.0. They both have non-overlapping tiles with active content, flat, saturated colors and no “3D” effect anywhere.

If someone would come up with a pixel perfect XP Luna theme, or a problem free Win7 Aero Glass theme for 10, I’d be using it. Already got Classic shell, been using that since it was first available for Vista. Used to be I couldn’t stand Luna on XP, always switched it to Classic first thing. Then MS made Classic in Vista so subtly *wrong*. Things that should be square were not, and other things were so slightly off or missing and there was no way to fix it. Didn’t take me long to get used to Aero Glass. MS managed to make a “pretty” UI that wasn’t annoying and distracting, unlike the contemporary UI in OS X. OS X still bugs me, dunno exactly what but the look of it just doesn’t sit well. I used Mac OS 9 and earlier for years and it was fine.

I’m finding Windows 10 to have far better support for some old programs than any version between it and the version the software was originally made for. ‘Course the 64 bit versions don’t run any crufty old 16 bit programs without setting up a virtual machine.

One of the oldies is the game Darkstone. It was ported to windows 9x from the original Playstation, and much improved in the process. Upon first try in 10 it was all graphical funkiness. So I went to the shortcut properties, clicked on the compatibility tab then the compatibility troubleshooter. It analyzed the program and adjusted whatever it adjusted. The game runs exactly like it did back on Windows 95.

I still have the TRS-80 my dad purchased to learn assembly language on and an Apple IIgs I literally dug out of a dumpster (to go with my huge collection of disks from elementary school) and I’ve taught my two daughters (now 11 and 13) how to use both of them.

The girls were first introduced to ‘programming’ on these two machines when they were 4 and 5. Later, we ‘coded’ Emilie’s first game, Space Poo, using p5 on a MacBook Pro. Then came the Scratch / Makey-Makey wave. Now we’re back to using java (and the Arduino) as an introduction to Robot C for use with our homebrew Botball Team.

But Maddie sat down and wrote a program on that “Trash-80” a couple of weeks ago, just to make her mom laugh!

All of that is to say, logical thinking isn’t machine/language dependent. Kids will soak up whatever you put in front of them! I know I did! :)

You would not have got a game called Space Poo published in the 1980s, but it gave me a good laugh. Back in 1987 at school my friend Jim wrote a very simple program in s-Algol, which as I recall went like this:

program plop;

var

name: string;

begin

write(‘What is your name?’n’);

name := readstr;

write(‘You mean PLOP PANTS!’)

end

?

Such is the sophisticated humour of boys who were, in Scotland, old enough to marry.

BASIC is just fine, in the right hands. Like everything else. I started with QBasic in DOS. To this day I love the freedom and almost total control of hardware that this combination had. The same is true for most other BASICs of an earlier era.

You can take your BASIC knowledge and create cross-platform (or even server side) Java bytecode apps today with a little known freeware tool dubbed JABACO. http://www.jabaco.org. A part of the runtime is open source, the IDE is not, sadly. And sadly, too, the German author doesn’t seem to be interested in replying to emails… :)

I also started with a C64 (well, Tandy CoCo before that, but the CoCo was very limited, hardware-wise, slow graphics, no hardware sprites and limited sound). When moving to the Amiga there was ARexx (REXX for the Amiga). Rexx was a no brainer to learn for someone familiar with Basic.

Then on the PC side I used Computer Associates’ CA-Realizer, its Visual-Basic killer which let you generate apps both for Windows 3.x (16 bit windows), or 32-bit OS/2 PM (IBM OS/2 2.x – Warp).

By the time CA got Realizer to the 3.0 version, and after they started signing up beta testers for CA-R 3.0, some upper management deals with the Evil Empire of Redmondia (something about integrating CA-Unicenter features into the upcoming Windows NT 4.0) turned CA-Realizer 3.0 into a Win32-only product, killing its main appeal (creating apps for multiple platforms).

CA-Realizer was also great because it included a graphical IDE and allowed you to embed objects like for instance a spreadsheet. It was built in; you could load an array and display it as a spreadsheet, let the user fiddle with it, and also IMPORT AND EXPORT DATA to MS Excel files….

After CA let CA-R slide into irrelevance and neglect, came Oracle with its own Visual Basic killer wannabe, Oracle PowerObjects. As far as I know there were two versions released… the spec sheet looked amazing, but I couldn’t afford it at the time.

The last Visual Basic killer I remember was IBM VisualAge for Basic, available for AIX Unix, IBM OS/2, and Windows. It was great but its runtime was very bloated and slow for the time, generating some huge DLLs that you were supposed to include with your app for it to run. If I remember correctly someone once told me that the entire thing was built upon smalltalk….

FC

There is also IchigoJam, a $15 BASIC PC.

http://ichigojam.net/index-en.html

Sadly the two English ordering links I clicked show SOLD OUT both in kit and assembled form. Also shipping from Japan isn’t particularly cheap. :-{

I didn’t actually figure out whether those Usborne books were just terribly proofread/edited or whether Sinclair BASIC was super weird. I know some magazine listings off other systems I got to work with a few changes, but going through those Usborne books trying to work all the examples was a hell of a slog and mine ended up smothered in annotations.

Also some of them were super disappointing, they had 3 or 4 really, really basic books that were 90% the same and ended up with just a stupid hello world thing as the climax, so you sent off to the bookclub, waited, and got the same shit you already had. The best one I had was a 4 books in one, that did some useful BASIC programming and went into graphics etc also… but with a Spectrum I did more banging my head against a brick wall than doing much because of machine differences.

Ah, Usborne BASIC programming! A listing that’d run on some machine or other, with little symbols telling you where to make changes. A star for Spectrum, a semicircle for Apple, non-Euclidean icosahedron for the Commodore, etc.

I like to think they tested each program before they printed the books. They had so many computers supported, they must surely have tried them to know the BASIC well enough.

I once typed in an entire Usborne book. It was a text adventure, that took an entire book. A few chunks of code per 3 or 4 pages, telling you what each bit does. Pretty good, and it told me how text adventures were made. Back then, it was worth knowing, and I did my own versions in COBOL and C later on, knowing the method it works on. Actually I converted it on the fly for my Z88, a Sinclair-designed portable, 8-line 90-something character LCD display and a Z80 CPU, 32K RAM + 128K in an expansion slot.

The smaller screen was what needed most accommodating for, but I managed it. My sister played the adventure all the way through, she still remembers the bit with the vacuum cleaner all these years later.

Best BASIC of all time was RMB (Rocky Mountain Basic) that ran on the HP 9000 series 200 & 300 work stations. I’m 65. Started programming in 1975 on a modified teletype terminal hooked to a Univac 1108 at the University of Utah (One of four original ARPA net nodes). Book was UC Berkeley BASIC guide. $10 bought ten hours – interface – time sitting in a hot small room with a row of these modified teletypes, 2 DEC LA-120 printing terminals and one Tektronix CRT high zoot graphics terminal (which was never open!). We programmed lunar lander, Star Trek and the original Cave adventure….

It taught me enough that when I moved to Silicon Valley I quickly learned RPL (?) that ran on HP9825. (Just abbreviated BASIC). In 82 the HP9836 came out and I learned RMB, PASCAL (a fascist language!) and 68000 assembler. A year or two later the department bought an HP 3000 and I learned FORTRAN, MPE and IMAGE / QUERY. Also learned HP 1000 code.

Of them all my favorite was still RMB. I still own an HP9826 & 9000 – 340. My first home computer was ATARI 400 followed by ATARI ST. (Which I upped to 1 MEG of RAM & 2 10 MEG Lapine hard drives through an Adaptec SCSI to IBM 205 adapter.)

Ah… you guys don’t want to read about some old fart reminiscing… Just if you get a chance to buy an old HP 9000 (9826, 9836, 9820, 9000- 310, 320, 330, 340) don’t hesitate to buy it. You can even interface them to modern disk drives using an old PC as an interface. BASIC in ROM mods are also on the web.

I learned and used RMB 5.1 for lab instrumentation at work in the late 1980’s on an HP9000 series 300 computer after having gotten proficient at the BASIC in my HP-71B hand-held computer with loads of LEX (language extension) contributions from the user groups, and I was very disappointed with RMB. RMB was very clumsy at things that the HP-71 was so nimble at. There were too many limitations. Later there was HTBASIC and I think I had a demo disc of it but I never got into it. I think it was supposed to be a competitor to RMB, for lab instrumentation and automated test equipment.

Lee Davison who died a couple of years ago at a fairly young age (40’s?) wrote EhBASIC for the 6502 which, although I never actually used it, looked far better than any other basic I had seen on 6502.

Agreed that RMB 5.1 was unimpressive. Earlier versions were MUCH more versatile. My fav was 3.0. 15 level prioritized interrupts. I/O interrupts. Compiled utility calls.

HTBasic was always a dog. Bad port over to PCs. None of the priority levels. Because they were inherent to 68000 but not x86.

Looking back, that whole competition with BASICs was crap. They would be far better off with a simple C compiler or something simpler, closer to assembler. With 68K it wouldn’t take that much of an effort, as even its assembly looks good.

Or perhaps Forth. I remember seeing some ZX-81 lookalike with Forth.

Agreed (although I can’t stand C). The existing hardware of the day, primitive as it was, could have been put to much better use with better software methods; but the experience to do that just didn’t exist yet. It was all too new. Everyone was green, even the really smart ones like Woz.

Even assembly language can be made to handle like a high-level language in some respects by making good use of a macro assembler, with, in most cases, zero penalty in performance or memory taken, partly by forming program structures like I show starting about 2/3 of the way down the page at http://wilsonminesco.com/multitask/ regarding simple methods of doing multitasking without a multitasking OS.

Forth was an excellent fit for the 8-bitters, yielding compact code and good performance, even better interactiveness, good structure, and it is fully extensible, unlike BASIC. An interesting thing about it is that it makes good programmers better, and bad ones worse.
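To give a feel for what "fully extensible" means, here is a toy stack machine in Python that accepts a tiny Forth-like subset. It is a sketch only: the `forth` function is hypothetical, it handles just four primitives plus `: NAME ... ;` definitions, and it skips everything a real Forth has (compile state, return stack, dictionary layout).

```python
def forth(source):
    """Evaluate a tiny Forth-like subset and return the final data stack."""
    stack, words = [], {}            # data stack and user-defined words
    primitives = {
        "+":    lambda: stack.append(stack.pop() + stack.pop()),
        "*":    lambda: stack.append(stack.pop() * stack.pop()),
        "DUP":  lambda: stack.append(stack[-1]),
        "SWAP": lambda: stack.extend([stack.pop(), stack.pop()]),
    }

    def execute(tokens):
        i = 0
        while i < len(tokens):
            tok = tokens[i].upper()
            i += 1
            if tok == ":":                       # : NAME body ;  defines a new word
                end = tokens.index(";", i)
                words[tokens[i].upper()] = tokens[i + 1:end]
                i = end + 1
            elif tok in words:                   # user words run like built-ins
                execute(words[tok])
            elif tok in primitives:
                primitives[tok]()
            else:                                # anything else must be a number
                stack.append(int(tok))

    execute(source.split())
    return stack

result = forth(": SQUARE DUP * ; 7 SQUARE 1 +")
```

Once `SQUARE` is defined it is used exactly like `DUP` or `+`, which is the part an 8-bit ROM BASIC generally could not offer.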

Take QL for example. They took all that effort for SuperBasic. Whole machine had to have 48K of ROM just for that. What was the end effect ?

How many really useful apps were done in SuperBasic ? How many people can say that they became great programmers because of it ?

Once you got the machine, you quickly realised that you need every CPU cycle and you can’t afford to waste this resource.

So SuperBasic was used most of its time just for loading and starting machine code. And a bazillion pointless exercises for drawing a stippled ellipse now and then.

Now imagine just barebone multitasking OS with:

1. Simple but capable editor

2. assembler

3. disassembler

4. specialised tools ( debug, run in controlled environment, error management etc)

Imagine an assembler with:

symbolic register names along with real ones.

Simple math operators that mirror simple assignment and math instruction sequences

“Safe” mode operation, where assembler merges register content checking infrastructure with each generated instruction that could do damage.

Special container for executing small segments of code and oneliners.

With such assembler you could do things like A=0; while A< 3515 { A = A +1 ; print "%d\n" , A }

System would translate text into code in a moment and run it without user noticing that this is not an interpreter.

The Jupiter Ace was pretty much a ZX80 clone, but with Forth in ROM. I think 4K. Possibly had more than the 1K RAM of the ZX80 too, maybe 4K? You’d need a bit more for Forth I’d expect.

It tanked. The experiment of using Forth instead of BASIC was applauded at the time, but the machine being an utter heap of shit, ZX80 technology, when the C64 and other machines were out, with sound and full colour, was never going to be good enough. Dunno whose crazy idea that was, or who was daft enough to fund them.

Forth could be the greatest language in the world, but on piece of crap hardware, people won’t buy it. I bet Forth on any other machine would’ve been better, even if you had to load it from tape. The 16K to 64K RAM of other machines would leave you with much more RAM even after loading in the Forth interpreter. And colour, sound, a keyboard that wasn’t a flat membrane, etc.

For new users who had no idea what a computer even was, BASIC was by far the best option. And interpreted too. Compiled C would have got nowhere. You can type 1 line of BASIC and get a result. 2 or 3 lines you can make a simple little program. And no weird punctuation or stack-based thinking to comprehend it. BASIC just did 1 thing at a time, whatever you told it to. You got back meaningful error messages that told you exactly where you’d gone wrong. C doesn’t have that, and never has.

C’s not for learning to code, BASIC isn’t for writing operating systems (although I’m sure there was one, somewhere, maybe IBM did something).
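The "one thing at a time, and it tells you which line went wrong" behaviour is easy to mock up. Below is a toy line-numbered interpreter in Python, a sketch under heavy assumptions: `run_basic` and `evaluate` are invented names, only `LET`, `PRINT`, `GOTO`, `IF ... THEN` and `END` are handled, keywords must be upper case, and Python's `eval` stands in for a real expression parser (so comparisons follow Python rules, and it should never be fed untrusted input).

```python
def evaluate(expr, variables):
    # Python's eval is a stand-in for a real expression parser (toy use only).
    return eval(expr.strip(), {"__builtins__": {}}, dict(variables))

def run_basic(source, max_steps=1000):
    """Run a tiny line-numbered BASIC subset and return the printed lines."""
    program = {}
    for text in source.strip().splitlines():
        number, _, statement = text.strip().partition(" ")
        program[int(number)] = statement.strip()
    order = sorted(program)                  # execution follows line numbers
    variables, output, pc = {}, [], 0
    for _ in range(max_steps):               # guard against endless GOTO loops
        if pc >= len(order):
            break
        line = order[pc]
        keyword, _, rest = program[line].partition(" ")
        pc += 1
        try:
            if keyword == "LET":
                name, _, expr = rest.partition("=")
                variables[name.strip()] = evaluate(expr, variables)
            elif keyword == "PRINT":
                output.append(str(evaluate(rest, variables)))
            elif keyword == "GOTO":
                pc = order.index(int(rest))
            elif keyword == "IF":
                condition, _, target = rest.partition(" THEN ")
                if evaluate(condition, variables):
                    pc = order.index(int(target))
            elif keyword == "END":
                break
            else:
                raise SyntaxError(f"unknown keyword {keyword!r}")
        except Exception as exc:             # report the offending line number
            raise RuntimeError(f"Error in line {line}: {exc}") from exc
    return output

listing = """
10 LET X = 1
20 PRINT X
30 LET X = X + 1
40 IF X < 4 THEN 20
50 PRINT "DONE"
"""
result = run_basic(listing)
```

Calling `run_basic('10 PRINT "HELLO"\n20 GOTO 10', max_steps=6)` stops the classic endless loop after three greetings, and a `GOTO` to a missing line raises an error naming the line that contained it.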

My computer likes me when i speak BASIC.

I used to love borrowing those BASIC books from the library when I was a kid!

I learned BASIC on a Digital 10E in the Fall of 1973. In the Spring I took the Assembler class and programmed the same machine with the toggle switches on the back. BASIC was a great way to learn to program with no wasted space.

Great article. I’m someone in their mid forties who learnt to program on a Sinclair ZX81. However, today – I think you don’t need to go back to BASIC, when you can easily get started on a Pi / Linux / {python / javascript} or develop simple C code on a £2 microcontroller

Just to add that if you want a home computer, you can also use JavaScript: http://www.espruino.com/Espruino+Home+Computer

Just one Espruino board and 4 keypads, and you can make something with VGA output. All the code for keyboard and console output is JS too, so it’s trivial to understand and modify.

“and the generation who were there on the living room carpet with their Commodore 64s (or whatever) would probably not care to admit that this is the sum total of their remembered BASIC knowledge.

10 PRINT “Hello World”

20 GOTO 10”

What kind of kid were you? You should be remembering this:

10 LET X = 1

20 SOUND X, 1

30 X = X + 1

40 GOTO 20

Or any of the multitude of close variations on this all of which were great for annoying the crap out of all the grownups in the house.

BASIC? Keyboards? Meh… too convenient. If you really want to learn how computers work try this:

http://s2js.com/altair/


Or you can learn on the real hardware :) Check this out: http://cb2.qrp.gr