The US National Highway Traffic Safety Administration (NHTSA) report on the May 2016 fatal accident in Florida involving a Tesla Model S in Autopilot mode just came out (PDF). The verdict? “the Automatic Emergency Braking (AEB) system did not provide any warning or automated braking for the collision event, and the driver took no braking, steering, or other actions to avoid the collision.” The accident was a result of the driver’s misuse of the technology.

This places no blame on Tesla because the system was simply not designed to handle obstacles travelling at 90 degrees to the car. Because the truck that the Tesla plowed into was sideways to the car, “the target image (side of a tractor trailer) … would not be a “true” target in the EyeQ3 vision system dataset.” Other situations that are outside of the scope of the current state of technology include cut-ins, cut-outs, and crossing path collisions. In short, the Tesla helps prevent rear-end collisions with the car in front of it, but has limited side vision. The driver should have known this.

The NHTSA report concludes that “Advanced Driver Assistance Systems … require the continual and full attention of the driver to monitor the traffic environment and be prepared to take action to avoid crashes.” The report also mentions the recent (post-Florida) additions to Tesla’s Autopilot that help make sure that the driver is in the loop.

The takeaway is that humans are still responsible for their own safety, and that “Autopilot” is more like anti-lock brakes than it is like Skynet. Our favorite footnote, in carefully couched legalese: “NHTSA recognizes that other jurisdictions have raised concerns about Tesla’s use of the name “Autopilot”. This issue is outside the scope of this investigation.” (The banner image is from this German YouTube video where a Tesla rep in the back seat tells the reporter that he can take his hands off the wheel. There may be mixed signals here.)

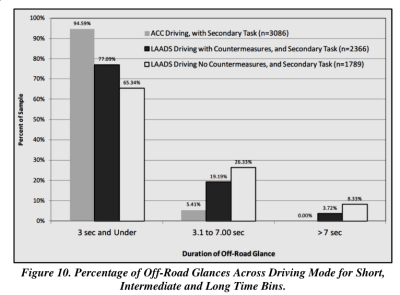

There are other details that make the report worth reading if, like us, you would like to see some more data about how self-driving cars actually perform on the road. On one hand, Tesla’s Autosteer function seems to have reduced the rate at which their cars got into crashes. On the other, increasing use of the driving assistance functions comes with an increase in driver inattention for durations of three seconds or longer.

People simply think that the Autopilot should do more than it actually does. Per the report, this problem of “driver misuse in the context of semi-autonomous vehicles is an emerging issue.” Whether technology will improve fast enough to protect us from ourselves is an open question.

[via Popular Science].

“People simply think that the Autopilot should do more than it actually does. Per the report, this problem of “driver misuse in the context of semi-autonomous vehicles is an emerging issue.” Whether technology will improve fast enough to protect us from ourselves is an open question.”

So then why are we rushing to make cars without steering wheels? It’s interesting that Tesla was not at fault even though their steering assist was happily operating at 90 MPH – well above the speed limit. I don’t disagree that the driver was responsible, but shouldn’t the system have at least popped up a dialog box saying “I’m sorry Dave, I can’t do that.” Tesla says it can’t operate above a few MPH over the speed limit and yet…

That isn’t a crack at autonomous driving in general. It was directed at Tesla’s Autopilot specifically. It’s not that it, technically, could not be made to handle such obstacles, it’s that it wasn’t. In the context that you would use the Autopilot in (highways only) nothing comes at you at right angles or sits there at a dead stop. Usually. This is a problem unique to semi-autonomous vehicles, where they are not designed to handle all types of conditions. In contrast, attempts such as Google’s car DO try to be fully autonomous, handling all realistic driving scenarios.

The downside, of course, is that such a vehicle is going to be much more difficult to design. A highway, where there are no lights, no odd signage, and everyone’s going the same direction at approximately the same speed ONLY in cars/motorcycles is a much easier environment to handle than a busy road lined with parked cars, pedestrians, cyclists, signs, and who knows what else.

Though I do agree that not giving some sort of audiovisual alert was a poor design choice. It’s one thing for the instrumentation to not be able to see the obstacle for some reason, it’s another to have instrumentation that can see such things, but not make at least a cursory attempt to determine whether it’s a threat. I don’t see any good excuse for that.

The solution is for Tesla to stop pretending they have an “autopilot”, and for legislators to come up with rules that say you shall not engage an autosteer feature except in designated areas where there’s no crossing traffic.

The real problem is that AI in general is smoke and mirrors, and the whole community of researchers and industry have overhyped their stuff for decades because they know people can’t tell the difference as long as it appears to function correctly.

As a result, people anthropomorphize the machine and believe the science is already at the level where you could equate a robot to something like a dog, when in reality the level of complexity and the ability to handle abstract information of the AI models is more akin to a nematode worm, and even that is giving it too much credit – it takes a supercomputer to actually simulate the brain functions of a nematode worm and they don’t have a supercomputer in a Tesla.

> The solution is for Tesla to stop pretending they have an “autopilot”,

“pilot” literally means the person who steers. You don’t have to take my word for it either: https://www.merriam-webster.com/dictionary/pilot and https://en.wiktionary.org/wiki/pilot

autopilot = autosteer

I thought autopilot did make you wiggle the wheel every now and then to keep you in the loop. Pretty sure I’ve seen that on several show and tell youtube videos.

Let’s be clear. Tesla was not seen as at fault specifically by the US National Highway Traffic Safety Administration for this specific accident. Others may or may not agree with that assessment.

Oh there are going to be growing pains with this technology, and lots of bellowing from every quarter, but it’s going to happen sooner rather than later.

This type of thinking is going to keep a lot of future drivers from ever experiencing the sheer joy and freedom that comes from driving a car. It’s already making a generation of drivers who cannot operate a manual transmission. Sad times.

On the other hand this can help people who are handicapped and can’t legally drive normal cars. I have poor eyesight. I’d love to have a car with a system that would enhance my vision, inform me about road signs and traffic lights I can’t see on my own and assist me with avoiding collisions. It should be simpler than a fully autonomous car, yet I didn’t see (ekhm) anyone working on something like that…

Agreed, there are many benefits to having both driverless and manually driven vehicles. In highly predictable environments (urban) it makes a lot of sense to have self-driving for those that can’t drive, and it has the potential to alleviate some congestion and reduce accident rates.

As far as DV82XL’s concern, people still ride horses for enjoyment. I think the same will hold true for automobiles. I don’t particularly enjoy driving (I enjoy it about as much as I enjoy using a can opener, it’s a tool that makes my life easier). But I know people who restore cars and love to get them out on the road and I think there will always be a place for that.

Sure people still ride horses for enjoyment – on trails dedicated for such mostly, and yes I can see tracks for driving cars. But I strongly doubt that there will be much in the way of manually driven cars on trunk routes. At best, (and in my view, most likely) dual control will be the norm with manual operation on the ‘last mile’ and automatic on the highways. Primarily I suspect because the computational load navigating the trip beginnings and ends would be far higher than would be cost effective, particularly if vehicles need to draw on network services, again something I suspect is highly likely.

The only thing predictable in the large urban setting I drive in daily is that traffic is unpredictable and I will have to fight tooth and nails to get to where I have to go.

Same here – I have a problem with my leg and I can’t ride an unmodified car. So far I have to use public transport, which is good enough where I live.

People choose automatic transmission (why is beyond me, but they did).

People will choose autonomous cars.

Folks like yourself will join the relics who are disappointed that kids today don’t know how to use a slide rule.

That being said, I plan to drive my stick shift until I can get a level 5 autonomous class 3 RV… it seems like the best use of the technology would be for a car that has a bed in the back.

Last time I checked, the government has never forced people to stop using slide rules. They WILL force you to ride in their autonomous death machines.

With slide rules you had to learn about logarithms, with a practical example at hand, not just to use your fingers and pre-programmed macros. And with kids’ IQ going lower per generation, well …

>People choose automatic transmission (why is beyond me, but they did).

>People will choose autonomous cars.

Because driving in heavy stop start traffic with a manual sucks. Same with autonomous cars: perfect for the daily grind or long drives, but it’d be nice to turn it off to go through the twisties on the weekend.

We manage it fine in the UK… really can’t understand Americans’ inability to use manual transmission… is it because you need one hand free to wave a gun about? :P

I see this attitude about manual transmissions a lot on the internet and it is pretty baffling to me. Sure, you may prefer manual transmissions. But are you really unable to understand why most people prefer automatics? They shift for you. If driving is just something you have to do, rather than a hobby, then having a car that smoothly and automatically shifts for you is pretty awesome. Most people don’t care about being “connected” to their car any more than they want to be connected to their toaster or coffeemaker (both of which can be done manually).

I get worked up when I ask for a model with Manual, only to be told I can’t have it. Model X doesn’t have a Manual shift version.

I bought my *first* automatic car after driving manual for >25 years. Almost every year I have at least one really long journey, and I often do 3hr+ drives to see friends and occasionally to see clients. I was completely unsure about if I’d like autos and even RWD (I’ve driven a couple, but haven’t liked them – but that was before traction control was common) basically to see if I’d be able to make the transition to an electric car at some point.

After learning NOT to knock it out of gear when stopping and just holding the car on the brakes at stop junctions, I found that the cognitive load was very much reduced on long journeys, and when I discovered how to work the cruise control everything was much easier on long journeys.

I *do* like manual gearboxes, but really there’s not much of a reason for them apart from ego.

I’m certainly looking forward to trying out the new tech when it gets affordable.

I was sad when they took the crank off the front in favor of electric starter motors. It’s a shame.

For sure there are a lot of gearheads that see this as emasculation and are not happy about it, but that isn’t going to stop it happening.

“It’s already making a generation of drivers who cannot operate a manual transmission”

You’re a good decade or two late for that one. Many younger people I’ve talked to think “manual transmission” means you have “click shifting”, and are baffled by the existence of a clutch pedal.

Only a rare few know of the existence of manual transmissions, and a rarer subset that can drive them.

The time has already passed where people at least knew what they were, even if only thinking it was a thing for trucks, race cars, and gear heads.

But you can search on “carjacking foiled by manual transmission” for the entertaining side of this coin.

My kids were 8-9 years old when they started drifting around on the frozen lake with my stick-shift car; both went on to motorsports and racing cars when they were 14 years old.

If you start them on motorsports early, they will never have any money for drugs.

In my experience motorheads use drugs as much as any other group.

While it’s a funny thing to say when you complain to other nerds about the cost of your hobby, the truth is drugs become more important than everything else if you are addicted. :-/

Outside of motorcycle riders it’s become a rarity just like cursive writing is vanishing among the young. In another decade or two, people who work a manual transmission or do cursive writing will be seen as oddities.

And is that really a problem? Cursive writing is pointless.

“Cursive writing is pointless.” I wonder, since those that can’t apparently never pass up a chance to assert this as if it were a fact. Do you also go out of your way to deprecate those that can write Japanese, or those that are fluent in another language for no other reason other than you cannot?

Not sure why it’s sad there are generations who never learned how to use manual transmissions. IMO that’s sweating the small shit, not worth it. My first daily driver was a ’38 GMC pickup with a non-synchromesh manual transmission; it never occurred to me to complain that there are generations who never learned to drive a non-synchromesh transmission. Mostly because I have to purchase vehicles used, most of those I have owned in the last 40 years have been equipped with automatic transmissions, even the pickups. Even contractors of all sorts and farmers purchase pickups with automatic transmissions, including independents who know how to operate manual transmissions. Now that I have acquired left-side hemiparesis, it’s highly unlikely I’ll own a vehicle with a manual transmission again.

I honestly don’t care, I hope this technology kills many people and the companies then suffer the wrath of the victims, then it’s never touched again kind of like Zeppelins after the Hindenburg. Better get started on the memes for the tragedies that will ensue. I’m spiteful because I want a Zeppelin in my backyard.

why would you want someone to die over this?

sick mode of thought to me to be honest.

also zeppelins didnt die, they are still being used, in fact the german company that produced the hindenburg are still making and selling airships in their “new technology” line, mostly used for cargo transport or handling (skycranes).

what i find most odd about autonomous vehicles are how many have a gut reaction against it, are we really that petty that we have to explicitly control or do something to feel valuable?

a similar gut reaction happens when talking about autonomous production.

Shh don’t ruin my plans for opening a Pitch fork and Torch factory.

Unfortunately this is the real reason that there will be stiff resistance to self-driving cars – a lot of people WANT to drive and will feel diminished if they cannot. All this will do, of course, will be to raise the bar for full adoption of this technology, not stop it.

>This type of thinking is going to keep a lot of future drivers from ever experiencing the sheer joy and freedom that comes from driving a car.

Frankly, I hate driving. That’s over 2 hours of every day in traffic I could spend reading or programming.

https://i.ytimg.com/vi/nvE_COK5ttw/maxresdefault.jpg

Ah yes, such joy and freedom. Nothing says joy and freedom like sitting in an uncomfortable seat, staring blankly ahead while waiting in line.

>It’s already making a generation of drivers who cannot operate a manual transmission.

I can operate a manual transmission and I have to say, it’s awful. It makes the burdensome chore of driving even more annoying.

>Sad times.

Translation: Some people enjoy different things than me so I am sad.

“… sheer joy and freedom…” This is not a strictly true statement. You neglect to factor in purchase costs, insurance costs, running costs, parking costs, time spent waiting in traffic, time spent waiting for a vehicle park, stress due to waiting in traffic/waiting for a park. Financially, I am far better off without a personal vehicle. Emotionally: there is no hanging about waiting for a space to open up, traffic to move, idiots to be mowed down.

It may be suggested that a vehicle represented freedom…20-30 years ago when traffic numbers were lower, but with populations increasing and morons wanting to drive while knowing nothing about either vehicular or social awareness, people nowadays are far less educated on what driving in society is about. These kinds of issues are manifest in places where there is a large, rural section of the population. Kids grow up driving quad-bikes, or tractors, etc which teaches them how to handle the controls of a vehicle but then they get their driving licences and don’t give a flying fart about anything which does not appear in their front-facing vision. I spent 8 years in New Zealand and barely saw anyone using any of their mirrors. Their attitude was one of: “if it ain’t in front, I don’t give a shit.”

In the UK, enforced legislation regarding vehicle insurance has been pushed through according to some hidden agenda between government and insurance companies. Drivers are penalised heavily for owning a vehicle, mostly due to laziness by insurance assessors to do their job in weeding out shady claims by self-injuring parties who routinely run one another over to make spurious claims. Imagine the increase in false claims when one can start attributing accidents to the cyber-driver?

I miss riding a motorbike but even that doesn’t weigh on my mind. There are certainly countries where I would never get on a bike as I wouldn’t trust any driver as far as I could spit. Am currently living in an East-European country and drivers here are, without doubt, the most selfish, worthless folk behind the wheel I have ever encountered. Regular drivers, buses, police (without lights or sirens) gleefully speeding through red lights in their haste to get from one traffic jam to another.

Given the slack attitude toward sensible driving by actual drivers, I would be totally reticent to live in a world of automated driving when the only fallback is a twat with a mobile phone, ponced-up hair and a cheaply-attained driving licence.

Ultimately, people are lazy and this will extend to driverless cars. It will give more people the excuse to pay less attention to the road and more attention to f**ckbooking while on the move. I see no benefits at all to what is, essentially, glorified cruise control.

I am sorry but this is simply a CYA meant to make sure Tesla doesn’t get screwed legally.

That system could be well a “driving assistance” system (i.e. not fully autonomous) and not handling every possible situation – that’s ok. However, what is NOT excusable, is the design ignoring basic human factors research. Tesla has designed their Autopilot in such way that it gives a false sense of confidence – which leads to complacency and inattention.

In the case of that fatal crash above even if the computer was flashing and beeping at the driver, the driver had 7 seconds total to react. Reaction time of a concentrated and attentive driver is about 2 seconds. Reaction time of a driver that is not attentive is much longer (regaining situational awareness, moving hands on the wheel and feet on the pedals, and finally starting the emergency manoeuvre).

These man-machine interface problems with automation are long known, for example in aviation or spaceflight. It is therefore shocking that the regulator does not bring any attention to this glaring design problem of the Autopilot system. “Solving” the issue by a liability disclaimer somewhere in the manual that nobody reads is not the way to design safe systems.

I agree with you. There’s so much political pressure to force people to accept self-driving cars that any little misstep might derail them. Tesla does call this an “assist” and not self-driving, but flying into the side of a semi is a bit more than a misstep. Even if it didn’t see the truck, it should have known better than to self-steer going that fast.

I would say that is a technicality, even though it unfortunately ended up with a fatality.

That system either should be designed as fail safe so that the above couldn’t happen (probably not possible and waaay out of scope/expensive) or it needs to be designed in such way that it won’t allow the driver to become inattentive – e.g. by watching a DVD or sleeping.

It is not like Autopilot is the only such cruise control/lane assist system (that is what it essentially is) on the market – but Tesla’s is the only one that has been promoted specifically with “hands-off” operation, giving the impression it is fully autonomous. Competitors require that you keep your hands on the wheel – or the driving assist will disable itself. *THAT* is the main issue. Autopilot is explicitly designed to invite unsafe behaviour – and that cannot be solved by a CYA passage saying that the driver is responsible and that they are supposed to be attentive even with the automation engaged.

And that “…require that you keep your hands on the wheel or … autopilot disable itself..” is precisely why Tesla *should* have been found liable.

They designed a system that allowed the driver to (easily) kill himself. If I designed a balcony on a 20 storey building with no railing, and only a warning on the glass patio door to take care, I would be in jail in a minute!

It’s not easy to find a semi-trailer parked across a highway to test semi-autonomous software. And no, failed designs of balconies won’t (it turns out) send the designer to jail.

In this case, a set of balconies with concrete over wood beams, with a water-barrier that guided water into the sealed underside, which prevented seeing the beams were rotting. The balconies ended up rotting so much they were sagging several inches before a few students went out, causing them to collapse and kill them. No fault was found with the design, the execution, or the lack of ability to verify the condition of wood in a sealed compartment exposed to water.

Even the Hyatt Regency walkway collapse did not result in the engineers going to jail, though they did lose their licenses due to obvious negligence in managing the project as opposed to failure with this detail.

“It’s not easy to find a semi-trailer parked across a highway to test semi-autonomous software”

Yes it is. You park one and see what the car does.

> Tesla has designed their Autopilot in such way that it gives a false sense of confidence – which leads to complacency and inattention.

As I recall, research has demonstrated the airbags and seatbelts result in less cautious driving.

Let’s be honest here. Marketing this technology under the name “Autopilot” has a lot to do with the consumer misunderstanding the limits of the technology.

Commercial airliners have autopilot and the humans are still required to stay aware and respond to emergencies.

This is a terrible analogy. Commercial airplanes aren’t marketed to the pilots, and pilots are specifically trained on what an autopilot can and can’t do. Moreover, it’s their job to know how to fly the plane. The only ‘training’ you need with a Tesla is a big check.

I interpreted Mike’s comment to mean that even with the extensive training that airline pilots have flying the aircraft and understanding the limitations of the autopilot, they *still* have to be sitting in the seat paying attention. Pointing out that Tesla owners get no training and are operating a craft in potentially more hazardous environments than aircraft operate in (lots more to collide with), that makes the analogy even more relevant.

Also don’t forget that when a plane autopilot disables itself because it detects something it cannot handle, there is a loud noise, two pairs of eyes that can look for the problem and very rarely a situation where you have only a few seconds to avert a disaster. Unlike in a car. Oh and the plane navigation/steering computers are usually triple redundant too plus there are backup “steam gauge” instruments …

The driver in this case was an early adopter and long time user. He did not misunderstand the general capability of the technology. What he was doing was speeding. This sort of accident happens with human drivers from time to time, often including ‘following’ a stopped semi-trailer that is parked on the side of the road and out of the traffic lanes.

The term “Autopilot” is not incorrectly used. My dad was a pilot and I had many hours assisting in flight and knowing flight systems in his plane. When you engage autopilot in an airplane, it is a very simple function that allows you to take your hands off the wheel for long durations of flight. It can handle waypoints and course correction itself. BUT: it is not an intelligent system, and will fly you right into any obstacle along your course. There are no misunderstandings here, the airplane’s autopilot is NOT “autonomous operation”, quite similar to an autopilot system on a yacht. Maybe the solution could be that purchasers of the Teslas equipped with autopilot should be required to complete a small training course on its abilities.

Well, to avoid flying into obstacles there are TCAS and GCAS.

But, of course, it is far easier with aircraft than cars…

The way I look at auto pilot systems is they rely on limited artificial intelligence. You train it with training data and as long as the real life data does not veer too far away from the training data, all will be well. The problem is anomalous events, that can be caused by sensor glitches, low lighting levels, vibration, soft faults, RF intermodulation of radar systems, cosmic radiation, pot holes, ….. One anomalous event may even be ok, but eventually multiple events will occur that are so far outside of the training data that a failure is inevitable, given a high enough number of these devices in operation over a long enough duration.
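The “inevitable given enough devices” argument can be put in numbers. The sketch below is only an illustration under a strong assumption: every trip has the same small, independent chance `p_per_trip` of hitting a failure-inducing anomaly. The function name and the example figures are hypothetical and do not come from the NHTSA report or from Tesla.

```python
def prob_at_least_one_failure(p_per_trip: float, trips: int) -> float:
    """Chance of at least one failure across `trips` independent trips.

    Assumes (hypothetically) that each trip fails independently with the
    same probability p_per_trip, so the chance that all trips succeed is
    (1 - p_per_trip) ** trips.
    """
    return 1.0 - (1.0 - p_per_trip) ** trips


# Illustrative only: a one-in-a-million per-trip failure chance,
# accumulated over more and more fleet-wide trips.
for trips in (1_000, 1_000_000, 10_000_000):
    print(trips, prob_at_least_one_failure(1e-6, trips))
```

Under these assumptions the probability creeps toward 1 as the trip count grows, which is the commenter’s point. The same formula applies to any driver, human or machine, with a nonzero per-trip failure chance.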

Same can be said of human drivers. What sixteen year old is going to be able to handle all the things you mentioned?

Great question. Lots of them can’t, and the insurance premiums for 16 year-olds reflect that. If owners of autonomous cars had to pay those same premiums, I’ll bet you that the technology won’t catch on very quickly.

Show me a self-driving car with a 16-year-old’s learning capacity, and I’ll consider using it the same way I would a 16-year old driver – by letting them drive around with me in the seat for a year or more.

The system as it stands currently is closer to letting a 4 year old drive you around.

A nearsighted 4 year old.

That’s too short to see over the steering wheel it isn’t holding onto…

That’s giving it WAY too much credit.

These AI systems aren’t comparable to mammals. They’re barely comparable to insects.

The Tesla autopilot is like letting a bacterium drive your car. Seriously.

I thought I heard Tesla was collecting driver data to build a better driver assist system. That is, they were basically copying the data generated from all the drivers of their cars to see how they drove and were incorporating that into their system. Am I wrong?

You’re right. But it doesn’t mean it’s safe.

So the plan is to make Autopilot “less dangerous.” Got it.

with those conditions most 50 year old humans with 30 years of driver experience would still fail at one point.

“given a high enough number of….” that sentence sort of guarantees it no matter how few or many failure modes you associate with the specific case, be it human or machine.

the machines dont need to be perfect just safer than the average human, they arent there yet but it wont take as much as many here seem to think, then again they are aiming for perfect and that is actually impossible, always have been, always will be.

“the machines dont need to be perfect just safer than the average human”

If the machine is equal to the average human, then for most it would be wiser to not use it.

That’s because the majority of accidents ascribed to the “average driver” are actually committed by a minority of the drivers, many of whom are drunk or on drugs. It means if you sit in an autonomous car that is just as good as the average driver, in all likelihood it’s going to be significantly worse than you are.

This. The majority of drivers are significantly safer than the average, because of this.
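To make the average-versus-majority point concrete, here is a minimal sketch with made-up numbers: a hypothetical population of 100 drivers in which a small, high-risk minority accounts for most of the crashes. The crash rates are purely illustrative assumptions, not figures from the NHTSA report.

```python
from statistics import mean, median

# Hypothetical crash rates (crashes per million miles), illustrative only:
# 90 careful drivers and 10 high-risk drivers.
crash_rates = [1.0] * 90 + [11.0] * 10

average_rate = mean(crash_rates)    # 2.0, pulled up by the risky minority
median_rate = median(crash_rates)   # 1.0, the typical driver

# Fraction of drivers whose crash rate is below the population average.
safer_than_average = sum(rate < average_rate for rate in crash_rates) / len(crash_rates)

print(average_rate, median_rate, safer_than_average)  # 2.0 1.0 0.9
```

In this toy population, a machine that merely matches the average crash rate would be twice as crash-prone as 90% of the drivers it replaces, which is why a skewed distribution makes “as good as average” a weak target.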

This what? What does that even mean?

The “majority’ of drivers are lazy, cheap, selfish individuals. They will gleefully accept the jaundiced view that anything whose mass is smaller than the vehicle they are driving should give way to the one they are in. They will circle car parks, or streets for long periods looking for a parking space which is the closest to their ultimate destination. They are overcome with frustration and rage when another vehicle takes the space they were going to occupy: either parking or in the midst of commuting traffic.

In this country (am currently in East Europe) they block pavements (sidewalks), vehicle entrances/exits. In the UK, drivers will happily move from traffic into the lane which ceases to be buses only, and then move back out again 50-100m later when it becomes a bus lane again but, due to all the other traffic closing up, they effectively block bus lanes until they can get back into the traffic lane. They complain that they would take the bus but buses are too slow/take too long to arrive and they despise waiting for a bus…but fail to realise that it is they, the drivers, who are forcing bus routes to take longer. No politician in ANY country is brave enough to take a stand against the number of potential voters who drive.

can you prove that or are you just asserting your opinion?

because i will bet that most accidents dont involve drink or drugs, just pure stupid humanity.

there is plenty of it to go around, even if the drug induced accident idea was true it wouldnt prevent one from making a car better than the average non intoxicated driver.

In a chaotic world made by humans… that can’t even navigate it with 100% precision, the incalculable possible failure modes for any autonomous system will ensure accidents are a certitude.

Only linear systems like trains can mitigate the countless failure modes of such a complex system.

The issue here… is with people who are vulnerable to a phenomenon known as the Illusion of control.

https://en.wikipedia.org/wiki/Illusion_of_control#By_proxy

The facts are that charismatic successful narcissists insist it is safe, the liability of failure falls onto the users, and the public’s expectation of safety is low given the regular accident rates.

The insurance companies should be suing the car companies for promoting negligent driving habits.

Trains fail, too. No system is free of entropy.

However, in such cases it is usually operator error.

While automated trains do regularly squash the careless/suicidal as well — the likelihood of a collision is exponentially lower with fewer intersections/stops.

It operates on the same principle as an elevator system, which was also manually controlled in the first few years of operation.

Indeed, no system is 100% safe around the exceptionally stupid.

I have been on a train which turned some individual into pasta sauce. The train driver is subjected to a battery of drug tests, breath tests, counselling, blah blah blah, and the passengers are all put out by several hours while the police, etc. take statements, write stuff down and scrape the remains off the track. I defy anyone to stop a train if some arse throws themselves in front of it. The authorities should just say, “T’aint your fault. You can go about your business”.

“New for 2025, ‘Manual Mode’.

Why let the computer have all the fun driving?”

*Quick cuts: [Down shift] [Throttle to the floor] [Engine roar] [Driver pinned back in the seat] [Car accelerates out of view]*

Disclaimer at the end of commercial:

Using ‘Manual Mode’ may violate the terms of your car insurance policy.

[Do not attempt. Professional Automobile Pilot on Closed Course]

[Intended for off-road use only]

I love technology and the things we can accomplish with it. In the case of self-driving cars, absolutely, positively, NOT! I see what happens in HVAC when you provide some “smarts” in the system to take care of a few things. Home owners and technicians suddenly become stupid and can’t operate on basic principles anymore. They believe that the “smart” thing will do ALL of the work for them, even the thinking. Unfortunately, it’s human nature, and because of that, this technology should not be used.

A side note: Is the EyeQ3 system mentioned in the report, the same company that maintains Eyeq root app that spies on us through our cell phone?

EyeQ3 is a chipset for implementing ADAS built by Israeli company Mobileye. https://en.wikipedia.org/wiki/Mobileye

It ain’t an autopilot if it can’t go through a drive-in and order hamburgers while you are sitting at home to order more doll-houses via Alexa.

“The accident was a result of the driver’s misuse of the technology.”

Most human drivers don’t understand the capabilities of current vehicles. How, then, can we expect drivers to understand newly added capabilities? Drivers commonly go too fast for conditions, tailgate, fail to pay attention, don’t use seat belts, etc.

Marketing it as an “autopilot” system only adds to the confusion.

“The accident was a result of the driver’s misuse of the technology.”

Isn’t that the case for any car crash, given that cars are a technology not intended to be used for crashing.

And this argument is practically the same as “guns don’t kill people kill”

CLICKBAIT DON’T KILL CLICKBAIT, CLICKBAIT DOES

Does the article fail to deliver? Have you read a more in-depth analysis of the report online somewhere?

You’re welcome.

My favorite “automatic driving” car related situation was when Jeremy Clarkson got behind the wheel of a car with “collision avoidance” and started playing with it. He attempted to hit an obstacle at low speed straight on several times and the car stopped short each time. “This car can’t crash!” he exclaimed as he took a shallow turn into the same obstacle and crashed, severely damaging both the car’s radiator and (I suspect) its reputation.

These systems simply don’t work well enough. Period.

Top Gear is scripted. That was likely crashed on purpose with the actors, well, acting surprised. Tesla even called Top Gear out for falsely staging segments, when TG showed an episode with a Tesla Roadster “running out of battery” and another with “broken brakes.” Tesla showed what the computers had logged… which was that the battery had never been discharged below some 30-40% and there had been no problem alerts or maintenance done to the “broken” one. Top Gear is entertainment, it’s not a documentary.

I think it’s interesting that for the AEB to work, the camera and the radar both need to “agree” on what is in front. From the PDF, “The camera system uses Mobileye’s EyeQ3 processing chip which uses a large dataset of the rear images of vehicles to make its target classification decisions.” But then goes on to say, “Complex or unusual vehicle shapes may delay or prevent the system from classifying certain vehicles as targets/threats.”
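To make the “both sensors must agree” point concrete, here is a minimal sketch of that kind of AND-style decision. Everything in it is an assumption for illustration: the names `SensorFrame` and `aeb_should_brake` are made up, and the real Tesla/Mobileye logic is certainly more involved than a single boolean AND.

```python
from dataclasses import dataclass


@dataclass
class SensorFrame:
    # Hypothetical inputs, not the actual Tesla/Mobileye interface.
    camera_sees_target: bool  # camera classifier matched a known vehicle shape
    radar_sees_object: bool   # radar reports a return in the travel path


def aeb_should_brake(frame: SensorFrame) -> bool:
    """Brake only when camera AND radar agree (assumed fusion rule)."""
    return frame.camera_sees_target and frame.radar_sees_object


# Rear of a car: both sensors agree, so the sketch brakes.
rear_of_car = SensorFrame(camera_sees_target=True, radar_sees_object=True)

# Side of a trailer: radar may see something, but the camera has no
# matching "rear of vehicle" classification, so the sketch does nothing.
side_of_trailer = SensorFrame(camera_sees_target=False, radar_sees_object=True)

print(aeb_should_brake(rear_of_car))      # True
print(aeb_should_brake(side_of_trailer))  # False
```

With a rule like this, any shape the camera cannot classify is effectively invisible, no matter what the radar reports.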

So unless Tesla updates the computer with new data every few years, these systems will degrade in performance as time passes and new car profiles hit the road. In addition, any wonky back ends are pretty much invisible. An extreme case would be the crazy shit you see at Burning Man, but basically anything could cause problems if it deviates from the expected library too much.

It isn’t looking for an identical match to a car image database. It is looking for a match to the image vector that matches cars and trucks and such. It might not identify a Piper Pawnee sitting on a highway, but it will keep working until cars don’t look like cars at all. There are a lot of AI videos that show how such training works.

three_d_dave, I quoted the pdf when I made my comment. It literally states that it uses “dataset of the rear images of vehicles”

This is my point. The camera didn’t “see” the truck because a side profile of a truck was *not* in the database. Whether this recognition was done as an identical match or as a vector doesn’t matter.

The radar has to “agree” with the camera. That makes sense, but the developers failed to recognize that highway conditions are inconsistent. What does the Tesla A.I. do during white out conditions? Keep going because, while the radar “sees” a wall, the camera can’t process any images?

Clearly the operator is at fault for not understanding how the car actually functions, but why isn’t Tesla taking at least part of the blame? Education is the most important aspect of driving any vehicle. Not educating your consumers to the proper operation of, what is currently new, technology is irresponsible.

I guess the internet doesn’t need to circulate the R.V. Cruise Control gaffe anymore, they’ll just circulate this one.

So basically any pickup truck carrying a load tied in the back would be different than anything in the database. (The little Toyota pickups that run around Los Angeles picking up assorted metal scrap come to mind.)

That’s an interesting conclusion that I am sure will be long debated by many on both sides of the autonomous issue. However, like some have alluded to, legal responsibility avoidance is more at play than determining what actually failed.

I’ve said it before and I will say it again “self-driving cars can only be allowed on the road when human drivers are NOT allowed on the road.” Not sure if that would have prevented this though as I am not sure an autonomous truck would have waited for the Tesla to pass before initiating the same turn.

While I agree that people should not rely on these systems any more than they do an air-bag or seatbelts (ie: drive like you don’t have them, but hope they save your life if some thing/one f*cks up), not detecting 90 degree vehicles is a HUGE oversight given the number of accidents that occur at intersections. Not only is a red-light-runner harder to see and anticipate, but they are moving at nearly absolute 0 speed relative to your direction of travel.
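The “nearly 0 speed relative to your direction of travel” point can be checked with a bit of trigonometry. This is only a back-of-the-envelope sketch: the function name and the example speeds are made up, and it says nothing about how any real radar filters its returns.

```python
import math


def closing_speed_along_path(own_speed: float, other_speed: float,
                             crossing_angle_deg: float) -> float:
    """Rate at which the gap closes along your own direction of travel.

    crossing_angle_deg: 0 means the other vehicle travels the same way you do,
    90 means it crosses your path at a right angle. Speeds are in m/s.
    """
    # Only the component of the other vehicle's velocity along your path
    # reduces the closing speed.
    other_along_path = other_speed * math.cos(math.radians(crossing_angle_deg))
    return own_speed - other_along_path


# Following a slower car: the gap closes at the speed difference.
print(round(closing_speed_along_path(30.0, 25.0, 0.0), 3))   # 5.0

# A truck crossing at 90 degrees contributes nothing along your path,
# so it closes exactly like a stationary object would.
print(round(closing_speed_along_path(30.0, 15.0, 90.0), 3))  # 30.0
```

In other words, along your lane a crossing vehicle behaves like a parked obstacle, however fast it is actually going sideways.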

Agreed. It should have “reaction” logic for -any- object.

In other words, this vehicle’s “autopilot” will happily drive you straight into a wall, were the conditions present for it to happen. I still would put -some- blame on the software – it should detect any object in its path…. car, pedestrian, pedalestrian, wall, alien spaceship… whatever. Bottom line is these systems should not be placed in service until they can do so. People get the wrong idea about their part in “operating” an “autopilot” system when those systems have huge, glaring holes in their logic, sensors, and reactions – leading to events like this. Especially when it’s marketed as an “autopilot”.

Despite the baseless fear mongering, most likely we will see a structure where autonomous motor vehicle operation is allowed on public roadways, not one where only autonomous cars are allowed to use public roadways. One where the operator is responsible for the safe operation. Otherwise one would now get a pass if the cruise control drifts up past the posted speed limit, or is engaged when using the cruise control is inappropriate for current conditions.

I find this odd. I remember someone winning a case for putting their own RV in cruise control and going to the bathroom in their RV. Of course, the RV crashed. Cruise control was never meant to steer the car. I am not sure how Tesla can win this one when the RV company lost their case.

The story is anecdotal. The version I heard was the guy got up to make a sandwich.

No website seems to be able to refer to a specific state court or case number.

I’m genuinely disappointed with the comments here, you’d think Hackaday readers would be more enlightened instead of fear mongering about the future…. I bet you’re all really fun people to be around.

I suspect there would have probably been an accident if the guy was driving the car himself anyway, and the fact that he wasn’t paying attention was his own fault if it was indeed the sole fault of the car. Having test driven a Model S in autonomous mode and auto pilot mode, I can tell you that the salesman pretty much exclusively babbles on about how you absolutely CANNOT just Mong it because you think the car is driving itself, and that it’s more of an evolution of cruise control than anything else. More to the point, what about all the autopilot / autonomous cars that have avoided fatal accidents when the human in the equation would not have done? Nobody ever mentions them. The computer reacts to situations an order of magnitude faster than we can. Although that “2 second reaction time if you’re paying attention” from a few comments up is frankly horseshit, dunno what sedatives you’re on buddy but my reaction time is about .7 at worst case.

Please forgive the typos, it’s 4am and I’m on my phone…

Have you been here long? HaD commenters are awful.

Any comments left anywhere on the internet tend to be awful. It’s only those who care enough about something to post a reply, most people who would be cool with this probably look at the article, think “Huh, bump in the road” and move on instead of angrily posting comments desperately looking to reconfirm their terrors regarding the tech.

Never underestimate the calcified brain of the curmudgeon. Change is something they just can’t contemplate. Strangely, this even applies to technologists on the topic of technology.

Human drivers are perfectly capable of avoiding situations like this (in the U.K. at least), provided they’re in control. The issue here is that the system lured the driver into not being in control; what a sales guy said isn’t relevant.

A quick google shows 1.5 seconds is a standard time used in accident reconstructions. Your 0.7 time is only relevant in test conditions, or if you’re rally driving or something, not commuting.
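For a sense of scale, the difference between a 0.7 second and a 1.5 second reaction is easy to turn into distance. A rough sketch follows; the 100 km/h speed is just an assumed round number, not a figure from the report, and `reaction_distance_m` is a made-up helper.

```python
def reaction_distance_m(speed_kmh: float, reaction_time_s: float) -> float:
    """Distance covered before the driver even starts to react, in metres."""
    speed_ms = speed_kmh / 3.6  # convert km/h to m/s
    return speed_ms * reaction_time_s


# Assumed speed of 100 km/h, with the two reaction times argued about above.
for reaction_time in (0.7, 1.5):
    distance = reaction_distance_m(100.0, reaction_time)
    print(f"{reaction_time:.1f} s at 100 km/h -> {distance:.1f} m")
# 0.7 s at 100 km/h -> 19.4 m
# 1.5 s at 100 km/h -> 41.7 m
```

That is before any braking distance is added on top.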

What about the accidents Tesla avoided? There was an excellent article here a few weeks back largely disproving that.

What a steaming load of crap.

‘Look we made an autopilot. It can safely drive you, and all you have to do is be prepared to take control’. But .. of course driving is a multivariate problem and we’ve only prepared our ‘autopilot’ for a small subset of those, and dangers of course can only be coming from specific directions in a specified manner.

You can’t have it both ways. If you expect people to relinquish control of their vehicle you can not expect them to magically notice a subset of unmonitored dangers and regain control in time enough to be effective. This is a bad system design from the ground up.

Look, we have seatbelts/airbags that will often save your life, but may end up killing you.

Seems like your reasoning is to rid cars of these devices.

Now wait a second. Autopilot has been synonymous with aircraft for decades, yet we still have pilots. Autopilot doesn’t have to mean completely pulling people out of the loop. You are confusing autopilot with autonomous. We haven’t quite got to autonomous yet and no one has claimed that we have. No one has said to relinquish control to a magic box. At this point it is a driver aid. A very impressive driver aid. Like cruise control. Or an engine starter motor. Any car can be a giant heavy weapon if the driver doesn’t take time to learn its limitations. I applaud Charles Darwin’s response to people who don’t RTFM in a Tesla.

Most people don’t read the manual of their car anyways. I own several right now and only ever read one. Hell, even the car dealership doesn’t give a fuck. Last two cars I bought, the dealership gave me the wrong manual.

The problem is that most people’s (not our) idea of autopilot is what is seen in sows and movies like I, Robot, Demolition Man, Knight Rider, and Total Recall just to name a tiny tiny handful. Hell, I met people who think the Maximum Overdrive trucks really can drive themselves.

The general population does _not_ understand that “autopilot” is not synonymous with “autonomous”. As far as they’re concerned, an autopiloting car is the equivalent of having a human driver.

aggh… shows, not sows. Pigs have nothing to do with this.

-I- am not confusing autopilot with autonomous, the average consumer is, and it is being promoted in that fashion as well in all but legalese. The push to run it ‘while being ready to take over’ ignores reality: human response time, attention span, etc.

Autopilot on a plane has VERY little to do with what has been named autopilot by Tesla. Autopilot has been around since the early days of aviation, just as my grandfather had an ‘autopilot’ on his boat that used a mechanical compass to stay on course.

That’s because those problems are drastically simpler than the one being attempted in automobiles. A car is confronted with any number of obstacles and objects in close proximity as well as symbols and conditions which it may be unprepared for; of course you don’t know until you come upon them. Like a semi entering the road.. who knew that would ever happen? /s Beyond that there are stringent requirements on the licensing and pre-flight preparation of a pilot and the plane.

At this point it is a driver aid that is entering a new uncanny valley which lulls people into a relaxed state and kills them when they don’t become fully alert at a moment’s notice of some unspecified special cases.

Except cruise control, starters, and anti-lock brakes take control of 1 action when 1 specific, pre-identified input occurs. ..and they were only implemented after they were thoroughly tested and shown to be safe as used, not as intended.

When antilock brakes take over, the driver has already lost that amount of control over the vehicle. The brakes’ response regains that control for the driver and then returns it to him as quickly as the technology allows. The same is true with traction and anti-skid control. ..a starter doesn’t really play into it because it’s just an on/off switch.

“No one has said to relinquish control to a magic box.” Actually Tesla says specifically this.

From the oft touted f’ing manual. “We combine a forward camera and radar, long-range sonar sensors, and an electric assist braking system to automatically drive your Model S on the open road and in dense stop-and-go traffic.”

But you’re also supposed to be ready to take over at any time if it can’t handle the task.

Again from TFM..

“However, you must still keep your hands on the steering wheel so you are prepared to take control of the vehicle at any moment during changing road conditions.”

Which conditions would those be?

Cruise control takes 1 factor out of play, maintaining speed. This can be, and is dangerous at times.. however it does not remove the driver from all the other tasks of driving and maintaining the vehicle, which keeps a human in the loop, paying attention, for those times when they must intervene. People simply cannot go from a state of non-interaction, non-observation to one of full operational control and awareness in the required time. This is especially true when the exceptions are non-specific.

If you have to keep your eyes on the road, your hands upon the wheel, then why have an ‘autopilot’ anyway?

Think of it like “driver assist”. Makes more sense that way.

I believe this to be a classic case of neglecting “soft factors” in technology with disastrous consequences. The standpoint of placing the blame on the driver makes sense. However, the design of the system invites, instead of preventing, its inadequate use. In order for Tesla to sell more cars due to their scifi Autopilot, they got rid of the safeties that prevent accidents but make the system much more boring. If one designs a system in which people can take a nap and it continues to function, the maker is to be blamed. Humans are evolved to ration their energy expenditure. If the system allows inattentiveness, people will be inattentive. And that principle should have been honored by Tesla in their design.

To be totally fair to Tesla, their new designs do exactly that — they keep the human in the loop and if he/she fails the test a few times, it locks “Autopilot” out.

The problem, as many here have mentioned, is that early on Tesla wanted to have its cake and eat it too: market slyly as “self-driving” while the fine print states that it’s like ABS brakes.

Germany just passed a law saying that self-driving car accidents are the car company’s liability. BMW is exceedingly careful in warning the driver under which circumstances their driver assist function will fail. Comparing BMW Germany to Tesla’s US owner’s manual is an interesting study in how legal liability changes corporate actions.

(And I would claim, does so in favor of safety over innovation. Whether this is good or bad, discuss amongst yourselves over coffee.)

the german legislation is as much of a problem as the cases where the manufacturer refused to play any kind of ball, i dont know of any other case where it is by definition the manufacturer, no matter what goes wrong, that has the responsibility, in fact i doubt it is legally possible in germany.

yup.

The dead drivers probably watched the “new” TopGear’s (season XXIII / 4, I think) idiotic review of the Tesla Autopilot that implied that it is a true, fully autonomous, driving artificial intelligence.

“…not in the dataset…” What weasel words.

Try using that excuse when you get a ticket for running a red light.

“But officer, I was multi-tasking, and the task switch from talking on my cell phone to assessing the red light status, exceeded the time needed to hit the brakes. Clearly they need to re-design the red light.”

seems like they designed the system for an incorrect threat model.

A car stopping in front is an easy situation for a driver to handle. If proper following distance is kept, it’s not even a consideration in everyday driving.

“objects crossing the road at 90 degrees” makes up an absolute ton of collisions that are difficult for a human to handle, why wouldn’t they design around that first? That being said, why would anyone in their right minds give up control to a system that has ZERO chance of handling this situation?

“Guns don’t kill people, people kill people”

I think it’s a shame NHTSA is absolving Tesla of all blame. If an industrial machine kills an employee, OSHA automatically assumes the manufacturer of the equipment and the employer are guilty and liable for an employee’s actions.

I believe this self driving technology has been promoted as a way to mitigate people’s moments of inattention and prevent accidents because the technology “does everything for you” and takes the human out of the loop.

Tesla has good lawyers and spheres of influence.

As a software developer I see this statement all too often. “It’s the user’s fault.” NO, definitely not when someone’s life is on the line. As a developer you create so many test situations to figure out what could go wrong and create solutions to those situations. This includes dumb users. And creating user feedback systems to tell the user they are not using the system as it was intended is one of the things most developers are too lazy to implement. They would rather just say “oh, it’s the user’s fault.”

A lot of people have already compared this to the airline industry and they are correct in doing so. The airlines have some serious testing procedures to ensure that the software is most likely not at fault when a failure occurs. So for Tesla to say that “it’s the user’s fault” is so ridiculous, and I hear it so many times in my industry. It’s the developer’s fault or the manager’s fault. They didn’t take the time to assess the situation and build a robust solution to ensure the least amount of casualties.