Like any Moore’s Law-inspired race, the megapixel race in digital cameras in the late 1990s and into the 2000s was a harsh battleground for every manufacturer. With the arrival of the smartphone, it became a war on two fronts, with Samsung eventually cramming twenty megapixels into a handheld. Although no clear winner in consumer-grade cameras was ever declared (and Samsung ended up reducing their flagship phone’s cameras to sixteen megapixels for reasons we’ll discuss), this race seems to be over, having fizzled out into a void where even marketing and advertising groups rarely venture. What happened?

The Technology

In brief, Moore’s Law predicts that the number of transistors on a computer chip of a given size doubles about every two years. A digital camera’s sensor is remarkably similar: it is built from the same silicon, patterned into either charge-coupled devices (CCDs) or CMOS sensors (the same CMOS technology used in RAM and most other digital logic), to detect the photons that hit it. It’s not much of a leap to see how Moore’s Law would apply to the number of photodetectors on a digital camera’s image sensor. Like transistor density, however, the number of photodetectors that fit in a given area has a limit, beyond which undesirable effects start to appear.
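To make the analogy concrete, here is a minimal Python sketch that applies a Moore’s-Law-style doubling to the pixel count of a sensor of fixed size. The two-year doubling period, the 1 MP starting point, and the 27 mm² sensor area are illustrative assumptions, not historical data; the point is only that the area available to each pixel shrinks exactly as fast as the pixel count grows.

```python
def projected_megapixels(start_mp: float, years: float, doubling_years: float = 2.0) -> float:
    """Pixel count after `years`, if it doubles every `doubling_years` (an assumed rate)."""
    return start_mp * 2 ** (years / doubling_years)


def area_per_pixel_um2(sensor_area_mm2: float, megapixels: float) -> float:
    """Silicon area available to each pixel, ignoring wiring and gaps between pixels.

    1 mm^2 is 1e6 um^2 and 1 MP is 1e6 pixels, so the two factors cancel.
    """
    return sensor_area_mm2 / megapixels


if __name__ == "__main__":
    sensor_area = 27.0  # hypothetical phone-sized sensor, in mm^2
    for years in (0, 4, 8, 10):
        mp = projected_megapixels(1.0, years)
        per_pixel = area_per_pixel_um2(sensor_area, mp)
        print(f"year {years:2d}: {mp:5.1f} MP, {per_pixel:6.2f} um^2 per pixel")
```

After ten years of doubling in this toy model, each pixel has 1/32 of its original area to catch light with.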

Image sensors have come a long way since video camera tubes. Starting in the ’70s, the charge-coupled device (CCD) began replacing the camera tube as the dominant video capture technology. A CCD works by arranging capacitors into an array and biasing them with a small voltage. When a photon hits one of the capacitors, it frees an electron; the accumulated charge is later shifted out of the array, bucket-brigade style, to an amplifier where it is converted to a voltage and digitized. While there are still CCD sensors for some specialty applications, most image sensors are now of the CMOS variety. A CMOS sensor uses photodiodes, rather than capacitors, along with a few transistors for every pixel. Because each pixel has its own amplifier, it can be read out directly instead of having its charge passed across the whole array. CMOS sensors are also generally faster, scale more readily, need fewer supporting components, and use less power than a comparably sized CCD. Despite these advantages, however, modern sensors still face real limitations as more and more pixels are packed onto a single piece of silicon.
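The difference in readout can be sketched as a toy Python model. It assumes perfect charge transfer and integer charge packets; a real CCD moves charge by clocking voltage phases and loses a small fraction at every transfer. The function names are hypothetical.

```python
from typing import List

Grid = List[List[int]]


def ccd_readout(charges: Grid) -> List[int]:
    """Read a CCD by shifting charge packets toward a single output amplifier.

    Each cycle shifts every row down by one; the bottom row drops into a serial
    register, which is then emptied one packet at a time through the amplifier.
    """
    rows = [list(row) for row in charges]  # copy so the caller's grid is untouched
    width = len(rows[0]) if rows else 0
    output: List[int] = []
    for _ in range(len(charges)):
        serial_register = rows.pop()   # parallel (vertical) shift of the last row
        rows.insert(0, [0] * width)    # an empty row enters at the top
        while serial_register:         # serial (horizontal) shift, one packet at a time
            output.append(serial_register.pop())
    return output


def cmos_read_pixel(charges: Grid, row: int, col: int) -> int:
    """A CMOS pixel has its own amplifier, so any pixel can be addressed directly."""
    return charges[row][col]


if __name__ == "__main__":
    frame = [[1, 2], [3, 4]]
    print(ccd_readout(frame))            # every packet passes through one output
    print(cmos_read_pixel(frame, 0, 1))  # one pixel, no shifting needed
```

The CCD must move every packet past its neighbors before any of it can be measured, while the CMOS model reads a single pixel in one step, which is the root of the speed and power differences described above.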

While transistor density tends to be limited by quantum effects, image sensor density is limited by what is effectively a “noisy” picture. Noise can be introduced into an image by thermal fluctuations within the silicon: heat alone frees some electrons, and the sensor cannot tell this “dark current” apart from the charge produced by real photons, so a pixel can report light that was never there. Other defects depend on the technology; CCD sensors, for example, are known for “blooming,” where charge from an over-exposed pixel spills into its neighbors, and CMOS sensors have artifacts of their own. There are a few ways to mitigate these problems, though.

First, the sensor can simply collect more light, so that the signal rises well above the random thermal fluctuations. In a DSLR, this typically means lowering the camera’s ISO setting. ISO controls how much the sensor’s signal is amplified: a lower ISO applies less gain, so more light (a longer exposure or a wider aperture) is required for a properly exposed picture, but the larger signal stands further above the noise. A higher ISO amplifies the noise right along with the signal, which is why high-ISO shots look grainy. The tradeoff is that collecting more light takes time, and longer exposures invite motion blur.
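A short Python sketch of why gain alone does not clean up a picture, assuming an idealized pixel whose only noise sources are photon shot noise and a fixed read noise, both added before the amplifier. Real sensors also add noise after the amplifier, which is why ISO behavior varies between cameras; the electron counts below are illustrative.

```python
import math


def pixel_snr(photo_electrons: float, read_noise_e: float = 0.0, gain: float = 1.0) -> float:
    """Signal-to-noise ratio of one pixel after amplification.

    Shot noise is Poisson, so its standard deviation is sqrt(signal); read noise
    (in electrons) adds in quadrature. Because both sit before the amplifier in
    this simplified model, the gain scales signal and noise equally.
    """
    signal = gain * photo_electrons
    noise = gain * math.sqrt(photo_electrons + read_noise_e ** 2)
    if noise == 0:
        return 0.0  # no light and no noise: nothing to measure
    return signal / noise


if __name__ == "__main__":
    # Same light, eight times the gain (higher ISO): the SNR does not change.
    print(pixel_snr(100, read_noise_e=3, gain=1), pixel_snr(100, read_noise_e=3, gain=8))
    # Four times the light (lower ISO, longer exposure): the SNR roughly doubles.
    print(pixel_snr(100, read_noise_e=3), pixel_snr(400, read_noise_e=3))
```

Under these assumptions the only lever that improves the picture is the number of photons collected, which is exactly what a lower ISO with more light provides.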

Another source of noise is that the pixels themselves are so close together that they influence their neighbors, and each tiny pixel also collects fewer photons to begin with. The answer here seems obvious: simply increase the area of the sensor, make the pixels of the sensor bigger, or both. This is a good solution if you have unlimited area, but in something like a cell phone it isn’t practical. This gets to the core of the reason that most modern cell phones seem to be practically limited somewhere in the sixteen-to-twenty megapixel range. If the pixels are made too small to increase megapixel count, the noise will start to ruin the images. If the pixels are too big for the available area, there are too few of them and the picture will have a low resolution.
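The pixel-size tradeoff can be put into numbers with a small Python sketch. It models only photon shot noise (the statistical scatter in how many photons land on a pixel, whose standard deviation is the square root of the count), not thermal effects, and it assumes a hypothetical 6.0 × 4.5 mm sensor, a uniform exposure of 1,000 photons per square micrometer, and 100% quantum efficiency.

```python
import math


def pixel_pitch_um(sensor_w_mm: float, sensor_h_mm: float, megapixels: float) -> float:
    """Side length of a square pixel when the sensor is divided evenly (no gaps assumed)."""
    area_um2 = (sensor_w_mm * 1000.0) * (sensor_h_mm * 1000.0)
    return math.sqrt(area_um2 / (megapixels * 1e6))


def shot_noise_snr(photons_per_um2: float, pitch_um: float) -> float:
    """SNR of one pixel when shot noise is the only noise: N / sqrt(N) = sqrt(N)."""
    photons = photons_per_um2 * pitch_um ** 2
    return math.sqrt(photons)


if __name__ == "__main__":
    for mp in (12, 16, 20, 48):
        pitch = pixel_pitch_um(6.0, 4.5, mp)
        print(f"{mp:2d} MP: pitch {pitch:.2f} um, SNR {shot_noise_snr(1000.0, pitch):.1f}")
```

In this model, going from 12 MP to 48 MP on the same sensor shrinks the pitch from 1.5 µm to 0.75 µm and halves each pixel’s SNR, from about 47 to about 24.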

There are also ways of increasing the megapixel count of an image that don’t depend on the sensor. For example, a panoramic image, stitched together from several overlapping frames, will have a megapixel count much higher than that of the camera that took it, simply because each part of the panorama has the camera’s full megapixel count. It’s also possible to reduce noise in any single frame by using a lens that collects more light (one with a lower f-number), which lets the photographer use a lower ISO setting and reduce the camera’s sensitivity.
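Both tricks come down to simple arithmetic, sketched here in Python. The panorama function assumes a single row of identically sized frames in which each neighboring pair overlaps by a fixed fraction of a frame (stitching software needs that overlap to line the frames up); the light-gathering function uses the standard relationship that light per unit sensor area scales with 1/N² for f-number N. The frame counts and f-numbers in the example are hypothetical.

```python
def panorama_megapixels(frame_mp: float, frames: int, overlap: float) -> float:
    """Approximate size of a one-row panorama stitched from `frames` shots.

    The first frame contributes all of its pixels; each additional frame adds
    only the fraction (1 - overlap) that does not repeat its neighbor.
    """
    if frames < 1 or not 0.0 <= overlap < 1.0:
        raise ValueError("need at least one frame and 0 <= overlap < 1")
    return frame_mp * (1 + (frames - 1) * (1 - overlap))


def light_ratio(f_number_fast: float, f_number_slow: float) -> float:
    """How many times more light per unit area the lower f-number lens delivers."""
    return (f_number_slow / f_number_fast) ** 2


if __name__ == "__main__":
    print(panorama_megapixels(16.0, 5, 0.25))  # five overlapping 16 MP frames
    print(light_ratio(1.8, 3.6))  # f/1.8 vs f/3.6: about 4x the light
```

With 25% overlap, five 16 MP frames yield about 64 MP rather than 80 MP. Likewise, a lens at f/1.8 delivers roughly four times the light of one at f/3.6, so the ISO can be lowered by about the same factor for the same exposure.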

Gigapixels!

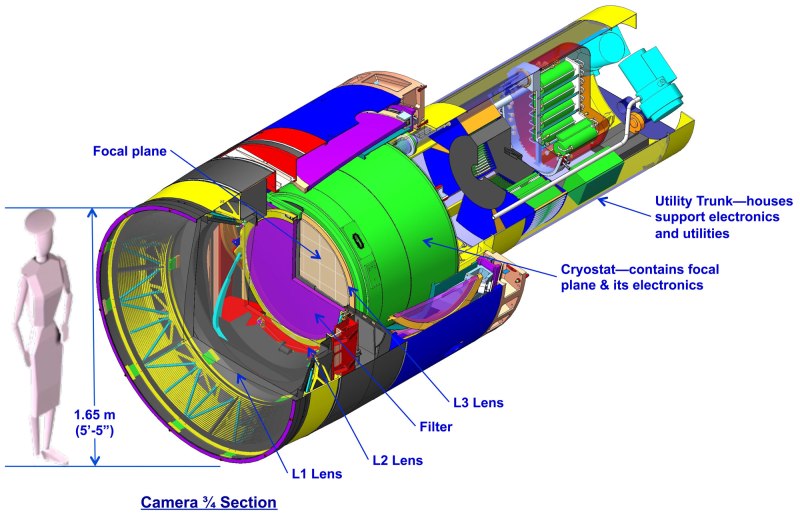

Of course, if you have unlimited area you can make image sensors of virtually any size. There are some extremely large, expensive cameras called gigapixel cameras that can take pictures of unimaginable detail. Their size and cost are limiting factors for consumer devices, though, so they are generally used for specialty purposes only. The largest such camera ever built for astronomy combines 189 individual CCD sensors into a single 3.2-gigapixel focal plane of roughly a third of a square meter, and the camera itself is about the size of a car. It is scheduled to go into use in 2019 at the Large Synoptic Survey Telescope in Chile, where it will capture images of the night sky gathered by the telescope’s 8.4 meter primary mirror. If this were part of the megapixel race in consumer goods, it would certainly be the winner.
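The pixel counts involved are easy to check with a few lines of Python. The VISTA dimensions come from the title-image credit at the end of this article; the tiled-camera figures (189 CCDs of 4096 × 4096 pixels each) are assumptions for illustration, as is the 2 bytes per pixel used to estimate the size of one uncompressed raw frame.

```python
def gigapixels(width_px: int, height_px: int) -> float:
    """Pixel count of a single image, in billions of pixels."""
    return width_px * height_px / 1e9


def mosaic_gigapixels(tiles: int, tile_w_px: int, tile_h_px: int) -> float:
    """Pixel count of a focal plane built from identical sensor tiles."""
    return tiles * gigapixels(tile_w_px, tile_h_px)


def raw_frame_gb(total_gigapixels: float, bytes_per_pixel: int = 2) -> float:
    """Uncompressed size of one frame in gigabytes (10^9 bytes)."""
    return total_gigapixels * bytes_per_pixel


if __name__ == "__main__":
    vista = gigapixels(108_500, 81_500)
    tiled = mosaic_gigapixels(189, 4096, 4096)  # assumed tile size
    print(f"VISTA mosaic: {vista:.2f} GP")
    print(f"tiled camera: {tiled:.2f} GP, about {raw_frame_gb(tiled):.1f} GB per raw frame")
```

The VISTA result, about 8.8 gigapixels, matches the “9-gigapixel” figure in the credit.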

With all of this being said, it becomes clear that there is much more to a digital camera than its megapixel count. With so many facets to a camera, such as physical sensor size, lenses, camera settings, post-processing capabilities, and filters, the megapixel number was essentially an easy way for marketers to advertise the claimed superiority of their products until the practical limits of image sensors were reached. Beyond a certain point, more megapixels don’t automatically translate into a better picture. The megapixel count can still matter, but there are many ways to make up for a lower one if you have to. For example, high dynamic range (HDR) imaging, which merges several exposures into one picture, is becoming the norm even in cell phones and can reduce the need for a flash in some scenes. Whatever you decide, though, if you want to start taking great pictures, don’t worry about specs; just go out and take some photographs!
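One common way to build an HDR image is to merge several bracketed exposures. Here is a minimal Python sketch of that idea, assuming linear 8-bit raw values and a simple triangular (“hat”) weighting that distrusts near-black and clipped samples; real phone pipelines also align the frames, handle motion, and tone-map the result, none of which is shown. The function names are hypothetical.

```python
from typing import List, Sequence


def hat_weight(value: float, max_value: float) -> float:
    """Trust mid-tones most; near-black and clipped values get little or no weight."""
    return max(0.0, min(value, max_value - value))


def merge_hdr(frames: Sequence[Sequence[float]], times: Sequence[float],
              max_value: float = 255.0) -> List[float]:
    """Estimate relative scene brightness per pixel from bracketed exposures.

    Assumes a linear sensor response, so value / exposure_time estimates scene
    brightness; the estimates from all frames are averaged with hat weights.
    """
    shortest = min(range(len(times)), key=lambda k: times[k])
    merged: List[float] = []
    for i in range(len(frames[0])):
        num = 0.0
        den = 0.0
        for frame, t in zip(frames, times):
            w = hat_weight(frame[i], max_value)
            num += w * frame[i] / t
            den += w
        if den > 0:
            merged.append(num / den)
        else:
            # Every sample is clipped: fall back to the shortest exposure's estimate.
            merged.append(frames[shortest][i] / times[shortest])
    return merged


if __name__ == "__main__":
    frames = [[50, 200, 255], [100, 255, 255], [200, 255, 255]]
    print(merge_hdr(frames, [0.5, 1.0, 2.0]))
```

The second pixel is clipped in the two longer exposures, so its estimate comes entirely from the short one; the result covers a wider brightness range than any single 8-bit frame.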

(Title image: VISTA gigapixel mosaic of the central parts of the Milky Way, produced by European Southern Observatory (ESO) and released under Creative Commons Attribution 4.0 International License. This is a scaled version of the original 108,500 x 81,500, 9-gigapixel image.)

Holy cow, that’s the most technical article about camera sensors written by somebody with far too little knowledge about it that I have ever read.

Agree. At this level Wikipedia is better.

Samsung ended up reducing their flagship phone’s cameras to sixteen megapixels for reasons we’ll discuss, then doesn’t discuss it?

“This gets to the core of the reason that most modern cell phones seem to be practically limited somewhere in the sixteen-to-twenty megapixel range. If the pixels are made too small to increase megapixel count, the noise will start to ruin the images.”

Unfortunately true.

“Threshold to trigger the pixels” – complete BS, as you do not want a 1-bit black-and-white picture. It’s analog. And raising the ISO setting has nothing to do with power consumption. This is just ordinary thermal and other noise; if you raise the gain, you also amplify the noise.

And of course the limits of pixel density are also quantum effects, limiting the minimum size of sensors or pixels, as light consists of photons – quanta – which correspond to a finite wavelength.

Not even mentioning the optics. Putting a huge megapixel sensor behind cheap glass will just result in higher-resolution blurry pictures. Match the sensor resolution to the resolution of the images that the glass can actually make!

Well, higher resolution blurry pictures can be deconvolved.

Enhance, enhance

“Putting a huge megapixel sensor behind cheap glass will just result in higher-resolution blurry pictures.”

So, THAT’S what I did wrong! Thanks!

I believe this is a big factor with phone cameras stabilizing at 15-20MPix. Regardless of sensor quality and CMOS noise, you’re still limited by the resolving power of the optics, which in turn is limited by the aperture.

Welcome to Hackaday

New low, hands down.

the ‘amplifier’ on a cmos takes up valuable pixel space, up to a certain size ccd will ‘perform better’ if you can find any. of course when back lapping became a thing this all went up in the air.

pixel count might have been the yardstick for digital cameras but certainly the competing factor for mobile phones was sensitivity, if a sensor could produce a decent image in a dimly lit pub or nightclub on a Saturday night out it was a winner.

thermal noise is certainly a factor but talking pixel size photon shot noise is the real killer.

No mention of the fact that pixel density cannot be smaller than a wavelength for the current filtering mechanism to function. No discussion about how a series of red, blue, and green pixels are organized. No discussion of the resampling used to convert from discrete RGB pixels to a pixel that has all colors included. No discussion on how the physical limits based on the filter construction are shifting the focus toward optics rather than pixel count. No discussion on why the transistor size is much larger in CMOS imagers than in memory or CPUs: the size is constrained by optical physics, not chemical physics.

Not even a discussion on possible “winners” of this race or the maximum density that stopped the race…

This is a slapdash article at best.

Lolz. Phyics sure is hard. ;)

“Pixel density cannot be smaller than a wavelength for the current filtering mechanism to function” is not technically true.

Well, sure... you CAN have pixels smaller than the wavelength, but you actually lose resolution because of diffraction effects.

That good ‘ol PSF sure is a pain..

Not so. You can account for some of these things, to a point. The point spread function is a bit more malleable than most people might think.

http://www.nature.com/lsa/journal/v5/n4/full/lsa201660a.html

http://pubs.acs.org/doi/abs/10.1021/nl501788a

https://www.osapublishing.org/oe/viewmedia.cfm?uri=oe-23-19-24484&seq=0

That is what books are fo.. er .. the Internet is for.

Nokia had a 41-megapixel phone in 2012.

Maybe that’s what killed them. Camera overkill.

Nah, Microsoft did. The Nokia Lumia 1020 was a great phone crippled by the Windows Phone 8 OS and only having a dual core CPU. The camera was great.

The sensor is about 1/3 of a square meter. The camera takes up about 5 square meters of floorspace.

This article was not well researched or fact checked. It contains a giant imaging sensor created by combining 189 individual CCD sensors. It will not be a single sensor.

Will save 6 petabytes of data per year though.

Do they put those petabytes into a petafile? Was wondering about that.

CMOS sensors are not more accurate than CCDs, and certainly not because they have an amplifier for each pixel. The opposite is true. In applications where quantification is a real requirement, CCD is still the preferred sensor because of its linearity. All you have to do is normalize for QE across the spectrum and keep the exposure such that the photon count stays within the full-well capacity of the sensor. Any nonlinearity in the individual “pixel amplifiers” would result in nonlinearity in the final measurements. Combine this with the temporal complexity added by the rolling shutter most CMOS cameras operate with, compared to the global shutter common in CCD sensors, and you’ve got another reason to avoid them.

I have to say, though, that CMOS sensors have lately made huge steps of improvement, creating something that would have been a joke just a few years ago: sCMOS, scientific CMOS sensors…

In astronomy it is common to actively cool the sensor to reduce noise.

In the diagram, the camera sensors are in a cryostat.

I’m sorry, but this entire article about camera sensors is just false. CCD is in fact better than CMOS for photographic images, though CMOS is right at its doorstep. The whole idea that ISO is a minimum voltage to trigger a pixel is false. I feel like you’re just putting out an article to be putting out an article. Stick to what you know; don’t create fluff to be more of a writer. Subjectively, most of us here are tech heads, not the general public, and we don’t want or need the fluff. I’d rather read a reference manual than the marketing flyer for a device. Stop being the marketing flyer.

Yes. This is what I recall. CCD is much better. Bucket brigade. Analog. Etc. This article is way off. Shame on Hackaday for letting this go through. Where’s the editor?

Stopped reading when it didn’t mention the Nokia 808’s 41 MP smartphone camera. It beat the Samsung of the time, cleverly creating 8 MP noiseless photos or 3x zoom.

Terrible article from an ignoramus. Stop writing articles, or stick to something you:

– have knowledge about

– understand

– prove the accuracy of what you assert

– expunge cheap, movie sci-fi-inspired fantasy like “Like any Moore’s Law-inspired race,” from your text.

Sony just announced a three-layer sensor (21.2 effective megapixels) with a built-in buffer for smartphones that can do 1000 FPS at HD resolution and also does HDR, so the race is far from over.

Interestingly, the agreed interface standard for phones doesn’t allow data to be read out that fast, so the buffer just stores the data in between.

Because of that readout limit, though, the practical effect is video at 3,840 × 2,160 at 60 FPS and full HD at 240 FPS.

They point out that the high speed will reduce rolling shutter a good bit, as I understand it.

No I don’t work for Sony, in fact I boycott Sony products, but parts are parts eh.

http://mipi.org/specifications/camera-interface says:

“CSI-2 over C/D-PHY imaging interface does not limit the number of lanes per link. Transmission rate linearly scales with the number of lanes for both C-PHY and D-PHY.”

It looks like it’s not the standard that limits the data readout speed.

Standard mentioned was the MIPI alliance standard.

Mind you the problem here is that the device makers probably just want something they can select and plug in so the sensor people have to design around that expectation I expect.

Not that I know anything about it.

They say the standard is 2.2 or 2.0 Gbit/s per lane btw

Parts are parts but some parts are easier to use. Looking at you, memory stick.

Apart from Sony making even more money, is there a point to Memory Sticks? Compared to SD Card and the rest?

I would not say that CCDs have been relegated to specialty applications. Fujifilm has 16-megapixel CCD sensors in consumer cameras (S8600).