[G6EJD] wanted to design a low power datalogger and decided to look at the power consumption of an ESP32 versus an ESP8266. You can see the video results below.

Of course, anytime someone does a power test, you have to wonder if there were any tricks or changes that would have made a big difference. However, the relative data is interesting (even though you could posit situations where even those results would be misleading). You should watch the videos, but the bottom line was a 3000 mAh battery provided 315 days of run time for the ESP8266 and 213 days with the ESP32.

The fact that the hardware and software only differ in the central processing unit means the results should be pretty comparable. [G6EJD] accounts for the current draws throughout the circuit. The number of days were computed with math, so they don’t reflect actual use. It also depends on how many samples you take per unit time. The goal was to get operation on batteries to last a year, and that was possible if you were willing to reduce the sample rate.

While we generally like the ESP32, [G6EJD] makes the point that if battery life is important to you, you might want to stick to the ESP8266, or look for something else. Naturally, if you are trying to maximize battery life, you are going to have to do a lot of sleeping.

The real question is: was this done with the original ESP32 die, or the newer one that had a silicon bug fix that reduced sleep current?

G6EJD is my Radio Amateur call-sign and my alter ego :). It was the original revision 0 chip; I’ve not found many suppliers with Revision-1 devices. The sleep current is consistently low at 5.6uA across all 3 devices that I tried and I believe this is as good as it gets, but for battery powered use that current level is not dominant even over many years and is probably less than most battery self-discharge currents. RF power consumption is the driver in my particular experiment’s outcome.

Eep, 3 seconds active radio time to upload a handful of bytes of data? Work on that from two angles: Why is it taking 3 seconds—are you doing DHCP every time, say? Is the server just slow to respond to the request?

If it must take 3 seconds, then at least gather up an hour of data to send, and squirt it all at once, so you have 3 seconds per hour instead of 18 seconds per hour (say). You’ve just saved about 80% of your battery right there.
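The saving from batching can be checked with a little arithmetic. The following is a minimal sketch, assuming a 10-minute sample interval (6 uploads per hour) and that a batched upload keeps the radio on for the same 3 seconds as a single-sample upload; the function names are made up for illustration.

```cpp
// Radio-on seconds per hour when every sample is uploaded on its own.
constexpr double radioSecondsPerHour(double secondsPerUpload, int uploadsPerHour) {
    return secondsPerUpload * uploadsPerHour;
}

// Fraction of radio-on time saved by sending one batched upload per hour
// instead of `uploadsPerHour` separate ones (assumes equal cost per upload).
constexpr double batchingSaving(int uploadsPerHour) {
    return 1.0 - 1.0 / uploadsPerHour;
}
```

With 6 uploads per hour this gives 18 seconds against 3 seconds, a saving of roughly 83% of radio-on time, which is in line with the "about 80%" above as long as the radio dominates the energy budget.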

He’s using a cloud service called Thingspeak, which has REST and MQTT interfaces it appears. REST is going to be tossing around boilerplate in HTTP headers, and both of these are TCP protocols. On a really bad link I could see there being a crapload of resent packets. I could easily see DNS being in there, and if it’s sleeping for long times it may have just been assumed that the DHCP lease expires, or it isn’t stored at all for minimum battery use. If it was just me I’d be sending a single datagram with a secure hash for verification to a static IP. But I’m lazy as hell and would much rather use a PI or OpenWRT unit as a gateway bundling unit running a C app than try to interface with a web app from a device with no OS.

This is where an intermediary would be of good use. I do the same thing with my power meter. I talk to my own server which sends out a single TCP message, and the server then later uploads to Thingspeak for this reason.

Or you can run a MQTT broker on your local box and use static configuration for all the sensors. And if you want to expose your sensor data to the Internet, then run a web server too…

Anything cloud-based is a bad idea…

Given someone further down the page is talking about saving values to the RTC memory so they survive deep sleep on an esp8266, the information from DHCP and DNS being lost in sleep and needing to be redone seems increasingly likely.

(Hi I’m G6EJD) what I found was the ESP8266 was significantly more reliable and faster at making a network connection than the ESP32, yes in both cases using a DHCP assigned IP address and negotiating the TCP/IP protocol overheads. I did try using RTC RAM but the devices take such a large in-rush current, especially the ESP32, that without a low impedance regulator to provide the much needed stability the ESP32 barely operated, thereby losing RTC RAM contents. I used the capacitor to help regulate energy demands, but the true value required (I.t = C.V, where I and V are the delta current and voltage demands, t the duration and C the capacitance) of 10,000uF to meet the energy demands during the initial transmit phases looked impractical, so a 10uF was a compromise. Also the AMS1117 LDO regulator has a quiescent demand of ~3mA and that was a factor of 10 greater than the ESP32 average current demand, so it’s all a compromise; suffice to say the only difference between the two trials was the device. I measured mAh consumption with a 0.1ohm shunt and a digital storage scope over a period of 48-hours to enable me to get the best possible average current demand with sufficient validation for the figures I quote of 74mA (ESP8266) and 115mA (ESP32) that enabled me to predict with a reasonable degree of confidence the expected battery life. As I say in the video, the ESP32 is being marketed as a low power IOT device and I was surprised that I could not achieve better (IMO) battery life than I did.

I also tried reducing RF output power and I assume the library call I used worked, but the result was no reduction in current demand, but (I have not measured it yet) I suspect RF output power is reduced. This outcome is not unusual for an RF output stage, where you can reduce the RF output power and yet the input power remains largely the same albeit efficiency drops-off markedly. My HF transmitter demands 16A at its full 100-Watt output and 12Amps on 10Watts and this is typical of a class-A/B RF output stage that I surmise the ESP devices are also using, but there is little or no published design data to verify my assertion.

I note similar devices produced by Microchip offer far superior low power performance and these offer the solution I was looking for.

For your inrush current, use a supercap in place of a regular aluminium electrolytic.

I think what some of us were starting to wonder is how the math would have worked out for never going into deep sleep and having a larger sleep current draw in exchange for not forgetting the DHCP and DNS info while the leases and TTL are still valid resulting in a drastically shorter time using the radio (just one short TCP session).

The power budget is an average of 0.34mA (about 8.2mAh/day) to last 1-year with a 3000mAh battery, so the maximum Tx time would need to be 1.8 seconds for a 10-min update rate and I’m currently near 3-secs. Yes it’s possible that not having to negotiate an IP before upload will be faster or by keeping the link active (although my experience shows for no more than 24-hours), but as far as I can see from Espressif’s documentation it would need a low power mode that kept the link active but all their modes that use sufficiently low CPU power also shut down the WiFi / BLE, so I believe my trial aim is not feasible with this device. I will run a trial with a fixed IP though to see if that improves timing, but I’m beginning to wonder if the Thingspeak server may be the dominant part of the overall upload timing. I can get the ESP to record the timeline from wake-up to sleep, that may be of interest.
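The budget arithmetic can be sketched as follows. This is only an illustration: it assumes the 115mA figure quoted earlier in the thread is the ESP32's current while the radio is active, and it ignores sleep current and regulator losses. The function names are hypothetical.

```cpp
// Average current (mA) a battery can supply for the target run time.
constexpr double averageBudgetmA(double capacitymAh, double days) {
    return capacitymAh / (days * 24.0);
}

// Longest radio-on time (s) per update that stays within the budget,
// ignoring sleep current and regulator losses (a simplifying assumption).
constexpr double maxTxSeconds(double budgetmA, double intervalSeconds, double activemA) {
    return budgetmA * intervalSeconds / activemA;
}
```

For a 3000mAh battery and 365 days the budget comes out at about 0.34mA, and with a 600-second update interval at 115mA that allows roughly 1.8 seconds of radio time per update.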

But how about storing the numbers in memory if a reading is not that different from the last measurement, and only sending when you have accumulated 5 of them in a batch or when a reading is very different from the last one? Then you might get 4 years of battery life. If you are detecting things like water leaks, you don’t have to send every hour.
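A minimal sketch of that send decision, assuming readings are plain numbers and that "very different" means exceeding a threshold you choose yourself. The function name, the parameters and the threshold are hypothetical; only the batch size of 5 comes from the comment.

```cpp
// Decide whether to power up the radio after taking a new reading.
// `pending` counts the readings stored since the last upload, including this one.
constexpr bool shouldTransmit(double reading, double lastSent, int pending,
                              double threshold, int batchSize) {
    return pending >= batchSize ||
           (reading - lastSent) > threshold ||
           (lastSent - reading) > threshold;
}
```

For example, with a threshold of 0.5 and a batch size of 5, a reading of 20.1 after a last-sent value of 20.0 is only stored, while a jump to 22.0 is sent straight away.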

Bold claim right there, I’d like to know of a leak within seconds!

Apart from potential optimisation of his algorithm, the title promised a comparison between the two systems, and it delivered exactly that.

That depends on how you’re able to detect a leak. In many cases where you detect things by aggregating data, it isn’t possible to know something within seconds.

This appears to be exactly what you’re describing:

https://www.amazon.com/dp/B0748RK2XQ/

Water leak detector, uses an ESP8266, only connects to wifi when leak is detected, long battery life

The problem with sensors that only communicate when something has changed is “nothing has changed” looks exactly the same as “sensor is dead”. I wouldn’t use something like that for anything safety critical.

Agree. There’s no easy way to know if a battery failed, etc. My ESP8266 water sensor project checks in and reports battery voltage every 3 days. It wakes up hourly, increments a counter, and then goes back to sleep until 3 days have passed. A water leak will trigger the RST pin to wake up the ESP and in that case it reports in immediately. No check in for more than 3 days means something has failed. Of course, I’m using more circuitry to accomplish this than just what the ESP8266 is capable of.
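The check-in logic described above might look something like this. It is a sketch only: how the counter is kept across sleep and how a leak wake-up is told apart from a timer wake-up are not described in the comment, so both are left to the caller, and all names are hypothetical.

```cpp
#include <cstdint>

constexpr uint32_t kWakesPerCheckIn = 3 * 24;  // hourly wake-ups in 3 days

// Called on each wake-up: report if a leak woke us, or if 3 days have passed.
// `wakeCount` is the number of hourly wake-ups since the last report.
constexpr bool shouldReport(uint32_t wakeCount, bool leakDetected) {
    return leakDetected || wakeCount >= kWakesPerCheckIn;
}

// Counter value to store before going back to sleep.
constexpr uint32_t nextWakeCount(uint32_t wakeCount, bool reported) {
    return reported ? 0 : wakeCount + 1;
}
```

On the receiving side, the matching rule is simply that a sensor with no report for more than 3 days is treated as failed.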

Agreed.. I try to avoid this situation. My ESP8266 project uses auto light sleep, and MQTT. I only get 2 weeks from an NCR18650, but I hope to couple that with a small solar panel to get me to infinity. Not bad for real time.

You can get much more battery life if you log a lot of data offline and only transmit rarely. Depends on the application if that is a suitable option, but by transmitting larger batches of data less frequently, you should reduce the modem up time which is a big consumer of power.

I’m working on a similar project and am experimenting with RTC memory on the ESP8266 to extend the battery life. The idea is to save samples in the RTC memory (which survives deep sleep) and to only transmit them to the server when the RTC memory is full. Reading a sample and writing it to RTC memory is much quicker and less power hungry than starting the WiFi module and transmitting data. I don’t have the final results yet.
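To get a feel for how many samples fit, here is a rough sketch of the bookkeeping. The sample layout, the header size and the function names are assumptions for illustration; the actual reads and writes would go through the platform's RTC memory API, which is not shown.

```cpp
#include <cstddef>
#include <cstdint>

// One stored reading; the field layout is an assumption for illustration.
struct Sample {
    int16_t temperatureCentiC;   // e.g. 2150 = 21.50 degrees C
    uint16_t humidityCentiPct;   // e.g. 4520 = 45.20 %
    uint16_t pressureDeciHPa;    // e.g. 10132 = 1013.2 hPa
};

// How many samples fit in the RTC memory left after a small header
// (the header would hold things like the current sample count).
constexpr size_t bufferCapacity(size_t rtcBytes, size_t headerBytes) {
    return (rtcBytes - headerBytes) / sizeof(Sample);
}

// True once the buffer is full and the radio has to be powered up.
constexpr bool mustUpload(size_t storedSamples, size_t capacity) {
    return storedSamples >= capacity;
}
```

With 512 bytes of RTC memory and a 4-byte header this layout holds 84 samples, so at a 10-minute sample rate the radio would only be needed about once every 14 hours.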

Check out this tutorial, has some good info about using RTC memory for preserving data in deep sleep mode : https://www.youtube.com/watch?v=r-hEOL007nw

Or take a Cypress FRAM ( 8 µA standby) and have as much memory as you want on your hands. Compared to the 128 bytes rtc…

It’s 512 bytes on the ESP8266, actually.

How about a middle ground of send frequency, have it save up the data and send every hour or whatever unless the data is a certain value then send sooner.

This looks like a good time to mention that I have been working on a sleep solution for the ESP8266 that keeps it connected to WiFi, so you can trigger an interrupt, and it will send an MQTT packet immediately.

The idea was for more two way / real time battery powered sensors.

And it is still a bit unstable, but doing OK..

https://github.com/leon-v/ESP8266-MQTT-Auto-Light-Sleep

thanks!

Nice field test, what about lowering the CPU speed ?

Ahh, we think alike, yes I tried that (40MHz) and no discernible difference. Out of interest, the ESP32 consumes ~44mA with the Wi-Fi off and the ESP8266 about half that, I need to measure CPU draw at different clock speeds to get a definitive answer to that question. But, the dominant component in the mAh calculation is the (relatively) high RF stage (Wi-Fi) current demand, if that was down at 55mA it would have achieved 365-days easily. The ESP32 takes ~120mA, followed by a pulse of 350mA (I believe the RF calibration phase) and then drops back to ~120mA. It is a common failure of many ESP32 designs that these high start-up current demands cannot be met, leading to poor reliability. In my trial having those two batteries in parallel (3000mAh) should have been more than enough to provide a sufficiently low power supply impedance, but they were right on the limit of operating the ESP32 at start-up, it was only when I added the capacitor and shortened the cables that things became stable. Surprisingly, the supply voltage measured at the ESP32 was dipping down to 2.6volts at start-up due to cable resistance, I was using Dupont cables, so not the best. There is much more to the peripheral design of the ESP32 than is typically depicted with the bare unit. Most development boards (I now know) use the AMS1117 LDO regulator to provide the stability and dynamic impedance response to cope with the device start-up demands.

@David Would it be possible for you to try how fast ieee80211_freedom_output can be used after wake up? That means you have to have a server that monitors wifi traffic constantly, but that seems doable. Wifi is not supposed to be a single packet protocol but there are other ways than using the freedom_output I’m guessing. I feel it should be doable to get the wake up time down quite a bit.

https://hackaday.com/2017/04/24/esp32s-freedom-output-lets-you-do-anything/

A good idea, what I’m just about to try out is a measurement of the time-line from wake-up to sleep and see where all the time (3-Secs) is being consumed and by what part of the overall process, I have an idea it might be my Wi-Fi router, I’ll then try a fixed IP and any other techniques. I’m sure my router destroys the link certificate/TTL reset as soon as the connection is dropped, so that’s another factor I’ll look at.

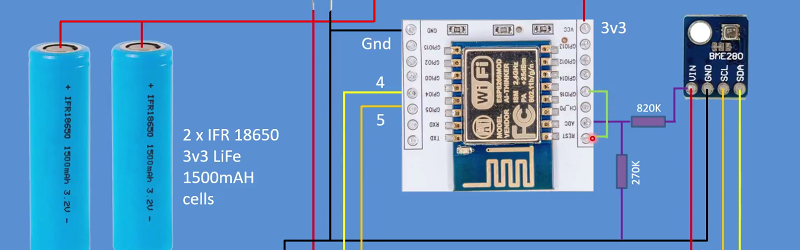

Trial aim: Determine time budget needed to update a Thingspeak server with Temperature, Humidity and Pressure readings.

Trial conditions: ESP32 connected to a Bosch BME280E via a 320Mbps Wi-Fi connection to a 80Mbps fibre broadband connection.

DHCP Assigned IP address:

Start: timer=0

Time to get an IP and start server: 2501mS

Time to update Thingspeak: 3381mS

Total time 5.882secs

Same update of Thingspeak, but connection still active

Connecting to ThingSpeak…

Time to update Thingspeak:2479mS

Going to sleep

DHCP Assigned Fixed IP address:

Start: timer=0

Time to get an IP and start server: 2487mS

Time to update Thingspeak: 3321mS

Total time 5.798secs

Same update of Thingspeak, but connection still active

Connecting to ThingSpeak…

Time to update Thingspeak:2479mS

Going to sleep

DHCP Assigned and Reserved Fixed IP address, lease still active:

Start: timer=0

Time to get an IP and start server: 2461mS

Time to update Thingspeak: 3323mS

Total time 5.784secs

Same update of Thingspeak, but connection still active

Connecting to ThingSpeak…

Time to update Thingspeak:2485mS

Going to sleep

Conclusions:

1. There is little performance gain from using a fixed IP address rather than a DHCP-assigned one. DHCP protocol overhead is minimal.

2. Leaving the connection active provides an improvement of ~850mS, but an ESP low power mode with Wi-Fi active and of sufficient low power does not yet exist AFAIK.

3. The Thingspeak server takes a finite time (about 3-secs) to process the update. When I conducted my first trials the Thingspeak update timing was much faster, must depend on their server load.

Try it yourself, I’d be interested to see other results or a solution.

It’s on my todo list, but using non TCP communication has its drawbacks.

awesome details! I didn’t notice you specifying the type of Wi-Fi network security you used. I would try it on an open network and see if that brings the time down. If that works, then you could look at ways of dealing with security issues. You could do some fancy firewall stuff, but I would try running another ESP8266 as the AP and then connect it to a computer over a UART. The open network sounds bad, but if you only use it for transport with no IP access to your network it should be OK (similar to unsecured ISM band communications). I would also disable over the air (OTA) updates when running on an open network.

Hope it helps!

Hi, the security in use was WPA2 and although I have not tried an open network, I suspect the IP packet overhead is not that great, maybe a few percent slower. The connection time is quite quick but Thingspeak servers take some time, it may be because it’s not an SSL connection and that may cause a change in access creating the delay. However, I have since purchased a Wemos Lolin ESP32 Lite and that uses considerably less power, if I get time I’ll repeat the trial with that device. I’m currently running a weather forecaster on it and can see it’s using so much less power but it needs days of running to get a good accumulated result. The Lite version is using the CH340C UART that takes 50uA in standby rather than the normal 22mA of the CP2102 fitted to a development board, so it’s my new favourite board. I will try out the test using an open network though, a good suggestion, thanks.

That is strange. I will try also.

3 seconds?!? I don’t know the exact size of the payload and how many tcp packets are needed to bring that payload on the other side of the link but … FreeRTOS (default in the esp-idf) uses LwIP, that was made for always on systems: it is too power hungry for this application. Usually a 3 seconds transmit can be turned down to 200ms using raw networking instead of LwIP!!!! It means you can multiply by 12-13 your results!!! Ie: 200 days become 2500 days :)

Have a look at https://github.com/Jeija/esp32-80211-tx (it’s the same guy that made the long range transmission reviewed here on hackaday a couple of years ago) for raw networking. And enable AMPDU in esp-idf sdk (that may give you another 20% increase by reducing the 802.11 framing overhead): 3000 days. By adding an efficient power harvester (in order to suck those batteries to death) you could get 10 years longevity … easypeasylemonsqueeze.

My guesses call for another test from you! I’m curious!

I forgot another detail: if you don’t need exactly 6 samples per hour, and the radio link is fairly good … you can switch to UDP. It will lose some datagrams (ie: you could get 3-5 samples per hour instead of 6) but you don’t have to establish a TCP session and send ACKs! I can’t remember the overhead ratio between TCP and UDP but it should be a big number. This change, by itself, could double (or more) the longevity of those batteries.

If I keep thinking I probably can find more issues but that’s not the point: from the video you sound like a guy with deep electronics know how, but very little IT one. You can’t state that analogue RF is more efficient because … well … analogue RF doesn’t exist any more: 99% of modulations used nowadays are binary encoded, even the very simple one you cited in the video (OOK) transmits binary sequences, and the more complicated they are, the more FFTs they have to do, the more digital logic is needed, and the further they are from analogue RF :)

Well in the many months that have elapsed since I posted this video no-one has yet come up with a solution. The specification is the specification and that is that, yes one could employ different strategies but that’s not the point. Once the RF stage is on that’s when the huge power demands are required regardless of whether it is a UDP or TCP datagram. The dominant power consumption is when the RF stage is running. Yes lwip could be tried but then it would add to the system complexity as it would need an lwip intermediary to get the data to Thingspeak. I specialise in electronics and RF engineering and hold a degree in electronics from Brunel and a post graduate diploma from MIT so I think I know a bit about RF link efficiency and BTW nearly every digital protocol applied to an RF stage is highly inefficient and this can be verified by analysis using Shannon’s theory. There have been in excess of 400 responses to my video many suggesting solutions but to reiterate no solutions are forthcoming so please have a go at implementing a solution and implementing your ideas so we can see if they are correct, I’m looking forward to your solution.

Let me be clear: I’m here to learn from you and I’m grateful for the time you’ve spent to publish those results. I could feel you are pretty good at electronics and RF!!! That’s typical of people like you: you are used to make a perfect RF stage and be ready to go … digital tech instead require more steps. You don’t do it, then complain :)

I was just saying that you should consider that test, to be just a naive test, nothing definitive. There is plenty room for getting better results. That test is not enough to spit that large spectrum sentence about digital devices.

Mine was a quick and dirty answer to an old post. In practice you can’t sum up all those things (raw networking, ampdu, UDP). In general the tcp/ip protocol stack was not made for battery powered and/or moving objects; everything you see around is standing on top of cheap charlie workarounds made to make the accounting department happy. It’s not engineering, it is marketing. If you want to go down to detailed RF energy/symbol balance, the word ‘tcp/ip’ itself is marketing.

IETF lately made some protocols that can do it but … nobody deployed those at large (yet). So, to hook one of those consumer bait-and-switch services (Thingspeak), you must spend money (battery, gateways, whatever).

If you can’t relax the requirements a bit (ex: having best effort results in the way that you could lose some of the hourly samplings), and you can’t manage the other side of the link (ex: having Thingspeak setup some kind of gateway device for its users), there’s really no solution to your problem using current mainstream internet technology only. The best thing is probably LoRa (or sigfox), and it works through gateways … an extra device in the middle.

About the analogue-digital war: you’d need an analogue-digital gateway somewhere also using old style analogue RF … Thing Speak digital :)

I’m not complaining, merely observing that a product (ESP32) marketed and sold as a low power IOT device is far from what most people would assume is an IOT device, this was the purpose of the video to show that such an Internet of Things device cannot really be classed as low power. I have sensors, even the ESP8266 and other devices, that report temp, humidity and pressure and can run for well in excess of 1-year on batteries which was my design aim. I showed this was not possible, but the product predecessor (8266) is far more efficient at achieving the same thing, I don’t know why Espressif did not use the same RF stage as that is the dominant power consuming part. You will see in the discussion that I also tried a few other techniques to reduce power demands, but I and others found no solution. The result of this is to return to using the ESP8266 for low power applications because it works and achieves the design aims. I have looked at the data sheet but there is little to nothing on the RF stage parameters and there may well be an impedance mismatch, but actually what’s important is the antenna is the same impedance as the transmitter be that 1R, 33R, 50R or 300R, there just needs to be a match, the transformation to free space impedance is the antenna itself and a function of its radiation resistance but that does not tend to affect the RF stage performance due to mismatch. The data being uploaded to TS is about 70bytes comprised of API key?field1=value&field2=value&field3=value, quite small.

I can’t agree that 99% of transmissions are digitally encoded but I can agree that 1% of transmissions are digitally encoded! There are few if any digital encoded transmissions around, please give some examples of this digital predominance that you refer to?

I’m an IT guy, not an RF specialist. Can’t really stand in this discussion. However, discussions bring to enlightenment (and giving fire to people, myself included). So, let me understand a bit: what do you consider to be analogue (or digital)?

Just as a reference (because I’m not english-native) I opened Merriam-Webster, searched for digital and they gave me:

– of, relating to, or using calculation by numerical methods or by discrete units

– composed of data in the form of especially binary digits

And so on.

Examples of digital predominance?! Are you serious? Have you a mobile phone? A TV? Just look at those!

In the video you used OOK as a term of comparison; probably the wrong one, because wikipedia clearly defined it as digital (“the simplest form of amplitude-shift keying (ASK) modulation that represents digital data at the presence or absence of a carrier wave”).

Is that analogue or digital or both? I consider it to be digital. The media is analogue, and we must match impedance, but we catch it through sampling digital information (discrete units) about it. The more DSP power we have the better the results. The better silicon tech the less energy wasted to get those results. I’ve no idea what kind of efficiency they got in recent ICs but … I was looking at those figures a couple of years ago and it seemed to me that compared to a few decades ago there are some orders of magnitude: FFTs are not that (energy) expensive any more. Sending the modulated analogue sensor signal, or sending its samplings could be equal, in terms of energy spent. And that may be the reason why all modern tech (TV, phones, and so on) use digital systems. That’s why I said everything is digital nowadays. That’s predominance. The media is still continuous because it can’t be different, but the transmission is digital 99% of the times.

There’s no romance but it’s digital and it works.

You are right, can’t be 100% equal because electrons make silicon warm, but if new ICs reached 99,99999% (fifth standard deviation) equality, we can scientifically say it’s equal without being wrong, and get out from this baseline only when we need to study the phenomenon itself. And we are not doing it here. Here is electronics applied to a single use case.

One packet is enough to send all the data with a large spare capacity.

Packet? You mean a TCP packet or a 802.11 frame or a datagram or … ? To make it short: how many digits you need to send? I’m asking because if you can ask to the other leg to use the so called ‘monitor mode’, then parse the received frame … and you can send one custom 802.11 frame only … you might be set.

BTW, yesterday after commenting I’ve been searching a bit about this issue. I’ve found a couple of websites stating that the ESP32 isn’t exactly 50ohm; so if you are using one of those cheap charlie boards available on the market the antenna matching could be a problem to solve as well. Did you measure the impedance or did you just take for granted that the datasheet is good?

I can’t reply above: there’s no Reply button. Anyway, not much to say. I should have a look at all the comments and hands on the actual electronics before speaking more.

I agree with you that IoT is just another marketing crap. But I’m curious, I’ll give it a try asap and will report back in case I find something useful. Because I’m sure there is margin to get a better deal with these tiny cheapos. It’s a shame that to send 70 bytes we must keep a radio on for 3 seconds. That time is enough to speak those numbers at the phone.

Thanks for the conversation.

Another one: Nagle Algorithm. “a large spare capacity” means that some of the energy spent to turn on the device, its radio, and send the actual sensor data … is wasted. Those 3 seconds of high energy consumption are partly wasted. Can you send all the samplings of the day once per day? Or every 2-3 hours instead of every hour? Example: If the dataset to be sent each time employs half of the minimum packet size of the radio protocol in use (or the logic layer above: tcp/ip), you can use 1 packet to send 2 datasets. Hence sending every 2 hours doubles your battery life.

Another one: differential data. Because of the nature of the data, you can send increments/decrements instead of absolute values. That could cut the size of the payload. There’s no point to send the geographic location of the sensor each time, for a sensor that doesn’t move. There’s no point to send the first two digits of the year for a sensor that won’t last another 80 years: let our grand nephews to have their Millennium Bug. And so on … reducing the dataset size to simple increments/decrements of fixed position bytes. Or even bit changes: reducing the alphabet size has been a second industrial revolution :)
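As a rough illustration of the differential idea, here is a sketch that sends a one-byte change instead of a full value. It assumes readings are kept as fixed-point integers (for example hundredths of a degree) and that consecutive readings differ by no more than what fits in 8 bits; a real design would need a fallback for larger jumps and a way to resynchronise after a lost message. The function names are hypothetical.

```cpp
#include <cstdint>

// Encode a reading as the change since the previous one.
// Assumes the change fits in the range -128..127.
constexpr int8_t encodeDelta(int32_t previous, int32_t current) {
    return static_cast<int8_t>(current - previous);
}

// Receiver side: rebuild the absolute value from the last known value.
constexpr int32_t decodeDelta(int32_t previous, int8_t delta) {
    return previous + delta;
}
```

A temperature moving from 21.50 to 21.62 degrees (2150 to 2162) then travels as the single byte 12 rather than as a multi-digit text field.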

Combine the first (ie: sending 1 digest instead of many single samples) and the second (reducing the size of the single sample) and the efficiency multiplier goes high fast …

This always in the case it is possible to have an agreement about the protocol, with the other side of the transmission. That is indeed not a so big deal. I mean: it sounds strange to me that a company with a sensor data gathering core business… well… doesn’t allow its customers to push via web a custom script to mangle the data before handling those data to them. It’s dumb. If they don’t know how to do a full fledged website, Apache has a cgi-bin folder for basic server side scripting since I was a kid :)

Establishing the network connection is what’s taking the time. At start up the ESP begins a radio calibration routine with the radio on, then it starts the network connection process, waits for the router, then the server, then sends the data; the latter part is near instantaneous. This is why in the various trials we have tried fixed IPs, not using a DNS service, etc. Many theories but no solutions. I note in my most recent experiments, where I’m using the WiFiMulti library, that it can take up to 4-secs to make a connection, but the WiFiManager library is very quick by comparison, though still not quick. If I get time I’ll record the connection time line through to closing the network connection.

I have just completed the time-line here are the results:

ESP starts: 0 mS

Set up serial port: 3 mS (needed to see timings)

Start WiFi (not connection though): 47 mS

Connect to server: 1047 mS (1000 mS to connect to server)

Upload to server: 2352 mS (1305 mS to upload)

Sleep

I notice that it is much faster than it was when this experiment was trialled in Sept’17 and then again on 26-Sep’17 with similar timeline results. My router and line speed here are the same, so it could be that TS have faster servers. Anyway, total time to upload is 2.352secs and the actual time to send the data is 1305mS.

Andreas Spiess has reported reducing transmission times for data logging to 80ms, using ESP-NOW on ESP32s.

That might help with battery life and with WiFi client proliferation overloading some routers where the environment has many sensors.

Hello mates, pleasant article and good urging commented at this place, I am in fact enjoying by these.

Very useful and great discussions and videos, particularly for somebody new to this field like me, thanks everybody. I’m looking to create a battery powered ESP8266 connected to an LDR sensor which I intend to stick onto my electricity meter to count the pulses of the LED within the meter (100 pulses = 1kWh). This will allow me to log my energy usage. I only need to send the data over Wifi once a day but the sensor will need to continuously ‘listen’ for blinks from the electricity meter. From a power consumption point of view is this something that could realistically be run from a battery and can the wifi be shut down apart from the 1 time daily when it sends the data?

Realistically no, the esp devices are not suited to that type of application and consume ~40mA with the WiFi off. BTW you can buy a sensor adapter for that type of meter on a cable usually about 1M long that would / should enable the unit to be housed outside the supply box and be powered from the domestic supply.

Hi, thanks for your article.

I have a “little” trouble with my configuration: my ESP32 “Wemos” wifi+BT+Battery (18650), configured with BME280, acts like an external meteo station. It’s connected via wifi (fixed IP) and MQTT to a Home Assistant Raspberry (all in my home LAN), with a deep sleep of 30 minutes (about 3 seconds from wakeup to deep sleep).

But it last only 1 day! Could you help me?

What size battery are you using?

I suspect your ESP32 is not entering sleep.

Change your sleep duration and see if it makes any difference. Don’t forget the ESP32 uses microseconds as its sleep unit, so 30 mins is 30x60x1000000.

I use one 18650 3.7V 3000mAh, directly onboard.

The deep sleep seems to be working: using the serial connection or MQTT, I get values from the BME280 every 30 minutes as they appear on my Home Assistant installation (1800×1000000).

Could it be the BME280 draining current?

The BME280 will consume about 5mA if it’s a 5v version. There are two breakout boards generally available, one with a regulator (5v) and one without (3.3v); the BME280 itself takes about 8uA. So 3000/5 = 600 hours or about 25-days, so it’s not that. You should measure your sleep current, as many development boards take 22mA in sleep (3000/22 = 136 hours, about 6-days) and often more; that is most likely your problem. A Wemos D32 takes about 8uA in sleep; I have many running and giving that level of performance.
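These estimates all use the same back-of-envelope formula, sketched below. It assumes a constant average current and ignores self-discharge, regulator losses and the fact that not all of the rated capacity is usable; the function name is made up for illustration.

```cpp
// Estimated run time in days for a constant average current draw.
// Ignores self-discharge, regulator losses and usable-capacity derating.
constexpr double batteryLifeDays(double capacitymAh, double averagemA) {
    return capacitymAh / averagemA / 24.0;
}
```

For a 3000mAh cell, 5mA gives 600 hours (25 days), 22mA gives about 136 hours (under 6 days), and a battery that is flat after one day implies an average draw of around 125mA.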

Ok, it’s a development board.. but 1 day with deep sleep is too low…

What type of board?

I have two.

The first: https://www.amazon.it/gp/product/B077998XCN/ref=oh_aui_detailpage_o05_s00?ie=UTF8&psc=1

The last: https://it.aliexpress.com/item/ESP32-ESP-32S-For-WeMos-WiFi-Wireless-Bluetooth-Development-Board-CP2102-CP2104-Module-With-18650-Battery/1852360532.html?spm=a2g0s.9042311.0.0.16ed4c4dWaTjdq

All are “Wemos” wifi+bluetooth+battery

That board is your problem. That type also has an on board charge circuit and an LED too, this is why the consumption is so high in sleep. It’s not a Wemos board (visit the Wemos site to see their products), it’s a fake Wemos, there are many. The best board for low power is the Wemos D32, it takes a very low 8uA during sleep.

So your board is consuming ~ 3000mAHr/24 = 125mA, so that tells me it’s not going to sleep…

The correct command to initiate sleep on the ESP32 is:

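const uint64_t UpdateInterval = 30ULL * 60ULL * 1000000ULL; // 30 minutes in microseconds; the wake-up timer takes a 64-bit value

esp_sleep_enable_timer_wakeup(UpdateInterval); // Use the timer as the wake-up source

esp_deep_sleep_start(); // Sleep for e.g. 30 minutes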
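One thing to watch with the microsecond unit: `esp_sleep_enable_timer_wakeup()` takes a 64-bit microsecond count, but `unsigned long` is only 32 bits on the ESP32, so an interval computed in 32-bit arithmetic wraps around for anything much over an hour. A small sketch of a conversion helper that avoids this (the helper and constant names are hypothetical):

```cpp
#include <cstdint>

// Convert minutes to microseconds using 64-bit arithmetic throughout.
constexpr uint64_t minutesToMicros(uint32_t minutes) {
    return static_cast<uint64_t>(minutes) * 60ULL * 1000000ULL;
}

// Largest whole number of minutes whose microsecond count fits in 32 bits.
constexpr uint32_t kMax32BitMinutes = UINT32_MAX / (60UL * 1000000UL);
```

The 30-minute interval above (1,800,000,000 microseconds) fits either way, but a 2-hour sleep needs 7,200,000,000, which is beyond the 71-minute limit of a 32-bit count.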

Yes, this is the command… I’ve now changed my code, using yours (with MQTT changes), and I’ll report any improvement.

Thanks for your support

I just found this discussion. I have written some code for low power ESP8266. I am adding link here for feedback and further discussion.

https://github.com/happytm/BatteryNode

Thanks.

My goal in code above is to run remote ESP8266 node with BME280 & APDS9960 (or any light sensor) only using small LIR2450 coin cell battery charged by 53mm x 30 mm solar cell for life of battery or solar panel.

Please tell me why it is not possible ?

Thanks

Ken