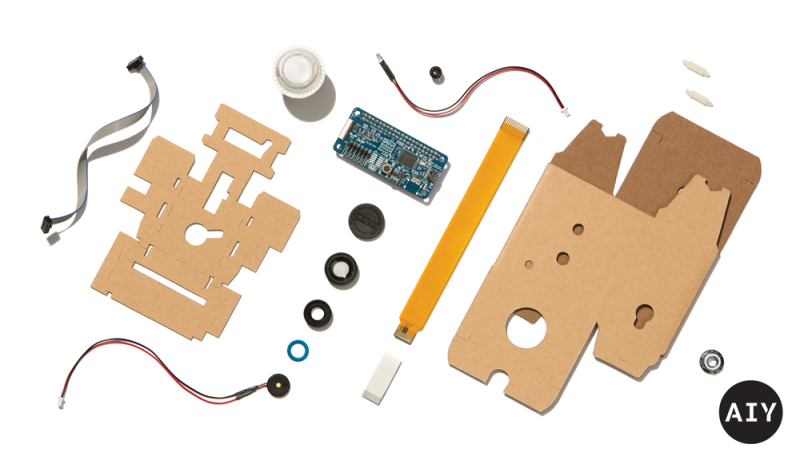

Google has announced its soon-to-be-available Vision Kit, the next easy-to-assemble product in its Artificial Intelligence Yourself (AIY) line. You’ll have to provide your own Raspberry Pi Zero W, but that’s okay, since what makes this kit special is the VisionBonnet board that Google does provide: essentially a low-power neural network accelerator board running TensorFlow.

The VisionBonnet is built around the Intel® Movidius™ Myriad 2 (aka MA2450) vision processing unit (VPU) chip. See the video below for an overview of the chip; in short, it allows rapid processing of compute-intensive neural networks. We don’t think you’d use it for training the neural nets, just for doing the inference, or in human terms, for making use of the trained neural nets. The kit may be worth getting for this board alone, to use in your own hacks. An alternative is Movidius’s Neural Compute Stick, which has the same chip on a USB stick for around $80, not quite double the Vision Kit’s $45 price tag.

The Vision Kit isn’t out yet, so we can’t be certain of the details, but based on the hardware it looks like you’ll point the camera at something, press a button, and it will speak. We’ve seen this before with this talking object recognizer on a Pi 3 (full disclosure: it was made by yours truly), but without hardware acceleration a single object recognition took around 10 seconds. With the Vision Kit we expect recognition to happen in real time, so it may be much more dynamic than that project. And in case it wasn’t clear, a key feature is that nothing is done on the cloud here, all processing is local.

The kit comes with three different applications: an object recognition one that can recognize up to 1000 different classes of objects, another that recognizes faces and their expressions, and a third that detects people, cats, and dogs. While you can get up to a lot of mischief with just that, you can run your own neural networks too. If you need a refresher on TensorFlow then check out our introduction. And be sure to check out the Myriad 2 VPU video below the break.

This is the second AIY kit that Google has released, the first being the Voice Kit, which we also covered. That kit inspired our own [Inderpreet Singh] to come out with his own equivalent voice kit within just a couple of weeks.

Here’s the Myriad 2 VPU video. For the meatier hardware overview, skip to around 2:25.

https://www.youtube.com/watch?v=hD3RYGJgH4A

Every day I come here and I’m amazed that you can achieve so much with so little hardware.

This is really just sort of like a thin client, though. It doesn’t actually train the neural net, which requires massive computing power that this device cannot provide. This hardware simply acts as a go-between: sort of a specialized camera plus some dedicated hardware to better handle basic image processing, if I am understanding this correctly.

Certainly an improvement over a camera that required 50% of your CPU just to use due to lack of hardware image processing.

Isn’t the computing power required relative to the resolution of the image, the required accuracy, and the time allowed to process the images?

Not entirely sure what context you are referencing but yes, it takes more computing power when you change those or other variables. It does depend on what you are trying to do and does not scale linearly.

For example, a 500×500 image is not going to take 5 times the resources as a 100×100 image to process. It will take 25 times (or more). Going to something like 8K resolution will take many, many times more resources. Vastly more if you then make it video at 60 or 90 FPS.
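The 25× figure is just the ratio of pixel counts, since scaling both sides by 5 multiplies the area by 5² = 25. Here is a small Python sketch of that arithmetic. It assumes the work grows in proportion to the number of pixels, which is roughly true for convolution layers with a fixed kernel size; algorithms that grow faster than that are where the "or more" comes in. Taking "8K" to mean 8K UHD (7680×4320) is an assumption.

```python
def pixel_count(width, height):
    """Number of pixels in one frame."""
    return width * height


def relative_cost(base, target):
    """Ratio of pixel counts between two (width, height) resolutions.

    Assumes work grows in proportion to the number of pixels; some
    algorithms grow faster than this, so treat it as a lower bound.
    """
    return pixel_count(*target) / pixel_count(*base)


def pixels_per_second(width, height, fps):
    """Pixel throughput needed to process video at a given frame rate."""
    return pixel_count(width, height) * fps


if __name__ == "__main__":
    print(relative_cost((100, 100), (500, 500)))  # 25.0, not 5.0
    eight_k = (7680, 4320)  # 8K UHD, an assumed definition of "8K"
    print(relative_cost((100, 100), eight_k))
    for fps in (60, 90):
        print(fps, pixels_per_second(*eight_k, fps))
```

At 60 FPS that works out to nearly two billion pixels every second before any neural network layers are even counted.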

Greater accuracy, in terms of how many layers of processing are used, also requires more resources, and that doesn’t scale linearly either. But it appears that none of that is going to be handled by this hardware anyway.

Yeah, I’m thinking it’s going to work like ‘Google Goggles’. The Pi may do some image processing, but the heavy lifting, such as image/object recognition, is done on their servers.

According to TFA, all processing is local, using pre-trained TensorFlow nets.

So it should be a lot more private than Google Glass/Goggles.

“a key feature is that nothing is done on the cloud here, all processing is local.”

Does that mean that it doesn’t communicate with the cloud at all, or that common, pre-“clouded” items have already been “hashed” and are present on the device, but only those items? It’s really light on the details.

This looks purrrrfect for my Weedinator project: https://hackaday.io/project/21744-weedinator

“We don’t think you’d use it for training the neural nets, just for doing the inference, or in human terms, for making use of the trained neural nets.”

So what would you use for training the nets?

My guess is that the expected way to train would be Google-branded AI cloud computing software on Google-branded AI cloud computing hardware, all on the Google-branded AI cloud platform.

That depends on how big your neural network and your training dataset are. You can do a lot with a computer with an NVIDIA GPU. A friend of mine replicated this self-driving car neural network, https://images.nvidia.com/content/tegra/automotive/images/2016/solutions/pdf/end-to-end-dl-using-px.pdf, using a game simulator for the visual input of the road. I don’t recall exactly, but she said that training time went from weeks to hours when she switched to a computer with an NVIDIA GTX 1070 GPU.

Google’s Inception neural network, on the other hand, requires the amount of hardware you’d find from cloud computing.

That isn’t the case. You can train TensorFlow models on an Intel CPU, an AMD CPU, or an NVIDIA GPU. They will all work. The fact that some researchers (and probably not you) can use TPUs on Google Cloud to train TensorFlow models does not a conspiracy make.

Neat! I haven’t explored this product much at all yet. Is this VisionBonnet board considered fully open source?

Intel-owned ASIC => No.

In fact, I looked into this, and one company that makes boards using the same chip wasn’t even sure it could make its own reference design open source, let alone the Intel-supplied one.

1. Does this HAT use the entire GPIO header? [Y/n] =(

2. The VPU video only stated that it does shared-memory operations and has a graph-based pipeline interface.

Most of the audience that will like the marketing-video will be incredibly disappointed with a half-documented software stack…

How about some simple examples for:

* Speeded-Up Robust Features (SURF) matching [y/N] (a licensed D. Lowe SIFT module would be useful)

* Kanade-Lucas-Tomasi Feature Tracker [y/N]

* Haar-like feature matching [y/N] (CUDA version exists in OpenCV)

* Template matching [?]

Current documentation implies (but doesn’t necessarily guarantee):

* Optical flow [Y]

* Homography Estimation [Y]

* Stereopsis [Y] (CUDA version also exists in OpenCV, and high frame rates are indeed very costly operations)

* Corner detection [Y]

Does anyone have a better feature-specs list for what this thing actually does today, and how it compares to a Tegra with OpenCV?

=)

Considering the kit will be around $60 after adding the Pi Zero W and SD card while a Shield TV is $150 not including a camera, it’s in a different price range.

It uses a subset of neural networks called MobileNets, optimized to run locally on mobile and embedded systems. See more details in: https://arxiv.org/abs/1704.04861
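The main trick in MobileNets, as described in the linked paper, is factoring a standard convolution into a depthwise convolution (one small filter per input channel) followed by a 1×1 pointwise convolution. For a D_K×D_K kernel, M input channels, N output channels and a D_F×D_F output map, that cuts the multiply-add count by a factor of 1/N + 1/D_K², roughly 8 to 9 times fewer operations for 3×3 kernels. The Python sketch below only does that arithmetic; the layer dimensions are illustrative assumptions, not figures from the Vision Kit's bundled models.

```python
import math


def standard_conv_cost(dk, m, n, df):
    """Multiply-adds for a standard convolution: a dk x dk kernel, m input
    channels, n output channels, and a df x df output feature map."""
    return dk * dk * m * n * df * df


def depthwise_separable_cost(dk, m, n, df):
    """Multiply-adds for a depthwise dk x dk convolution (one filter per
    input channel) followed by a 1x1 pointwise convolution to n channels."""
    depthwise = dk * dk * m * df * df
    pointwise = m * n * df * df
    return depthwise + pointwise


if __name__ == "__main__":
    # Illustrative layer size: 3x3 kernel, 512 -> 512 channels, 14x14 map.
    dk, m, n, df = 3, 512, 512, 14
    std = standard_conv_cost(dk, m, n, df)
    sep = depthwise_separable_cost(dk, m, n, df)
    print(std, sep, round(std / sep, 2))
    # The ratio matches the closed form 1/N + 1/Dk^2.
    print(math.isclose(sep / std, 1 / n + 1 / dk**2))  # True
```

Savings like this are a large part of why networks of this kind are practical on a low-power board rather than only on a desktop GPU.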

The VisionBonnet uses an Intel Movidius Myriad 2 VPU.

If the software interface to this thing is any good this is a game changer.

Does this work stand-alone or does it require a connection to Google to work? I don’t mind downloading inference models but I certainly don’t want Google “spying” on me. I am looking at building an AI engine based on the Nvidia Jetson module for training and want something like this to provide a distributed inference system using the models created on the Jetson based server. My total focus is on distributed, localized systems which do not rely on cloud based servers. So, could I run inference models developed on a Jetson on this device?

Anyone else triggered by the Kickstarter xylophone music? Ugh.

It’s the Kickstarter “hum”. It can be a sign of normal operation, like the hum of older TVs, or a red flag that marketing had too heavy a hand in making the product and that it lacks some desirable features (just like your car or a power supply making a weird hum).

Yes, I hate it as well.

It’s an awful but well-executed tune that lets people voice over their marketing bullshit while distracting viewers enough that they ignore the gaps in logic and don’t have time to think.

MicroCenter website says “this item is no longer available” when checking availability on the AIY kit. It was supposed to release several days ago. I wonder what the rollout plan is now.

It was announced around the beginning of December, and people could pre-order it then. My guess is that its lack of availability is because a lot of people ordered it early and it’s out of stock. Hopefully they’ll be making more. I just did Google and YouTube searches and didn’t see anyone showing that they’ve received it, but possibly the kits are still in the mail.

You might be right, Steven. I do recall one angle of their availability was that buyers would have to do in-store pickup, so I would think some of those pre-orders would have resulted in some people having them in-hand by this point if the kits had actually shipped on schedule. I am also not seeing online accounts of people having received the kits yet.

I pre-ordered one and got it the 29th of December.

First video of one in the wild:

https://www.youtube.com/watch?v=GqQajvZq08E

Thanks. With that recognition speed there’s definitely hardware support (as we expected there would be). It’d be much slower if the processing was being done with just the Pi Zero W’s CPU.

Does anyone know if the VisionBonnet board supports only speeding up neural networks (TensorFlow only?) or does it also improve OpenCV calculation time on a RPi-Zero?

Target has the Vision and Voice Kits (both include the Pi Zero WH) in stores: $90 for the Vision Kit, $45 for the Voice Kit. Check their site for local availability.