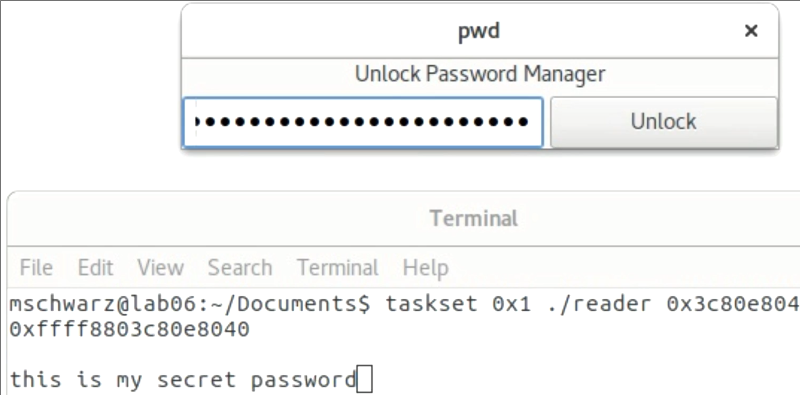

If you’ve read about Meltdown, you might have thought, “how likely is that to actually happen?” You can more easily judge for yourself by looking at the code available on GitHub. The Linux software is just proof of concept, but it both shows what could happen and — in a way — illustrates some of the difficulties in making this work. There are also two videos in the repository that show spying on password input and dumping physical memory.

The interesting thing is that a lot of things will stop the demos from working: for example, a slow CPU, a CPU without out-of-order execution, or an imprecise high-resolution timer. This is apparently especially problematic in virtual machines.
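To get a feel for the timer point, here is a minimal sketch that estimates the smallest step a high-resolution clock actually reports. It uses Python's `time.perf_counter_ns` purely for convenience; the demos themselves are C and assembly and do their own timing, so treat this as an analogy and not as what libkdump does. The function name `smallest_timer_step` is made up for this illustration.

```python
import time


def smallest_timer_step(samples: int = 100_000) -> int:
    """Return the smallest non-zero gap (in ns) seen between consecutive clock reads."""
    best = None
    prev = time.perf_counter_ns()
    seen = 0
    # Keep sampling until we have taken `samples` readings AND seen the clock advance.
    while seen < samples or best is None:
        now = time.perf_counter_ns()
        delta = now - prev
        if delta > 0 and (best is None or delta < best):
            best = delta
        prev = now
        seen += 1
    return best


if __name__ == "__main__":
    print(f"smallest observed timer step: {smallest_timer_step()} ns")
```

A timing attack of this kind has to tell a cached memory access from an uncached one, so a clock whose smallest step is large compared with that difference leaves little to work with.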

There are five tests, including one that just reads ordinary memory that you can read anyway. This is a test to show that the library is working. After that, another demo — which requires root — dumps out the kernel address space mapping, which is normally secret and changes on each boot. Although this requires root, the authors claim it is possible to do without root, it just takes longer.

Because of the nature of the hack, data may not be read correctly every time. One of the demos measures the reliability of reading using the Meltdown method. The example shows a 99.93% success rate. There is also a pair of programs: the first stuffs a human-readable string into memory and the second finds it. Finally, a program that just dumps random memory finishes out the demos.
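A reliability figure like that can be thought of as the fraction of reads that return the expected value. The sketch below shows only that bookkeeping. It does not perform any Meltdown read: `noisy_read` is a hypothetical stand-in that simulates an unreliable channel, and the error rate and seed are arbitrary assumptions.

```python
import random


def noisy_read(secret: bytes, index: int, error_rate: float, rng: random.Random) -> int:
    """Simulated unreliable read: sometimes returns a random byte instead of the real one."""
    if rng.random() < error_rate:
        return rng.randrange(256)  # wrong guess (may occasionally match by chance)
    return secret[index]


def success_rate(secret: bytes, trials: int, error_rate: float, seed: int = 0) -> float:
    """Fraction of simulated reads that match the known value."""
    rng = random.Random(seed)
    hits = 0
    for t in range(trials):
        i = t % len(secret)
        if noisy_read(secret, i, error_rate, rng) == secret[i]:
            hits += 1
    return hits / trials


if __name__ == "__main__":
    rate = success_rate(b"known test string", trials=100_000, error_rate=0.001)
    print(f"success rate: {rate:.2%}")
```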

The real work is done in libkdump which is less than 500 lines of C code. Well — actually, it is a good bit of assembly embedded in the C file. There are a lot of things that will stop the code from working, but you can imagine that some of the code could be improved, too.

There’s definitely risk. On the other hand, it isn’t exactly a magic window into every computer, either. One nice thing about having this suite is you could use it to test mitigation strategies. It might not be perfect — you could do something to cause these programs to not work, but still leave a door open for some modified algorithm. However, it would be at least one data point.

We’ve been talking a lot about Meltdown in the Hackaday secret bunker. This may increase the value of our Raspberry Pi boxes. Or maybe we will find that crate of 486s we’ve been saving for just such an eventuality.

“Although this requires root, the authors claim it is possible to do without root, it just takes longer.”

I’m sorry but that makes no sense, if it uses the exploit that crosses security then why would root matter? And if it matters then how do we know this code would work without it? You can’t test or prove crossing security with a program requiring root.

Well, if they were to show “how root can be bypassed” along with all the other info, that exploit alone could cause a lot of damage by script kiddies.

I think they are just being wise to not show how to do that.

Excellent point, but that doesn’t take away that we have to trust his ‘this would work without root too’

And we don’t live in a world and time where the term ‘trust me’ from strangers works anymore.

Usually “Trust me” is followed by someone saying “don’t worry about it”. In this case, he’s saying “worry about it”. That would give some credence to it.

I’m finding the fetish for “not good enough to be affected” that’s prevalent in both the articles and comments on this subject to be immensely baffling.

Good system administrators from the Sun era are known for the #1 security+performance guideline of minimizing both structural and algorithmic complexity. If you study CPUs, then you will know why the old guys are laughing at the cloud-based-services generation that has been shilling consumer grade hardware for 15 years.

For me, the meme has special meaning, as the mountain of loud corporate bullshit starts to burn. At this time – one must admit it is pretty funny — considering the $25 Pi3 is more secure than a $3000 workstation.

I am wagering we will soon see a worm that pulls root privileges, SSL private keys, and PGP signing keys.

The kids are all about to be taken to school… I am investing in popcorn…

LOL ;-)

Saying the Raspberry Pi 3 is more secure than a workstation is as shortsighted as anything you accuse the industry of. It may be impervious to the vulnerabilities as we understand them today, but it might very well be vulnerable to a variant. Even if that’s not the case, it will certainly have other issues.

It’s like saying your bicycle is more secure because they can’t crack the electronic lock.

But I’ll second the Pi claim… who knows what those binary blobs on the tightly-coupled and completely undocumented GPU are doing… where’d they come from, anyhow? Who needs a vulnerability when you build in a back-door?

Many processors and systems on a chip have hardware back doors let alone the software. The security agencies must be keeping a close eye on all this.

That’s kinda the irony, isn’t it…?

Look at the 8088/8086, then the 286 and 386… they didn’t really improve processing speed measurably at all… all they did was throw a bunch of more complex stuff in the mix, running at nearly the same speeds.

So… yeah… we could have thousand-core *well understood* 8088’s running at ghz speeds these days… or sixteen-core monoliths with star-destroyer-level vulnerabilities…

the star destroyer thing definitely convinced me :D

Diminishing returns from adding more processors is well known. 990 of your 8088s would be operating as room heaters, assuming you’re not running a server with lots of clients. Then it’d be 950. Even then, good luck managing memory consistency.

Modern CPUs do, off the top of my head, a couple of dozen times more stuff per cycle than an 8088 did. That’s the fairly sensible reason we don’t use them.

>Look at the 8088/8086, then the 286 and 386… they didn’t really improve processing speed measurably at all

yes, they only doubled performance with every version, nothing like today’s 2% per processor generation ….

Actually, early on they were making massive jumps in CPU performance. An 80386 is massively faster than an 8088, which is crazy when you think only 6 years separated them.

Not really. A server is a server regardless of who owns it. Their attack surface is bigger because they invited all of us in. But as noted those demos break easier in virtual machines so that should provide clues in breaking it some more.

Yeh… you inspired me. I just fired up my Sun SS20, almost 30 years old, and it booted Solaris 9 with no issue on 4x HyperSPARC CPUs @ 100MHz. I had to sign an agreement (not to export it to Iran/Iraq etc.) before I could import it back in the day.

I miss this quality today.

Yah I dug my old Mac IIfx out of the closet and it booted after tracking down a new pram battery.

The $25 Pi3 has its own share of exploitable code in form of binary blobs, as many other SBCs out there.

In other words work like this is an early step on how to lock this backdoor without impairing the performance of all the affected computers.

nice -n19 echo “Ha Ha”

The backdoor can’t be locked on the chips that are affected – it’s hardwired.

The backdoor is trivial to avoid in future designs with insignificant performance impact (if any).

Now “spectre” is a hard thing to crack without affecting performance a bit: adding metadata to track owners of branch predictor state isn’t practical (more data in critical part = slower), context switching the state isn’t possible without a hard hit (more wires to the critical part = slower) and flushing the branch state would also cause a lot of extra branch mispredicts.

And lastly the actual mechanism for leaking data isn’t really possible to remove – caches are needed and some level of sharing is required.

No virus on my ZX Spectrum. :) Although it’s taken me two days to type this. Ha ha….

yes, zx spectrum forever :) no but seriously, if you look at how little you get at what price, then the spectrum was much better, all this software bloat and all these constant security risks and the stupid anti-coders making the world a way worse place than it was before…

Agreed. How many Z80 chips can you fit on an i7 die. Let alone a server processor. Subtract some for networking. That’s still a lot of cores.

Drill some holes. Pump liquid nitrogen through the chip.

awesome idea :) Transistor-wise you can place 1170000000 / 8500 = 137647 Z80 CPUs on an i7 die. To give this number a meaning, we can take the square root of it, which is 371, so if we take the modern 8K resolution (7680×4320), then one Z80 CPU only needs to take care of a 21 pixel x 12 pixel screen fragment. As for the hardware problem, luckily there is still hope, for example Chuck Moore’s Forth CPU ( http://www.greenarraychips.com ) or the new CPU from Mill Computing ( https://millcomputing.com ). As for the software side of it, the first step would be to discard the object oriented programming model, the second will be to give the young programmers a proper education from “old” programmers…
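The arithmetic in that comment can be checked in a few lines. This sketch takes the comment's own transistor counts (1170000000 for the i7 die, 8500 for a Z80) at face value and assumes the cores are laid out as a square grid, one per screen tile.

```python
import math

I7_TRANSISTORS = 1_170_000_000  # figure quoted in the comment
Z80_TRANSISTORS = 8_500         # figure quoted in the comment

# How many whole Z80s fit in the same transistor budget.
cores = I7_TRANSISTORS // Z80_TRANSISTORS

# Side length of a square grid holding that many cores.
side = math.isqrt(cores)

# Size of the 8K screen tile each core would own.
tile_w = 7680 / side
tile_h = 4320 / side

if __name__ == "__main__":
    print(f"cores: {cores}, grid side: {side}")
    print(f"tile per core: {tile_w:.1f} x {tile_h:.1f} pixels")
```

The tile comes out slightly under 21 x 12 pixels, so the comment's figure is the rounded value.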

” the first step would be to discard the object oriented programming model”

That’s just throwing the baby out with the bathwater.

I used to think that people who wanted to throw out object orientation were either unintelligent or insane. Object orientation when used right is the ONLY way to write code that is maintainable and reusable.

That can be accomplished quite well with simple classes, methods and inheritance. For some reason that simple concept has been wrecked with the addition of namespaces which might have been ok if they were limited to something simple like maybe a company name or something. Instead they have turned into 10 mile long strings that look like early 90s gopher urls copied out of some old newsgroup postings.

Eliminate or limit the complexity of namespaces and teach the new kids how to do object orientation right and we can achieve programming utopia.

Parallel programming is hard. Let me repeat. Parallel programming is really hard!

Sure.. you could take some old, relatively simple design and turn it in to a gazillion core beast. In theory I guess you would have a significant amount of processing power. But… with the exception of maybe doing some sort of scientific number crunching of a sort that just happens to lend itself to parallelism you would never see that power in action.

What you would see, and this is if you did a really good job on the design is maybe 4 or 5 cores (and I am being generous here) working at 100% giving all the speed you would expect out of 4 or 5 Z80s. The rest of the cores would never leave the idle loop.

Oh.. and security… hah! The difficulty of writing secure code increases exponentially with parallelism as well. You would probably be trading a bad hardware bug for 1000 software ones.
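One common way to put numbers on the idle-cores argument is Amdahl's law, which the comment above does not name. It bounds the speedup from n cores when only a fraction p of the work can run in parallel. The value p = 0.8 below is purely an illustrative assumption, and the function name `amdahl_speedup` is invented for this sketch.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Upper bound on speedup when `parallel_fraction` of the work scales across `cores`."""
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


if __name__ == "__main__":
    p = 0.8  # assumed share of the workload that parallelizes
    for n in (1, 4, 16, 1000, 137647):
        print(f"{n:>6} cores -> at most {amdahl_speedup(p, n):.2f}x")
```

With 80% of the work parallel, the bound never reaches 5x no matter how many cores are added, which lines up with the "4 or 5 cores" estimate. A workload with a larger parallel share would do better.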

“Sure.. you could take some old, relatively simple design and turn it in to a gazillion core beast.”

They already did. It’s called a GPU. Fifty bucks gets you a passively cooled card with 384 of these little cores on it and a couple gigs of RAM.

So why didn’t my OS and web browser go any faster when I added one? /s

Hyperthreading.

@gregkenedy “So why didn’t my OS and web browser go any faster when I added one? /s”

Did you even read the rest of the comment you partially quoted?

If you cannot see the problem with today’s computing environment, here are some metrics for you: right now it takes 20000 times as much RAM to load a web page and read it for about 10 minutes (maximum) as it takes to load one ZX Spectrum game and play it for many hours :)

So?

Write a webpage to contain nothing but text and ASCII art.

Install an OS that isn’t meant to facilitate multitasking or any of the multimedia stuff we have baked into everything we do today.

Set your display to a text only mode with a similar number of rows/columns as your Spectrum had. (if you even can)

Now go view that webpage.

Shoot... we are still pretty far over on RAM. It turns out that being networked to the whole freaking planet is actually rather complex. Burn the HTML of that webpage onto a ROM chip and then pull out all the networking support.

Hey, look! You got your memory usage down to 1980s levels. That’s what you wanted right? Aren’t you so happy with your new microcomputer? It’s soooo bloody useful!

Yay You!

Ahh my cassettes were impervious to anything but the Friday Rock Show with Tommy Vance :)

One of the suggestions I’ve seen for Spectre mitigation is the introduction of a “high-security” branch instruction which acts as a barrier and prevents speculation beyond evaluation of the branch. That way, kernel level code interacting with user code can appropriately do its security checks without unintended side-effects on the cache/processor state.

It is something of a hack as it requires both a microcode update and modifications to the OS (and judicious use by the programmer on all cross-privilege level calls), but it should mostly clean-up the vulnerability, taking the performance hit only where required. There might be a cleaner, more clever fix in there somewhere involving the layout and use of cache, but I haven’t seen anything yet.

How would that help? Sure if all sensitive checks are using the new instruction it could work. But that means we need new software, it means we need all software doing any type of sensitive check patched. Even user space code.

Every single program that does any kind of sensitive check would have to be rewritten manually with new compiler support and every check tagged so that the new type of branch is used.

Might as well bring back Itanium if we’re going to do that.

hackaday secret bunker confirmed

now opensource all but its location

I know the location, it’s in the basement at 2nd Street Southwest, on the 400 block of….

[carrier lost]

really very confirmed now

The linked code all requires machine access before it can run. Are there any examples of how Javascript in a random web page could be used to launch a Meltdown/Spectre attack? Correction – I know there is one somewhere, haven’t looked at it yet, but I’m interested in others’ opinions about how effective and probable a Javascript attack could be.

For meltdown? That _should_ be pretty hard as javascript code shouldn’t be able to trigger an exception usable in this way. Spectre is another thing…

I am not impressed. A privilege escalation vulnerability test run as root is not convincing. I also agree that it makes no sense that running this as non-root would “take longer”. I would like to see more about how well these exploits work on virtual machines with their non-definitive timing.

My test machine running Ubuntu 16.04 has been updated to kernel version 4.4.0-109, and I’ll be jiggered, but the dang thing is working faster and smoother than ever before. Maybe there is some light at the end of the tunnel.

So they already fixed the issue from this?

https://news.slashdot.org/story/18/01/10/1634215/meltdown-and-spectre-patches-bricking-ubuntu-1604-computers

I’ve been afraid to update myself. No slowdown?

Probably relevant, but I discovered a similar bug on the Core 2 Duo running on an Aspire 5620.

Tried to document it but lacked the programming skills to figure out what went wrong and eventually the machine inexplicably failed one day due to apparent BIOS corruption.

Coincidentally every other part used in the machine reused later caused problems including the LCD, HDD and RAM.

It looks like the fault was somewhere in one of the MMX instructions because it only ever happened when usage was quite high (ie cloning drives, etc) and I could reliably duplicate it by running very specific applications in the right sequence.

I am running another C2D system though which as yet seems to be unaffected.

Did notice a lot of drive failures, faulty pendrives etc and even two previously working memory cards just failed with little or no warning suggesting a problem with chipset or optical drive spinup sending spikes back into the 5V line.

Maybe related to earlier notes as the BIOS “press key to continue” only happened just after I’d transferred the HDD to 5620 for data recovery with a spacing of maybe 2 days.