By now you’ve almost certainly heard about the recent release of a high-resolution satellite image showing the aftermath of Iran’s failed attempt to launch its Safir liquid-fuel rocket. The geopolitical ramifications of Iran developing this type of ballistic missile technology are certainly a newsworthy story in their own right, but in this case, there’s been far more interest in how the picture was taken. Given known variables such as the time and date of the incident and the location of the launch pad, analysts have determined it was likely taken by a classified American KH-11 satellite.

The image is certainly striking, showing a level of detail that far exceeds what’s available through any of the space observation services we as civilians have access to. Estimated to have been taken from a distance of approximately 382 km, the image appears to have a resolution of at least ten centimeters per pixel. Given that the orbit of the satellite in question dips as low as 270 km on its closest approach to the Earth’s surface, it’s likely that the maximum resolution is even higher.

Of course, there are many aspects of the KH-11 satellites that remain highly classified, especially with regard to the latest hardware revisions. But their existence and general design have been common knowledge for decades. Images taken by earlier-generation KH-11 satellites were leaked or otherwise released in the 1980s and 1990s, and while the Iranian image is certainly of higher fidelity, this is not wholly surprising given the intervening decades.

What we know far less about are the orbital surveillance assets that supersede the KH-11. The satellite that took this image, known by its designation USA 224, has been in orbit since 2011. The National Reconnaissance Office (NRO) has launched a number of newer spacecraft since then, with several more slated to be lifted into orbit between now and 2021.

So let’s take a closer look at the KH-11 series of reconnaissance satellites, and compare it to what we can piece together about what the next generation of orbital espionage technology, already circling overhead, might be capable of.

Secret Agent Hubble

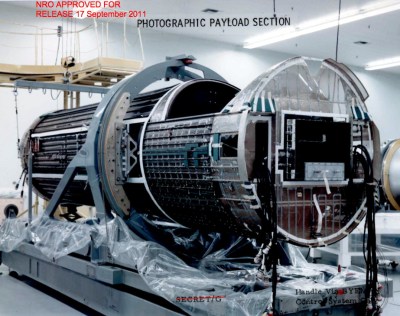

The KH-11 “KENNEN” satellite was designed as a replacement for the film-based KH-9 “HEXAGON” satellites developed in the 1960s. Recovering images from these older satellites required the use of small capsules that would re-enter the Earth’s atmosphere and get caught in mid-air by a waiting aircraft; a slow, complex, and expensive process. In comparison, the KH-11’s digital technology meant that images could be transmitted through a network of communications satellites in near-real time.

But despite the first KH-11 being launched all the way back in 1976, there has never been a publicly released image of one. Luckily, analysts have a fairly good idea what the satellites look like because they happen to have a very famous cousin: the Hubble Space Telescope. Both space-going Cassegrain reflector telescopes were built by Lockheed, and according to official NASA records, some of the design elements of the Hubble (such as the 2.4 meter diameter of the main mirror) were selected to “lessen fabrication costs by using manufacturing technologies developed for military spy satellites”.

The family resemblance isn’t just speculation either. In 2010 and again in 2015, astrophotographer Ralf Vandebergh was able to directly image two separate KH-11 satellites using essentially hobbyist-grade equipment. Despite his relatively low-tech approach, he was able to snap shots of these secretive spacecraft that appear to confirm suspicions that their design closely resembles the Hubble.

In the pixelated images, we can see the same tapered shape and what appears to be an aperture door at the end of the telescope. The KH-11 also features at least one solar “wing” like the Hubble, and potentially a directional antenna array of some type on the opposite side, though Ralf does say this could simply be a trick of the light.

In terms of size, the KH-11 is almost certainly the same diameter as the Hubble due to the shared mirror, but rumors suggest that it’s not as long. A shorter focal length would give the KH-11 a wider field of view than the Hubble, which would be better suited to observing the ground.

As Good as It Gets

If one man with consumer equipment could identify, track, and photograph two KH-11 satellites, it stands to reason that the intelligence agencies of other countries have managed similar feats. The idea that any technologically advanced nation would be caught unawares by the presence of one of these satellites overhead seems unlikely at best.

Of course, knowing they’re up there isn’t the same as knowing what they can actually see. But as it turns out, that isn’t a difficult question to answer either. Calculating the angular resolution of a telescope can be done using the Rayleigh criterion, which takes into account the wavelength to be observed and the diameter of the telescope’s aperture. That angular resolution, when combined with the altitude of the satellite at the time of observation, can tell us how large an object needs to be before an optical telescope like the KH-11 can actually see it from space.

With a 2.4 m mirror observing a nominal wavelength of 500 nm, the Rayleigh criterion tells us a telescope should have a diffraction-limited resolution of around 0.05 arcsec. At an altitude of 250 km, that translates to a surface resolution of approximately 6 cm (2.4 inches). Keep in mind this is a theoretical maximum, in practice the resolution will be less due to atmospheric instability and the fact the satellite is unlikely to be directly above the target. With an estimated resolution of 10 cm, the Iranian image is therefore well within the calculated performance envelope of the KH-11.
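The numbers above are easy to reproduce. The following is a minimal sketch assuming a 2.4 m aperture, 500 nm light, and the small-angle approximation, with the Rayleigh criterion written as θ = 1.22 λ / D. The `slant_range_m` helper is a hypothetical flat-Earth simplification (no Earth curvature, no atmosphere) included only to show why a target that isn’t directly below the satellite is seen at a coarser resolution.

```python
import math

RAD_TO_ARCSEC = 180.0 / math.pi * 3600.0


def rayleigh_angle_rad(wavelength_m: float, aperture_m: float) -> float:
    """Diffraction-limited angular resolution, theta = 1.22 * lambda / D."""
    return 1.22 * wavelength_m / aperture_m


def ground_resolution_m(wavelength_m: float, aperture_m: float, range_m: float) -> float:
    """Smallest resolvable separation at a given distance (small-angle approximation)."""
    return range_m * rayleigh_angle_rad(wavelength_m, aperture_m)


def slant_range_m(altitude_m: float, off_nadir_deg: float) -> float:
    """Hypothetical flat-Earth line-of-sight distance to a target viewed off-nadir."""
    return altitude_m / math.cos(math.radians(off_nadir_deg))


D = 2.4       # mirror diameter in metres (shared with Hubble)
LAM = 500e-9  # nominal visible wavelength in metres

theta = rayleigh_angle_rad(LAM, D)
print(f"angular resolution: {theta * RAD_TO_ARCSEC:.3f} arcsec")
for r_km in (250, 382):
    res_cm = ground_resolution_m(LAM, D, r_km * 1e3) * 100
    print(f"{r_km} km -> {res_cm:.1f} cm")
```

Plugging in the 382 km distance quoted for the Iranian image gives just under 10 cm, consistent with the estimate above. Viewing a target 30 degrees off-nadir from 250 km, `ground_resolution_m(LAM, D, slant_range_m(250e3, 30))`, stretches the line of sight to roughly 289 km and the resolution to about 7.3 cm, before any atmospheric losses.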

Again, it would not have been difficult for any potential adversary to run the numbers and realize what the KH-11 would be capable of seeing. Especially since the United States has been running surveillance satellites at this physical resolution limit for more than 50 years. The KH-8 “GAMBIT”, a film-based surveillance satellite first launched in 1966, was also capable of resolving objects at 5 to 10 cm under ideal conditions.

The Next Generation

It might seem strange that a US surveillance satellite from 1966 would have comparable resolution to the ones flying around in 2019, especially with how much technology has changed since then. But ultimately, these are large optical telescopes, and the physics that govern their performance were figured out long before anyone ever dreamed of sending one of them to space. The rest of the spacecraft surrounding the telescope has certainly evolved since the 1960s, with improved propulsion, data throughput, energy consumption, and endurance; but a 2.4 m mirror is going to work the same today as it did 50 or even 100 years ago.

If the capabilities of optical telescopes have hit the physical limit, then where do we go from here? The most obvious way to wring more performance out of these satellites is through the use of image enhancement software. Thanks to the leaps and bounds computing performance has made over the last decade, the images from the telescope can be sharpened and cleaned up digitally. This is potentially why the Iranian image looks clearer than KH-11 shots released in the 1990s, even though the actual resolution of the telescope has not fundamentally changed.

Beyond that, it’s believed newer reconnaissance satellites, such as NROL-71 that was launched in January 2019, may augment or completely replace their optical telescopes with other sensing technologies such as synthetic-aperture radar (SAR). A radar imaging satellite has many advantages over an optical one, such as the ability to observe the target at night and in poor weather. In laboratory settings, SAR has achieved sub-millimeter resolution, and while the real-world accuracy would surely be lower when viewing the target from hundreds of kilometers away, it has the potential to move orbital surveillance beyond the physical limits that have been in place since the very first “spy satellites” took to the skies during the Cold War.
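Part of why radar can sidestep the optical limit is that, to first order, SAR resolution does not scale with distance the way the Rayleigh limit does. This sketch uses two textbook approximations: slant-range resolution c / (2B), set by the transmitted bandwidth B, and classic stripmap azimuth resolution of roughly half the physical antenna length. The bandwidth and antenna values are illustrative assumptions, not specifications of NROL-71 or any other real satellite.

```python
C = 299_792_458.0  # speed of light, m/s


def sar_range_resolution_m(bandwidth_hz: float) -> float:
    """Slant-range resolution of a pulse-compressed radar: c / (2B)."""
    return C / (2.0 * bandwidth_hz)


def stripmap_azimuth_resolution_m(antenna_length_m: float) -> float:
    """Classic stripmap SAR along-track resolution: about half the antenna length."""
    return antenna_length_m / 2.0


# Hypothetical example values only.
for bw in (300e6, 1.2e9):
    res_cm = sar_range_resolution_m(bw) * 100
    print(f"{bw / 1e6:.0f} MHz bandwidth -> {res_cm:.1f} cm range resolution")
print(f"1 m antenna -> {stripmap_azimuth_resolution_m(1.0):.2f} m azimuth resolution")
```

Note that neither function takes the distance to the target as an input; in this simplified model, flying higher does not blur a radar image the way it blurs an optical one.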

“Keep in mind this is a theoretical maximum, in practice the resolution will be less due to atmospheric instability and the fact the satellite is unlikely to be directly above the target.”

Using some of the techniques astronomers use to correct for atmospheric variability.

“A radar imaging satellite has many advantages over an optical one, such as the ability to observe the target at night and in poor weather.”

Active vs passive, the former being detectable from Earth.

“…detectable…” or not https://hackaday.com/2019/08/26/quantum-radar-hides-in-plain-sight/

Astronomers use known point sources – stars – for adaptive optics and image correction, and they also know the image is static and the atmosphere is changing. Not nearly as easy to do when looking at the ground.

Laser Guide Star pointed in the other direction.

https://en.wikipedia.org/wiki/Laser_guide_star

Multiplexing.

https://www.laserfocusworld.com/optics/article/16549652/adaptive-optics-turbulent-surveillanceor-how-to-see-a-kalashnikov-from-a-safe-distance

Sensor Fusion.

https://en.wikipedia.org/wiki/Sensor_fusion

https://medium.com/@cotra.marko/wtf-is-sensor-fusion-part-1-laying-the-mathematical-foundation-89e2d304e23e

Funny, we were talking about laser guide star here at Hackaday HQ. Won’t you be blinding people on the ground?

Rayleigh beacons may not blind anyone, and as for sodium beacons, the effect may be pretty close to the satellite.

> the image is static and the atmosphere is changing. Not nearly as easy to do when looking at the ground.

Err… FYI the ground is static too.

Super-resolution is the name of any technique to go beyond the physical limitations of a sensor: https://en.m.wikipedia.org/wiki/Super-resolution_imaging

The new tech we have is the ability to work on high-frequency video instead of just single still photographs. This is probably made easier by the reasonably precise/predictable motion of a satellite.

You’re not going “beyond physical limitations,” you’re going beyond Rayleigh. The Rayleigh criterion is simple to understand: when you have a point source and an aperture, it’ll create diffraction rings around each point source. The Rayleigh criterion is when the first null of one point source falls at the maximum of the other.

It’s an arbitrary definition of resolution. Even at the Rayleigh limit, objects are obviously blurring into each other, and detecting objects closer together is just a question of how precise your imaging is. Rayleigh’s criterion results in a 20% dip between objects: obviously a 10 or even 5% dip is still easy to measure. So superresolution techniques are nothing new or special.
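The size of that dip is easy to check numerically. This sketch assumes a one-dimensional slit aperture, whose intensity pattern is (sin u / u)² with its first null at u = π, and two equally bright, incoherent point sources, so their intensities simply add. A circular aperture’s Airy pattern gives a somewhat deeper dip than the slit case shown here.

```python
import math


def slit_intensity(u: float) -> float:
    """Normalised single-slit diffraction pattern (sin u / u)^2, equal to 1 at u = 0."""
    return 1.0 if u == 0.0 else (math.sin(u) / u) ** 2


def midpoint_dip(separation: float = math.pi, samples: int = 3001) -> float:
    """Fractional intensity dip midway between two equal incoherent point sources.

    The default separation of pi places each source's peak on the other's
    first null, i.e. the Rayleigh criterion for a slit.
    """
    def total(u: float) -> float:
        return slit_intensity(u) + slit_intensity(u - separation)

    # Find the brightest point on a grid spanning both sources.
    lo, hi = -separation, 2.0 * separation
    step = (hi - lo) / (samples - 1)
    peak = max(total(lo + i * step) for i in range(samples))
    return 1.0 - total(separation / 2.0) / peak


print(f"dip at the Rayleigh separation: {midpoint_dip():.1%}")
```

For the slit case the dip works out to 1 - 8/π², about 19%, in line with the roughly 20% figure quoted above.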

In fact, before Rayleigh, Dawes came up with a limit based on humans identifying binary stars, and that limit is slightly tighter than Rayleigh. Which means the first superresolution technique was “look at the image.”

How about Synthetic Aperture Photographs?

With radar frequencies we can measure both amplitude and phase. At optical frequencies we can measure intensity (amplitude^2) but not phase. We can bypass this to a certain extent if we can define phase compared to a reference, but this doesn’t work if we can’t control the phase of the image, i.e., the light that comes from the Earth in this case.

So synthetic aperture optical imaging basically doesn’t work.

One other point though: the Rayleigh criterion defines a resolution measure of the optical system; it doesn’t define how small a thing you can see. It defines how close objects can be together and still be resolved as 2 separate objects. With enough contrast (e.g. a dark night) the 1 mm aperture of a laser pointer is trivially visible at 10s, or even 100s of km. I’m sure the astronauts on the ISS could see a 25 mW 532 nm laser pointer shining directly at them.

So, does anybody know what the (Farsi?) script around the launch site spells out?

IIRC it was published with the initial photo release: “The product of national power” or something to that effect.

Wasn’t the original photo release a tweet from the POTUS?

MPGA – Make Persia Great Again.

Great NROL-39 reference with the opening picture.

I recall that in the late ’90s there was a better zoom algorithm that was amazing at digitally zooming in to clarify images. I think it was a fractal algorithm available in one of the image editing packages, maybe even Photoshop, and then it kind of disappeared.

Then again, that may have been down to where I went to school and all the file sharing that made data available. It seems that by 2001, when I invested in a Nikon D100, the algorithm had disappeared from the web, though the file sharing network between MTU, Princeton and RPI did too, and I wonder if that is where I found it. It also seems like it was in a NOVA or PBS documentary.

Anyone aware of the fractal or better zoom algorithm(s) for improving images by digitizing?

I was also wondering about the capabilities with adaptive optics, and I mused about this topic here about a month ago, including integrating information from other platforms and stations:

https://www.reddit.com/r/TechnoStalking/comments/csncs7/technical_information_since_we_need_to_start/exito1r/

I’m reminded of the tech “they” use to read house keys at ~100 feet.

One of the interesting topics was determining the performance capabilities, and I’ve been wondering about the detector(s), along with the optical train, that would be equivalent to, or “better than,” human perception.

Some days it’s amazing what can be done on foot, in the air and in space, with, I assume, applications many will not know about, especially if you can gain a back door and/or bandwidth into the system infrastructure, and not only the proprietary systems. From my second B.S. degree, I assume the required back doors or capabilities in mainstream systems are there by design.

Plus, back on the topic of imaging, more mainstream cameras with 8K performance and high frame rates really make remote viewing/sensing that much easier. I’m sure there are low-profile, high-speed 16K to 32K cameras out there. I may be wrong though; still, it’s amazing what you can do with more than one camera, or a synthetic aperture, plus image processing.

The detectors are massively better than the human eye I’m afraid. I work in fluorescence microscopy and we need as sensitive detectors as possible. You can buy a camera for about £10K that will detect roughly 95% of the photons that fall on it, and read out at about 100 frames/second at 2kx2k resolution, with very low readout noise.

The biggest issue with these types of sensor is getting the data off them, which I am sure is even more of an issue from orbit. However, the readout noise is limited by the speed so if you read out more slowly you get even lower noise so better images, especially in lower light situations.
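The trade-off between readout noise and light level can be sketched with a simplified per-pixel signal-to-noise model: detected signal divided by the square root of (shot noise variance + read noise variance). This ignores dark current, background, and any other noise source. The 95% quantum efficiency comes from the comment above; the two read-noise values are hypothetical stand-ins for “fast” and “slow” readout.

```python
import math


def pixel_snr(photons: float, qe: float, read_noise_e: float) -> float:
    """Simplified per-pixel SNR: shot noise plus read noise only."""
    signal_e = qe * photons  # detected photoelectrons
    noise_e = math.sqrt(signal_e + read_noise_e ** 2)
    return signal_e / noise_e


# Hypothetical read-noise levels (electrons RMS) for fast vs slow readout.
for photons in (10, 100, 1000):
    fast = pixel_snr(photons, 0.95, read_noise_e=2.0)
    slow = pixel_snr(photons, 0.95, read_noise_e=0.5)
    print(f"{photons:5d} photons: SNR {fast:6.2f} (fast) vs {slow:6.2f} (slow)")
```

At low photon counts the read noise is a large share of the total, so slower readout helps noticeably; at high counts shot noise dominates and the difference nearly vanishes, which matches the point about lower-light situations.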

“The detectors are massively better than the human eye I’m afraid.”

I figured as much, with such a wide range of optics available to enhance the viewing quality, of course. I have been wondering, though: at what sensor/detector quality specification do detectors begin performing better than film?

https://en.wikipedia.org/wiki/Genuine_Fractals is what you’re thinking of. It’s alright.

Sweet find!

Kai’s Power Tools https://de.wikipedia.org/wiki/Kai_Krause also had an implementation of a fractal zoom algorithm, iirc.

Genuine Fractals is most likely the one, though it seems like it was something else. I’m not recalling the details; it may have been a custom script for that plugin floating around.

Reading about the latest and greatest… looks like Topaz A.I. Gigapixel is the best on the market. https://topazlabs.com/gigapixel-ai/

Any others worth noting while we’re on the topic? Thanks for the reference @gregranda11

I love the NROL-39 mission patch inspiration in the main picture, such a funny patch

Perhaps one has heard that U.S. satellites can distinguish between a slotted screw vs. a Phillips head screw on an aircraft wing? Of course, now that you’ve read this, you know too much.

Article doesn’t take into account machine learning.

“General, the Russians have built a new launch facility, and it looks like a pig-snail!”

(https://ai.googleblog.com/2015/06/inceptionism-going-deeper-into-neural.html)

I’m an astrophysicist whose research depends on wringing absolutely as much detail out of observations as possible. Unfortunately, there’s really no such thing as “zoom in and enhance” to get you beyond the diffraction limit.

Of course, there are nifty things you could do. For example, if you already know what the object you’re seeking looks like, you could take an image, blur it to your diffraction limit, and then look for that particular blur.
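Here is a one-dimensional toy version of that idea: blur a known template with the instrument’s point spread function, then slide the blurred template along the observed signal and pick the offset with the strongest correlation (a matched filter). The Gaussian PSF, the two-feature template, and the noise level are hypothetical stand-ins chosen only for illustration.

```python
import math
import random


def gaussian_psf(sigma: float, half_width: int) -> list[float]:
    """Normalised 1-D Gaussian kernel standing in for a diffraction-limited blur."""
    k = [math.exp(-0.5 * (x / sigma) ** 2) for x in range(-half_width, half_width + 1)]
    total = sum(k)
    return [v / total for v in k]


def blur(signal: list[float], kernel: list[float]) -> list[float]:
    """Zero-padded, same-length filtering; the kernel is symmetric, so this equals convolution."""
    h = len(kernel) // 2
    out = []
    for i in range(len(signal)):
        acc = 0.0
        for j, kv in enumerate(kernel):
            idx = i + j - h
            if 0 <= idx < len(signal):
                acc += signal[idx] * kv
        out.append(acc)
    return out


def best_match_offset(observed: list[float], template: list[float]) -> int:
    """Offset where the template's cross-correlation with the observation peaks."""
    m = len(template)
    scores = [
        sum(observed[o + k] * template[k] for k in range(m))
        for o in range(len(observed) - m + 1)
    ]
    return max(range(len(scores)), key=scores.__getitem__)


random.seed(0)
psf = gaussian_psf(sigma=2.0, half_width=6)
# Hypothetical object: two thin features 3 samples apart, closer than the blur width.
template = [0.0] * 10 + [1.0, 0.0, 0.0, 1.0] + [0.0] * 10
blurred_template = blur(template, psf)

scene = [0.0] * 200
true_offset = 120
scene[true_offset:true_offset + len(template)] = template
observed = [v + random.gauss(0.0, 0.01) for v in blur(scene, psf)]

found = best_match_offset(observed, blurred_template)
print(f"true offset {true_offset}, best match at {found}")
```

The two features are never resolved as separate peaks, but because the expected blurred shape is known in advance, the object can still be located in the noisy data.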

In microscopy, you can do neat things like scan across your sample with a very small illuminating spot (coming from some optics with a larger diameter than your microscope optics so the spot size is small) and then use the knowledge of how the spot rasters to build up an image with finer resolution than the actual resolution of your optics.

Or, with Hubble there’s a problem that the cameras undersample the diffraction limit of the telescope. In a process called drizzling, you move the telescope by sub-pixel shifts (possible because the fine guidance sensor star trackers work so well) and take multiple images; you can then use the position information to reconstruct an image that basically matches the optics’ diffraction limit.
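Full drizzling maps each input pixel onto a finer output grid with weights. A simpler special case that shows the core idea is interlacing: take two exposures shifted by exactly half a pixel and interleave them. This 1-D sketch assumes perfectly known half-pixel shifts and ideal box-shaped pixels; it doubles the sampling density of the pixel-averaged signal rather than reproducing the actual drizzle algorithm.

```python
import math


def sample_with_pixels(f, n_pixels: int, pixel_width: float, offset: float, sub: int = 50) -> list[float]:
    """Average a continuous 1-D signal f over box-shaped pixels starting at `offset`."""
    values = []
    for p in range(n_pixels):
        start = offset + p * pixel_width
        # Midpoint-rule average over the pixel footprint.
        acc = sum(f(start + (s + 0.5) * pixel_width / sub) for s in range(sub))
        values.append(acc / sub)
    return values


def interlace(frame_a: list[float], frame_b: list[float]) -> list[float]:
    """Interleave two frames offset by half a pixel into one grid with twice the sampling."""
    out = []
    for a, b in zip(frame_a, frame_b):
        out.extend([a, b])
    return out


def scene(x: float) -> float:
    """Hypothetical narrow feature centred at x = 10.3, undersampled by 1-unit pixels."""
    return math.exp(-0.5 * ((x - 10.3) / 0.6) ** 2)


frame_a = sample_with_pixels(scene, 20, 1.0, offset=0.0)
frame_b = sample_with_pixels(scene, 20, 1.0, offset=0.5)  # half-pixel dither
combined = interlace(frame_a, frame_b)
print(len(frame_a), "samples per frame ->", len(combined), "samples at 0.5-unit spacing")
```

Sample k of the combined grid is centred at 0.5 + 0.5k, so the feature’s position can be read off at half-pixel precision even though each individual frame only samples it once per unit.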

I’m guessing, though, that the way the big guys are moving up in resolution is simply by using bigger telescopes. (Not that they probably aren’t also using synthetic aperture radar and things like that.) The NRO gave NASA two built spy satellites with perfect 2.4m optics (one is being used for the WFIRST mission) and Magdalena Ridge Observatory in NM similarly got a “spare” 2.4m mirror that wasn’t needed for surveillance work. I don’t think that scientists would be getting these hand-me-downs if bigger optics weren’t already flying.

> In microscopy, you can do neat things like scan across your sample with a very small illuminating spot (coming from some optics with a larger diameter than your microscope optics so the spot size is small) and then use the knowledge of how the spot rasters to build up an image with finer resolution than the actual resolution of your optics.

I’m a microscopist and I would love to have a magic optic that has “a larger diameter than my microscope optics”. What N really means is an optic that subtends a larger angle at the sample than the microscope optics, so a larger diameter further away probably doesn’t help. In practise, microscopes illuminate and collect light from a cone with a half-angle of ~70 deg; you can’t do much better than this. Observing from one side, the maximum is 90 deg, but then you need an infinitely big lens or to be touching your sample.
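The standard Abbe limit makes the point about angles concrete: lateral resolution d = λ / (2 NA), where the numerical aperture NA = n sin θ depends on the half-angle θ of the collection cone, not on the lens diameter alone. This sketch assumes 500 nm light; the optional refractive index `n` is included only to show the effect of an immersion medium.

```python
import math


def abbe_limit_nm(wavelength_nm: float, half_angle_deg: float, n: float = 1.0) -> float:
    """Abbe lateral resolution d = lambda / (2 * NA), with NA = n * sin(half-angle)."""
    na = n * math.sin(math.radians(half_angle_deg))
    return wavelength_nm / (2.0 * na)


for half_angle in (30, 50, 70, 90):
    print(f"{half_angle:2d} deg half-angle in air: {abbe_limit_nm(500, half_angle):.0f} nm")
print(f"70 deg in immersion oil (n = 1.515): {abbe_limit_nm(500, 70, n=1.515):.0f} nm")
```

Going from a 70 degree to a 90 degree half-angle only improves the limit from about 266 nm to 250 nm in air, which is why pushing the cone angle further buys so little.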

You can look from both sides, a so-called 4pi microscope, but to get the resolution improvement from this you need to combine the light into one image. This only works if the two beam paths differ by a small number of wavelengths (green light is ~500 nm), which means these microscopes are complex and difficult to set up and align.

All this said, there are microscopes that can localise single molecules with accuracies in the 5-10 nm range in all 3 dimensions.

The software you may be thinking of wasn’t so much useful for zoom as for incredibly tiny lossy compression. I think it was called Fiasco. It seemed really cool, ImageMagick and others had added support for it, then poof, it disappeared. Oh, interesting! It looks like someone did have a copy (it was GPL) and threw it up on GitHub. https://github.com/l-tamas/Fiasco

Even on DEC Alphas, it took forever to create the image, however decoding was very quick.

I find it rather sad that just one KH11 is devoted to science and the rest (15 or more) are devoted to warfare. Imagine what we could have discovered with even a second HST.

Also – the Hubble rather famously had a dud mirror that was flawed. It beggars belief that no-one knew about the flaw, or even tested or measured the mirror for such an expensive vehicle before launch. Add to that the HST mirror is the same as at least 6 or 7 KH11 mirrors previously produced – and they suddenly got one wrong.

OK, conspiracy theory, but I recall at the time some astronomers claiming that the HST was deliberately built with a flawed mirror so that THE ENEMY couldn’t work out its optical properties, and that the deliberate flaw was miscalculated and over-blurred the images. It certainly seems more plausible than grinding a flaw into a mirror and never bothering to test it before launch.

I’m sure there has been a large number of hijackings and robberies avoided with the intense level of surveillance… I hope.

Reminds me of these comments on why advances in technology are still kept secret.

https://www.gaia.com/article/oldest-conspiracies-proven-true-project-echelon (see carefully at 30 seconds in) https://www.youtube.com/watch?v=53sAEQ0rYyU&feature=youtu.be&t=158 (watch at least until 4:10)

Now, since the above documentary was made, and in our new era that we must lead validly, see what has been disclosed in the first Medal of Honor on video. John ‘Chappy’ Chapman (Air Force CCT) was posthumously awarded the Medal of Honor and qualified for a second MOH award: https://www.youtube.com/watch?v=3oKMjTqdTYo

We all know even COTS gun sights have more than black and white contrast color schemes. I’m wondering if what’s disclosed is another office’s feed. Not quite a space-based system, though it makes me wonder, since IR has been around since about the dawn.

I found these videos yesterday on the National Reconnaissance Office YouTube page and thought the capabilities were interesting, both those disclosed at the time and, to some extent, those that could be inferred but were undisclosed:

https://www.youtube.com/watch?v=tUIakZq0JGk (Gambit)

https://www.youtube.com/watch?v=r1WPoAH6D_M (Hexagon)

I also thought it was interesting how redacted the videos still are as of their declassification date, where the Gambit system video alluded to better performance and the Hexagon one did not, though it notes improved performance.