When you put a human driver behind the wheel, they will primarily use their eyes to navigate, both to stay on the road and to follow navigation aids such as maps and digital navigation assistants. For self-driving cars, the latter is relatively easy, as the system can use the same information in much the same way: when to change lanes, and when to turn left or right. The former task is a lot harder; situational awareness is a challenge even for human drivers.

In order to maintain this awareness, self-driving and driver-assistance systems use a combination of cameras, LIDAR, and other sensors. These can track stationary and moving objects and keep track of the lines and edges of the road. This allows the car to precisely follow the road and, at least in theory, not run into obstacles or other vehicles. But if the weather gets bad enough, such as when the road is covered with snow, these systems can have trouble coping.

Looking for ways to improve the performance of autonomous driving systems in poor visibility, engineers are currently experimenting with ground-penetrating radar. While it’s likely to be a while before we see this hardware on production vehicles, the concept already shows promise. It turns out that if you can’t see what’s on the road ahead of you, looking underneath it might be the next best thing.

Knowing Your Place in the World

Certainly the biggest challenge of navigating with a traditional paper map is that it doesn’t provide a handy blinking icon to indicate your current location. For the younger folk among us, imagine trying to use Google Maps without it telling you where you are on the map, or even which way you’re facing. How would you navigate across a map, Mr. Anderson, if you do not know where you are?

This is pretty much an age-old issue, dating back to the earliest days of humanity. Early hunter-gatherer tribes had to find their way across continents, following the migratory routes of their prey animals. They would use landmarks and other signs that would get passed on from generation to generation, as a kind of oral map. Later on, humans would learn to navigate by the stars and Sun, using a process called celestial navigation.

Eventually, we’d introduce the concepts of latitude and longitude to divide the Earth’s surface into a grid, using celestial navigation and accurate clocks to determine our position. This would remain the pinnacle of localization and a cornerstone of navigation until the advent of radio beacons and satellites like the GPS constellation.

So it might seem like self-driving vehicles could use GPS to determine their current location, skipping the complicated sensors and not bothering to look at the road at all. In a perfect world, they could. But in practice, it’s a bit more complicated than that.

Precision is a Virtue

The main issue with a system like GPS is that accuracy can vary wildly depending on factors such as how many satellites are visible to the receiver. When traveling through wide open country, one’s accuracy with a modern, L5-band capable GPS receiver can be as good as 30 centimeters. But try it in a forest or a city with tall buildings that reflect and block the satellite signals, and suddenly one’s accuracy drops to something closer to 5 meters, or worse.

It also takes time for a GPS receiver to obtain a “fix” on a number of satellites before it can determine its location. This isn’t a huge problem when GPS is being used to supplement position data, but it could be disastrous if it were the only way a self-driving vehicle knew where it was. And even in perfect conditions, GPS just doesn’t get you close enough. The best-case precision of 30 centimeters, while more than sufficient for general navigation, could still mean the difference between staying on the road and driving off the side of it.

One solution is for self-driving vehicles to adopt the system that worked for our earliest ancestors, using landmarks. By having a gigantic database of buildings, mountains and other landmarks of note, cameras and LIDAR systems could follow a digital map so the car always has a good idea of where it is. Unfortunately, such landmarks can change relatively quickly, with buildings torn down, new buildings erected, a noise barrier added along a stretch of highway, and so on. Not to mention the impact of poor weather and darkness on such systems.

The Good Kind of Boring

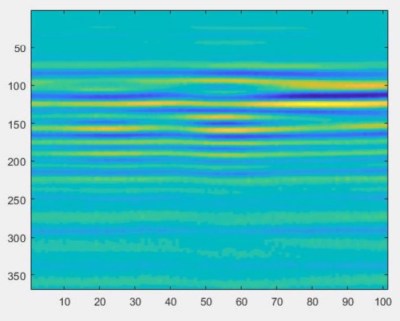

When you think about it, what’s below our feet doesn’t change a great deal. Once a road goes down, not much will happen to whatever is below it. This is the reasoning behind the use of ground-penetrating radar (GPR) with vehicles, in what is called localizing ground-penetrating radar (LGPR). MIT has been running experiments with this technology for a few years now, and recently tested LGPR-equipped vehicles navigating themselves in both snowy and rainy conditions.

They found that the LGPR-equipped system had no trouble staying on track: snow on the road added an error margin of only about 2.5 cm (1″), and a rain-soaked road caused an average offset of 14 cm (5.5″). Considering that even this “worst case” result is about 16 cm better than the 30 cm GPS achieves on a good day, it’s easy to see why there’s so much interest in this technology.
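The comparison is simple arithmetic, using the figures quoted above: 30 cm for best-case GPS, and 2.5 cm and 14 cm for LGPR in snow and rain. A quick sketch of the unit conversion and the margins:

```python
CM_PER_INCH = 2.54
GPS_BEST_CASE_CM = 30.0  # best-case GPS figure quoted in the article


def compare_to_gps(error_cm: float) -> tuple[float, float]:
    """Return (error in inches, margin in cm by which it beats best-case GPS)."""
    return error_cm / CM_PER_INCH, GPS_BEST_CASE_CM - error_cm


if __name__ == "__main__":
    for condition, error_cm in {"snow": 2.5, "rain": 14.0}.items():
        inches, margin = compare_to_gps(error_cm)
        print(f"{condition}: {error_cm} cm ({inches:.1f} in), "
              f"{margin:.1f} cm better than GPS")
    # snow: 2.5 cm (1.0 in), 27.5 cm better than GPS
    # rain: 14.0 cm (5.5 in), 16.0 cm better than GPS
```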

Turning Cars into Optical Mice

The GPR system sends out electromagnetic pulses in the microwave band. Layers in the ground affect how and when these pulses are reflected, and the returning echoes provide us with an image of the subsurface structures.
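As a rough illustration of what “when” means here: the depth of a reflecting layer follows from the two-way travel time of its echo, since in a low-loss material the pulse travels at roughly c/√εr, where εr is the material’s relative permittivity. The sketch below assumes that simple, homogeneous model; the permittivity of 9 in the example is an illustrative value, not a figure from MIT’s work.

```python
import math

C_VACUUM = 299_792_458.0  # speed of light in vacuum, m/s


def reflector_depth(two_way_time_s: float, relative_permittivity: float) -> float:
    """Estimate reflector depth (m) from the two-way travel time of a radar echo.

    Assumes a homogeneous, low-loss medium in which the pulse travels at
    c / sqrt(eps_r). The echo covers the distance down and back, hence / 2.
    """
    if two_way_time_s < 0 or relative_permittivity < 1:
        raise ValueError("need a non-negative time and eps_r >= 1")
    velocity = C_VACUUM / math.sqrt(relative_permittivity)
    return velocity * two_way_time_s / 2.0


if __name__ == "__main__":
    # Illustrative only: an echo arriving 10 ns after the pulse, eps_r = 9
    print(f"{reflector_depth(10e-9, 9.0):.3f} m")  # prints "0.500 m"
```

Real road structures are layered and partly wet, so the velocity changes with depth; treat this as an order-of-magnitude sketch.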

This isn’t unlike how an optical mouse works, where the light emitted from the bottom reflects off the surface it’s moving on. The mouse’s sensor receives a pattern of reflected light that allows it to deduce when it’s being moved across the desk, in which direction, and how fast.
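The frame-to-frame comparison can be sketched in one dimension: try a range of candidate shifts between the previous and current readings and keep the one where they agree best. This is a simple block-matching stand-in that assumes clean 1D samples; actual mouse sensors and LGPR units work on 2D images with their own, more robust correlation methods.

```python
def estimate_shift(prev: list[float], curr: list[float], max_shift: int) -> int:
    """Return the shift s, |s| <= max_shift, for which curr[i] best matches prev[i - s].

    Each candidate shift is scored by the mean squared difference over the
    samples where the two readings overlap; the lowest score wins.
    """
    n = len(prev)
    if len(curr) != n or not 0 <= max_shift < n:
        raise ValueError("readings must be equal length and max_shift < length")
    best_shift, best_score = 0, float("inf")
    for s in range(-max_shift, max_shift + 1):
        diffs = [(curr[i] - prev[i - s]) ** 2 for i in range(n) if 0 <= i - s < n]
        score = sum(diffs) / len(diffs)
        if score < best_score:
            best_shift, best_score = s, score
    return best_shift


if __name__ == "__main__":
    prev = [0, 1, 4, 2, 7, 3, 0, 5, 1, 6]
    curr = [9, 9, 0, 1, 4, 2, 7, 3, 0, 5]  # same pattern, moved by 2 samples
    print(estimate_shift(prev, curr, max_shift=3))  # prints 2
```

Multiplying the shift by the sample spacing gives the distance moved between frames, and dividing that by the frame interval gives speed.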

LGPR is similar, only in addition to keeping track of direction and speed, it also compares the image it records against a map which has been recorded previously. To continue the optical mouse example, if we were to scan our entire desk’s surface with a sensor like the one in the mouse and perform the same comparison, our mouse would be able to tell exactly where it is on the desk (give or take a few millimeters) at all times.
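Comparing against a previously recorded map can be sketched the same way, except that the current scan is slid along the whole map and the best-matching offset gives an absolute position. The sketch below uses zero-mean normalized cross-correlation, on the assumption that a wet or snowy road might change the overall strength of the echoes more than their shape; the 1D map, the 5 cm sample spacing and the function names are all hypothetical.

```python
import math


def ncc(a: list[float], b: list[float]) -> float:
    """Zero-mean normalized cross-correlation of two equal-length sequences (-1..1)."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    da = [x - mean_a for x in a]
    db = [y - mean_b for y in b]
    denom = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    if denom == 0:
        return 0.0  # a flat window carries no shape information
    return sum(x * y for x, y in zip(da, db)) / denom


def locate_in_map(prior_map: list[float], scan: list[float],
                  metres_per_sample: float) -> tuple[float, float]:
    """Slide scan along prior_map; return (position in metres, best correlation)."""
    if not 0 < len(scan) <= len(prior_map):
        raise ValueError("scan must be non-empty and no longer than the map")
    best_start, best_score = 0, -math.inf
    for start in range(len(prior_map) - len(scan) + 1):
        score = ncc(prior_map[start:start + len(scan)], scan)
        if score > best_score:
            best_start, best_score = start, score
    return best_start * metres_per_sample, best_score


if __name__ == "__main__":
    prior_map = [0, 1, 4, 2, 7, 3, 0, 5, 1, 6, 2, 8]
    # Same subsurface pattern as map samples 4..7, but with stronger echoes
    scan = [2 * x + 1 for x in prior_map[4:8]]
    position, score = locate_in_map(prior_map, scan, metres_per_sample=0.05)
    print(f"{position:.2f} m, correlation {score:.3f}")  # prints "0.20 m, correlation 1.000"
```

A low best correlation is useful information in itself: it suggests the scan no longer matches the map well, for instance after roadwork.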

Roads would be mapped in advance by a special LGPR truck, and this data would be provided to autonomous vehicles. They can then use these maps as reference while they move across the roads, scanning the subsurface structures using their LGPR sensors to determine their position.

Time will Tell

Whether or not LGPR technology will be the breakthrough that self-driving cars need is hard to tell. No matter what, autonomous vehicles will still need sensors for observing road markings and signs, because things above ground change often. With road maintenance, traffic jams, and pedestrians crossing the street, it’s a busy world to drive around in.

A lot of the effort in making autonomous vehicles “just work” depends less on sensors and more on a combination of situational awareness and good decision-making. In the hyper-dynamic above-ground world, there are countless times during even a brief grocery shopping trip when one needs to plan ahead, make rapid decisions based on sudden events, react to omissions in one’s planning, and deal with other traffic, both by following the rules and by creatively adapting said rules when others take sudden liberties.

With a number of autonomous vehicles on the roads from a wide variety of companies, we’re starting to see how well they perform in real-life situations. Here we can see that autonomous vehicles tend to be programmed in a way that makes them respond very conservatively, although adding a bit more aggression might better fit the expectations of fellow (human) drivers.

Localizing ground-penetrating radar helps by adding to the overall situational awareness, but only if somebody actually makes the maps and occasionally goes back to update them. Unfortunately that might be the biggest hurdle in rolling out such a system in the real world, since the snow-covered roads where LGPR could be the most helpful are likely the last ones to get mapped.

Seems like a complicated solution, compared to using the cat eyes already present on most roads.

Cat eyes are great right up until they are covered with snow. Also, unless specifically designed for it, they tend to be pulled up by snowplows. You don’t often see them in areas that get regular snow cover.

Actually, in Canada, they have inset cat eyes. The surface of the highway is gouged out on a small angle and the cat eye is glued into the gouge at or a little below the surface. The plows don’t touch them.

Don’t they just fill up?

With heavy snowstorms in very cold weather, yes they do. However, the pavement tends to collect a lot of solar energy, and the snow melts surprisingly fast in lighter snowfall and even after plowing.

The point isn’t that they are still visible during a snowstorm (they aren’t), it’s that they don’t get destroyed when a snowplow goes over them. Dongwaffle and BM are talking across purposes here, I think…

They get knocked off, glue comes unstuck, get covered in road debris, lots of ways they are no good.

A standardised vehicle-to-vehicle mesh network plus a series of data nodes built into inductive loops in the road surface (similar to traffic light sensors) would go a long way towards helping with situational awareness. And you don’t even need to send much information from the road itself, just an identifier that the vehicle uses to look up the full data over a persistent connection as it goes past.

Heck, construction crews could lay a mat with a temporary inductive loop on the road, so that cars are aware of upcoming roadworks, slow down and switch lanes as appropriate, and relay the details over the mesh network so other nearby vehicles get advance warning.

I can think of one stretch of interstate (I-85 between Charlotte and Greensboro) where they’re just sorta scattered around the road surface. I sure don’t want to be around autonomous cars splitting lanes against traffic, which they just might end up doing if they’re using those to navigate.

Using ground penetrating radar to look for things like pipes might sound logical on paper.

But ask any construction crew doing maintenance on said pipes, and you will rarely hear that the pipes actually are where they are supposed to be. Mud/dirt is apparently rather poor at keeping things in place, and pipes have all sorts of fun ways of exerting thrust against each other, pushing themselves around in the process. Although usually very slowly.

I would have to say that it is likely easier to embed “RFID” tags into the road itself: something simple, like a circuit that resonates back, telling the car that it is there. With some time-of-flight knowledge we could then accurately know where we are on the road itself. Obviously this would make paving a road a fair bit more tedious… But at least these markers would follow the movement of the road surface itself, unlike the pipes underneath.
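The time-of-flight idea can be sketched as follows: the range to each tag is the round-trip time, minus the tag’s own reply delay, times the speed of light, divided by two, and ranges to three tags at known positions pin down a 2D position. This is a hypothetical sketch; it assumes tag positions come from a map, ranges are already projected onto the road plane, and every tag has a known, fixed reply delay.

```python
import math

C = 299_792_458.0  # speed of light, m/s


def tof_range(round_trip_s: float, reply_delay_s: float) -> float:
    """Distance to a tag from the measured round trip minus the tag's reply delay."""
    return C * (round_trip_s - reply_delay_s) / 2.0


def trilaterate(anchors, ranges):
    """2D position from three (x, y) tag positions and their measured ranges.

    Subtracting the first circle equation from the other two leaves a
    2x2 linear system, solved here with Cramer's rule.
    """
    (x1, y1), (x2, y2), (x3, y3) = anchors
    r1, r2, r3 = ranges
    a11, a12 = 2 * (x2 - x1), 2 * (y2 - y1)
    a21, a22 = 2 * (x3 - x1), 2 * (y3 - y1)
    b1 = r1**2 - r2**2 + x2**2 - x1**2 + y2**2 - y1**2
    b2 = r1**2 - r3**2 + x3**2 - x1**2 + y3**2 - y1**2
    det = a11 * a22 - a12 * a21
    if abs(det) < 1e-12:
        raise ValueError("tags are collinear; position is ambiguous")
    return (b1 * a22 - a12 * b2) / det, (a11 * b2 - a21 * b1) / det


if __name__ == "__main__":
    tags = [(0.0, 0.0), (4.0, 0.0), (0.0, 3.0)]  # hypothetical tag layout, metres
    true_pos = (1.0, 1.0)
    delay = 50e-9  # assumed fixed reply delay of each tag
    trips = [2 * math.dist(true_pos, t) / C + delay for t in tags]
    ranges = [tof_range(t, delay) for t in trips]
    print(trilaterate(tags, ranges))  # approximately (1.0, 1.0)
```

The catch is timing: one nanosecond of error corresponds to about 15 cm of range, so the electronics would need very tight timing to beat GPS.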

Personally, though, I am of the opinion that “self-driving cars” is currently a fairly arrogant thing to strive for, since car manufacturers don’t even have the safety features to keep a human from driving off the road.

Not to mention that when things do go wrong with self driving cars, then who takes control? The human that hasn’t been paying proper attention the last 5+ minutes? How much time do they have to get into the loop, a few seconds or less?

If self-driving cars become more competent but still need help from a human, then it frankly isn’t going to be safe… especially if we hand the reins over to inexperienced drivers.

Though, knowing where the road itself is will still be important for a more advanced safety system to work properly. Here self-driving cars do have the upper hand: if the driver becomes unconscious, the car can take over and drive at least competently enough to get to the curb and summon assistance. (I.e., can we start self-driving as an “if the driver suddenly becomes unresponsive” feature, instead of “the car drives itself for you as if you are taking a cab/taxi”?)

It doesn’t sound like the plan is to use existing utility maps, but rather to use existing positioning data (augmented GPS or whatever) on a clear day, run the system to locate whatever it’s tracking under the road, and record that data. Then, future drivers can locate themselves based on that data if GPS etc is not available or providing a precise enough position.

In regards to who takes control, that’s only a factor in SAE levels 1-3, and level 3 is probably dangerous for the reasons you’ve outlined. Levels 4 and 5 don’t require a driver in the loop while running under automation.

The big issue with mapping stuff under ground is that it tends to move over time. So one needs to remap it every now and then. How reasonable that is to do will likely vary depending on a lot of factors.

Not saying that it won’t work, but in some areas where the ground moves around more, it might be less suitable as a solution.

As for SAE levels, yes, level 5 is something that by definition drives itself practically “flawlessly”.

Level 4 depends, since it can still need human oversight. (The definition isn’t really fully clear about what that means…)

Levels 3 and 2, on the other hand, should likely be reformulated. If the vehicle can’t drive competently enough to not need human assistance, then the human should be the one driving and the computer should keep things safe.

A human is frankly terrible at taking over control if they weren’t paying attention. Most people don’t pay sufficient attention to the road to be able to make the situation any better. Some people barely pay enough attention even when they drive the car fully by themselves.

A computer on the other hand doesn’t even know the concept of “not paying attention”, a computer can be superior at taking over control if the human becomes unresponsive or is about to make a fatal mistake.

Having a human take over control works decently during a test of the systems. But a non driving human shouldn’t be the backup plan out on the road…

A computer never loses attention, but it’s 99.99% blind to what’s happening due to lack of understanding – which is the same thing. If the AI does not recognize the situation as something it needs to be responding to, then it’s just as well it wasn’t looking.

The simplifications, approximations and shortcuts taken to make a modern car drive itself mean that it’s ignoring almost all of reality and concentrating on a few simple things, like detecting two red dots or two white dots instead of seeing the car ahead. This is necessary because the AI model simply doesn’t have the computational power (or electrical power budget) to do any better. This makes the system very fragile, and even Level 3 driving a science fantasy – or a disaster waiting to happen.

Yes, autonomous driving is a challenge even in simple environments.

Making the car drive itself competently in real-world scenarios is, as you say, a science fantasy, likely dragging a disaster along with it.

Humans can be a quirky bunch even at the best of times. But most people are at least better at handling the situational awareness and acting on it.

A computer is truly better at “remembering” every detail of a situation than any human could even dream of, but logging stuff is frankly trivial.

Acting appropriately in a situation is the important part. AI systems, though, can get fooled by things that most humans wouldn’t even consider important.

For example, Volvo’s anti-collision system couldn’t properly detect a kangaroo, simply because the AI had not been trained to handle them. A human wouldn’t care whether it is a kangaroo, an alien from Mars, or even a whole house crashing out into the street in front of us. What a human sees is a large thing that we don’t want to crash into. Doesn’t matter what it is…

Things underground do shift around, especially in places where the ground freezes in the winter. Pipes shift around, underground boulders rise up towards the surface, pits and bumps form…

https://en.wikipedia.org/wiki/Frost_heaving

You have to keep updating the map continuously, especially during the spring thaw or autumn freeze because things can change rather rapidly.

Yup, stuff under ground rarely stays in one place.

Pipes, for example, can move both due to pressure pushing on bends and from the inertia of the flow within the pipe. There are measures to reduce this problem, but in the end they don’t fully solve it.

That’s one reason I think it would likely be easier to embed “RFID”-ish technology in the ground: a car sends out a signal, the tag blips back, and by measuring the time and reading the tag’s ID, then referencing a map, the car knows exactly where it is on the road surface.

Asphalt/concrete tends to move as one large chunk unless it cracks, so the tag will mostly follow the road surface, unlike a pipe that follows its own movements.

“You have to keep updating the map continuously, especially during the spring thaw or autumn freeze because things can change rather rapidly.”

That’s probably easier than the initial map itself. It’s not like there’s an underground city down there and things are moving around randomly – it’d probably be pretty easy to predict the motion based on a few sample points, and it’d also likely be pretty easy to predict when those updates need to occur. Pretty easy to predict when frost heaving won’t occur.

Any car with such a radar (and presumably an internet connection to stream map data) could feed back to the system, updating and improving the map database for the next cars. Why would you need “special trucks” to scan in the first place? They might carry a more powerful radar, but if the cars can’t penetrate as deeply, you would just have excess data.

I’d say scrap this completely and instead mix small metal ball bearings into the asphalt when it’s laid, to the tune of a hundred per square meter or so for an average thickness. Their random distribution would be much easier to detect, map, and use for centimetre-class positioning (think a star map). The vehicle sensor package’s size and energy use would be a fraction of the radar’s, and therefore so would its cost. Like above, any halfway intelligent system worthy of 2020 would have its users automatically contributing map refinements and changes.
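The “star map” matching could work roughly like this, assuming the car’s heading is already known so that only a translation has to be found: every pairing of a detected bearing with a mapped bearing votes for the offset it implies, and the offset with the most votes wins. The bin size and coordinates are hypothetical, and a real system would also need to handle rotation and offsets that straddle bin edges.

```python
from collections import Counter


def match_offset(map_points, detected, cell_m=0.01):
    """Estimate the (dx, dy) that maps detected points (car frame) onto map points.

    Every (map point, detected point) pair votes for the offset it implies,
    quantised to cell_m; the most-voted offset wins. Returns (dx, dy, votes).
    """
    votes = Counter()
    for mx, my in map_points:
        for px, py in detected:
            votes[(round((mx - px) / cell_m), round((my - py) / cell_m))] += 1
    (ix, iy), count = votes.most_common(1)[0]
    return ix * cell_m, iy * cell_m, count


if __name__ == "__main__":
    bearings = [(0.10, 0.20), (0.35, 0.05), (0.50, 0.42), (0.72, 0.18), (0.90, 0.66)]
    # Three bearings seen by the car in its own frame (true offset 0.30, -0.10)
    seen = [(0.05, 0.15), (0.20, 0.52), (0.42, 0.28)]
    print(match_offset(bearings, seen))  # roughly (0.3, -0.1, 3)
```

Because each wrong pairing scatters its vote across a different offset, only the true offset collects one vote per detected bearing, which is what makes a random distribution easy to match.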

>could feed back to the system

That’s assuming you want the system to track every single car wherever they go. If you’re Google, of course you do.

If the road is made of concrete, there is probably already a nice grid of metal wires built into it.

Planes can fly with autopilot, yet they still have human pilots. What makes people think cars are different? Especially considering there are far more cars than planes, road conditions are much more variable, and so on.

Except, have you looked at how an autopilot in a plane works?

The vast majority of them practically only keep the plane level, holding a fixed airspeed, altitude and heading. I.e., they are practically following a straight line.

Then they can also change altitude, airspeed, and heading at a fairly fixed rate, to ensure a smooth flight.

But for them to do this, the pilot needs to enter in a new heading, airspeed, or altitude. The autopilot doesn’t do that….

But a car doesn’t even navigate in the same way as a plane does.

A car practically follows VFR (visual flight rules) all the time.

Most commercial jets follow IFR (instrument flight rules) the vast majority of the time.

IFR practically means that the pilot states their destination ahead of even taking off. Then the flight coordinators on the ground will schedule the plane in on the appropriate routes, and inform the affected airports of its estimated arrival.

Then ATC will inform the pilot of what altitude, airspeed and heading they should maintain, and update them for each and every turn along the route. (if no update arrives, then the pilot kindly needs to ask for one, if they feel like they are flying off the intended route.)

Upon actual arrival, the airport can tell the plane to either slow down or speed up to better sequence the incoming planes. Even tell the planes to circle around a couple of times if so needed.

Also the distance from one airplane in the sequence to the next is usually more than one minute.

The distance from one car to the next on most roads is usually less than 5 seconds on the other hand.

In the end, autopilot in a plane is practically little more than cruise control + lane assist. For IFR flight, that is literally all one needs. The pilot is there to handle communications and navigation, monitor the flight, note down any oddities, and take over if the autopilot gets disabled. Not to mention handling takeoff and landing of the plane.

There are also “autoland” features in some planes (practically only used when the pilot can’t see the runway), but considering that such systems need equipment on the ground as well, self-parking cars are frankly a bit ahead on that front. Not that parking a car and landing a plane are even remotely comparable…

Exactly my point. If planes, which have a simpler scenario, still require pilots, why do people think we can have driverless cars before we get pilotless planes?

I have been in a car driven by a Brazilian.

They kept talking with their hands all the while and pinballing between the lane divider and the shoulder, and the car’s lane assist kept bumping them back on the road.

Luke.

That sounds like misuse of a safety feature to be fair.

At some point the car should just have had enough, drive to the curb and stop, making the driver think about the fact that they should be driving.

Though, implementing such a feature is going to be a pain in and of itself.

But there is an obvious problem if making things safer means that people drive less safely to start with… Kinda defeats the whole point of making things safer, to be fair.

It’s called risk homeostasis. People do respond to their perceived level of risk by letting their hair down.

This is why marginal improvements in vehicle safety result in worse and worse outcomes – the perception of safety grows more than the actual reduction of risk, which makes people in cars with ABS, ESP, 4WD, automatic braking, lane assist… etc. crash more often. Then it becomes the job of the multiple airbags to keep them alive.

The effect kicks in fairly early. When ABS was first introduced, a study on taxi drivers found a slight increase in the number of crashes for cars with ABS than without, but when ABS was introduced in motorcycles it reduced accidents by a third. Motorcycle riders don’t want to get into situations where ABS would help them anyhow.

Introduction of ABS resulted in 18% reduction for vehicle-to-vehicle crashes, and 35% increase in running off the road crashes:

https://www.monash.edu/__data/assets/pdf_file/0020/218270/racv-abs-braking-system-effectiveness.pdf

That is indeed a big problem with increased safety.

One solution to such a problem would be if the safety systems filed a report if they kick in too often. Alerting the authorities about their misuse. But obviously that would have its own downsides.

But at some point, if lane assist or automatic braking needs to kick in all the time, one is either drunk or very bad at driving. Without those systems one would have been in an accident, so why not treat it as one? (Not saying that one should treat every situation that way, just when the systems are obviously misused.)

Most people would probably stop overusing the safety systems if they eventually get a ticket from that behavior, since the safety systems are intended for the worst case scenario, they aren’t meant to compensate for poor driving.

Even ABS can be overused: if it kicks in, one was likely driving too fast, unless one was trying to avoid an unforeseen obstacle. End of story…

The systems should still be used when needed. But they shouldn’t be relied on for the act of driving itself…

Not the same at all. It’s a totally unique problem. You can’t have a bajillion cars in such a complicated, constantly-shifting close-quarters environment, each one with a random, basically untrained person standing by as a backup driver. Trying to fight their nature to be distracted as they are lulled into a false sense of security, yet expected to leap to take full control at a moment’s notice after totally random intervals. Might be a few minutes, might be a few hours. And you know the advertising is going to sell the cars as if you can be free to work or watch a movie or make out in the front seat while it does the driving. BECAUSE NOBODY WOULD BUY IT OTHERWISE. But it’s a lie. You’d have to be just as vigilant as if you are actually steering with your hands, so why not just do the steering yourself as a built-in method to maintain attention? It’s craziness. Nobody even wants this specific kind of self-driving tech except tech fetishists like us. And even we will get bored of it after a month.

In a plane, autopilot is basically a solved problem and can do everything. The human pilot is just there for emotional support/PR. They are far more highly trained AND they have a second backup human in case some absolute catastrophe actually makes them take the controls. This is a safe solution that you can’t really implement in consumer self-driving cars.

It’s inherently unsafe and will be disastrous. They’ll try to blame it on human error for a while–ignoring that the system itself breeds prodigious amounts of human error–but it will eventually become clear enough it’s a fundamental flaw. You can’t have that kind of fail-deadly system out in the wild and numbering millions. It’s just not going to ever, ever, EVER happen. These people are chasing grants and stupid yokel VCs. Like those utter slack-jawed dummies at Softbank. They know it won’t ever really be deployed.

Yes, a human isn’t an ideal backup. A computer tends to be better at staying concentrated, and is therefore better suited to taking over control at a moment’s notice if the need arises, like if the driver suddenly becomes unresponsive.

Airplanes, on the other hand, have solved this issue by giving the pilot a long list of other things to do. There, the autopilot wasn’t really built to take over the job, but rather to assist with it. In IFR flight, which most commercial planes follow 99% of the time, the plane doesn’t need to do more than fly in a straight line, taking the occasional turn and changing altitude and airspeed at times. (Just look at a flight path on Flightradar24.com)

And as I have already outlined, an airplane “autopilot” is not really more than the car equivalent of cruise control + lane assist. Not even adaptive cruise control at that!

Note in the video the car was going 9mph. I have a hard time believing this is going to work at 60mph, but if it’s used as a correction algorithm at low speeds it definitely would have value. Its greatest strength is that it doesn’t require additional infrastructure, once all the roads are mapped (!).

I wonder if it would get confused if I tossed a bunch of pennies on the road :-)

Just think of how confused the system might get after road construction work has taken place.

Though, a good system would take such into consideration and likely send in an automated report of the anomaly.

After all, there is no real need to use dedicated vehicles for scanning the road. If most cars using this system can send in their own reports, we can start filtering the gathered data and make a steadily updating map based on this.

Though, tossing coins onto the road would likely be a blip that could confuse the system temporarily. Still, it would be a useful landmark to a degree, depending on how much it moves around and such.

In regards to speed on the other hand, it is an interesting question how things would change with increasing speeds.

I guess it could impact accuracy.

There’s always stray metal on the road, nails, screws, bits of banding off trucks, belting from shredded truck tires, rusted off exhaust hangers and bits of bodywork, tools, discarded cans. Some of it might have got pounded into the asphalt and be semi permanent but the rest is skittering around all day.

What about the first car that reaches the anomaly? Will it veer out of control before the AI notices that something is off? Will it miss the intersection it was supposed to take?

There are two modes for this system: 1) measuring the real motion of the car for dead reckoning, and 2) locating the car on the map by recognizing the road features. Point 1 will be possible regardless, while point 2 is utopian and not necessarily even desirable, because it would imply that someone keeps tracking where all the cars are to update the database.

Dead reckoning is something all navigation systems should at least have as a reference.

Be it VR tracking, or a car.

If one’s “absolute” readings are stating one is in position X, while dead reckoning states one is at Y, then one should look at the difference.

If the difference is small, then one is likely at X.

If the difference is huge, then one is likely not at X.

Depending on how our “absolute” reading is gathered, we can make judgments regarding the discrepancy.

For a system relying on landmarks on the ground, the landmark itself might be gone, or might have moved. Or a new, unknown one might have cropped up, spoofing the system into thinking it is somewhere else.

This is indeed something that should be taken into consideration.

We can also use more than one “absolute” reference as well.
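The consistency check described above can be sketched as a simple gate: each “absolute” reference is compared with the dead-reckoned estimate, references that disagree by more than a threshold are rejected, and the rest are combined. The plain average, the source names and the 0.5 m gate are simplifying assumptions; a production system would more likely weight each source by its uncertainty, for example with a Kalman filter.

```python
import math


def fuse_position(dead_reckoned, references, gate_m):
    """Combine absolute fixes that agree with dead reckoning; report the rest.

    references maps a source name to an (x, y) fix. Fixes farther than gate_m
    from the dead-reckoned estimate are treated as suspect. Returns
    (estimate, sorted list of rejected source names); if every fix is
    rejected, the dead-reckoned estimate is kept.
    """
    accepted, rejected = [], []
    for name, fix in references.items():
        if math.dist(dead_reckoned, fix) <= gate_m:
            accepted.append(fix)
        else:
            rejected.append(name)
    if not accepted:
        return tuple(dead_reckoned), sorted(rejected)
    n = len(accepted)
    estimate = (sum(x for x, _ in accepted) / n, sum(y for _, y in accepted) / n)
    return estimate, sorted(rejected)


if __name__ == "__main__":
    dr = (100.0, 50.0)
    fixes = {"gps": (103.5, 50.0), "lgpr": (100.1, 50.05), "landmark": (100.0, 49.95)}
    print(fuse_position(dr, fixes, gate_m=0.5))  # GPS rejected; estimate near (100.05, 50.0)
```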

This thing helps because it’s better at dead reckoning than observing the wheel speeds or trying to look out the windows. It is literally like an optical mouse under the car: it tracks where the car goes in the X-Y direction, potentially with millimeter accuracy, assuming it can keep up.

Wheel sensors aren’t so useful for that because of varying tire pressure and wear, different-size wheels, and slip. Radar/lidar isn’t so accurate because it relies on knowing the environment in advance to pinpoint yourself in it, so differences between the map and the real measurement cause the best-fit location to shift. Looking downwards and seeing how many inches you’ve actually gone in which direction is going to be more accurate and less prone to sudden jumps in position, because there’s less to go wrong.
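To put a number on the tire point: wheel odometry converts revolutions into distance using an assumed rolling radius, so any mismatch between the assumed and actual radius becomes a proportional distance error. The radii below are hypothetical, and the sketch ignores slip entirely.

```python
def odometry_error_m(actual_distance_m: float, assumed_radius_m: float,
                     actual_radius_m: float) -> float:
    """Distance over-reported by wheel odometry when the rolling radius is off.

    The wheel turns actual_distance / (2*pi*actual_radius) times; odometry
    multiplies that by 2*pi*assumed_radius, so the 2*pi cancels out.
    """
    reported = actual_distance_m * assumed_radius_m / actual_radius_m
    return reported - actual_distance_m


if __name__ == "__main__":
    # Hypothetical: tire assumed to be 0.315 m, actually 0.312 m (worn or under-inflated)
    print(f"{odometry_error_m(1000.0, 0.315, 0.312):.1f} m over 1 km")  # prints "9.6 m over 1 km"
```

A radius error of about 1% grows into meters of drift within a kilometer, which is why a sensor that observes the ground directly is attractive for dead reckoning.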

If you want to know your position fast (within tens of milliseconds), there are three options:

GPS: 40-50 meters (individual data point)

Lidar: 40-50 centimeters (best statistical fit comparing to last measurement, however other things may have moved along as well so you never know…)

Better than that you need to use dead reckoning. However, since your wheels may have slipped, you’re not so sure. The ground will not have slipped without you actually moving, so if there’s a feature that’s still visible underneath the car, you can tell exactly where you are to within some millimeters – again assuming your radar can keep up.

The job of a self driving car is a lot more complicated than just following the road. It also needs to be able to recognize any objects on the road, and behave appropriately around them. If you can do that properly, then the vision system can also be used for navigation.

If visibility is so poor that you can’t see the road, it’s probably not the time to be driving.

Or — if the visual recognition system is so poor that it can’t locate the road, then it probably should not be driving.

I had that happen to me once.

Went from a little rainy, to extremely rainy. Couldn’t see more than a few meters, so felt safer to wait it out on the curb.

It happens once or twice a summer. The thing is, even in a slightly lighter downpour where you can still see the road, water is going to build up rapidly and turn your tires into water skis, and that still isn’t the main problem.

The main problem is that if you stop, other people don’t.

This is about as dumb and risky as all self-driving car bullshit. Self-driving cars are the new flying cars. Not really going to ever make it to common people. And stop talking about systems that use human safety drivers that must constantly stay vigilant and take over at a moment’s notice when the computer fails. That is the most unsafe kind of system imaginable, and to boot it defeats the entire purpose of a self-driving car in the public’s eyes. If that were ever rolled out at scale, the roads would be a bloodbath. If somebody tells you that self-driving cars will be safer than human drivers, know that that person is a complete rube. They launder their safety violations through the hapless safety driver, lulling them into a false sense of security with a system that could only ever be intended to reduce the amount of attention on the driver’s part, then requiring them to make life or death decisions at a random moment’s notice. Awful. Never EVER gonna happen.

Self-driving is either totally autonomous or a total dead end. There will never exist a society that will allow millions of vehicles with such an inherently unsafe and doomed system to use the roads.

This is gonna remain five to fifteen years in the future for a century, just like fusion power. It’s one of those hard problems. And it’s not even a good solution. We need to get rid of cars and use public mass transit that doesn’t suck. An infrastructure based on self-driving cars is making a purse out of a pig’s ear. It’s totally boneheaded and people don’t even want it. It’s a great example of how out of touch Silicon Valley is.

So yeah, let’s add even more sensor data that’s far more unreliable and not even visible to the humans on the road to boot. That’ll help. This is really irresponsible engineering. Let’s stop pretending this is helpful.

> We need to get rid of cars and use public mass transit that doesn’t suck.

The better the public transit system is in terms of “not sucking”, the more it just resembles privately owned cars in terms of congestion and fuel use. You’re asking for a compromise where the least bad option, taxis, is still worse than just driving there yourself, because a taxi still has to shuttle between customers, burning fuel pointlessly.

No, taxis/Ubers are still more efficient than the personal vehicle: fewer cars carrying more people, rather than carrying one person twice and then occupying real estate while sitting still for 8 hours.

But cities the world over have amply proven that public transit – subways, trams, streetcars, buses – doesn’t have to suck.

It’s a shame you haven’t experienced a place where public transport really “doesn’t suck.” It doesn’t look anything like a bunch of private cars. It’s a sensibly planned network of light rail, underground rail, bus, and walkable/bikeable routes. Taxis are rarely required because there’s a transit stop within walking distance of where you need to go.

Having experienced public transport that doesn’t suck, I assure you that visiting places where most people drive seems very primitive and inefficient. Not just in terms of space and congestion, but also time. As we know, when you are driving, you shouldn’t be doing anything else. On public transit I can read a book, text, surf the net or even close my eyes! Even with a short commute, that’s like an hour a day that you can spend doing “something” instead of steering your car or supervising the autopilot. That hour a day really adds up.

As an aside, I think a few people who are working from home during this plague are beginning to notice the extra time in their day…

Reminds me of this:

https://www.philohome.com/sensors/filoguide.htm

Back in, I believe, the 60s, there was an experimental buried-cable guidance system they hyped. IIRC it was something like the Barret Guidance System.

The problem with *any* non-vision-based system is that it isn’t what humans use. In winter in cold climates, the roads are sometimes covered with snow for days, and the road margins stay buried for weeks. Humans just form new emergent lanes. These lanes often aren’t the “correct” lanes that a non-vision-based absolute positioning system would find.

Doesn’t take that long for ice tracks to form. Then if you’re doggedly trying to drive where the road should be, you’re constantly going in and out of the grooves.

I’ve been waiting for this shoe to drop for years. OK, ground-penetrating radar is like half a shoe-drop.

The best autonomous vehicle system will be somewhat smart vehicles, talking amongst themselves and to a central traffic control, and following embedded paths. Hoping for perfection by trying to make individual car automation use only visual cues intended for humans is silly.

Even easier than that. All you need is a little FFT, after filtering out the engine noise, compartment noise, and noise from other cars…

Listen to the echo of the tires on the road: the speed of sound is slower in air than in water, and slower in water than in, say, solid rock. (I’m not going to say this is something you can just throw a 555, an Arduino, or an ESP32 at; you will need an FPGA to update the vehicle profile to account for weight, wind, tire wear, and speed, and even the occasional leaves, plastic bags caught on the bottom of the car, antenna decorations, bug splats, dust, pollen, and grime.)

You can have a conversation with someone in a separate car in a parking lot, with your windows up. Range is based on the shape of the vehicle’s compartment and the profile of the tires. It works like a tuning fork.

Dirt roads or gravel would never be self-driving anyway! (The MIT challenge across the desert notwithstanding. Given the number of cars, you would have to encode every single car with a different LIDAR wavelength if it is an active system.)

Passively? We saw the fake speed limit and pedestrian projector trick.

RTK GPS can get to centimeter precision.

Except for the wide swathes of “zero bar ranch”, every cell tower could host a base station to be used as a reference (it knows where it is and doesn’t move, and the data usage for the corrections would be small).
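The base-station idea can be sketched in its simplest, position-domain (DGPS-style) form: the station compares its surveyed position with what its receiver reports and broadcasts the difference, and nearby receivers apply it. This is a simplification. Actual RTK works on per-satellite carrier-phase measurements, which this sketch does not attempt. Coordinates are assumed to be local east/north meters, and the function names are hypothetical.

```python
def base_correction(surveyed, measured):
    """Correction a fixed base station would broadcast: truth minus fix."""
    return tuple(s - m for s, m in zip(surveyed, measured))


def apply_correction(rover_fix, correction):
    """Apply a nearby base station's correction to a rover's raw fix.

    Only valid if the rover sees roughly the same errors as the base,
    i.e. it is close by and using the same satellites.
    """
    return tuple(r + c for r, c in zip(rover_fix, correction))


if __name__ == "__main__":
    # Hypothetical local east/north coordinates in meters.
    tower_surveyed = (0.0, 0.0)
    tower_fix = (1.5, -2.0)   # what the tower's own receiver reports
    corr = base_correction(tower_surveyed, tower_fix)
    car_fix = (101.5, 48.0)   # raw fix from a car near the tower
    print(apply_correction(car_fix, corr))
```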