The leap to self-driving cars could be as game-changing as the one from horse power to engine power. If cars prove able to drive themselves better than humans do, the safety gains could be enormous: auto accidents were the #8 cause of death worldwide in 2016. And who doesn’t want to turn travel time into something either truly restful or alternatively productive?

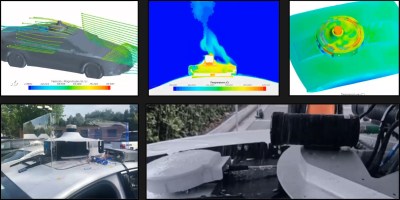

But getting there is a big challenge, as Alfred Jones knows all too well. He is the Head of Mechanical Engineering at Lyft’s level-5 self-driving division, where his team is building the roof racks and other gear that give the vehicles their sensors and computational hardware. In his keynote talk at Hackaday Remoticon, Alfred walks us through what each level of self-driving means, how the problem is being approached, and where the sticking points lie between what’s being tested now and a truly steering-wheel-free future.

Check out the video below, and take a deeper dive into the details of his talk.

Levels of Self-Driving

The Society of Automotive Engineers (SAE) established a standard outlining six different levels of self-driving. This sets the goal posts and gives us a way to discuss where different approaches have landed on the march to produce robotic chauffeurs.

Alfred walks through each in detail. Level 0 is no automation beyond cruise control and ABS brakes. The next level up, “driver assistance”, adds features like lane holding and distance-aware cruise control. Level 2, “partial autonomous driving”, combines two or more of these functions. (Tesla’s “Full Self-Driving” mode is truly only partially autonomous.) In these modes, the driver is responsible for watching the system and deciding when its use is safe.

Level 3 allows the system to turn itself off and hand control back to the driver. This is the first level where the car starts to make higher level decisions about the overall traffic situation, and is the beginning of what I think is the general public’s de facto definition of self-driving. With Level 4 the vehicle should be able to drive itself completely autonomously in restricted areas and get itself safely to the side of the road even if the driver doesn’t take over. Level 5 is the top and the holy grail: the vehicle controls itself in any condition a human driver would have, with zero human intervention or oversight necessary.
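As a compact summary of the levels described above, here is a minimal Python sketch. The enum member names and the `driver_must_supervise` helper are illustrative assumptions made for this example, not wording from the talk or the SAE standard.

```python
from enum import IntEnum


class SaeLevel(IntEnum):
    """The six levels as summarized above (member names are illustrative)."""
    NO_AUTOMATION = 0      # nothing beyond cruise control and ABS
    DRIVER_ASSISTANCE = 1  # e.g. lane holding or distance-aware cruise control
    PARTIAL = 2            # two or more assistance functions combined
    CONDITIONAL = 3        # system can hand control back to the driver
    HIGH = 4               # fully autonomous, but only in restricted areas
    FULL = 5               # any condition a human driver could handle


def driver_must_supervise(level: SaeLevel) -> bool:
    """Up to and including Level 2, the driver watches the system."""
    return level <= SaeLevel.PARTIAL
```

For example, `driver_must_supervise(SaeLevel.PARTIAL)` is true, while the same call with `SaeLevel.CONDITIONAL` is false, which matches the point that Level 3 is where the car starts making higher level decisions itself.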

Why Is This So Hard?

Generally speaking, this is a sensor problem. By and large the actual control of the vehicle is a solved problem. Alfred mentions that there are important issues to consider, like latency between self-driving control hardware and the vehicle’s computer systems, but making the car go where you want it to is already happening. From millisecond to millisecond, those decisions of where the vehicle should go are the very difficult part.

Pointing out the obvious, the road is crazy, people are unpredictable, and changes in road conditions like weather, closures, and construction make for an ever-changing playing field. Couple this with roadways that were designed for human drivers instead of robot operators and you have a crap shoot when it comes to interpreting sensor data. Interim solutions, like traffic lights that communicate directly with self-driving cars rather than relying on the sensors to detect their state, are possible ways forward that involve changes outside of the vehicles themselves.

Buzzing About Sensor Fusion

The most interesting part of the talk is Alfred’s discussion of sensor fusion. It’s an often thrown around buzzword, but rarely deeply explained with examples.

Some combination of cameras, lidar, and radar is used to sense the environment around the vehicle. Cameras are cheap and high resolution, but poor at determining distance and can be obscured by something as simple as road spray. Alfred calls lidar “super-fantastic”, able to depth map the area around the vehicle, but it’s expensive and can’t detect color or markings. It’s also low-reliability because lidar sensors include moving parts. Radar can see right through some things that foil the other two, like fog, but its output is very low resolution.

Combining all of these is the definition of sensor fusion, and one great example of how that works is the exhaust from a vehicle parked on the side of the road. Lidar picks up particles in the cloud and would slam on the brakes if this were the only input for decision making. Radar sees right through it, knowing there is no threat. And the camera can correlate that a parked vehicle has an exhaust pipe and that what the other sensors have detected fits the expectation from past learning.
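The exhaust-cloud example can be sketched as a toy decision rule. This is only an illustration of the idea, not how Lyft or anyone else implements fusion: the `Observation` fields, the `"exhaust"` label, and the rule itself are assumptions invented for this sketch.

```python
from dataclasses import dataclass


@dataclass
class Observation:
    """One snapshot of the region ahead (all fields are hypothetical)."""
    lidar_sees_obstacle: bool  # lidar returns bounce off something
    radar_sees_obstacle: bool  # radar reports a solid reflector
    camera_label: str          # e.g. "exhaust", "pedestrian", "unknown"


def should_brake(obs: Observation) -> bool:
    """Toy fusion rule: brake unless the sensors agree the return is harmless."""
    if obs.radar_sees_obstacle:
        # Radar passes through exhaust and fog, so a return is treated as solid.
        return True
    if obs.lidar_sees_obstacle:
        # Lidar alone would brake; ignore it only if the camera explains it.
        return obs.camera_label != "exhaust"
    return False
```

With lidar reporting a cloud, radar reporting nothing, and the camera labeling the scene as exhaust, `should_brake` returns false. Change the camera label to `"unknown"` and the same lidar return triggers braking, which is the cautious default in this sketch.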

Actually Doing It

This is fun to talk about, but Alfred Jones is actually doing it, and that means diving into the minutiae of engineering. It’s fascinating to hear him talk about the environmental testing employed for proofing the sensor array against huge temperature ranges, wet/humid conditions, and all challenges common to automotive applications. His thoughts on sensor recalibration in the Q&A at the end are of interest. And all around we’re just excited to hear from one of the engineers grinding away through all barriers in pursuit of the next big breakthrough.

Despite all the hype about using AI for self-driving cars, currently no implementations actually use any machine learning. Instead, all the detection and instruction is written by human programmers. It’s a huge problem to solve which requires absurd amounts of computation power, so I’m less surprised and more just disappointed.

AI techniques like classic machine learning are used in the industry. But newer techniques like deep learning are not used yet.

The main reason is not lack of computational power, but reliability and predictability. Classical algorithms are also computationally expensive when faced with the amount of data produced by the complex sensor setups.

Actually, I would say deep learning approaches are even faster nowadays. But current state-of-the-art research does not yet give enough insight into what a deep learning system has learned to deem it reliable and predictable.

We should NOT push fully self-driving much further. Especially regarding normal vehicles inside cities. It just doesn’t add up.

At the end of the day pedestrians and cyclists will be the most unexpected entities in traffic. Especially cyclists often don’t give a fuck about rules. And always need to be in focus.

So how do we solve that problem? There has to be constant surveillance about anything that could in any way be traffic relevant. Well yes, cameras are cheap. But do we want that? I don’t.

Automated driving on highways or wherever we have less risk for trains, trucks, you name it, will probably work out, but I don’t see autonomous driving and to be honest, I don’t really want to. I think especially the ethical side of all of this should get more into focus.

Self-driving cars generally detect moving entities and avoid collisions with them regardless of what they are. If a cyclist cuts you off, you may swerve into the path of another vehicle, while a self-driving car would be able to critically evaluate the best course of action to avoid any collision at all. As far as surveillance concerns go, there are already cameras everywhere in our phones which we always have out in public. The equipment for mass surveillance is already deployed, we just need to promote openness, transparency, and legislation that prevents its use for undesirable purposes.

Self-driving cars are a future that’s better for everyone. Your ethical concerns are legitimate, but try to imagine how we can have a society that is both more ethical and more advanced.

No, self-driving cars are not a good idea, because you will have some evil entity like Google, Apple or Amazon who calls the shots as to whether you are allowed to drive or not. In short it destroys personal autonomy and you become an object to be managed by Big Tech.

Advanced, for whom? Many so called recent advances like NEST and those digital big peeping toms like Alexa, Siri and others allow for a level of surveillance that would make Stalin and Hitler giddy.

To be fair the dystopian future you are painting is already here (has been for some time). Smart phones are infinitely more capable, camouflaged and widespread than any standalone device like a nest or alexa.

Almost all new cars today have some sort of “phone home” feature, either to enable a workshop to do road-side assistance (Is it safe to continue, or should i call a tow truck?), or to allow cars used for business to track their use. My 2015 Audi’s basic navigation-system pulls traffic-information from somewhere. The same system is connected to the engine-computer and is most definitely capable of disabling the car completely. And this car is almost 6 years old! Same car also is capable of steering, braking, accelerating and changing gears (From Park to Drive, etc) all on its own, as it’s gearbox-by-wire. In essence, with sophisticated enough software, VW could make this car drive itself back to their factory, or kill its own passengers and at least the ones in 1 other car, before anyone can react, or drive into a crowd and kill hundreds. And they will be able to do this simultaneously across all their cars in the whole world, at the same exact time. Yes, scary stuff, but so is the atomic bomb, biological weapons and all the stuff we don’t know of yet. That is the price for being connected and having all the nice things we have today.

So, in short, the “Evil entities” already have that control, but ultimately you decide if you want to use their products. It’s not like the police come to your house and install Alexa against your will, or VW force you to drive their car?

It’s all a cost-benefit calculation. Some people can accept the cons like surveillance, when compared to the convenience that e.g. Alexa has to offer, and those people might see higher convenience or lower cost than you and i might. I accept that VW has a lot of data on how i drive, because it also allows me to drive a modern, efficient and safe car, because VW can use that data to make cars more efficient, cheaper and safer, and all the while i also get access to at least some of the data they collect, in real-time, allowing me to monitor the health of my battery, how much fuel is in the tank or get notifications if the car is towed, broken into or hit by another car while parked.

What if a car cuts off a bike, and the self-driving car rides into the bike that needs to make space?

Some cars make dangerous and unexpected maneuvers. I am not that confident a self-driving car will react this well to unexpected, i.e., untrained situations. Granted, many people don’t react well either.

But to really have a more safe future isn’t trivial at all.

People react horribly, compared to any computer.

depends on the programming

I agree. But do u really think that people will not like it? Like me and you?

This is actually a pretty famous problem! The autonomous car has a lot more “brain-power” than we do, so it can essentially freeze time and think about what to do in a given situation. Humans react on instinct, and will almost always try to avoid the cyclist, because they get tunnel-vision and haven’t seen the family on the sidewalk. Some people have great peripheral vision and have already noticed the family on the sidewalk, but the average person driving is stressed, hasn’t slept well, is hungry, just got a notification on their phone or is listening to an unsettling news-story on the radio.

The autonomous car will in a perfect world minimize losses because it has plenty of time to analyze the situation, but this raises another question:

In a situation where the autonomous car decides it can only do 2 things: Kill person A or person B, who does it kill? “Doing nothing” is also a decision, so it has to make a choice. What if person A is a small child, and person B is a successful business-owner? What if person A is white and person B is black? What if person A is actually 2 criminals and person B is an innocent child? What if person A is the owner of the vehicle (for example, if option A is to drive off a cliff) and person B is someone else? What if the computer chooses the right thing only 9/10 times?

And who is ultimately responsible for making that choice? The business that sold the car? The programmer who implemented the algorithm? The driver, that bought the vehicle but didn’t have any way of affecting what the algorithm did?

All very interesting questions, but i have no doubt that the world will be largely better off with a computer driving than humans, even though it might make bad choices sometimes. I have experienced first hand the abilities of a computer to make emergency stops in a parking lot because someone came flying out from between the cars and i didn’t even see what happened because i was looking for a parking spot in the other direction! Person in question probably wouldn’t have died, but they might have been bruised pretty badly. In this case both of us got quite a shock, but after ensuring both were okay, we just continued on with our day.

> The autonomous car has a lot more “brain-power” than we do, so it can essentially freeze time

No it doesn’t, and it can’t. It trades speed for accuracy and ability. The CPU is powerless to make complex judgements or even to perceive things properly in the short time you want it to act, because it can’t cross-compare huge databases and make statistical analyses to say whether this pixel blob or radar cross-section looks more like this or that.

It actually operates on very crude “rules of thumb” that are essentially just checkbox lists over a few items that are fast to compute from the huge stream of data the car is getting from its sensors, and as a result it only works “most of the time” because the algorithm is simply too dumb.

It’s pointless to discuss whether the autonomous car should decide to do A or B in a situation where it is likely to not comprehend the situation at all, and it doesn’t matter that it can iterate through its program a thousand times per second, because even a simple moral choice for such programs is like asking a pigeon to write a Shakespeare play. You can dip its feet in ink and pretend it’s writing, but that’s just you.

What I’m saying is, before the car can even judge whether to kill A or B, it has to recognize that there are persons A and B.

And we’re still at the stage of development where this only happens in 80-90% of cases, because the computer is using extremely simple means of even detecting pedestrians. Something like comparing the width-to-height ratio of a bunch of pixels that appear to be moving against the background – anything that can be reduced to a number with a threshold. If the person is too fat, or they aren’t moving “correctly”, the car goes “huh, nothing there” and drives on.
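To make the kind of heuristic described in this comment concrete, here is a minimal sketch of a single-threshold check. It illustrates the commenter’s claim only and is not taken from any real detection system; the function name and the ratio bounds are made-up assumptions.

```python
def looks_like_pedestrian(width_px: float, height_px: float,
                          min_ratio: float = 0.25,
                          max_ratio: float = 0.6) -> bool:
    """Classify a moving pixel blob by its width-to-height ratio alone.

    The ratio bounds are hypothetical values chosen for illustration.
    """
    if height_px <= 0:
        return False  # degenerate blob, nothing to classify
    ratio = width_px / height_px
    return min_ratio <= ratio <= max_ratio
```

A blob 40 pixels wide and 100 tall passes, while one 80 pixels wide and 100 tall is rejected, which is exactly the failure mode the comment worries about: anything outside the expected shape is treated as “nothing there”.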

>Self-driving cars generally detect moving entities and avoid collisions with them regardless of what they are

Except they don’t.

Like in the examples given, the car has to decide whether what it sees is a false positive or a real object. If it sees a shopping bag flapping in the wind and brakes, it can cause an accident. For this reason, if it can’t or doesn’t make a positive ID for the obstacle that identifies it as something it should avoid, it treats it like air. The car has no self-preservation instinct – it’s just a mindless program – so it doesn’t care. If the conditions to brake are not met for any reason, it will drive you over.

I notice cars being reckless rather than cyclists cutting you off. Cars ignore speed limits, overtake even though you are taking a turn, and drive too close to pedestrians or cyclists. Might be location dependent, or maybe a car-owner’s view. The wish to overtake a bike at red lights is also a pet peeve. Rarely can they stay behind or wait briefly, and therefore cars make dangerous maneuvers. Annoying.

Other than that, I agree that self-driving cars are a very hard problem to solve.

Urban city scenarios are seen as the hardest scenario for automated driving. So that is not the first thing we will see.

City scenarios are way less structured. You have winding roads with steep slopes, complicated lane markings, and yes, lots of pedestrians and cyclists.

Pedestrians are very hard to predict by the classical tracking algorithms. Does the pedestrian just make way for somebody else or does she cross the road? Humans are good at picking up those small cues and then evaluate the general scenario and context – and have empathy. For algorithms this prediction is still hard to do in a reliable way.

Cruise is putting its cars on the streets of San Francisco, fully autonomous, this winter.

And we’ll see how long that lasts (or how soon they kill someone)

I’d like to see how well an autonomous car could handle Amsterdam or Utrecht inner city driving. If it can handle the cyclist and pedestrian volumes there, I might start thinking it’ll actually work

They already handle that far better than humans. Wake up.

Humans and machines operate differently. To ask a machine to drive in a world occupied by humans is as absurd as asking a human to efficiently perform binary operations. Create dedicated areas for ML operated vehicles (no humans, sensor driven access control and guidance, etc) and let them operate in the environment created for them. They can excel at operating in poor weather since their route is automated and they could know their precise location down to the millimeter through various sensors. Putting a set of cameras on the average street will create horrible problems.

Counterpoint: My car is more vigilant than I am, already.

That level of electronics and complexity is not needed in a vehicle. The average car on the road today has more cpu power than most military aircraft.

That’s a very bold claim, reference?

Well…

If you consider “most” to include all the military aircraft ever built, beginning with WWI, you can see truth to the claim.

B^)

@Ren … touche lol, I assumed he meant military aircraft still in active service/operation

True!

“””The MMC7000 that equips the more modern F-16, including all F-16 Europeans who benefited from the MLU (mid-life Update) modernisation, has always that 10 MB of memory but its RM7000A processor, designed in the early 2000s, works between 300 and 400 MHz.”””

https://aviation.stackexchange.com/questions/52853/what-cpu-does-the-f-16-use

Hyper vigilant cars will be taken advantage of in traffic. If a cyclist/pedestrian sees a self driving car approach, they can just jump out in front of it, knowing that the car will do whatever is necessary to avoid them.

Car drivers too.

When a majority of cars can drive on the highway autonomously, can the left lane be for passing or getting there faster? This assumes that everyone in the left lane is travelling faster and safer. Can speed limits be dynamically set for conditions? In a 5G connected car, any changes to road safety would be quickly communicated and all traffic slow down (or stop). Any damage to the road, paint, or other markings referenced by the vehicles would be communicated to DOT or highway staff for remediation. This is all an enabler for going faster, but to subjective people, that might be too scary? Emotions trump logic. We will end up going slower.

Speed is one thing, but how close vehicles drive is also very interesting. Computers basically have no reaction-time, and the cars can communicate, so they will be allowed to go 130km/h and drive mere centimeters from the bumper of the car in front, so any road will be able to move at least 4 times as many cars. I wouldn’t mind “only” going 110 km/h, if it means that traffic will completely disappear, so my average speed will be those 110km/h. Right now, in only moderately dense traffic, my average speed from the on-ramp to the off-ramp going to my parents is usually only slightly above 100 km/h, even though my adaptive cruise-control is actually set to 140 km/h GPS, but because of trucks overtaking, grandma in the fast-lane or just general traffic, the speed will often get as low as 85 km/h.

My parents live in the second major city that way, almost exactly 100 km highway away, and we both live very close to the on/off-ramps, and it takes pretty much exactly an hour to get to them. At night when i can go 140km/h the whole way, the same journey can take as little as 40 minutes.
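The travel times quoted here follow from simple arithmetic, sketched below as a sanity check. The `travel_minutes` helper is a hypothetical name, and the sketch assumes a constant average speed over the whole 100 km.

```python
def travel_minutes(distance_km: float, avg_speed_kmh: float) -> float:
    """Minutes needed to cover a distance at a constant average speed."""
    return distance_km / avg_speed_kmh * 60


for speed in (100, 110, 140):
    # One line per speed mentioned in the comment, rounded to a tenth of a minute.
    print(f"{speed} km/h: {travel_minutes(100, speed):.1f} min")
```

At 100 km/h the 100 km trip takes 60 minutes, at 110 km/h roughly 54.5 minutes, and at 140 km/h roughly 42.9 minutes, close to the “as little as 40 minutes” figure.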

We will never get past the problem of a random cosmic ray event flipping a processor cache bit or memory bit.

I am, and shall remain, “of little faith.”.

That’s the simplest problem to solve. There are promising solutions for this being worked on for space travel.

I enjoyed getting around Tokyo by subway. Long distances are no problem, and the train doesn’t have to worry about cyclists or pedestrians. When I arrive there are no parking problems and I can walk to where I need to be.

If I want to go further I can take the Shinkansen. It’s almost as if separating transportation machines and people is better than trying to mix them all together.

The biggest causes of accidents are speeding and impairment. Self-driving cars must be programmed to never exceed the speed limit. Otherwise the manufacturer will have a million lawsuits. For this reason everyone in America will turn off self-driving unless they plan to sleep, have sex, or watch video, since speeding is considered a God-given right in the USA so long as you don’t get caught. Based on my commuting experience, 80% of all drivers are speeding or trying to speed. 15% are impaired by trying to figure out some feature of the complex and distracting dashboard, texting, drunk, elderly, angry or all of these simultaneously. Roughly 5% of drivers are competent, attentive and obeying all traffic laws.

Self-driving cars will only catch on for taxis and similar services. It will be a tossup whether the overall benefit exceeds the legal costs for all the resulting lawsuits.

“speeding is considered a God-given right ”

maybe because speed limits are totally arbitrary, and make no sense whatsoever?

the idea that accidents happen because of speeding is the most absurd nonsense i have ever heard. i guess if you never leave the parking lot you will never have accidents?

in case you have not noticed, the purpose of private transport is to go from A to B. is it really surprising that people try to do it a bit faster than it would have been in 1700?

due to absurd speed limits, and other arbitrary government measures like never ending road works, there are motorways in europe where the real average speed is 70kmh or less. that’s less than 50mph. soon a horse will become an attractive proposition. and it’s green!

too bad that the economic consequences of limited mobility will bring us all back to the middle ages, when you had to marry the daughter of your neighbour

Given the default speed limit in the US seems to be a positively snails pace 45 MPH/72 kmh for very long stretches of perfectly good highway (maybe 55 mph/88 km/h in some stretches, anything over seems to be rare from what I’ve seen) I would sort of agree with that view. 70km/h on the highway in Europe is not THAT common in my experience. The Netherlands lowered it to 100km/h in most places quite recently (was 120 to 130 before), most of Germany is 120 (and some unlimited, or 100 near cities). Belgium is also mostly 100 or 120 km/h (with some 80 sections due to bad roads). France I haven’t been to in 10 years so I couldn’t say. Italy I haven’t seen enough of to really say much.

> The biggest causes of accidents are speeding and impairment.

Nope. Not even close. It’s like you literally just made that up.

self driving cars are a stupid idea. hell, even the name can hardly be stupid.

i have a two axis autopilot on my airplane and i can let it fly for hours without any problem. but let an AP drive my car? not happening.

unless all cars are centrally controlled and coordinated. which means, private mobility becomes a centrally controlled and planned public transport system, but even more at my expenses. which i suspect is the final goal of governments

under these conditions, i will rather just walk, and give up cars altogether.

Not a lot of opposing traffic, obstacles, stuff up there to hit, that’s why it’s easy. No one has thus far collided with the sky. However there have been flights ending in impact with cumulus graniteus while on autopilot.

There have traditionally been low penalties for humans cutting off bicycles. Apparently we hold automation to a higher standard.

Very true. I am a conscientious cycler and have been hit five times in three different cities in the USA, each time by a driver who wasn’t paying attention. I have had dozens of close calls. I’m all about the auto-piloted automobile. Can’t be much worse than human drivers.

I hear all the time, “what happens in ?” I always think, “what would happen today?” Often the answer is the driver would hit the person/cyclist/other car. Happens every day.

Good! Leave some breathing room for the rest of us, fuck up the planet a little slower than you are.

You should take to youtube and search for ‘Tesla FSD beta’. You’ll see some really impressive stuff. Not perfect but the step-change compared to the previous version is very big. Imagine another 2 or 3 of these leaps in the next 5 years or so and it is easy to imagine self-driving cars being the norm before 2030.