It’s an exciting time in the world of microprocessors, as the long-held promise of devices with open-source RISC-V cores is coming to fruition. Finally we might be about to see open-source from the silicon to the user interface, or so goes the optimistic promise. In fact the real story is considerably more complex than that, and it’s a topic [Andreas Speiss] explores in a video that looks at the issue with a wide lens.

He starts with the basics, looking at the various layers of a computer from the user level down to the instruction set architecture. It’s a watchable primer even for those familiar with the topic, and gives a full background to the emergence of RISC-V. He then takes Espressif’s ESP32-C3 as an example, and breaks down its open-source credentials. The ISA of the processor core is RISC-V with some extensions, but he makes the point that the core hardware itself can still be closed source even though it implements an open-source instruction set. His conclusion is that while a truly open-source RISC-V chip is entirely possible (as demonstrated with a cameo Superconference badge appearance), the importance of the RISC-V ISA is in its likely emergence as a heavyweight counterbalance to ARM’s dominance in the sector. Whether or not he is right can only be proved by time, but we can’t disagree that some competition is healthy.

Take a closer look at the ESP32-C3, with our hands-on review.

s/Speiss/Spiess/g

Now you have two problems

How is the Zephyr OS and radio support?

I am afraid I don’t understand the answers here. The guy’s last name is Spiess, not Speiss. Just click on the video link and see for yourself.

The /// construct you used is from a programming tool called regular expressions. There’s an old computer science joke: I had a problem, so I wrote a regular expression to solve it. Now I have two problems.
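For anyone unfamiliar with the notation, `s/Speiss/Spiess/g` is a sed-style substitution: replace every occurrence of the first pattern with the second. A minimal Python sketch of the same operation, using the standard `re` module (the function name and sample sentence are made up for illustration):

```python
import re


def fix_name(text: str) -> str:
    """Rough equivalent of the sed command s/Speiss/Spiess/g."""
    # re.sub replaces every non-overlapping match by default,
    # which is what the trailing 'g' (global) flag requests in sed.
    return re.sub(r"Speiss", "Spiess", text)


# Hypothetical sample text, not taken from the article.
sample = "Andreas Speiss explains RISC-V; Speiss also covers the ESP32-C3."
print(fix_name(sample))
```

Since the pattern here is a plain literal, `text.replace("Speiss", "Spiess")` would do the same job without a regular expression, which is more or less the point of the joke.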

I will honestly say that it is technically hard to make a properly open source piece of silicon.

And the advantages of having a fully open source chip are also largely a debatable topic.

I can see value in RISC-V being an open source, free-to-use ISA that provides needed competition against ARM in a lot of fields. Though, I can also foresee a risk that RISC-V becomes as scatterbrained as GNU/Linux is with regard to different interpretations of it. This is something ARM already somewhat “struggles” with.

But a fully open source chip is a different topic.

Yes, RISC-V is a step in that direction. But only to a similar degree as the 7400 series of logic chips.

Open sourcing actual manufacturing details and mask layouts can create legal issues with the foundry making the chip, since most “cutting edge” foundries have their own secret sauce for making what they are good at. (In short, it is a lot more than just “We can draw a feature that is X nm wide.”)

One could, though, go to a fab that is more relaxed, or one that allows one to dictate the whole manufacturing setup. I.e., the fab only provides the tools, not expertise on effectively using the tools, nor advice on laying out the design.

One can go to an ASIC design firm, but they too tend to be fairly strict about their own secret sauce.

So for a truly open source chip, one would largely have to make the whole layout for it without the above help.

If one knows what one is doing, either as an individual, or as a community, then yes, it isn’t impossible.

But I doubt that the chip would have stellar performance or power efficiency for the first few iterations of it.

Not that these two need to be of high importance.

In regards to supply chain security, and the ability to validate that one truly has what one expects to have, hardware, unlike software, isn’t really something one can just run a checksum on. Hardware backdoors can be nearly impossible to find, even if one knows that they are there.
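To make the contrast concrete, this is roughly what “just running a checksum” looks like on the software side. It is a minimal sketch using Python’s standard `hashlib`; the function names and the firmware bytes are hypothetical stand-ins, and a real workflow would also need a trustworthy channel for the published digest.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of data as a hex string."""
    return hashlib.sha256(data).hexdigest()


def matches_published(data: bytes, published_hex: str) -> bool:
    """Compare a local image against a digest published by the vendor."""
    return sha256_hex(data) == published_hex.strip().lower()


# Hypothetical firmware image and its "published" digest.
firmware = b"example firmware image"
published = sha256_hex(firmware)

print(matches_published(firmware, published))            # unmodified copy
print(matches_published(firmware + b"\x00", published))  # one byte appended
```

A single changed byte in the software image changes the digest, while a few extra transistors on a die leave nothing comparable to hash.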

Some backdoors can be implemented with a few transistors and a couple of clever traces; good luck finding that in the mess of wires and tens of millions of transistors we call a CPU core. If you have the tools to even see features that small, that is. Without also destroying the chip in the process….

And since the chip is open source and likely developed by a community, it gives bad actors more access to plan their moves, either to make a discreet on-chip backdoor and seed it into the production chip somewhere in the pre-production pipeline, or to make a clone to poison the supply line with. In short, “hardware security” isn’t a strength of open source.

And this leaves only a few real reasons for wanting a truly open source chip.

The main one being better documentation for software development.

Another being that one can more deeply study how the chip works and get a better understanding of the underlying system, instead of it just being a black box full of magic.

Some people tout that costs can be reduced. And this could potentially be true.

But the fact that the chip is “open source” makes it a bit of an iffy thing to invest in from a manufacturing point of view. Manufacturing chips is far from cheap, and the main cost is making the photomasks and setting up the machines. After that, the chips are fairly cheap. I.e., a manufacturer can be hesitant to make that initial investment if they don’t see sufficient security in getting the needed income from each chip produced. Even if the current market price might be on the high side in their opinion. (Since it can be hard to know if another manufacturer is thinking the same thing. Two birds striking the same prey can quickly result in a mid-air collision.)
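The argument above is an amortization one: a large one-off cost (masks and setup) spread over however many chips actually sell, plus a small per-chip cost. A rough sketch of that arithmetic follows; every figure in it is a made-up placeholder, not a real foundry price.

```python
def cost_per_chip(one_off_cost: float, marginal_cost: float, volume: int) -> float:
    """Per-chip cost when a one-off cost is spread over `volume` chips."""
    if volume <= 0:
        raise ValueError("volume must be a positive number of chips")
    return one_off_cost / volume + marginal_cost


# Hypothetical figures: 1,000,000 for masks and setup, 0.50 per chip after that.
ONE_OFF = 1_000_000.0
MARGINAL = 0.50

for volume in (10_000, 100_000, 1_000_000):
    print(f"{volume:>9} chips: {cost_per_chip(ONE_OFF, MARGINAL, volume):.2f} each")
```

With these placeholder numbers the per-chip cost falls from 100.50 to 1.50 as volume grows a hundredfold, which is why a second manufacturer splitting the same market can sink the business case for both.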

In my own opinion, I do agree with the statement that RISC-V’s main importance is that it competes in the embedded sector against ARM. For better or worse. Whether RISC-V chips are “truly open source” is largely irrelevant.

As you say, it’s hard to audit a hardware device for vulnerabilities. I remember an exploit where a spurious non-functional circuit was placed near a latch that held a security-relevant bit, and the attack was executed by cycling the circuit so hard that it flipped the latch, a la Rowhammer. You are right that FOSS does not prevent this style of attack, but the proprietary model makes it practically impossible to detect it: you just have to have complete trust in the designer/vendor integrity, while a FOSS design can at least be version-controlled, diff-ed against a known-good part, etc., as you yourself say, “instead of it just being a black box full of magic”.

I would say that the advantage of an open design is the transparency of the design workflow: in principle, every feature can be traced to artifacts in the previous stage, all the way back to the top-level source.

The disadvantage with open source and a community driven design is that any attacker has more time to plan their moves, and also more resources to base them on. And there are a lot more routes for the attacker to hide their tracks behind.

In a closed source design, the only people who have access to the design files are the people designing it. If the end product turns out to have a backdoor, then it is easier to pinpoint where said backdoor came from.

There is a huge difference between “we made a mistake.” and “Someone intentionally altered the design.”

From a standpoint of finding flaws, open source has huge advantages.

From a standpoint of ensuring that copycat chips with backdoors don’t infiltrate the supply chain, open source is the last thing you want…. (At least before the production run is made. After it is done and the chips have gone out to the customers, then the thing can be made open source and scrutinized heavily.)

Security through obscurity isn’t really security.

But obscurity takes time to circumvent, and for hardware and supply lines that time can be sufficient to count as real security. (The time it would take an attacker to infiltrate the supply line with fake chips would be on the order of a few weeks. So there is some time of fairly good safety.)

The next problem with open source for security in the world of hardware is frankly that one of the goals of RISC-V is for the chips to largely be commodities. This introduces the very real problem of multiple sources for the same type of chip. This increases the risk of supply line tampering by any interested attackers.

Now, most supply line attacks tend to be fairly targeted in their approach; in short, only people who need security actually need to worry about it. Making chips with backdoors to target specific individuals/businesses isn’t really the most “economical” thing in the world, so the attacker won’t be mass targeting at random. (Unless the attack is broadly applicable against most people, like the security chips in payment terminals. (And these things have had multiple “successful” attacks.))

If one wants to make a security centered chip open source, then that should only be after the chip is discontinued from production. (One can publish the documentation earlier, but with sufficient margin to ensure that supply line attacks are still practically impossible when the last customers get their chips.)

In the end.

What is logical in regards to software security isn’t always applicable to hardware security.

They are two completely different fields.

Obscurity takes no time to circumnavigate with bad actors or bad security by the keepers of the secret. With open source, on the other hand, there are so many eyes on the design that real flaws that can be abused should be spotted and fixed, or at least mitigated in software afterwards, because the flaw is known to everyone. Even if a malicious actor spots it first and uses it, the method is available for all to find, so the odds are far better that it will be found and fixed, rather than producing lots of head-scratching “we have no idea how this is possible” moments because the security researchers have no idea how the box of magic works…

So for me that isn’t a winning point..

It’s also far easier to audit chips if their entire behaviour is known in advance. That makes it much, much harder to actually hide anything in the silicon, as out-of-spec and unexpected behaviour is findable. Black boxes full of magic can do anything at all when poked in strange way x, whereas you know what the fully open silicon should do under that same odd probe…

As for the architecture being used as a commodity chip for all uses, security isn’t the primary concern there, but that doesn’t mean it isn’t still an important focus. Plus, the security of anything is very much defined by how it’s used, not just the part you use. Take a really crappy, easily picked lock, or a very flimsy door in a dark alley: if that is the only part of your security, you should expect to get hammered for it. But combine that same lock or flimsy door with the right lighting, cameras, guards, sensors and other doors, and it might be a weaker link, but it’s not opening the kingdom on its own.. Unlike if all the security features and doors were electronically controlled and that single point of failure, the electronic controller, was dubious in security terms.

“Obscurity takes no time to circumnavigate with bad actors or bad security by the keepers of the secret.” is true, if your own development team leaks the design to the attacker, then yes you have a security issue. But a good design team shouldn’t have corrupt members in it. This can be a bit hard to ensure on the other hand.

And my statement isn’t strictly about the production chip itself having an intentional security flaw in it. But rather that an open source design aiming at security has a large risk of having such copy cat chips on the market.

Whether the flaw affects all users of it or not is a different question. Whether it can be patched in software is also a different question. (Though, software patchable backdoors are honestly quite poorly made back doors…)

And in regards to auditing the chip to see if it behaves correctly.

A well implemented back door doesn’t affect the performance of the chip. And any increase in power consumption would be negligible as well, since the targeted chips would contain millions of transistors or more, while the backdoor might only use a handful of transistors. That makes it a needle in a haystack to find.

A backdoor like this is typically implemented as a value check on an instruction or bus. This triggers the backdoor hardware to in turn change some control state in the core or larger processor as a whole. For example, it could make the CPU consider our thread as having kernel level access to anything we like. (Typically it checks more than just one value in more than one place. So good luck scanning through all possible value combinations in all locations; even for a 4+ GHz CPU, this is still going to take many years.)
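A back-of-the-envelope check of that “many years” figure, as a sketch under loud assumptions: the trigger is modelled as a single exact-match comparison on one value, and the tester is assumed to try one candidate value per clock cycle at 4 GHz. Real triggers, as noted above, usually check several values in several places, which only makes the search larger.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def backdoor_triggered(bus_value: int, magic: int) -> bool:
    """Toy model of the comparator: fires only on an exact match."""
    return bus_value == magic


def years_to_exhaust(trigger_bits: int, tries_per_second: float) -> float:
    """Worst-case years needed to try every possible trigger value."""
    return (2 ** trigger_bits) / tries_per_second / SECONDS_PER_YEAR


# Assumed test rate: one candidate per cycle on a 4 GHz clock.
TRIES_PER_SECOND = 4e9

for bits in (32, 64, 128):
    years = years_to_exhaust(bits, TRIES_PER_SECOND)
    print(f"{bits:>3}-bit trigger: {years:.3g} years")
```

Under these assumptions a 32-bit trigger falls in about a second, a single 64-bit value takes roughly 146 years, and anything wider is out of reach of brute force altogether.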

(And yes, searching for backdoor hardware around the security systems is a very logical place to start. Since it is likely here that something will be out of the ordinary if one decides to inspect the chip under a suitable microscope.)

Nor is my statement that “Open source is idiotic because it can’t actually ensure hardware security.” but rather, if one needs strict hardware security, open source does have some downsides that one needs to consider.

Hardware security, when it comes to chip design, requires obscurity ahead of production, since validating that a chip truly is what it states on the tin isn’t remotely trivial. The logic going into the chip can still be publicly documented and scrutinized, since it doesn’t paint a clear enough picture of how it will be implemented on a chip.

In regards to hardware level “bugs”, like trace cross talk, power issues etc. This can all largely be avoided by being more careful when designing the chip, and typically going for a less transistor dense implementation. (Then one can at times even put a grounded shield between traces, reducing crosstalk issues further.)

In the end.

I see many issues with fully open sourced chips that make them unattractive for many applications. Be it just worse manufacturing quality (higher failure rate, lower MTBF, etc), lower performance, worse power efficiency, or a larger risk of tampering. Depending on what one needs out of the chip, each of these issues can disqualify such a fully open sourced chip from being usable in one’s application.

Wow… Przemek, I remember you from circa 1995 when you used to organise the Linux group meetings at NIH, Bethesda, esp. one time when Jon “maddog” Hall demoed 64-bit Linux on a DEC Alpha

A blast from the past, huh? Pleasure to hear from you.. Linux and FOSS went farther than we ever hoped back then: as someone said, with Perseverance, Mars is the second planet with most of the computers running Linux

I agree with you that a fully open source chip is unlikely. However, open RTL source is something we see and are likely to see more of. 20 years ago, when we wanted to integrate an ARM core into our design, the licence fee we were asked to pay was around one million dollars. RISC-V is a game changer. Even though the RTL itself is not free (for high end cores), the licence fee is dramatically reduced.

AIST minimal FAB

Microchip is the only chip vendor that has interest in RISC-V. Arm core parts will be much more available for the next little while. I think we’ll see all kinds of oddball RISC-V stuff from China. They have the volume, motivation and resources to do this.

I do not see traditional manufacturers passing on their savings in licensing in the pricing.

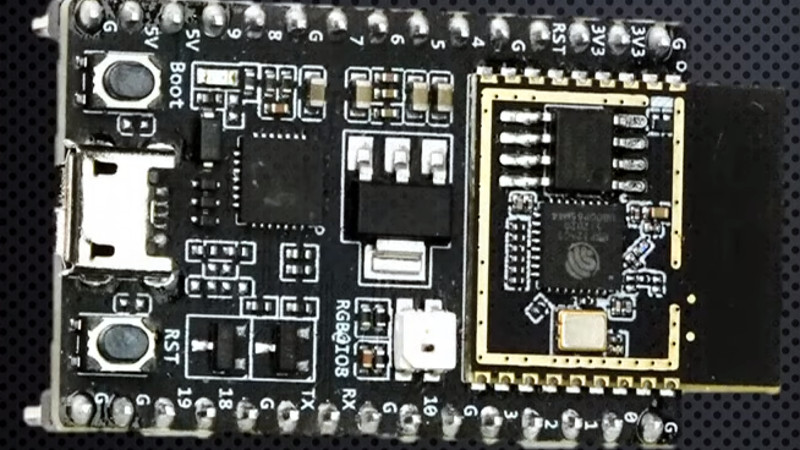

FYI: Only the small PCB on the right hand side with the golden rectangle and the black part (antenna) is the new module. A metal shield will be soldered onto the rectangle when they are in production, just like the rest of the ESP parts. The rest is the development board, probably where the USB JTAG debugger sits.

Ah, the “‘open source’ [1] is visible, therefore Bad Haxorz can exploit it more easily” meme. This meme has been played by Microsoft’s PR already for like 30 years. It’s a bit long in the tooth.

As always in engineering, it’s a tradeoff and has two sides. If you’re presenting it as one-sided, I tend to guess that you’re from marketing.

[1] I still much prefer the “free” spelling. It’s about freedom, after all.

+1

BENCHMARKS please !!!