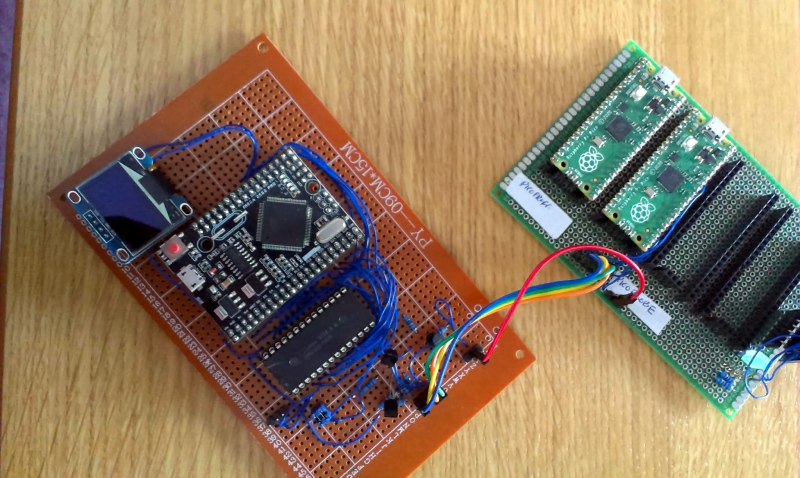

You can’t fake that feeling when a $4 microcontroller dev board can stand in as cutting-edge 1980s technology. Such is the case with the working transputer that [Amen] has built using a Raspberry Pi Pico.

For a thorough overview of the transputer you should check out [Jenny List’s] longer article on the topic, but boiled down, we’re talking about a chip architecture mostly forgotten in time. Targeting parallel computing, each transputer chip has four serial communication links for connecting to other transputers. [Amen] has wanted to play with the architecture since its inception. It was expensive back then, and today finding multiple transputers is both difficult and costly. However, the RP2040 chip found on the Raspberry Pi Pico struck him as the perfect way to emulate the transputer design.

The RP2040 chip on the Pico board has two programmable input/output blocks (PIOs), each with four state machines in them. That matches up perfectly with the four transputer links (each is bi-directional so you need eight state machines). Furthermore, the link speed is spec’d at 10 MHz which is well within the Pico’s capabilities, and since the RP2040 runs at 133 MHz, it’s conceivable that an emulated core can get close to the 20 MHz top speed of the original transputers.
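The resource match described above is simple arithmetic, and a quick sketch makes it concrete. The figures are the ones quoted in the article; the "cycle budget" is only a rough upper bound on how many RP2040 clock cycles are available per original transputer clock cycle, not a measured result from [Amen]'s emulator.

```python
# Figures quoted in the article (variable names are illustrative only).
PIO_BLOCKS = 2
STATE_MACHINES_PER_PIO = 4
TRANSPUTER_LINKS = 4
DIRECTIONS_PER_LINK = 2      # each link is bi-directional
RP2040_CLOCK_MHZ = 133
TRANSPUTER_CLOCK_MHZ = 20

# One state machine per link direction, as the article suggests.
available_state_machines = PIO_BLOCKS * STATE_MACHINES_PER_PIO
needed_state_machines = TRANSPUTER_LINKS * DIRECTIONS_PER_LINK

# Rough budget: RP2040 cycles available per original transputer cycle.
cycles_per_transputer_cycle = RP2040_CLOCK_MHZ / TRANSPUTER_CLOCK_MHZ

print(f"state machines: {available_state_machines} available, "
      f"{needed_state_machines} needed")
print(f"cycle budget: {cycles_per_transputer_cycle:.2f} RP2040 cycles "
      f"per transputer cycle")
```

With fewer than seven host cycles per emulated cycle, matching the original's speed depends heavily on how cheaply each transputer instruction can be emulated.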

Bringing up the hardware has been a success. To see what’s actually going on, [Amen] sourced some link adapter chips (IMSC011), interfacing them through an Arduino Mega to a computer to use the keyboard and display. The transputer architecture allows code to be loaded via a ROM, or through the links. The latter is what’s running now. Future plans are to figure out a better system to compile code, as right now the only way is by running the original INMOS compiler on DOS in a VM.

Listen to [Amen] explain the project in the first of a (so far) six-video series. You can find the links to the rest of those videos on his YouTube channel.

Wow, THAT is really cool !!! I wanted to play with the transputers back in the day, but never got a chance to do it. I was especially intrigued with Atari’s ABAQ system, which was transputer based. I will be following this project closely. THANK YOU for highlighting the project !!!

Somewhere I think I might still have the original data sheets (books) that document the transputer family of chips. There was a whole ecosystem that went along with the cpus. They were “edge processors” that included peripherals, such as disk controllers, etc.

Ah, that is really interesting. I don’t suppose you know what the part numbers for those edge processors are? I’m aware of the IMSC011 (obviously) and the IMSC012, which is sort of the same thing, and the IMSC004 which is a crossbar switch for the links. I’m not aware of any of the other chips and would really like to see what they had and maybe get hold of some.

Andrew

I worked for INMOS back in the day. The IMS-M212 was the disk controller. This was one of the 16-bit (2xx) range as opposed to the 4xx and 8xx 32-bit ranges.

Did you have any contact with Archipel in France?

Use the commercial equivalent: xCORE processors programmed in xC. You will feel right at home :)

(See my later comment)

Brought back memories of programming in Occam2 back at uni.

Awesome to see transputers on HaD (this article and the Jenny List article).

Part of my degree involved programming transputers. I really liked programming in Occam. I’ve considered buying some transputers off ebay, but too many projects need attention already, I don’t need another.

He should be able to find the compilers that run on Unix. Archipel use an Indigo to program and control their Volvox supercomputer. I have the original Indigo but not the drive or system! If anyone knows where, give a shout. http://www.regnirps.com/VolvoxStuff/Volvox.html

I’m very interested in an Occam compiler that runs on Linux and generates transputer object code so that I can easily compile Occam programs. When you say Unix, do you mean the original INMOS cross compilers? I’ve had a look at those and to get them compiling (as in build the compiler itself) on Linux requires a bit of porting work. There are compilers that compile to other processors, ironically, but to get transputer code at the moment I have to run the INMOS compilers on DOS.

The Archipel Volvox is run by the SGI Indigo running SGI’s UNIX called IRIX. There is an interface card for the Indigo that has some Transputers on it which I assumed were for running code when doing program development (It also connects to the two boxes of Transputer cards. 44 Transputers IIRC). Unfortunately when I got this system there was no drive and I have never found the software needed. Somewhere out there maybe it still exists and I expect full compiler suites for IRIX. If they are written in C, I would think they can be ported anywhere. Otherwise they will be R3000 specific. I have never even seen another Volvox.

You should consider trying DOSbox, which can emulate DOS on any CPU architecture or OS. It’s much simpler than setting up a VM and installing MSDOS.

I probably have Linux versions. I can ask the person responsible for those compilers; I have Linux ST20 versions. I’ll check if they can still output t450 versions.

I have a Solaris version for Occam probably as well.

Amazing project – triggers so many ideas and thoughts – and a great example of how multi-core and multi-processor architectures can now be explored at a low cost using RP2040s.

Makes one wonder how many novel ideas that were proposed in the 70’s and 80’s and were not implemented at the time because the hardware would have been too costly to build.

At the time, I went over with HEMA from Germany to NESTOR in the US, to adapt the Nestor software for the HEMA transputer boards to run the Nestor RCE Neural Net on multiple transputers https://www.hema.de/

There is a modern commercially available equivalent, from some of the same people now in XMOS (e.g. David May).

The xCORE processors are effectively transputers on steroids: up to 32 cores and 4000MIPS/chip, expandable, FPGA-like IO, guaranteed hard realtime.

The xC language is effectively Occam with a different syntax and hard realtime extensions.

I find them wonderfully easy to program; from a standing start I had my first application running within a day. Application was counting transitions on two 62.5Mb/s inputs and communicating results over USB to a PC. Makes realtime embedded fun again.

Buy them at DigiKey

They are not aimed at general purpose use, nor are they hobbyist friendly. I remember when they first came out, hobbyists were interested but soon went elsewhere when they saw these were not general purpose devices. Bizarre architecture and limited to on chip memory only. Then there is the fixed I/O port model they use, which I find irritating.

It’s too bad they don’t have Occam or a version of Oberon for it. A nice sleek language would make it fun to program

“Bizarre architecture and limited to on chip memory only.”

Not sure where you got that from – they had inbuilt DRAM controllers and full external memory busses. They *could* operate on internal memory only, (for very limited applications) but most certainly were not limited to this.

TRAMs were available with memory capacities well into the MBytes (massive for the 80s)

I used the family from T2xx, T4xx, T8xx right up to T9000, from 1987 to the mid/late 90s, in architectures from single processors, up to around 90 processors, mostly for real-time image processing, and control, in occam and C.

Fantastic architecture for prototyping.

“limited to on chip memory only”

I’m not sure where you got that idea.

I used just about the whole family/variants, from T2xx, T4xx, T8xx, right up to T9000, from the mid 80s to the mid 90s, in configurations with single processors, right up to around 100 mixed T4xx and T8xx, and it wasn’t unusual for a PC-hosted B0xx board to have more memory than the host.

All the processors I used regularly had external busses and in-built DRAM controllers.

It was certainly *possible* to use them with internal memory only, but for very limited applications (Mandelbrot farms, or similar)

Agreed, it was quirky (initially, it was just about occam only, though later I used C extensively), but a fantastic platform for rapid prototyping.

Closest thing to processor Lego I ever found – great fun.

You’re confusing the transputer and xmos stuff. The article is about the transputer, but the comment above you was referring to the xmos devices.

I like the XMOS devices and am surprised they don’t get more coverage on HAD. At the least they’re a nice balance between a powerful µcontroller & FPGA-ish I/O. That’s all before considering the parallel computing aspects & the corresponding expandability. Ease of use is good with xC, but at times you may need to keep in mind just what the language & H/W are actually doing, at least with the older devices that I’ve been using.

I did some Occam programming at university on a Transputer. It was deadly slow — the Occam compiler ran on a single Transputer node. Interesting language.

Fun Transputer fact: later on the design was bought by SGS-Thomson and begat the ST20 embedded processor core, which was used heavily in TV set-top-boxes. Just one node, though. I can’t tell if it’s still current.

Used to love transputers in the day. We had loads and built sonar systems around them, and it made parallel programming a breeze. You could scale up from software processes to multiple hardware based processes with no or little software changes, which meant develop the code on one transputer running X processes, then run it on 15 processors running 1 process.

In many ways they were ahead of their time, but just ran out of steam. We had initial silicon of the T9000, which on paper looked superb, but just never worked and we could tell it was dead on arrival.

I think with more funding and perhaps a better business model it could have become an important architecture, but it was not to be. Also, it pre-empted the move to massive parallelisation, so maybe it was just ahead of its time. Still, I hit design issues where I think we could do with a few transputers.

Takes me back to reading about them in the C’t magazine. I so did want one to make my Amiga 2000 a super computer :)

Same here. :) Just they were so freaking expensive. So I was waiting for some to reach the secondhand market. But by the time they reached a secondhand market close to me, I had lost all interest.

That took me back! I developed transputer-systems and sold quite a lot of transputer modules and motherboards. Then Inmos developed their own modules and my market disappeared.

How are transputers different from something like using MPI across multiple chips?

“The RP2040 chip on the Pico board has two programmable input/output blocks (PIOs), each with four state machines in them. That matches up perfectly with the four transputer links (each is bi-directional so you need eight state machines). ”

One would think one could do the same with the TI OMAP PRU (Programmable Real-Time Units).

https://youtu.be/Z37U1Q4R2CA

incredible!

Is Occam working too?

Wow! Trip down memory lane! I built a Transputer array back in graduate school to “quickly” solve 2D FFTs for frequency-wavenumber analysis of array electromyogram signals. I wrote an article in Circuit Cellar Ink about a home-built Transputer add-in board for the PC that supported 4MB of local DRAM and was compatible with the T400, T414, T800, and T805 Transputers:

http://www.prutchi.com/wp-content/uploads/2021/08/PRUTCHI_Transputer_ArticleCCI_1995.pdf

I didn’t know this was even a thing. Very cool. Makes me think of TIS-100 but real and on steroids.

Cool. I never worked with transputers, but I wanted to, and knew a couple people who did. I’ll have to check out his channel.

Oh my Steve , you’re still alive ?

Straw man argument: there’s no pretence that they are anything other than embedded MCUs with unique benefits.

The architecture is not bizarre, but is designed to work seamlessly with the high level language. If you understand the concepts in the Transputer and Occam, it takes maybe 30 minutes to understand the xCORE/xC ecosystem!

This hobbyist finds them extremely friendly!

They can do things no other high-powered MCU can do: guarantee timing in critical parts of code. N.B. running code, measuring, and hoping you have run across the worst case is not guaranteeing anything.

I’ve been interested in the XMOS / xCore processors for some time, but for the time being the price of the chips / developer boards keeps pushing them to “some other day”….

In 1990, I took an entry-level job with Computer System Architects in Provo, Utah. They were shipping Transputer hardware at the time.

They sold a variety of hardware. They had a SCSI interface tied to a 16-bit T212. Another startup in the area was prototyping a RAID-based SQL server using this hardware, along with other processor boards. I think they ended up selling their stuff to Novell; I never heard another word about it after that.

They had an entry-level board with a T400. That was a 32-bit processor, no hardware floating point, only 2KiB built into the chip (not cache; the RAM was actually in the memory map, meaning you could run small programs on the chip with no external RAM) and only 2 serial interfaces.

They had multiple offerings with the T414 (and, later, T425), anywhere from 1 MB to 4 MB RAM per chip, up to processors on one board. Both of these were 32-bit processors, no hardware floating point, 4 KiB built into the chip and 4 serial interfaces. The T425 was the second-generation chip, with the T400 being a stripped down version of the T425.

They had offerings with the T800, T801 and T805. These were all 32-bit processors WITH hardware floating point, 4 KiB built into the chip and 4 serial interfaces. The T805 ran at 30 MHz, while the others maxed out at 20 or 25.

We had a board which provided a Transputer-compatible serial link to the ISA bus, with all the other boards just using power and ground. We could stuff 20 boards into an external case, slot the ISA serial link card into a host system, hook it all up and let ‘er rip. Booting such a system involved uploading a bootstrapper which was actually a virus. It would propagate through the cores in the system, bootstrapping each of them and setting them up to receive whatever program we wanted. There was no operating system; all development ran “on the bare metal.”
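The "virus-like" boot described above can be pictured as a flood over the link topology: each freshly booted node forwards the bootstrapper to any neighbours that have not been booted yet. The sketch below is only an illustration of that hop-by-hop idea; the 2 x 2 topology, the node numbering, and the function name are hypothetical, and it does not model the actual boot protocol.

```python
from collections import deque

def boot_order(links, root):
    """Return the order in which nodes receive the bootstrapper.

    links maps each node to the nodes it is wired to (hypothetical layout);
    root is the node attached to the host's link adapter.
    """
    booted = [root]
    seen = {root}
    queue = deque([root])
    while queue:
        node = queue.popleft()
        # A booted node passes the bootstrapper down each of its links.
        for neighbour in links[node]:
            if neighbour not in seen:
                seen.add(neighbour)
                booted.append(neighbour)
                queue.append(neighbour)
    return booted

# Hypothetical 2 x 2 grid of transputers; node 0 is wired to the host.
grid = {0: [1, 2], 1: [0, 3], 2: [0, 3], 3: [1, 2]}
print(boot_order(grid, 0))  # [0, 1, 2, 3]
```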

I had a 286-based host machine with at least one of these 32-bit processors in it, at any time. I was working with C (with some extensions), Occam 2 and Transputer Assembly Language. I already had experience with 6502 and 8080 assembly at the time. The Transputer was a different beast; it had a 3-level hardware stack instead of registers (you had to PUSH, PUSH, ADD and POP to add two numbers) and you could send data through the serial ports by plugging the port number, the starting address of a buffer and the length of the buffer onto the stack and calling a specific instruction. It was microcoded into the chip, NOT accessed using a SIO, etc.
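For anyone who has not met a stack-based CPU, here is a toy model of the three-level evaluation stack described above. It uses the PUSH/ADD/POP shorthand from the comment rather than real transputer mnemonics, ignores word size and overflow, and is an illustration only, not an emulator.

```python
class EvalStack:
    """Toy three-deep evaluation stack; slot names a, b, c are illustrative."""

    def __init__(self):
        self.a = self.b = self.c = 0

    def push(self, value):
        # Existing values shift down one slot; whatever was in c is lost.
        self.c = self.b
        self.b = self.a
        self.a = value

    def pop(self):
        # Take the top value and shift the remaining values up.
        value = self.a
        self.a = self.b
        self.b = self.c
        return value

    def add(self):
        # Replace the top two values with their sum.
        self.a = self.b + self.a
        self.b = self.c

# PUSH, PUSH, ADD, POP: adding two numbers as described in the comment.
stack = EvalStack()
stack.push(2)
stack.push(3)
stack.add()
result = stack.pop()
print(result)  # 5
```

The stack being only three deep is the interesting constraint: a fourth push silently discards the oldest value, so longer expressions have to spill intermediate results to memory.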

Benchmarks I ran at the time, and later ran on other machines, put a 30 MHz T805 on par with a 486DX2-66. Back when a 33 MHz 386 was the fastest thing you could get from Intel.

A common statistic that’s batted around with multi-processing systems is “% linear speedup.” As an example, if your 4-core machine delivers a real-world speed of 3.2x the speed of a single core, that’s 3.2x / 4.0 cores = 0.80 = 80% linear speedup.

We had Mandelbrot rendering programs and ray tracing programs which were, no exaggeration, delivering 95% linear speedup. 20 cores would deliver a real-world 19x speed boost. The message-passing, through those serial links, was extremely efficient.
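The "% linear speedup" figure is easy to compute for your own benchmarks. A minimal sketch, using the two examples from this comment as inputs (the function name is made up for illustration):

```python
def linear_speedup_percent(measured_speedup, cores):
    """Measured speedup as a percentage of the ideal (one unit per core)."""
    if cores <= 0:
        raise ValueError("cores must be positive")
    return 100.0 * measured_speedup / cores

# 4 cores delivering 3.2x the speed of a single core
print(f"{linear_speedup_percent(3.2, 4):.0f}%")    # 80%
# 20 cores delivering 19x
print(f"{linear_speedup_percent(19.0, 20):.0f}%")  # 95%
```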

On one occasion, my 286 host had an ISA serial link card and 4 processor cards x 4 T805s (a total of 16 cores, each with 4 MiB of RAM). The serial link was the bottleneck. Benchmarked performance … I later found a P-II, running 266 or better, could keep up with that rig.

I was doing all this during the summer of 1990. When did the 486DX-2 come out? Or the P-II?

CSA released a low cost student kit at $250 in 1990 – all you had to add was your own DRAM. I know, because I helped develop the kit (I worked at Inmos) and a deal was done to provide the previous compiler release for a nominal cost. Because it was low cost, there was going to be no support, and so a complete manual for people to learn everything for themselves was written – I wrote it. I still have the first prototype kit, and somewhere I have been hanging on to an old PC with an ISA bus in case I ever have the time to get it out and play with it again.

There are some confusions about a board – someone mentioned 44 transputers and transputers only running from internal memory. The famous B042 board came about because the factory packaged a load of T800 transputers and managed to get the bond wires running to the wrong pins. Rather than throw them away, because the transputer could run small programs in internal memory, some boards were built that had nothing but transputers on them. The standard sized board just happened to take a 6 x 7 array once room had been left for the serial interconnect – hence the 42 designation (Hitchhiker’s Guide to the Galaxy was recent at that point and we all know that 42 is the answer to life, the universe and everything). So programs such as the Mandelbrot Set, which was highly parallel and ran pretty fast on 1 cycle internal memory, could be farmed out to these boards – we could get 10 in a rack with a graphics card, which gave an incredibly cheap but limited supercomputer at the time. Ray tracing could also be done, but because of the size of the internal memory, you needed to configure it as three parallel pipelines cross connected at each node, so a B042 gave 14 effective nodes in the pipeline.

Just waiting for the XCORE-AI of XMOS to come out. This should bring down the price to where you can actually put 100 of these beasts together without breaking the bank. The XCORE designs are really the straight descendants of the Transputers. The idea of the Transputer was great, but they were too expensive and their speeds were left in the dust by the mainstream processors in the beginning of the nineties. Why spend a lot of time to program something for parallel cpus when a single cpu with a standard OS and standard compiler runs your calculations much faster? Yeah, in principle you can scale to a lot of cpus, but they all have to be on a single complicated board to have fast enough Links. I built and used these systems in the late 80’s and beginning of the nineties. It was a lot of fun but not worth pursuing for real applications.

The idea of parallel cpus and fast serial links was revived by XMOS (led by David May) at the end of the 2000s; I think the first real silicon came out in 2009. Again, a lot of fun and a blast from the past! For a project I actually bought a 16 cpu (64 cores in total, XK-XMP-64) prototype system. XMOS put out a lot of hype about running their XCORE processors in parallel, but somehow this never panned out. I lost interest for a couple of years, and since 2015 they seem to have been interested only in single cpu audio applications for their cpus and left all the parallel enthusiasts out in the cold.

Since last year XMOS has been making a lot of noise about their cheap new XCORE-AI cpu, but it has been all hot air up until now. This could have to do with the current chip shortage. Since this cpu is supposed to be really cheap (around $1?) this could trigger a resurgence of massively parallel systems at a very low price. I’m really interested, but not holding my breath.

And if you still use “the real thing(tm)” and need to get a Zero into your TRAM farm… http://www.geekdot.com/pitram-ahead/

Principal architecture for the Topologix T1000 was developed by myself, Gary “ROD” Rodriguez as IRAD in my Mycroft Machines consultancy, and conveyed to a startup corporation to which I offered the name Topologics.

Jesse Awaida, the founder of Storage Tech — a manufacturer of IBM Mainframe Disk and Magtape Drives — funded the new company, Topologix.

Topologix was heavy with Lisp and C coders who had AI ambitions, and clearly hadn’t studied Godel, Turing, Hofstadter or Igor Aleksander.

Their first step was to secure my services as Principal Architect.

The second step was to trash Inmos’ OCCAM Programming Code. Was it flexible? It was similar to programming with bricks.

I expanded the architecture to support 64MB DRAM per Transputer MPU (featuring 1MBit DRAM) to support massive parallel LISP across a corporate or university campus, finding host sites in various labs and computing centers, in what was at the time a universe that was quite friendly to Sun MicroSystems; they were everywhere. So the 9U form factor allowed a lab to buy a single board, or many, and become part of a campus-wide parallel computer system.

All of this was novel, at the time. PIXAR took a look at their own implementation at the time, before wisely discarding it.

Other features of this series of VME 9U board:

Approx 15” x 15”

8 Layers included

4 32bit T800 Transputers

C012 32-FDX Link Switch

A fifth processor featured Inmos’ 16bit Transputer for Link management and out-of-band systems services including

“soft” interrupts

Hardware Paged-Memory management w/o timing penalty, page computation during RAS of DRAM

32bit VME 9U Interface

Only board at the time that was inter-operable in both Sun III (MC68K) and Sun IV (SPARC) VME backplanes

First–pass PCB design success — the only “fix” was a single wire visible next to the P2 connector

Subsequent Revisions B .. E were built in order to condense SIPP packages to small SMT packages, permitting use of smaller profile DRAM SIPS which allowed T1000 to occupy a single slot each in the VME Chassis

At one point, Topologix lashed together 24 of these boards for a 96-MPU Parallel System

Inmos, the Transputer manufacturer, later adopted a couple of key T1000 features of the architecture in their T9000 MPU

Topologix had an IPO on Wall Street, 4 Oct 1988.

The company was profitable, garnering as much as $50K per T1000 9U Board, and was tanked principally for tax purposes. It didn’t help our case that Inmos’ repeated delays in growing the T400 into the T800 feature set (Floating Point, etc.) meant that instead of competing against the i386 we found ourselves competing against the i586 Pentium family.

A couple years of 80 hour weeks down the tubes.

It has been quite a ride for decades since, still making discoveries, and building novel things.

See the sysRAND Corp Laboratory Page on ResearchGate.net (Berlin)

Only last week I saw a Transputer at the quite excellent The National Museum of Computing https://www.tnmoc.org/

This seems to be the crowd to ask this:

Many years ago I acquired an ISA adapter by a company named “Nth Computing”. It appears to be some sort of image processing coprocessor and has a T800 and a T414 on it. I’m curious to find out more about it.

We (Dutch Forth Chapter) developed a transputer Forth. It was aimed at such ISA cards as you described. You can download the GPL compiler from

https://home.hccnet.nl/a.w.m.van.der.horst/transputer.html

You have to run MS-DOS, which goes with the ISA card. At one time we had a 190-transputer demo running at the HCC fair with that.