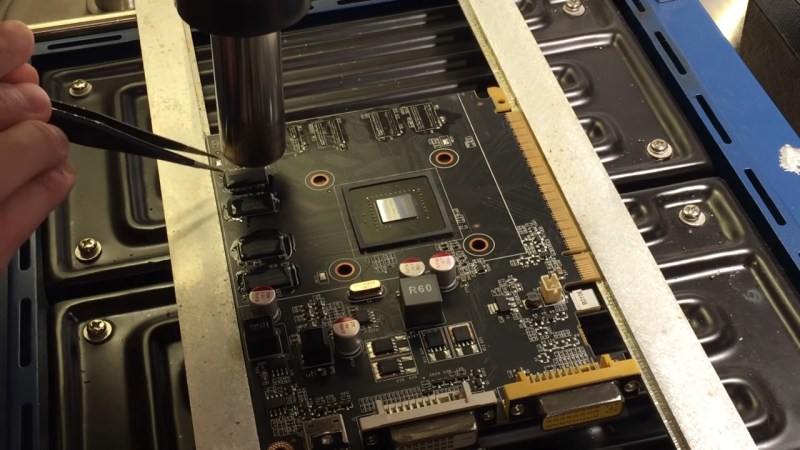

We’re all used to swapping RAM in our desktops and laptops. What about a GPU, though? [dosdude1] teaches us that soldered-on RAM is merely a frontier to be conquered. Of course, there’s gotta be a good reason to undertake such an effort – in his case, he couldn’t find the specific type of Nvidia GT640 that could be flashed with an Apple BIOS to have his Xserve machine output the Apple boot screen properly. All he could find were 1GB versions, and the Apple BIOS could only be flashed onto a 2GB version. Getting 2GB worth of DDR chips on Aliexpress was way too tempting!

The video goes through the entire replacement process, to the point where you could repeat it yourself — as long as you have access to a preheater, which is a must for reworking relatively large PCBs, as well as a set of regular tools for replacing BGA chips. In the end, the card booted up, and, flashed with a new BIOS, successfully displayed the Apple bootup logo that would normally be missing without the special Apple VBIOS sauce. If you ever want to try such a repair, now you have one less excuse — and, with the GT640 being a relatively old card, you don’t even risk all that much!
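As a rough sanity check on the chip swap, the per-chip density follows from simple division. The sketch below assumes the card carries eight memory chips (a figure a commenter mentions, not something stated in the article) and that capacity is split evenly across them; the function name is made up for illustration.

```python
def per_chip_density_gbit(total_gbyte: int, chip_count: int) -> float:
    """Density each chip needs, in gigabits, for a given card total."""
    total_gbit = total_gbyte * 8  # 8 bits per byte
    return total_gbit / chip_count


CHIP_COUNT = 8  # assumption: eight identical chips on the card

for total in (1, 2):
    density = per_chip_density_gbit(total, CHIP_COUNT)
    print(f"{total} GB card -> {density:g} Gbit per chip")
```

Under those assumptions, going from 1GB to 2GB means replacing every chip with one of twice the density, which is why all of them have to come off the board.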

This is not the first soldered-in RAM replacement journey we’ve covered recently — here’s our write-up about [Greg Davill] upgrading soldered-in RAM on his Dell XPS! You can upgrade CPUs this way, too. While it’s standard procedure in sufficiently advanced laptop repair shops, even hobbyists can manage it with proper equipment and a good amount of luck, as this EEE PC CPU upgrade illustrates. BGA work and Apple computers getting a second life go hand in hand — just two years ago, we covered this BGA-drilling hack to bypass a dead GPU in a Macbook, and before that, a Macbook water damage revival story.

I wish the same could be done on laptops with soldered RAM, like the MacBooks. I like my M1 Air but the 8GB of RAM isn’t going to be enough in just a few years.

It depends on how skilled and brave you are: https://9to5mac.com/2021/04/06/m1-mac-ssd-and-ram-upgrade/

Honestly though, why buy it in the first place if you know it cannot be upgraded?

eggselent point

Cause it hurts so good!

Apple daddy makes it so they don’t have to think as long as they stick to the golden path.

Most computers have not seen a need to increase RAM in years. Unless you are doing a lot of photo editing or video work, 8 gigs on a Mac will be enough. Plus, since the SSD is integrated on the M1, it is pretty darn fast, which makes swap much better than on previous machines. I had an 8G Mac mini for a while and was dealing with some large (2TB) photo libraries and it was doing pretty OK, and this was before native versions of the app. I did return it to get a 16G version just in case though.

There is a company called iBoff that is able to upgrade the RAM of some recent macbooks provided the motherboard can handle it. They even upgrade the ones with T2 chips.

Wish I could do this to my 4GB Radeon RX 580 in my hackintosh, to bring it up to 8GB.

Be careful to bake the whole PCB at 60°C for at least 24h before trying to desolder any BGA. If your board or component is a bit “wet” (moisture absorbed inside), you will kill it. Very important step of the process!

these guys do it as a business: https://www.youtube.com/c/iBoffRCC/videos

It’s time for a new form factor with two adjacent mainboards – one for the CPU and one for the GPU. Each with their own memory banks for easy upgrading and plenty of space for huge coolers. And probably even separate PSU’s.

Passive backplane, server cards have been around since the beginning. They aren’t going to be cheap at all.

I wonder if a dual socket motherboard might be possible eventually, where one socket is for the CPU and the other socket is for the GPU. Then one bank of ram is for the system and another one using specialized ram would be for the GPU. Probably would need its own power too.

Sort of like the Intel Ghost Canyon NUC?

I’m not good enough to do this myself, but it would be awesome for all these new laptops that have soldered on RAM. For example, I’d love to buy the HP Envy x360 13-ay0001nd but it only has 8GB of RAM. But it has a 6c/12t CPU, so 12*3=32GB RAM. If I could upgrade the RAM to 32GB it would make it very useful. With GCC compiling with 8GB, I could use about 3 threads, out of the 12. So to use all of them, 32GB would be preferred. Even with other software like browsers, 8GB is really pushing it for day to day use.

core or thread count is irrelevant to max. RAM, it is all determined by the DRAM controller

He means that 32 GB is what he wants in order to make use of all the CPU threads *with GCC*. Using his numbers, GCC eats 2 to 3 GB per thread, so 3 threads is the optimum with the factory RAM (before it starts to thrash the swap). With 32 GB he could spawn 4 times as many threads, and thus make full use of the CPU.
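To put that arithmetic in runnable form, here is a minimal sketch. The 2.5 GB per compile job is an assumed midpoint of the "2 to 3 GB" figure above, and the function name and the idea of capping the result at the hardware thread count are illustrative choices, not anything GCC or make does for you.

```python
def max_parallel_jobs(ram_gb: float, gb_per_job: float, hw_threads: int) -> int:
    """How many compile jobs fit in RAM, capped at the hardware thread count."""
    by_memory = int(ram_gb // gb_per_job)  # jobs that fit without swapping
    return max(1, min(hw_threads, by_memory))


GB_PER_JOB = 2.5   # assumption: midpoint of "2 to 3 GB per thread"
HW_THREADS = 12    # 6c/12t CPU from the comment above

for ram in (8, 32):
    jobs = max_parallel_jobs(ram, GB_PER_JOB, HW_THREADS)
    print(f"{ram} GB RAM -> about {jobs} parallel jobs")
```

With these assumed numbers it comes out at 3 jobs on 8 GB and all 12 on 32 GB. It ignores whatever the OS and other programs need, so real headroom would be smaller.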

will it actually do that though? I feel like there’s a very deep optimization rabbit hole here, given how complicated modern DRAM actually is…

p.s. say no to hard soldered modules that can easily remain replaceable, vote with your wallet

save for the fact that 3*12=36, can you explain a bit on why you talk about one core needing 3GB? Is it a compilation thing of some kind, i.e. a guideline when compiling gcc?

Main problem here is not really the manual work that needs to be done but finding a right firmware that supports needed amount of memory. I do not know what type of architecture is the GPU memory controller but I see no reason why gpu memory cannot be socketed to allow arbitrary amount of memory as task needs it.

The commonly perceived reasons that it’s not done are that BGA sockets (=> per-chip sockets) are costly and failure-prone, and DDR sockets are large and unsuitable. GPUs also don’t have SPD, the small EEPROM that desktop RAM modules use for RAM parameter storage, and RAM parameters are vital for getting the card to boot – it could be added, theoretically, but it’s a pretty decent roadblock that any “changeable RAM” solution will face, and nobody’s been incentivised enough to work on that.

Having DDR sockets with modules on cards, if that’s what you thought of, complicates routing, heatsinking and space constraint management, perhaps too much for comfort – we’d also need to invent new sockets, as the DDR used on GPUs isn’t the same as the DDR used in PCs. With BGA sockets especially, every card getting sockets => a myriad of necessarily-complex and expensive sockets going to waste, as GPUs are mostly upgraded as a whole nowadays. Not too many GPUs come with “different amounts of RAM” options to begin with. Even before the GPU price hikes, adding a chunk of cost on top of the card’s cost for something that most people will never use is not necessarily consumer-friendly, either.

So, plenty of reasons, you do have to look for them, though! Some technical, some “lack of effort” ones, some “tradition” ones. I wouldn’t expect a whole lot of movement in that direction anytime soon.

GPU memory cannot be socketed because it has a really, really wide bus (4x-6x as wide) and runs at much higher speeds.

A wide bus means an insane amount of I/O, so you’ll run into mechanical issues – alignment, pitch and size.

High speed means that you are constrained by impedance discontinuities (e.g. # of vias per trace, impedance mismatch as the signal crosses a connector), insertion losses, and trace length.

Also, the GDDR standards change so rapidly that you’ll have a hard time sourcing the right parts when you need the upgrade.

By the time you can do it, the cost is going to be more than it is worth.
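The bus-width and speed argument above can be put into rough numbers: peak bandwidth is bus width times per-pin data rate, divided by eight. The figures below (a single 64-bit DDR4-3200 channel versus a 384-bit GDDR6 bus at 14 Gbit/s per pin) are illustrative assumptions picked to match the "4x-6x as wide" ballpark, not measurements of any particular card.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, gbit_per_pin: float) -> float:
    """Theoretical peak bandwidth in GB/s: width * per-pin rate / 8 bits per byte."""
    return bus_width_bits * gbit_per_pin / 8


# Assumed, illustrative configurations (not taken from the article).
dimm = peak_bandwidth_gb_s(64, 3.2)    # one 64-bit DDR4-3200 channel
gddr = peak_bandwidth_gb_s(384, 14.0)  # 384-bit GDDR6 at 14 Gbit/s per pin

print(f"DIMM channel: {dimm:.1f} GB/s")
print(f"GDDR6 bus:    {gddr:.1f} GB/s")
print(f"Ratio:        {gddr / dimm:.1f}x")
```

Every one of those extra bits is a pin that would have to pass through a connector at several times the DIMM signalling rate, which is where the mechanical and signal integrity problems come from.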

I imagine those with HBM couldn’t join anyway.

FYI GDDR runs at 5-6x the speed of DDR on a DIMM. See the latest Nvidia GPU cards. They have to back-drill the vias to eliminate the stubs, as that’s how crazy the high-speed signal integrity issues are. Even if you could, you’d have a very hard time trying to fit those memory modules into the tight space between the PCB and the GPU heat sink.

>I do not know what type of architecture is the GPU memory

Well that sums it up. I have seen too many non-technical people wanting to see sockets everywhere *without* knowing the cost and amount of engineering needed to make that happen.

sockets?? an excellent way to cut the maximum speed to 1/3 of what you started with.

Many video cards in the 90’s had socketed memory, laptop-style DIMM slots that took proprietary modules, or simple rows of header pins onto which a proprietary daughter card memory module could be stacked. This all went out of favor around the time the term “GPU” was coined. There were probably multiple reasons: faster memory timings probably meant that these DIMM and daughter card modules would mess with the RAM signal timings, and then there is the always popular built-in obsolescence. Why sell just a 4GB upgrade module to someone who wants to upgrade a 4GB card to an 8GB card, when we can just get you to buy a whole new GPU! Go look up some Matrox video cards on eBay and you’ll see video cards that had upgradable RAM. There was also the fact that memory was expensive back in the day. It would be easier to sell a card with a base 4 or 8MB of RAM and then make 4 and 8MB upgrades available for those who later down the road upgraded to higher resolution monitors and wanted to slap another 4 or 8MB onto the card to keep 24-bit color at the higher resolutions. People also tended to hang on to their video cards longer back in the day, instead of the yearly upgrade-the-whole-card cycles we have today.

Very valid point wrt daughter card connections messing with timings, IMO! Also one note wrt socketable RAM chips, cards with those used DIP and PLCC RAM modules – as soon as RAM switched to dual-row SO* packages, it seems, the “RAM chip in socket” trend faded away at lightspeed. My assumption is that it had to do with obscurity of SO* sockets – this obscurity is still observable to this day!

Back in the late 20th century, in the days of the ISA and PCI bus, PC graphics cards had sockets for RAM upgrades, or at least unpopulated solder pads for fitting DIL/DIP sockets for the graphics memory. Examples: Paradise PVGA, Trident 8900, ET4000AX, S3 Trio 32/64, S3 ViRGE.

As mentioned, the BIOS will show 2GB of memory even if there isn’t that much. I would test the video card with software like OCCT to actually verify that the 2GB is usable and working.

They used to make socketed DIP memory upgrades, I wonder what happened to those

I don’t think many people bought them–GPU performance went up incredibly fast, making plug-in memory upgrades less desirable than just upgrading to a newer card. Another reason is density. I think the chips in the video have 96 “pins”. The MC68000 in just a 64-pin DIP is huge and probably bigger than all eight of the DDR3 BGA chips combined.

Other than scrap motherboards, the last computer I bought with DIP RAM was an Atari ST in 1989. My hand me down Mac Plus in 1993 had SIMMs.

For the reasons stated above, the primary memory on GPUs isn’t socketed. However, I wonder how feasible it would be to add a “secondary” memory socket, accepting a pool of memory that obviously isn’t as fast as the primary memory, but perhaps a bit faster than accessing CPU memory over on the motherboard (and not conflicting with CPU access)?

Do 4GB GDDR6 modules exist?

I like the RX 6400 for its low power consumption, perfect for a camper van, but the VRAM limitation is what kills the GPU for me.

I wonder if a compromise between upgradable GPU RAM and integrated GPUs can be made, where the GPU and CPU are designed to share the same RAM?