We’ve all gotten used to seeing movies depict people using night vision gear where everything appears as a shade of green. In reality the infrared image is monochrome, but since the human eye is very sensitive to green, the false-color is used to help the wearer distinguish the faintest glow possible. Now researchers from the University of California, Irvine have adapted night vision with artificial intelligence to produce correctly colored images in the dark. However, there is a catch, as the method might not be as general-purpose as you’d like.

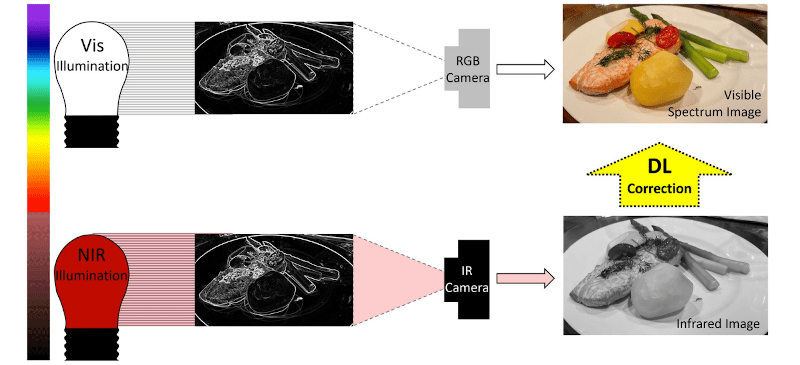

Under normal illumination, white light has many colors mixed together. When light strikes something, it absorbs some colors and reflects others. So a pure red object reflects red and absorbs other colors. While some systems work by amplifying small amounts of light, those don’t work in total darkness. For that you need night vision gear that illuminates the scene with infrared light. Scientists reasoned that different objects might also absorb different kinds of infrared light. Training a system on what colors correspond to what absorption characteristics allows the computer to reconstruct the color of an image.

The only thing we found odd is that the training was on printed pictures of faces using a four-color ink process. So it seems like pointing the same camera in a dark room would give unpredictable results. That is, unless you had a huge database of absorption profiles. There’s a good chance, too, that there is overlap. For example, yellow paint from one company might look similar to blue paint from another company in IR, while the first company’s blue looks like something else. It is hard to imagine how you could compensate for things like that.

Still, it is an interesting idea and maybe it will lead to some other interesting night vision improvements. There could be a few niche applications, too, where you can train the system for the expected environment and the paper mentions a few of these.

Of course, if you have starlight, you can just use a very sensitive camera, but you still probably won’t get color. You can also build your own night vision gear without too much trouble.

No offence [Al Williams], I love your articles.

But…

I get a big belly laugh when people with normal colour perception talk (or write) about colour.

I’m colour blind and I’ll bet someone will have a dig at me for that. Go for it, you not the first.

The reality is that colour blind people have to have a better understanding of colour than people of normal colour perception to be able to live in a world mostly made for people with normal colour perception. Just see how good an average blind person’s hearing is by trying to sneak up on them and you’ll understand what I mean.

So first off, the four colour printed pictures, the colours being cyan, magenta, yellow and black and is most often referred to as an acronym of the German names for the colours CYMK. These (CYM) are the primary subtractive colours and all colour perceptions can be made from these colours, just like the three primary additive colours Red Green Blue can make all colour perceptions, just additively instead of subtractively.

Quote: “So it seems like pointing the same camera in a dark room would give unpredictable results. That is, unless you had a huge database of absorption profiles”

Yes … and No. Your completely correct that the resultant colours would be wildly wrong. However they would also be predictably wrong. To a normal colour perception person they would look exactly the same wrong as the colours on a LCD screen that give the perception of being correct when they are completely wrong. Or in other words you would believe the colours are correct.

That’s why you can use the four colour CYMK prints in the first place. The objective is not to get the colours right but instead create the perception to a normal colour perceptive person that they are right even if they’re wrong.

And the example of the number of colours – in nature almost infinite.

Created by man – in the early days there were only the number of colours we had names for in common use. An artist used different colours because they could create them.

Then later the number of colours was like the number on those large fold out house paint colour selection charts. More colours but they still had names.

Then with the internet we have about 16.8 million (2^24) and only a very small number of humans have the capacity to see most or all of them.

Shades are a different story. I can stand high on a mountain and see an (old) car on a road that is so small that it’s just a speck with no distinguishable features, and for some older models I can state the make, model and year, because that is the only time that “shade” was used as a car paint. Obviously my partial colour perception plays a role, but at the same time a person of normal colour perception could not make that identification. And also this is only true for specific makes and models. That’s why “colour blind” people were used on aircraft to spot the enemy in WWII, as they were not confused by camouflage.

I think this however really does ask some serious questions about AI, because at its core there is the principle to deceive humans, no matter how trivial this may appear in this case.

Thank you for your interesting insights.

You remind me of a guy I once worked for many years ago that was color blind. I don’t remember which colors he could perceive, but I do remember that he could spot a fluorescent light bulb of the wrong color temperature better than anyone else I’ve ever known. He could also spot a paint mismatch from a mile away. Since we were maintaining buildings that had to be kept at a very high standard, his unique skills were actually quite useful.

“cyan, magenta, yellow and black and is most often referred to as an acronym of the German names for the colours CYMK”

Cyan = Cyan

Magenta = Magenta

So far, so good

Yellow = Gelb

Key (black) = Schwarz

Maybe I was thinking of the Japanese for Black “Kuro”?

But anyway the “K” is for keyline in older printing techniques –

https://en.wikipedia.org/wiki/CMYK_color_model

“You’re”

Your completely correct

You can easily figure this out.

Are you food?

Yes: You’re food

No: your food

You seem to be completely off base here. The issue is that different inks for the same color can have different absorption profiles in the NIR spectrum. As a result, without profiling every ink chemical used by printers, the AI is likely to translate the NIR profile into the incorrect colors. See also: https://journals.plos.org/plosone/article/figure?id=10.1371/journal.pone.0265185.g002

For as much as you claim to know about color, you completely missed the mark on this issue.

This is the way. Metamerism is the magic word here. You can have two colors that match under incandescent light, but they won’t match under fluorescent light or sunlight. The same color inks from different manufacturers or even different batches from the same manufacturer can look different under different lighting conditions.

This is troublesome for car manufacturers in particular when they want certain parts to match and they need to match whether they are on the showroom floor or outside on the lot in the sun.
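For anyone who wants to see metamerism in numbers, here is a minimal toy sketch. Everything in it is an assumption made up for illustration: six wavelength bands, three idealized sensors that each sum two bands, and two hypothetical inks. It is not real ink or cone data.

```python
import numpy as np

# Toy model (all numbers are made up): six wavelength bands, three sensors.
# Each sensor sums two neighbouring bands with equal sensitivity.
sensors = np.kron(np.eye(3), np.ones((1, 2)))  # shape (3, 6)


def response(reflectance, illuminant):
    """Sensor response to a surface lit by a given illuminant."""
    return sensors @ (reflectance * illuminant)


# Two hypothetical light sources: one flat, one weak in every second band.
flat_light = np.ones(6)
tilted_light = np.array([1.0, 0.2, 1.0, 0.2, 1.0, 0.2])

# Two hypothetical inks with different spectra.
ink_a = np.array([0.5, 0.5, 0.5, 0.5, 0.1, 0.1])
ink_b = np.array([0.8, 0.2, 0.8, 0.2, 0.1, 0.1])

print("flat light:  ", response(ink_a, flat_light), response(ink_b, flat_light))
print("tilted light:", response(ink_a, tilted_light), response(ink_b, tilted_light))
```

Under the flat light both inks produce the same three sensor values, so they "match". Under the tilted light the values differ, which is the showroom versus parking lot problem in miniature.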

This is just a proof of concept at uni so that issue hasn’t been considered. They are most probably using one printer with four inks and each ink has one profile.

When they do consider it – Different printers (inks) having different profiles for the same colour is not an issue. However different inks with different colours having the same profile IS perhaps more of an issue.

Past that – suggesting that I am completely off base with the colour issue just indicates to me that perhaps you haven’t completely considered the AI influence on the outcome.

In traditional data analysis we have a saying that has a number of ways to be expressed that basically goes like this – Rubbish in, Rubbish out. This isn’t strictly true for “big data” and AI, where if you get enough “Rubbish” in you can get a usable result out.

Or in simple terms, throw enough data at the AI using inks with different IR or NIR spectrum profiles and let it work it out, that’s what AI does best.

I don’t think you’re right. I think the Hackaday article is right.

The thing is that the inks have different responses to red-green-and-blue lighting. Quite predictable actually.

They also have different responses to three different wavelengths of IR light. Now… as long as things are more or less linear, and as long as the responses are different, you can deduce the response to “RGB” light and reconstruct the original colors.

However, when two manufacturers make “yellow” ink, they might use a totally different process, but end up with the same yellow to the (normal vision) human eye. But in infrared, at those three IR wavelengths, the responses might be totally different. The deduced color will then be totally wrong.

The “AI” in the original article is totally unnecessary. Doing regressions is enough. But if you already know how to train your neural nets, this might be easier.

The experiment that they did will work great, but actual “real life” stuff will work much less well.
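The regression idea in this comment can be sketched roughly as follows. This is only an illustration under loud assumptions: the relationship between three NIR band responses and RGB is taken to be exactly linear, and the matrix `W_true`, the training patches, and both ink signatures are invented. It is not the researchers' method or data.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical ground truth: RGB is a linear mix of three NIR band responses.
W_true = np.array([[0.9, 0.1, 0.0],
                   [0.1, 0.8, 0.1],
                   [0.0, 0.1, 0.9]])

# Training set: 50 printed patches from ONE ink set, measured in NIR and RGB.
nir_train = rng.uniform(0.0, 1.0, size=(50, 3))
rgb_train = nir_train @ W_true

# Ordinary least squares: find W so that nir_train @ W approximates rgb_train.
W_fit, *_ = np.linalg.lstsq(nir_train, rgb_train, rcond=None)

# An ink from the training ink set is reconstructed correctly.
nir_ink_a = np.array([0.6, 0.5, 0.1])
rgb_target = nir_ink_a @ W_true
rgb_pred_a = nir_ink_a @ W_fit

# A second maker's ink is assumed to look identical in visible light
# (same rgb_target) but to have a different NIR signature.
nir_ink_b = np.array([0.1, 0.5, 0.6])
rgb_pred_b = nir_ink_b @ W_fit

print("target colour:    ", rgb_target)
print("predicted (ink A):", rgb_pred_a)
print("predicted (ink B):", rgb_pred_b)
```

The fit recovers the mapping for the ink set it was trained on, and confidently returns a different colour for the second ink, which is the failure mode described above.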

Ok, let’s go through these one by one, but before I start –

This device never knows the actual colour and therefore can never produce the actual spectral colour.

Human beings have no capacity to perceive the true spectral colours on an LCD screen, on a printed piece of paper or in nature. We do NOT have spectral vision! We have trichromatic sensory information (some females have tetrachromatic) that cannot sense or reproduce the original spectral information. However, as most humans have very similar trichromatic colour ranges, they can easily agree on sensory information which is very similar for most humans, and agree to the perception of colours that don’t actually represent what is being viewed but do represent the neural sensations perceived.

So to make it clear, this device never has to reproduce the original colours, in fact it can’t. What it can do is reproduce the sensory stimulus in our eyes so that we perceive something in the same way we perceived a colour, that we never really perceived correctly anyway because we are incapable of doing that in the first place.

To do this it is fed an image from an RGB sensor and the same image from an IR or NIR sensor and learns how to convert from one to the other, most probably through feedback optimization (Machine Learning). We don’t have to understand how it does that. We can just call it a black box. We can give it weather charts and it can tell us how many potatoes have grown in the garden and what sizes they are under a bell curve. We can give it images of people moving in crowds and ask it to detect the emotions anger or love and it can highlight those experiencing these emotions. OR we can give it NIR/IR scans of carrots and potatoes, a bowl of confetti, or a burnt down house and it can tell us the original colours as long as it has been given enough training information. And the training information for feedback optimization is minimal.

So just about every comment here today is right, and in some cases that “right” has some provisos, but that is absolutely and perfectly normal when humans talk about “colour”, because we (almost) all have a common perception. However, this being HAD, we know the difference between a tone and a chord, a frequency and a spectrum, but all too often we don’t realize that we can’t actually see a spectrum of colour like we CAN hear polyphony or smell combined chemical reactions.

… so you clearly dislike people making comments at your expense due to differences in your perception … but you have no issue making comments at others’ expense due to differences in their perception … oooookkkkkaaaayyyy ….

Also no point calling “no offense” before saying something that will likely be offensive, it’s not like saying the magic words will lessen the blow lol.

*I say this not because I inherently disagree with the specific points you make after the first few sentences, but because the beginning is counterproductive to your argument

Quote [Me] : “I’m colour blind and I’ll bet someone will have a dig at me for that. Go for it, you not the first”

And here you are.

People make offensive comments to me at times, this is the internet what’s new about that. In the vast majority of cases I’m not at all offended. In many cases people (on a site like this) are offering alternative perspectives which is perfectly their right to do. In some cases these alternative perspectives show me that I was incorrect and I appreciate that as it’s a part of the learning experience I come here for. I will acknowledge the correction and often provide a link supporting the correct perspective. There are many people with vast experience and professional capacity on things I have no clue about and wish to learn more about these things.

There is very limited scope in even calling my “colour” perspective different. I still have trichromatic vision like the majority of people, however the cones in my eyes are tuned to different wavelengths so I see different parts of the colour spectrum. I am often abused for this or how it affects my life because other people (perhaps with a superiority complex) choose to call my condition a deficiency while at the same time believing that their vision is perfect and they possess full spectrum sensory capacity, when in fact they are simply trichromatic, just as I am.

Now – you ended your comment with – “*I say this not because I inherently disagree with the specific points you make after the first few sentences, but because the beginning is counterproductive to your argument”

But you started with “… so you clearly dislike people making comments at your expense due to differences in your perception” and there has been no point now that I have expressed any concern or dislike about this. In the absence of declaring what exactly you are referring to then I am left to assume you are referring to my difference in colour perception.

Then when you state – “Also no point calling “no offence” before saying something that will likely be offensive”

I will point out that no one other than you has suggested that anything in what I have said is offensive or has been taken as offensive.

So perhaps instead of saying “Go for it, you not the first”, I should have elaborated by saying …

I am trichromatic just like everyone else. The wavelengths that my cones are sensitive to are different to the average meaning that I am not at the centre of the bell curve.

This is a permanent condition that I was born with and will live with for the rest of my life.

Some people choose to call this a deficiency that I have, when the way their vision functions is not technically different; it’s simply that there is a bell curve distribution and many people are closer to the centre of the bell curve.

As this is a lifelong condition, I have had this response more times than I can count and I vehemently defend myself.

So if you choose this path then “Go for it, you not the first”.

If it’s AI choosing the colours, then you really have no idea if it’s got it right or not. I wonder how easy it is to fool. When people rely heavily on this sort of technology, they trust it far too much.

Considering the likely first use of this technology will be military, there is a significant budget to profile an obscene number of chemicals and build a neural network that is excellent at its job.

Military? Probably not. It’d be pretty useless to the military. If you have to emit IR you are visible to the enemy, because their night vision goggles etc. would perceive your emissions as if you were using a flashlight in the dark. You’d be a great target. Older night vision technology used IR emission; the military outgrew it, and newer generations of night vision tech are passive (no emissions).

Yes, exactly – “correct” here means normative according to whatever the dataset was

“So a pure red object reflects red and absorbs other colors.”

Sounds right to me! My guess is that after you posted this, you realised you wanted to delete your comment, but couldn’t 😀

Then please explain it to us.

Just saying it’s wrong without explaining what you think is correct doesn’t help anyone to understand the science any better.

Scientifically it is correct. However human perception is quite different.

“Check how your eye-brain interface works.”

How the eye-brain interface works is separate from how real objects reflect/absorb light.

Of course, “red” and “color” are terms regarding the perception of light. Still, they represent commonly accepted reflection/absorption profiles.

So, for a given reflection/absorption profile commonly perceived as “red”, the statement “So a pure red object reflects red and absorbs other colors” is correct – and is measurable using a spectrograph.

Don’t forget emission. You hit atoms with photons, they tend to throw photons back at you, but at a different characteristic wavelength.

Objects appear colored due to absorption, reflection, diffusion, and emission of light.

I’ll let the Museum of Natural History explain it since there is no advantage to reinventing the wheel.

https://www.amnh.org/explore/ology/brain/seeing-color

I have an interesting idea about this however, and I would like to read what others think of this. Who knows, it may spark some ideas.

Back in the analog TV days before the invention of color television, black and white television sets would show color TV shows in various shades of gray. I’m sure many of you have seen movies that were originally black and white (It’s A Wonderful Life) that have been colorized.

My thought is, red is the lowest frequency of visible light, and violet is the highest. Let’s use the AM radio band as an example. We will use 1000 kHz for red, 1050 kHz for orange, 1100 kHz for yellow, 1150 kHz for green, 1200 kHz for blue, and 1250 kHz for violet.

Now let’s say in this example, that infrared is 200 kHz to 450 kHz. 200 kHz for red, 250 kHz for orange, 300 kHz for yellow, 350 kHz for green, 400 kHz for blue and 450 kHz for violet.

A simple conversion is all that would be necessary to “downshift” visible light frequencies into the infrared or upshift infrared light into the visible light spectrum. Not like how thermal vision works with hotter items assigned a color, but actually using a converter.

Thoughts?

You’d end up with a false color image. There would be colors, but they would be completely different from the ones we see. This is pretty common with space photos.

Well, to my simple mind, it makes sense.

If infrared is between frequency A and frequency B, and visible light is between frequency C and frequency D, a simple conversion table could be used with no AI involvement at all. That’s just me I guess.

Sound as an example, 1000 Hz tone, add another 1000 Hz and you have a 2000 Hz tone. :) Like I said, simple to me. I’m sure those that are more knowledgeable in the subject will comment. :)
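The "simple conversion" proposed here amounts to a linear remap from one band onto another. A minimal sketch, using the made-up AM radio numbers from the analogy above (the function name `remap_band` is hypothetical):

```python
def remap_band(value, src_lo, src_hi, dst_lo, dst_hi):
    """Linearly map a value from the band [src_lo, src_hi] onto [dst_lo, dst_hi]."""
    scale = (dst_hi - dst_lo) / (src_hi - src_lo)
    return dst_lo + (value - src_lo) * scale


# Analogy numbers from the comment: "infrared" 200-450 kHz, "visible" 1000-1250 kHz.
IR_BAND = (200.0, 450.0)
VISIBLE_BAND = (1000.0, 1250.0)

for ir_khz in (200.0, 250.0, 300.0, 350.0, 400.0, 450.0):
    print(ir_khz, "->", remap_band(ir_khz, *IR_BAND, *VISIBLE_BAND))
```

With these numbers the remap is just a shift of 800 kHz. As the replies note, the result is a consistent false-color image: nothing in the mapping knows how a surface reflects visible light, so the shifted colors need not resemble the ones we would see in daylight.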

You could downshift an image from the infrared spectrum to the visible spectrum by down shifting all the elemental frequencies down.

If colours existed in the spectrum of sound then on a piano the different colours would be different chords and not simply different notes.

Black and white pictures can’t have different brightnesses at the same time so they can’t be converted directly to colour.

There is a third element called “colour temperature” and this is something that is strongly perceived by colour blind people and this sense gives a perception whereby the colours are apparent even if they can’t be seen by a colour blind person even on a black and white TV. It has to do with contrast which the human eye is a thousand times better at detecting than colour.

At night in a dimly lit area, a person with normal colour perception will perceive colours in the brain when there is no matching stimulus from the eyes. These are anticipated colours which may sound very odd but you have to keep in mind that colours don’t actually exist in the way humans perceive them anyway.

Oddly enough this article comes at a time when I am working with tNIRS modulated with alpha / beta / gamma natural frequencies of human brain output.

Also, because animals can’t naturally see NIR, there aren’t many pigments in this range in nature. Most pigments we have are because they have evolved for seeing animals, or picked by humans. So the colour you get in NIR is probably going to be quite subtle. If what you want is an RGB mapping to see, then there is no reason to just upconvert, or use 3 zones for IR. You could use as many sensors as you like and map those to RGB space.

This doesn’t work because the spectral absorption profiles are different for each molecule. Different substances have different ratiometric absorption and scattering/reflection across the visible and near-infrared. Recoloring just from the infrared absorption into the visible would make an RGB color image, but the RGB colors wouldn’t correspond to the RGB colors we normally perceive for the same substance.

That’s not a Tank, that’s a Banana!

If this is (potentially) to be used in the military, I sure hope they overcome any potential countermeasures before it sees use in the field.

This presumes the enemy is well-funded enough to afford and use exotic paints that have no NIR profile… which only works in 100% darkness and does nothing for actual infrared.

The banana is for scale! It’s a really tiny tank.

This approach could be used with an IR camera and a regular RGB camera.

At night, the RGB camera will be almost entirely noise, but with the gain turned up very high, and given as input to the AI network together with the IR image, it should be enough to give that tiny bit extra information necessary to make colours accurate.

Ironically most cheap RGB cameras are most sensitive to IR so they have an IR filter to block out the IR so it doesn’t saturate the sensor.

There are situations where a scene is illuminated with zero visible light, so all that can be detected is the thermal spectrum of room temperature emission coupled with the emissivity of the surface. This has been rendered as shades of green in historical night vision goggles (NV). They could use whatever monochrome display they desired – the green they often use is a carryover from the earliest NV. In truth, it is rare to have zero light, so a sufficiently sensitive detector will see a fully illuminated full color scene.

In the past 5 years there have emerged color video cameras with an ASA of 100,000 to 4,000,000 that work to a degree.

When an ASA 4,000,000 scene is encountered it is de facto ‘photon starved’. This means they must resort to longer times to accumulate photons to render an image, often quite long, suited for stills and not action.

More here:- https://www.dpreview.com/news/8124846439/canon-s-iso-4-million-multi-purpose-camera-was-used-to-record-fluorescent-life-in-the-amazon

and this 5,000,000 ASA

https://www.cined.com/x27-a-5-million-iso-true-low-light-night-vision-camera/

You (and the original author) are labouring under a misapprehension regarding night vision goggles. Historic (and a lot of current) night vision goggles did not detect thermal emission; they used photocathodes for visible and near-infrared light and required a phosphor to convert the electrons back to photons. Of the available phosphors, green works best as it can work with a lower light intensity. For thermal based systems grey scales are typically used, as we are better able to discern details.

Beer and AI are both technologies, this use of AI is the equivalent of Beer Goggles.