It’s a fair bet that anyone regularly reading Hackaday has a voltmeter within arm’s reach, and there’s a good chance an oscilloscope isn’t far behind. But beyond that, things get a little murky. We’re sure some of you have access to a proper lab full of high-end test gear, even if only during business hours, but most of us have to make do with the essentials due to cost and space constraints.

The ideal solution is a magical little box that could be whatever piece of instrumentation you needed at the time: some days it’s an oscilloscope, while on others it’s a spectrum analyzer, or perhaps even a generic data logger. To simplify things, the device wouldn’t have a physical display or controls of its own; instead, you would plug it into your computer and control it through software. This would not only make the unit smaller and cheaper, but also allow custom user interfaces to be created that precisely match what the user is trying to accomplish.

Wishful thinking? Not quite. As guest host Ben Nizette explained during the Software Defined Instrumentation Hack Chat, the dream of replacing a rack of test equipment with a cheap pocket-sized unit is much closer to reality than you may realize. While software defined instruments might not be suitable for all applications, the argument could be made that any capability the average student or hobbyist is likely to need or desire could be met by hardware that’s already on the market.

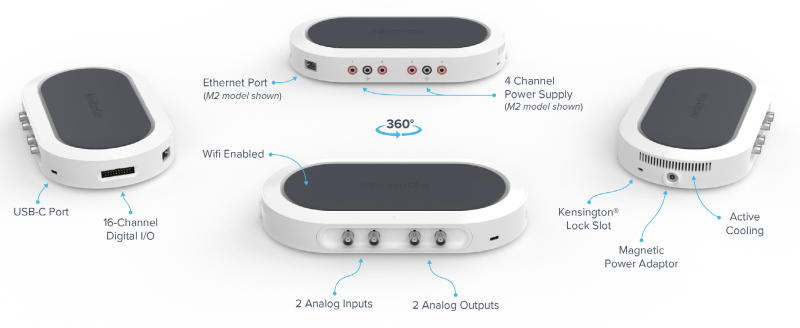

Ben is the Product Manager at Liquid Instruments, the company that produces the Moku line of multi-instruments. Specifically, he’s responsible for the Moku:Go, an entry-level device aimed squarely at the education and maker markets. The slim device doesn’t cost much more than a basic digital oscilloscope, but thanks to the magic of software defined instrumentation (SDi), it can stand in for eleven instruments, each with more than enough performance for its target users.

So what’s the catch? As you might expect, that’s the first thing folks in the Chat wanted to know. According to Ben, the biggest drawback is that all of your instrumentation has to share the same analog front-end. To remain affordable, that means everything the unit can do is bound by the same fundamental “Speed Limit” — which on the Moku:Go is 30 MHz. Even on the company’s higher-end professional models, the maximum bandwidth is measured in hundreds of megahertz.
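To give a rough feel for what a 30 MHz “speed limit” means in the time domain, here is the common rule-of-thumb conversion from bandwidth to the fastest rise time the front end can faithfully show. This is a sketch, not a Liquid Instruments specification: the 0.35 factor assumes a roughly Gaussian or single-pole front-end response, and real hardware may differ.

$$
t_r \approx \frac{0.35}{\text{BW}} = \frac{0.35}{30 \times 10^{6}\,\text{Hz}} \approx 11.7\,\text{ns}
$$

In other words, edges much faster than about 12 ns would be smeared out by a 30 MHz front end, whichever instrument mode is running.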

Additionally, SDi has traditionally been limited by the speed of the computer it was attached to. The Moku hardware sidesteps this particular gotcha by running the software side of things on an internal FPGA. The downside is that some of the device’s functions, such as the data logger, can’t actually live-stream data to the connected computer. Users have to wait until the measurements are complete before pulling the results off, though Ben says there’s enough internal memory to store months’ worth of high-resolution data.

Of course, as soon as this community hears there’s an FPGA on board, they want to know if they can get their hands on it. To that end, Ben says the Moku:Go will be supported by their “Cloud Compile” service in June. Already available for the Moku:Pro, the browser-based application allows you to upload your HDL to the Liquid Instruments servers so it can be built and optimized. This gives power users complete access to the Moku hardware so they can build and deploy their own custom features and tools that precisely match their needs without a separate development kit. Understanding that obsolescence is always a problem with a cloud solution, Ben says they’re also working with Xilinx to allow users to do builds on their own computers while still implementing the proprietary “secret sauce” that makes it a Moku.

It’s hard not to get excited about the promise of software defined instrumentation, especially with companies like Liquid Instruments and Red Pitaya bringing the cost of the hardware down to the point where students and hackers can afford it. We’d like to thank Ben Nizette for taking the time to talk with the community about what he’s been working on, especially given the considerable time difference between the Hackaday Command Center and Liquid’s Australian headquarters. Anyone who’s willing to jump online and chat about FPGAs and phasemeters before the sun comes up is AOK in our book.

The Hack Chat is a weekly online chat session hosted by leading experts from all corners of the hardware hacking universe. It’s a great way for hackers to connect in a fun and informal way, but if you can’t make it live, these overview posts, as well as the transcripts posted to Hackaday.io, make sure you don’t miss out.

Very much like a cell phone, it does a number of things, all of them poorly.

Poor ADC performance, poor noise floor, poor bandwidth, poor resolution, poor this, halfway acceptable that.

There are still people who think their cellphone has a 108 MP sensor. It’s really 3x 36 MP sensors, all with poor noise suppression, poor light sensitivity, poor optics, and poor-quality plastic lenses, and yet they think it is “just as good” as a DSLR whose lens alone costs more than the cellphone.

In other news, my Raspberry Pi is “just as good” as my Ryzen 7 2700.

Are you saying you never take pictures with a phone because it’s not a DSLR?? The whole point of this approach is that it lets you keep a variety of low-end tools within arm’s reach for a fraction of the price and space. Exchanging quality for price/size/accessibility is an engineering choice, and just because you don’t fit its use case doesn’t mean it has no value.

In engineering, you don’t get extra credit for getting the wrong answer faster. To call this a lab instrument is a joke. In that case, my RadioShack pocket DMM is also a lab instrument.

Phone cameras are good for Instagram, selfies, TikTok, etc., nothing more.

I’d call it a lab instrument, in that at first glance it seems like a better version of the USB-connected pocket scope/logic analysis tool that I use, which I know has been used in ‘real’ labs. Just because it’s not accurate to a billionth of a volt, or able to pull low-power signals out of the noise, doesn’t preclude it from being very useful in the right type of experiment. Heck, super-high-end lab equipment that cost several years’ pay only a few decades ago wouldn’t do as well as many of the cheap tools available now.

It might not be getting the ‘right’ answer, which you can argue no tool is able to get anyway; they all have noise, bitrate limits, etc. But it is getting useful relative trends, and results that are accurate enough to be useful are results!

Also, while I hate phone cameras, in general they are useful for more than you suggest, especially the ones that let you get the raw output of every CCD involved. They’re still nothing on a DSLR or mirrorless camera with a vastly larger, more sensitive, and probably higher-pixel-count sensor coupled to a much, much better lens with aperture control, but you can still use the crappy webcam and phone cam for many things, including real science tasks, as long as you can work on the raw images rather than the AI-fucked-with ones.

Apple has made some really high-quality commercials with an iPhone.

Phone cameras are also superb for machine vision applications like facial recognition and 3D image reconstruction.

Phone cameras are used extensively for evidence gathering because you can control the custody of the data and you get timestamps and geolocation for free.

Phone cameras are used extensively in warehouses as a modern replacement for a bar code scanner.

Phone cameras are used by construction workers as remote displays so machinery operators can see what they are doing.

Phone cameras are used by farmers to monitor crop growth; accurate colors are essential here.

I could do this all day...

In engineering there isn’t a right or wrong answer, there’s just an answer with appropriate accuracy to the task. Yes we had $10 multimeters in our lab. They were appropriate for the task at hand and inappropriate for others.

Calling anything lab equipment or not is just senseless elitism, not engineering.

It’s also a dead giveaway that the person hasn’t spent any time in actual labs. Like the people who say “Arduinos and Raspberry Pis are never used in production.”

If you use an Arduino or a Raspberry Pi in production, you are producing cheap consumer e-waste or something trivial.

I work on PCIe Gen5/Gen6 SerDes development; an Arduino, or a Pi, or this crap has no place in my lab.

If you are working on the next throwaway piece of tech, then sure, go ahead.

Indeed. A good engineer would ask “how wrong?” and “how fast?”, and base the decision on the trade-off.

In engineering, it’s a question of good, fast, or cheap; pick any two…

Boeing hardware engineers’ software standards required all software modules to be no longer than one page of code, for traceability and maintainability. The standards applied to tools too… like gcc and ld. They were in place from before 1966 until after 1980. Were they still in place after the C/C++ invasion in ~1991?

“The best camera is the one you’ve got with you.” as the adage goes. I’ve long since stopped carrying my DSLR everywhere.

And I carry my DSLR and SLR everywhere so I can take a good picture if the opportunity is there. My backpack is heavy! :D

And for such a premium price you’d expect a bit more as well. If I wanted to make an “ultimate lab” setup like this I’d stick with various USB scopes, some DreamSourceLabs scopes would get you most of the way, for a fraction of the price, with way better performance, and they’re fairly well tested as well.

I will agree on the phone camera part, sort of.

My phone has a 64-megapixel camera, but those pixels are tiny. My Canon camera at home has a 10.1 MP sensor with much larger pixels.

My phone takes great pictures when there is enough light, but make it a bit dark out and, even with the flash, it’s nothing compared to my Canon camera. You just have to pick the right tool for the job, and for most of my general use my phone works more than fine.

In the case of this lab equipment, it can technically work better than my expensive 20 MHz analog scope, which literally WAS lab equipment. Also, this looks like it can do a lot, and I like how you make assumptions about the quality of a product that you probably don’t own and whose specs you probably haven’t looked at.

Also, what’s the deal with using Bill Gates’ name? Sort of weird.

The input impedance is bad: 1 meg is not enough to call it a scope or a meter; you need at least 10 megs.

1 meg is the normal input impedance of most ‘scopes. If you want 10 meg, use a 10x probe, you’ll get lower capacitance as well.
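To illustrate the 10x probe point, here is the resistive divider formed by a typical passive 10x probe and a 1 MΩ scope input. The 9 MΩ tip resistor is an assumption about a typical passive 10x probe, not a figure for any specific product, and the probe’s compensation capacitor is ignored for this DC view.

$$
V_{\text{scope}} = V_{\text{in}}\,\frac{R_{\text{scope}}}{R_{\text{tip}} + R_{\text{scope}}} = V_{\text{in}}\,\frac{1\,\text{M}\Omega}{9\,\text{M}\Omega + 1\,\text{M}\Omega} = \frac{V_{\text{in}}}{10},
\qquad
R_{\text{load}} = R_{\text{tip}} + R_{\text{scope}} = 10\,\text{M}\Omega
$$

So the circuit under test sees roughly 10 MΩ, at the cost of a 10:1 attenuation that the scope scales back out.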

This article never claimed the product was “just as good” as anything. You’re quoting a straw man. It’s a cheap and versatile bit of kit that could easily come in handy in many legit labs. If it doesn’t meet your needs designing PCIe 6.0 circuits, then don’t use it. Your lab doesn’t set the standard for what’s legitimate. The only thing that matters is: does it meet someone’s needs.

By the way: modern smartphone cams absolutely go toe-to-toe with what used to be considered “professional equipment”. A Raspberry Pi may not compete with a Ryzen 7 2700, but it could run circles around what used to be considered “professional workstations”. Thinking that professionals can’t make use of such advancements is simply ignorant.

Nobody’s impressed with your ignorance parading as elitism. It’s actually quite sad that a grown adult has to seek self-esteem by pretending they’re above a bunch of strangers online.

Agreed. I hate to break it to him, but we use RasPis to monitor and test top-of-the-line enterprise network switches. They are cheap, which makes them very useful for many things.

Just wait until he learns that NASA keeps using the same radiation-hardened PowerPC processor from ~1998 in all their probes and rovers, not because there aren’t better radiation-hardened processors, but because it gets the job done and it’s well-proven, or that his Ryzen 7 2700 was actually a consumer-grade part targeted at the same unwashed masses that use the Raspberry Pi!

Maybe he should compare the specs of the camera George Lucas used to film Attack of the Clones (https://www.panavision.com/products/uk/hd900f) to a modern smart phone.

What if, now bear with me on this one: the quality of a film or lab or whatever is more a function of the minds working on whatever problem than the gear they use. Like someone could make an interesting and compelling movie with a camera phone even if they couldn’t use a $200k lens. A game designer can make an entertaining and ground-breaking video-game even if they don’t have a $10k workstation graphics card. Biologists can use Arduino-based tracking devices to make crucial discoveries.

Maybe our “friend” got lost and doesn’t realize what website he’s posting on…

To be fair monitoring isn’t really relevant to what they were saying, this could be done with nearly anything.

Hard disagree that “the dream” is for all my equipment to go through a computer. I’ve used both and VASTLY prefer dedicated instruments. You can put them where you need them, and their buttons, knobs, and switches are all hardware, optimized for what you actually need in a way that a mouse and keyboard can never even approach (the same goes for software controls, though). Not to mention that using one instrument doesn’t interfere with using the others. Now that I’m used to them, I can configure any instrument I’ve got for whatever measurement I need faster than most of these applications can even start on a computer, never mind wading through their annoying menus.

This sort of thing is like a multi-tool: useful to have around, and it absolutely has a place, but just like with hand tools, there’s a reason we all still have separate tools for everything instead of twenty Swiss Army knives. Try to do one thing well and you can build an excellent tool for the job. Try to do everything and you’ll do it all poorly. This thing ain’t cheap, but the performance is barely on par with about $100 worth of used, dedicated test gear you could pick up on eBay.

These things look like they compare poorly even to the established direct competitors.

At the high end, their Lab and Pro models are in competition with the big-name modular instrument things (NI VirtualBench, Keysight’s lower-end Modular lines like the Streamline stuff), and priced like it, and I’m not sure they’re credible enough to play in that (small, conservative, and expensive) market.

In the mid-range, there are a number of established relatively inexpensive USB-attached electronic multi-tools, like the Analog Devices ADALM2000 https://www.analog.com/en/design-center/evaluation-hardware-and-software/evaluation-boards-kits/adalm2000.html ($175; 2x 100MSPS oscilloscope channels, 2x AFG, 0 to +5V and 0 to -5V programmable PSU, 16x DIO, Scopy software that runs on Windows, OSX, Linux, or Android) and Digilent AD2 https://digilent.com/shop/analog-discovery-2-100ms-s-usb-oscilloscope-logic-analyzer-and-variable-power-supply/ (price has recently spiked to $399; 2x 100MSPS oscilloscope channels, 2x AWG, 0 to +5V and 0 to -5V programmable PSU, 16x DIO, Waveforms software that runs on Windows, Mac OS X, and Linux).

Their bottom-of-the-line Moku:Go M0 is $599 and lacks the power supplies; the M1 is $700, and the only feature I see differentiating it from the competition is a PSU channel that can go up to +16V.

Also, the mid-range USB-attached-instrument-pod market is getting squeezed from both sides.

From above, since you can get into a mid range oscilloscope with built-in passable AWG for like $1k (Think Rigol MSO5000 or Siglent SDS2000X Plus). Admittedly they don’t have the nice programmable digital IOs even if you add the 16CH logic pods for another $500ish, and also lack the (feeble) built-in PSUs, but they are a _lot_ more instrument.

From below, by the various little USB scope and IO boxes (Look at Sigrok (https://sigrok.org/) to avoid their usually-awful vendor software, think Hantek shitboxes and FX2 based gadgets) in the $25-100 range, or even things like the buck50 stm32 firmware ( https://github.com/thanks4opensource/buck50 ) that gets you a rough low-end instrument pod on a $2 dev board.

I’ve been teaching a class largely using AD2s (and/or the larger ElectronicsExplorer that is on the same platform) for years, and the department I work in has a cohort trying out the ADALM2000 this year because AD2 prices and availability were getting unreasonable and it looked like the next thing to try when we surveyed the market. They make great basic I/O and _very_ limited PSUs (almost a feature: they cut off automatically on current spikes, which helps avoid dead chips), but their scopes are both mediocre enough that I’ll reach for a bench scope when it’s available, even if I already have the pod hooked up to my circuit for other functions.

Thanks for the links!

I don’t know if it was paid but they did host the Hack Chat. I suppose if we want to see less corporate aspects like this then more of us should probably respond to the “you should host a hack chat” calls that frequently come out on hackaday.io.

This example isn’t miles away from some of the posts featuring Adafruit products but perhaps we are less sensitive to “promotion” of open source hardware and that is why this piece has a slightly “not a hack(aday spirited)” whiff about it.

I do like the fact people are continuing to explore the trade space of test and measurement equipment to better serve our own priorities and take advantage of new components and price points.

I was interested until the word “cloud” showed up. It was strange to feel that interest evaporate so rapidly.

That’s because the utility of cloud is still emergent. Fully realised it could be a wonderful innovation.

Take, for instance, these bio-neural genetic atypicality secured blockchain NFTs I’ve invented, which are full cloud on the crowd interfaced. Sit really comfortably and visualise the most relaxing environment possible, focus on it, bringing every detail into sharp focus, sit there 5 minutes and absorb every living detail into your mind… there you go, that can be yours for only 0.1 BTC.

No, the issue with the cloud is that you lack control over your data and the services get abandoned regularly.

Test, replies don’t seem to be added anymore, hmmm

I get that same reaction when I read/hear “cloud” too!

B^)

No teardowns anywhere? Not interested.

Neat idea! I don’t care about a tricorder, just give me a hypermeter and I’ll be happy!

https://www.zachtronics.com/ruckingenur-ii/

I’m pretty sure I could buy a proper 100MHz scope with real buttons and a screen for that kind of money, and still have plenty left over for a logic analyser, AWG etc… If they weren’t built in to the scope already. It’s a nice idea but sometimes there’s a reason nobody else does it that way.