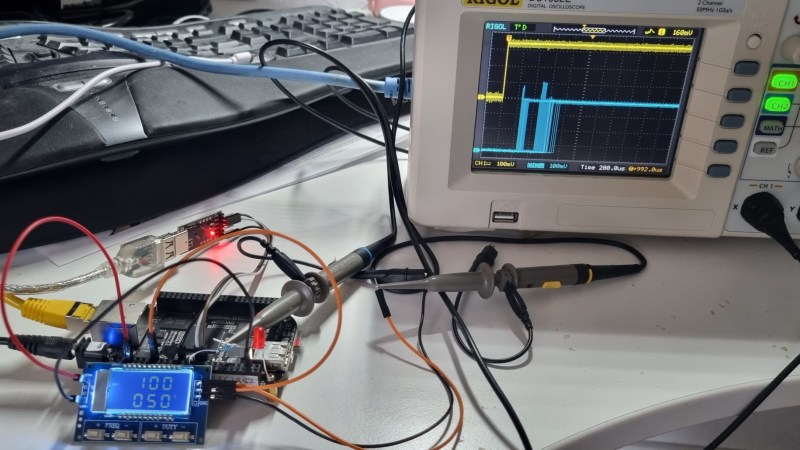

When debugging something as involved as kernel scheduler timings, you would typically use one of the software-based debugging mechanisms available. However, in cases when software is close to bare metal, you don’t always need to do that. Instead, you can output a signal to a GPIO, and then use a logic analyzer or a scope to measure signal change timing – which is what [Albert David] did when evaluating Linux kernel’s PREEMPT_RT realtime operation patches.

When you reach for a realtime kernel, latency is what you care about – realtime means that for everything you do, you need to get a response within a certain (hopefully very short) interval. [Albert] wrote a program that reads a changing GPIO input and immediately writes the new state back, and scoped both signals to figure out the latency of the real-time patched kernel as it processes the writes. Overlaying all the incoming and outgoing signals on the same scope screen, you can quickly determine just how suitable a scheduler is when it comes to getting acceptable response times. [Albert] also provides a ready-to-go BeagleBone image you can use for your own experiments or, say, in an educational environment.
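If you export the edge timestamps from a logic analyzer instead of eyeballing the scope, the same measurement can be reduced to a few numbers. The sketch below is only an illustration and not [Albert]'s tooling: the function names and the sample timestamps are made up, and it assumes every output edge answers exactly one input edge.

```python
from statistics import mean

def edge_latencies(input_edges, output_edges):
    """Pair each input edge with the first output edge at or after it.

    Both arguments are sorted lists of timestamps in the same time unit
    (hypothetical data, e.g. exported from a logic analyzer capture).
    """
    latencies = []
    j = 0
    for t_in in input_edges:
        # Skip output edges that happened before this input edge.
        while j < len(output_edges) and output_edges[j] < t_in:
            j += 1
        if j == len(output_edges):
            break  # no response was captured for the remaining inputs
        latencies.append(output_edges[j] - t_in)
        j += 1  # assumption: one output edge answers one input edge
    return latencies

def summarize(latencies):
    """Return min, mean, max and jitter (max - min) of the latencies."""
    lo, hi = min(latencies), max(latencies)
    return {"min": lo, "mean": mean(latencies), "max": hi, "jitter": hi - lo}

# Made-up timestamps in microseconds, purely for illustration.
inputs = [0, 1000, 2000, 3000]
outputs = [40, 1055, 2035, 3090]
print(summarize(edge_latencies(inputs, outputs)))
```

For a realtime evaluation, the maximum and the jitter are the interesting figures; the mean says little about worst-case behavior.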

What could you use this for? A lot of hobbyists use realtime kernels on Linux when building CNC machine controllers and robots, where things like motor control put tight constraints on how quickly a decision in your software is translated into real-world consequences, and if this sounds up your alley, check out this Linux real-time task tutorial from [Andreas]. If things get way too intense for a multi-tasking system like Linux, you might want to use an RTOS to begin with, and we have a guide on that for you, too.

WooHoo! Debugging micros like it’s 1989!

Great to see reminders of the simple old-fashioned techniques. Refreshingly direct.

Maybe even more useful now in this age of multi-core, multitasking micros with NVICs and complex toolchains that abstract and insulate you from the bare metal.

Linux doesn’t qualify as a Real-Time OS (RTOS), and neither does Windows. Both are incompetent behemoths.

Variants of DOS are, however. Real/32, for example. It’s part of the Multiuser DOS family.

I don’t see anyone here claiming that Linux is an RTOS. However, with the right approach it can be good enough for various soft-realtime tasks.

Microware OS-9 was apparently real time. NASA used it for some shuttle work.

I’m sorry, I didn’t mean to claim that anyone was claiming this.

I rather was thinking out loud.

Both modern Windows and Linux kind of take possession of the system timer, including the high-precision timer introduced with ATX PCs (LAPIC, part of APIC).

https://en.wikipedia.org/wiki/Programmable_interval_timer

In addition, the way multi-tasking is implemented in those systems also prevents critical real-time operations.

While it’s globally true, there are ways to do soft real-time on Windows via some drivers made for the purpose… I used that like 10 years ago, having a simple headless Windows CE running in a Windows XP driver. That wasn’t hard real-time, but it had jitter counted in microseconds. Definitely enough for most CNC.

Daniel Bristot de Oliveira has been doing some very interesting research in his attempt to determine if Linux can provide RTOS behavior (WCET) in certain circumstances and how to actually achieve it. The approach is unsurprisingly multifaceted, but if you’re interested, here’s a link to the first post in a series that is more easily consumed (https://research.redhat.com/blog/article/a-thread-model-for-the-real-time-linux-kernel/), and for the details, here you go (https://bristot.me/linux-and-formal-methods/).

A big part of the puzzle was added for the 6.0 release (Runtime Verification subsystem).

Also, LWN has had a number of articles regarding PREEMPT_RT, and you’ll find a number of claims, mostly in the comments (from folks already on the ground), about Linux currently being used as the bare metal kernel in safety critical situations.

Yes, that seems bizarre to me

What makes the difference for you? Which criteria matter for you?

Linux or Unix or Windows are the right platform for a Software PLC btw…

I’m with JHB: what’s your definition?

PREEMPT_RT is a whole bunch of kernel changes that, when used, tell you a lower latency limit for your hardware, and as long as you operate above that limit, you’re getting deterministic behavior on your timing. You might want faster real-time than that, sure, but what you’re getting is a type of real-time behavior.

“Real-Time” is too ill defined to really be useful as a description on its own.

Linux even without any of the RT kernel tricks could be considered Real-Time, suitable even for some ‘hard real time’ tasks, as it will reliably respond to the highest priority tasks on the scheduler within some window on this hardware. With the RT tricks that window gets smaller, or at the very least much more consistent. So both variations could be considered “Real-Time” sometimes – way way more than fast enough for most things you want “Real-Time” in (at least on relatively modern hardware, as that stuff clocks way way faster than we need for human time scales).

But sometimes you REALLY need cycle-accurate, very low response timing, and for that sort of extra-challenging and presumably “Hard Real Time” application it certainly isn’t the ideal solution.

Traditionally, Hard Real-Time OS or scheduling was defined by the requirement that certain tasks must be started and ended within a defined time. If the time is not met, then the system is not meeting its goals and must/should be reset.

You do not want to reboot your Linux constantly due to some core functions or drivers taking time out of the task with a deadline.

Just use Real-Time when there exists some sort of real-timish scheduling and priorities, and/or True-Time with lesser functionality.

The intention for this type of Hard Real-Time functionality drains out of the home user’s brain because there are rarely such processes in your home, but let’s talk about those nuclear power plants and how to control their rods….

Mobile phone comms has a lot of hard real time events/processing. So, not as rare as you might think.

The USB 3 protocol also has many hard real time limits where the connection will be lost if the driver fails to respond within a set time limit.

GNU Hurd cannot support modern USB protocols exactly because it cannot guarantee that the latency is good enough.

However, in case of Linux, USB drivers are part of the kernel. Typically the more interesting question is if it’s possible to write real time user mode applications.

Linux actually has RT patches that make Linux a hard realtime OS, and the setup described in this article was about those patches. Those patches are not yet part of the mainline kernel, though, and nobody can tell how much extra work is still required to get the hard realtime support into the mainline kernel. I think the biggest sponsor for RT patches is currently BMW. I guess they would like to use Linux in their cars.

Linux can be perfectly hard real-time. The trick is to get Linux out of your way completely – pin a thread to an isolated CPU core, run the kernel with nohz_full set for that isolated core, and never do any syscalls from said thread, only do memory-mapped I/O. This way you can have a very good guarantee for timing of all operations, assuming the worst-case DRAM access for everything.

You don’t even need any real-time kernel extensions.
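The pinning step from the recipe above could be sketched roughly like this. It only illustrates the setup: the helper names are hypothetical, it assumes the kernel was booted with isolated cores so that `/sys/devices/system/cpu/isolated` is non-empty, and a real hot loop doing memory-mapped I/O would be written in C rather than Python.

```python
import os

def parse_cpu_list(spec):
    """Parse a kernel-style CPU list such as "2-3,5" into a set of ints."""
    cpus = set()
    for part in spec.split(","):
        part = part.strip()
        if not part:
            continue
        if "-" in part:
            first, last = part.split("-")
            cpus.update(range(int(first), int(last) + 1))
        else:
            cpus.add(int(part))
    return cpus

def pin_to_isolated_cpu(path="/sys/devices/system/cpu/isolated"):
    """Pin the caller to the lowest-numbered isolated CPU, if any.

    Returns the chosen CPU number, or None when no CPU is isolated, the
    sysfs file is missing, or the platform lacks sched_setaffinity
    (it is only available on Linux).
    """
    if not hasattr(os, "sched_setaffinity"):
        return None
    try:
        with open(path) as f:
            isolated = parse_cpu_list(f.read())
    except OSError:
        return None
    if not isolated:
        return None
    cpu = min(isolated)
    try:
        os.sched_setaffinity(0, {cpu})  # pid 0 means the caller itself
    except OSError:
        return None  # e.g. the CPU is outside our allowed cpuset
    return cpu

print(parse_cpu_list("2-3,5"))
```

Calling `pin_to_isolated_cpu()` at the start of the time-critical thread is the intended use; everything after that point should avoid syscalls, as described above.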

It can be plenty real-time enough, and so can Windows for that matter. Sure, you would still build your missile guidance computer with a true RTOS, but for a generic PLC, the usability and flexibility of a full desktop OS is worth a lot.

To me, real time is just that the computer processes all its inputs before the results are needed.

So – the execution of stuff is always at least a little faster than it needs to be.

Hard real time is another matter.

Real time also means deterministic scheduling and prioritization.

LinuxCNC has a built-in latency measurement. I guess it uses a free-running hardware timer to collect timestamps, and then compares the “measured” timestamp with the “theoretical” timestamp. If you’re really into this, then combining and comparing these could be interesting.
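The “measured” versus “theoretical” comparison can be tried in a few lines. This is a rough sketch of the general idea, not how LinuxCNC implements its test: it assumes the OS monotonic clock is a good enough stand-in for a hardware timer, and the function name and default period are made up.

```python
import time

def measure_wakeup_latency(period_ns=1_000_000, cycles=200):
    """Sleep until each ideal deadline and record how late we woke up.

    Returns a list of (measured - theoretical) wake-up times in ns.
    """
    latencies = []
    start = time.monotonic_ns()
    for i in range(1, cycles + 1):
        deadline = start + i * period_ns   # "theoretical" timestamp
        remaining = deadline - time.monotonic_ns()
        if remaining > 0:
            time.sleep(remaining / 1e9)
        now = time.monotonic_ns()          # "measured" timestamp
        latencies.append(now - deadline)
    return latencies

if __name__ == "__main__":
    lat = measure_wakeup_latency()
    print(f"min {min(lat)} ns, max {max(lat)} ns, "
          f"jitter {max(lat) - min(lat)} ns")
```

Deadlines are computed from the start time rather than from the previous wake-up, so a late cycle does not push all the later ones back. Comparing these software-side numbers with a scope measurement on a GPIO would show how much latency the software cannot see.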

If you want your responses to be really fast and deterministic, use no OS.

If slightly less fast is ok, use an OS with a fixed timeslot based scheduler.

Or use some Windows or Linux to control your machine and, after the first few crashes with shredded expensive machinery, invest in big rubber buffers on all axes.

That may have been sound advice some 20 years ago, but these days it looks like an oversimplification. BeagleBones, for example, are now some 10-odd years old, and the Sitara processor they use has two “PRUs” (programmable real-time units), which are (on die?) microcontrollers that run their own firmware. You could for example use one PRU for EtherCAT, another for multi axis stepper motor control, and have Linux running on the main processor to handle a GUI, file I/O or whatever.

Multi core processors are also very common in these modern times. Some of these systems are capable of locking certain processes to a certain core and doing other neat stuff targeted at industrial and real-time applications.

And oh, don’t forget the ever increasing performance of these processors. “Single Core” performance is also creeping upwards although at a not so quick pace, but clockspeeds also seem to be improving lately. Those have been stuck around 1 to 3 GHz, but now I’m hearing rumours of 5GHz and more for desktop processors.

And also, with distributed I/O over EtherCAT and smart motor controllers, a lot can be done that was not possible in “previous generations” of automation.

And still, you’d better not run an RTOS on those RPUs (usually something like Cortex-R5), code it in bare metal instead. I do it all the time with Zynq UltraScale+ devices, with real-time tasks running on RPUs and FPGA fabric, and UI, networking, logging and other non-real-time load under a generic Linux.

Also, no, “smart” motor controllers do not help. For anything that involves more than one motor you still need real-time coordination, a tight real-time control loop, and real-time communication. For higher control frequencies, something like EtherCAT can be insufficient because of its latency.

Aww bummer, I was hoping (unrealistically) that there would be a tip for how to get just one GPIO pin for this purpose on a conventional desktop PC. Being able to signal an event to the outside world with known latency would be great.

Some Allwinner processors have an AR100 co-processor that a developer is using to control a 3D printer.

https://www.google.com/amp/s/www.hackster.io/news/intelligent-agent-s-recore-board-adds-some-serious-speed-to-your-3d-printer-c2757653d931.amp

The whole point of the PREEMPT_RT patches (not to be mixed up with CONFIG_PREEMPT, which is a step between a generic vanilla kernel and a true realtime kernel) is to be able to do true realtime stuff without extra dedicated hardware chips.

Of course, if you don’t dedicate a full CPU core to your realtime task, the worst case latency will be the sum of a TLB flush and a context switch. I think modern desktop CPUs have a TLB flush time around 100 ns, and context switch time is maybe a bit less.

If you need better latency than 200 ns, you have to dedicate a full CPU core to the realtime process, but you can do that, too, if your CPU has multiple cores (like the Raspberry Pi), without adding extra chips to the system.

Of course, the above assumes that your hardware drivers are good enough not to induce extra latency themselves. As this is probably not true, your best bet is to run all non-critical drivers pinned to a single core and all the realtime tasks and drivers pinned to different cores. And even then the question will be whether there are some yet unknown bugs in the PREEMPT_RT kernel. There’s a reason why those patches are not yet part of the mainline kernel.

Sometimes even an RTOS is not realtime enough. I would give up a little latency if I could gain consistency in that latency. Jitter kills in my application. Because of this I can’t use any of the ESP32 microcontrollers, because the ESP-IDF causes too much jitter. Here is my project that demonstrates this. I debugged in the same way as in the article. https://github.com/FL0WL0W/ESP32InterruptExample

It’s all down to how long your ‘defined time’ is… In most cases it’s way way more than long enough for the massively multicore, high speed RAM and impressive clock speeds of today to just set the task priority up in the scheduler. Your nuclear reactor really should be in the several-minutes-to-react camp – you don’t design such things that close to the ragged edge of major failure. Heck, F1 doesn’t even do that anymore, now that engines have to last way way more than just one race…