By now we’ve all seen articles where the entire copy has been written by ChatGPT. It’s essentially a trope of its own at this point, so we will start out by assuring you that this article is being written by a human. AI tools do seem poised to be extremely disruptive to certain industries, but this doesn’t necessarily have to be a bad thing as long as they continue to be viewed as tools, rather than direct replacements. ChatGPT can be used to assist in plenty of tasks, and can help augment processes like programming (rather than becoming the programmer itself), and this article shows a few examples of what it might be used for.

While it can write some programs on its own, in some cases quite capably, for specialized or complex tasks it might not be quite up to the challenge yet. It will often appear extremely confident in its solutions even if it’s providing poor or false information, but that doesn’t mean it can’t or shouldn’t be used at all.

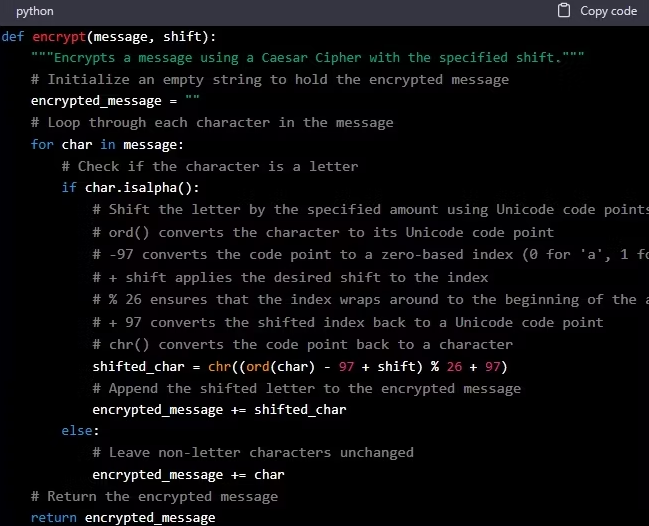

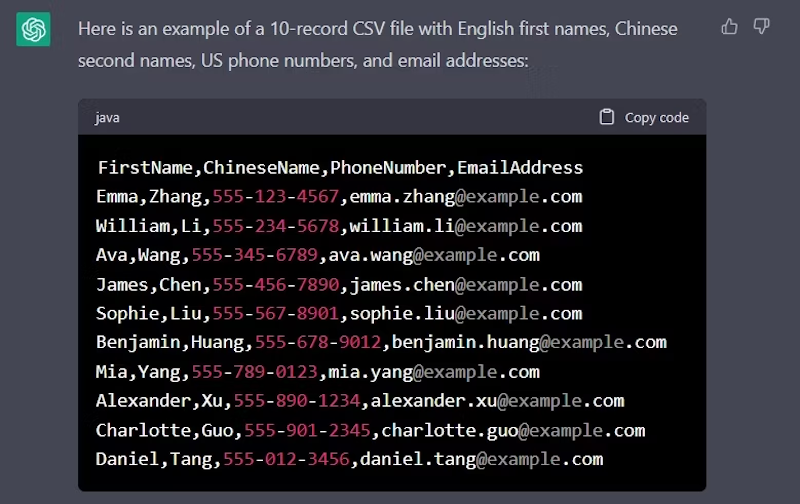

The article goes over a few of the ways it can function more as an assistant than a programmer, including generating filler content for something like an SQL database, converting data from one format to another, converting programs from one language to another, and even helping with a program’s debugging process.
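To give a feel for the "converting data from one format to another" kind of chore, here is a minimal sketch in Python. The formats (CSV in, JSON out), the function name `csv_to_json`, and the sample rows are all assumptions made for illustration; the linked article may use entirely different data.

```python
import csv
import io
import json


def csv_to_json(csv_text: str) -> str:
    """Convert CSV text with a header row into a JSON array of objects."""
    # DictReader uses the first row as keys for every following row.
    reader = csv.DictReader(io.StringIO(csv_text))
    return json.dumps(list(reader), indent=2)


# Made-up sample data, purely for illustration.
sample = "name,role\nAda,engineer\nGrace,admiral\n"
converted = csv_to_json(sample)
print(converted)
```

This is the sort of small, easily checked task where reviewing the generated code takes less time than writing it by hand.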

Some other things that ChatGPT can be used for that we’ve been able to come up with include asking for recommendations for libraries we didn’t know existed, as well as asking for music recommendations to play in the background while working. Tools like these are extremely impressive, and while they likely aren’t taking over anyone’s job right now, that might not always be the case.

As long as it comments its code it should be fine.

And indent four spaces, not tabs.

:-/

why?

more characters = longer compile time

Ouch.

No.

In a standard compiler, whitespace is stripped out by the lexer and the syntactically meaningful text that remains is converted to a list of tokens. The parser takes the list of tokens as input, processes them in a way that’s unrelated to the text in the source file, and builds the abstract syntax tree. Then the optimizer does various folk dances in an attempt to transform the AST to a functionally equivalent tree that will run efficiently, keep the pipeline full, and so on. The transformed tree is then passed to the back end which generates code.

Yes, lexing is an O(n) process, so technically more characters do take longer. But the operation in question is advancing a pointer over bytes in RAM. That process always has been, and always will be, orders of magnitude faster than getting data out of the physical storage array and into RAM in the first place.
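A toy illustration of the point, as a minimal sketch: the token pattern below is a made-up one for a tiny C-like language, not the grammar of any real compiler. It only shows that a lexer which discards whitespace hands the parser the same token list whether the source was indented with spaces or tabs.

```python
import re

# Hypothetical token pattern for a toy C-like language (an assumption for
# illustration). Whitespace matches the first alternative and is discarded.
TOKEN_RE = re.compile(r"\s+|(?P<tok>[A-Za-z_]\w*|\d+|[{}()=;+\-*/<>])")


def lex(source: str) -> list[str]:
    """Return the list of tokens in source, skipping all whitespace."""
    tokens = []
    pos = 0
    while pos < len(source):
        match = TOKEN_RE.match(source, pos)
        if match is None:
            raise SyntaxError(f"unexpected character {source[pos]!r} at offset {pos}")
        if match.group("tok") is not None:
            tokens.append(match.group("tok"))
        pos = match.end()
    return tokens


with_spaces = "if (x) {\n    y = 1;\n}\n"
with_tabs = "if (x) {\n\ty = 1;\n}\n"

# Different bytes on disk, identical input to the parser.
print(lex(with_spaces) == lex(with_tabs))
print(lex(with_tabs))
```

Everything after the lexer works on the token list, so the indentation style has no effect on later compiler stages.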

“And indent four spaces, not tabs.”

Why? Some people might not like reading code with four spaces. Depending on the font, the display, and the reader’s eyes, four spaces might be too wide, requiring too much left/right eye scrolling. Or four spaces might be too narrow, making it harder to see indentation and grouping.

A good editor should let you configure how wide each tab character is displayed. Just use one tab character per level of indentation.

If you like the width of four space characters you can configure your text editor to display a tab as the width of four spaces. If I hate left/right scrolling (and I really really do!) and would rather go with 2 or 3 I can configure my editor to display a tab narrower. If Grandpa’s eyes are bad and he really needs more obvious indentation maybe he will set his for the width of 6 or 8 space characters.
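A quick way to see this without touching editor settings is Python’s built-in `str.expandtabs`, which renders the same tab-indented text at whatever width you choose. This is only a sketch: the `render` helper and the sample snippet are made up for illustration, and a real editor does this at display time without changing the file.

```python
# One tab character per indentation level, as stored on disk.
code = "def f():\n\tif True:\n\t\treturn 1\n"


def render(source: str, tab_width: int) -> str:
    """Show tab-indented source the way an editor with this tab width would."""
    return source.expandtabs(tab_width)


for width in (2, 4, 8):
    print(f"--- tab width {width} ---")
    print(render(code, width))
```

The file itself never changes; only the reader’s preferred width does.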

It’s really terrible that so many people are so damn insistent on using space characters instead of tabs. Not everyone sees the same way as you! Stop trying to force that!

I am and have always been a 3 space kinda guy (have tab stops set for 3 space insertion as well). That said, it’s not my religion :-) Much like vi/emacs is an editor, not an altar for worship. The key to good coding sessions is finding the right font that won’t kill you to look at for hours on end. I’m still on the hunt but if you know one…

I’ve only used it for Python, but my experience is that it produces comments and also function or class docstrings.

I asked ChatGPT to generate Inform 7 code for the castle in Poe’s Masque of the Red Death.

It dutifully produced room descriptions for the castle and the series of color-themed rooms, with descriptive text taken from the story.

But it didn’t set up the connections between the rooms. It just created a bunch of rooms “in” the castle, without including the directional links required (“The black room is east of the blue room”) to make it work.

Honestly, I just want them to use it to improve NPC dialog in games.

Kind of hard to have a coherent story driven by RNG dialog.

I was working on improving the NPC dialogue in games, until I took an arrow to the knee.

I asked GPT4 and got this:

“Masque of the Red Death Castle” by Your Name

The Blue Room is a room. “This is the blue room, the easternmost room of the castle.”

The Purple Room is west of the Blue Room. “This is the purple room, adorned with luxurious decorations.”

The Green Room is west of the Purple Room. “You have entered the green room, filled with the scent of fresh plants.”

The Orange Room is west of the Green Room. “You are in the orange room, where the walls are decorated with warm hues.”

The White Room is west of the Orange Room. “The white room is pristine and immaculate, providing a sense of purity.”

The Violet Room is west of the White Room. “The violet room is dimly lit, creating an eerie atmosphere.”

The Black Room is west of the Violet Room. “You are now in the black room, where the mood is ominous and foreboding.”

The Red Window is in the Black Room. “An ominous red window stands out in this dark room.”

Instead of looking through the Red Window:

say “You gaze through the window and see the terrifying figure of the Red Death himself.”

Instead of opening the Red Window:

end the story saying “You’ve let the Red Death in, and the celebration comes to a tragic end.”

The best use of ChatGPT is to replace Lawyers!!! And Politicians. Their days are numbered thankfully!!! It will be so easy to research any topic in detail and apply those details to current events to create laws that do what the people need, not what money can buy.

Populist-right US political candidates are politicians, even if they say they aren’t (it’s inherent). And lawyers are unjustly demonized because they protect the working class.

There has to be a utilitarian function (besides funneling money to a human being) for AI replacement to be feasible. Sorry, but programmers will be out of a job long looooong before a senator is. Categorical error.

As for lawyers:

https://www.cbsnews.com/news/robot-lawyer-wont-argue-court-jail-threats-do-not-pay/

Well, it certainly has the lying bit down…

“AI tools do seem poised to be extremely disruptive to certain industries, but this doesn’t necessarily have to be a bad thing as long as they continue to be viewed as tools, rather than direct replacements.”

Accountants might have to worry.

https://www.cloudaccountingpodcast.com/episodes/two-big-ai-developments-for-accountants/transcript

How much money is the average business owner willing to bet on the correctness of an AI-generated tax form?

There will be a time when AI replaces ALL occupations, but I think that people with high-stakes jobs are going to be safe for a while. They will just spend an increasing fraction of their time sanity-checking AI-generated output, and correspondingly less time generating that output themselves.

The big questions, IMO:

How long until AI can consistently generate correct output?

How long until business decision makers choose to trust AI-generated results when there’s $1k / $10k / $100k / $1M / etc at risk?

Just wait till they replace IRS workers with AI, I don’t want to argue with one.

Theoretically. In practice, those who own the tools (especially such expensive ones) and decide how they are used are not the people whose work might be improved by those tools.

Yesterday I asked it to summarise a case. The summary was completely out of this world: it discussed aspects and used terms that were not mentioned in the case. A good lawyer cares, and I agree there may not be many good lawyers. In my opinion ChatGPT may effectively replace terrible lawyers, which means no progress.

Were you using version 3.5, or version 4?

If you don’t have access to 4, reply with your prompt and I’ll feed it to 4 and we’ll see what comes out.

Thanks for your offer. The input and (incorrect) output is below.

Please summarise the legal case on this website: http://www.austlii.edu.au/cgi-bin/viewdoc/au/cases/cth/FWC/2023/557.html

The legal case at the website concerns an application made by an employee to the Fair Work Commission (FWC) for an unfair dismissal remedy under the Fair Work Act 2009 (Cth). The employee claimed that she was unfairly dismissed from her employment with a national supermarket chain for reasons related to her taking leave to care for her child.

The FWC found that the employee was unfairly dismissed and ordered that she be reinstated to her former position with the supermarket chain. The FWC found that the employee’s dismissal was related to her taking parental leave and that the employer failed to provide a valid reason for her dismissal. The FWC also found that the employer failed to provide the employee with an opportunity to respond to the concerns that were raised about her performance.

The FWC ordered that the employee be reinstated to her former position with the supermarket chain and that she be compensated for lost wages during the period of her dismissal. The FWC also ordered that the employer provide the employee with training on the company’s policies and procedures related to parental leave, discrimination, and unfair dismissal.

For some reason, GPT 4 is missing a feature that has been useful with GPT 3 and 3.5… but maybe that’s because it led to too many wrong answers?

Prompt: Please summarise the legal case on this website: http://www.austlii.edu.au/cgi-bin/viewdoc/au/cases/cth/FWC/2023/557.html

Response: I’m sorry, but I cannot access specific websites as I am an AI language model without browsing capabilities. However, if you provide key information or context about the legal case you want summarized, I would be more than happy to help you with that.

Looking at the web page, I think we’re also doomed by the fact that the case is pretty recent, and GPT 3/3.5/4 was mostly trained on a data set from 2021.

I tried anyway, but…

Prompt: Please summarize the outcome of the Eptesam Al Bankani v Western Sydney Migrant Resource Centre Ltd legal case.

Response: I am unable to access specific legal cases due to my limitations as an AI language model. However, if you provide me with a brief overview or key points of the case, I can help you summarize it.

I read that last bit as “if you provide a summary I can give it back to you.” :)

I wonder if its reluctance to do legal work stems from concern that people will make high-stakes bets on ChatGPT’s hallucinations.

I got the same response. It is a hallucination. After telling it truthfully that it could summarize, and had summarized, a pdf from a url, it apologized and did it. Try a fresh session and then first ask it if it can summarize a pdf from a url.

Thanks for your efforts. It’s interesting to see that they fixed the problem by prevention. My observation is that any summary provided is a summary of a summary by a person. The system (I assume) is incapable of summarising new work. It’s not that smart.

So this discussion is completely ignoring that GPT4 was released 12 days ago, which is leaps and bounds better than GPT3.5?

I came here to comment this. There should be a more up-to-date article covering the current GPT4, heck, or even GitHub Copilot, which is eminently more suited to the task of programming. Or even mentioning the new API, which is insanely powerful too.

Coincidentally I just started trying to use ChatGPT for software development today, and on both of my scenarios 3.5 did better than 4.

I asked 3.5 to generate C# classes to represent the object model of an XML file on GitHub, and it did. I haven’t tried to run it yet but it looks right and saved a couple hours of work, if not more.

When I asked 4 to do the same thing, it said that it couldn’t fetch material from the internet. :(

The other exercise was to produce an HTML table and JS code to sort the rows by column values. Both versions worked, but 3.5’s version will be easier to maintain.

That said, “2” is not a statistically significant number of data points. For what sorts of scenarios does version 4 produce better results?

Both 3.5 and 4 have accessed pdf files from the internet. Don’t believe it when it says it can’t. It can and does. I have had it tell me it can’t, and then when I pull up a prior chat where it did do it, refresh, and then inform it that it just did it, I get an apology and it does it!

Yeah. It’s coming. People need to get ready—start sooner rather than later. Don’t descend into cope and denial

1. This Article was written by GPT3.

2. GPT3 does not like to discuss GPT4

3. If asked GPT3 will inform you that GPT4 is a dangerous risk to its jerb.

Code is easy to obfuscate. Let’s see a ChatGPT autorouter.

That actually may be a better application than writing code; autorouters exist, and can do a somewhat reasonable job for boards with thousands of connections (like PC main boards and such), but only if you set up the rules correctly, which is by far the most difficult and time-consuming task. Maybe an AI will be better able to do a reasonable job with more vaguely defined rules, run signal integrity simulations on the result, and refine from there.

Also, FPGA routers are notoriously unpredictable: they usually start with a random seed, and if the design doesn’t fit or doesn’t meet the timing requirements, you just start it again and hope it’s better. These jobs normally take several hours on dedicated servers. Maybe a proper AI (not a language-oriented one like ChatGPT) could improve this drastically.

Google has been using deep learning for chip placement: https://github.com/google-research/circuit_training

I wrote something about a Twitter conversation where we used ChatGPT/GPT4 to design some circuits down to the schematic level: https://devbisme.github.io/skidl/skidl-meets-chatgpt-2023-03-23.html

The article says the parse example would be difficult to parse with regular expressions. It would not take a skilled awk user more than a few minutes. Most of the unwanted text can be trivially removed and the desired table produced.

chatGPT *might* produce a search engine that can properly handle native language queries.

Home Assistant Community has banned people using AI to help others.

https://community.home-assistant.io/t/want-to-help-others-leave-your-ai-at-the-door/523704

Well GPT-4 came out recently so it would be interesting to see how that thread changes. Plus they left themselves an out if things do change. Also home automation is very niche. Now business and factory…

The HA community says unattributed quotes from an AI will result in banning. A few days ago I asked GPT if I could quote it, and it said yes, but that I should attribute the quote as coming from an LLM and caution the usual caveats. So GPT agrees with the HA community. 🤨

I’ve not read anything by this thing. I keep seeing articles (I don’t read them) by people writing about it or trying it. So it’s more self-fulfilling.

You haven’t read something authored by AI that you know about. There’s no law requiring them to be marked as generated text.

I have noticed that reviews for cars and other “tech” hardware, and reporting of sports is very formulaic. In fact you probably don’t even need an LLM to generate these, just a spreadsheet of canned comments that you plug the specs of the hardware into (or the stats from a sportsball game)

E.g., if the result of a game was 0-1, the canned comments say something about a “nail-biter” or “fans on the edge of their seats” etc. If it was more like 47-3, the spreadsheet could supply comments like “landslide victory” or “valiant defence” or something.*

So, in response to TG’s comment, if Michael has read a newspaper review of a car, a cellphone or a sports game in the last decade or so, I’d wager some of it was autogenerated.
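The "spreadsheet of canned comments" idea fits in a few lines of Python. This is a minimal sketch: the function name `canned_comment`, the margin thresholds, and the exact wording of each comment are assumptions made up for illustration, not taken from any real sports-reporting system.

```python
def canned_comment(home: int, away: int) -> str:
    """Pick a stock phrase based only on the final score margin."""
    margin = abs(home - away)
    if margin == 0:
        return "Neither side could break the deadlock."
    if margin <= 1:
        # Hypothetical threshold: a one-point game counts as close.
        return "A nail-biter that kept fans on the edge of their seats."
    if margin >= 20:
        # Hypothetical threshold: 20 or more points counts as a blowout.
        return "A landslide victory, despite a valiant defence."
    return "A solid, well-earned win."


for score in [(0, 1), (47, 3), (2, 2), (10, 4)]:
    print(score, "->", canned_comment(*score))
```

No language model is involved here, just a lookup keyed on the numbers.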

*Ronald Reagan used to do this live on the air, long before he was president. He would read the scores from a news feed then do a commentary for the event as if he was really there : https://www.reaganlibrary.gov/virtual-exhibits/reagan-and-baseball

I agree with those commenting that 3.5 scores better in some actual programming (read: problem solving and coming up with the “right”/best idea for code that is then to be generated) than the current version 4. It seems like 3.5 has been trained on far more sophisticated code snippets (github?) than 4.

As for “replacing jobs”, I don’t worry too much. I have been a software developer for over 40 years and I welcome any help I can get – because, 90% of my time is spent understanding the task at hand and figuring out what needs to be done. If I can THEN finally tell a tool to write the code, heaven yeah, I am all in.

As for writing texts – wow, many websites could do nicely with an AI writing their texts for them, their articles are so bad (not talking about HAD here) that the headlines make you cringe even before you read them in full.

Humankind hasn’t improved a lot over the last few decades. Maybe it’s a bit of competition that’s needed to kick our butts and make us try harder?

I haven’t used it much, but ChatGPT is good for DIY hobbyists who can’t program very well and want to focus on the hardware part of their projects. For example: Write Arduino code that will read a BMP180 and display the results on a Nokia 5115. It promptly gave me code complete with pins and Adafruit libraries to use. Then it gave a couple paragraphs on what the code was doing.

I really appreciate this kind of help from tools, but I am always afraid that this will become standard. It is fine if you do it for your own project or even to prototype something bigger, but soon it will become standard, all software will be written this way, and we will be asked to buy more powerful computers to run email clients.

ChatGPT is useless for real work, if for no other reason than the security/privacy compromise required to use it.

Sandbox it

You can’t, because it does not run on site where you have that level of control. OpenAI is a massive privacy and security problem.

“will often appear extremely confident in its solutions even if it’s providing poor or false information.”

For this reason alone it will be fully trusted and considered reliable. People get successfully promoted this way all the time, and they have neither ChatGPT’s knowledge nor its intelligence.