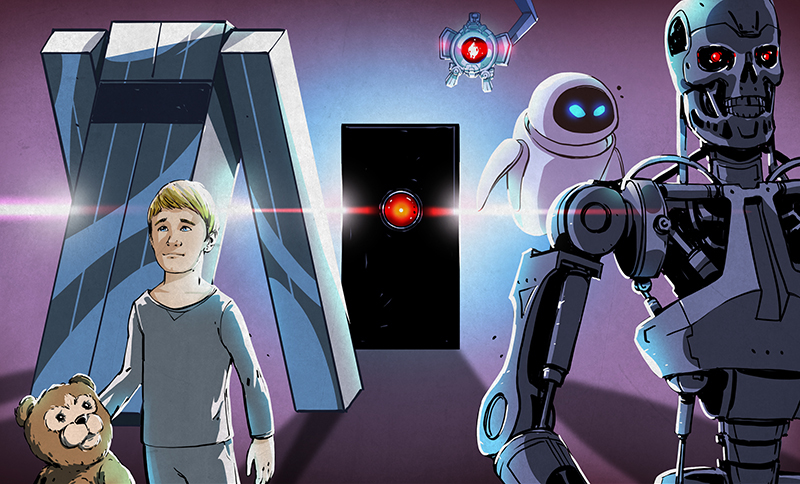

The Propellerheads released a song in 1998 entitled “History Repeating.” If you don’t know it, the lyrics include: “They say the next big thing is here. That the revolution’s near. But to me, it seems quite clear. That it’s all just a little bit of history repeating.” The next big thing today seems to be the AI chatbots. We’ve heard every opinion from the “revolutionize everything” to “destroy everything” camp. But, really, isn’t it a bit of history repeating itself? We get new tech. Some oversell it. Some fear it. Then, in the end, it becomes part of the ordinary landscape and seems unremarkable in the light of the new next big thing. Dynamite, the steam engine, cars, TV, and the Internet were all predicted to “ruin everything” at some point in the past.

History really does repeat itself. After all, when X-rays were discovered, they were claimed to cure pneumonia and other infections, along with other miracle cures. Those didn’t pan out, but we still use them for things they are good at. Calculators were going to ruin math classes. There are plenty of other examples.

This came to mind because a recent post from ACM has the contrary view that chatbots aren’t able to help real programmers. We’ve also seen that, maybe, they can, in limited ways. We suspect it is like getting a new larger monitor. At first, it seems huge. But in a week, it is just the normal monitor, and your old one, which had been perfectly adequate, seems tiny.

But we think there’s a larger point here. Maybe the chatbots will help programmers. Maybe they won’t. But clearly, programmers want some kind of help. We just aren’t sure what kind of help it is. Do we really want Copilot to write our code for us? Do we want to ask Bard or ChatGPT/Bing what is the best way to balance a B-tree? Asking AI to do static code analysis seems to work pretty well.

So maybe your path to fame and maybe even riches is to figure out (AI-based or not) what people actually want in an automated programming assistant and build that. The home computer idea languished until someone figured out what people wanted to do with them. Video cassettes didn’t make it into the home until companies figured out what people wanted most to watch on them.

How much and what kind of help do you want when you program? Or design a circuit or PCB? Or even a 3D model? Maybe AI isn’t going to take your job; it will just make it easier. We doubt, though, that it can much improve on Dame Shirley Bassey’s history lesson.

Programming is easy. Programming well is hard. AI is self-reinforcing mediocrity. We’re all doomed.

GPT is just one model, though, and a generalized one at that. You’re not going to get the best results if your training data is coming mostly from mediocre submissions to GitHub. There are loads of alternatives in the works to remedy the shortcomings, and you’re always welcome to download free models like Alpaca and fine-tune them with your own LoRAs.

The point right now is not to replace programmers, but to speed up the workflows of existing programmers. Copilot is not going to automatically write and git push a bunch of bad code. You still have to select a suggestion, Tab it in, proofread it, and push the commits yourself.

The thing is, programming isn’t hard at all. Doing things right and without bugs is hard.

So, let’s say I have a huge code base like the Linux kernel or Chrome. And I ask AI to do something for me there. I get a diff. Now I need to review it and make sure AI didn’t fuck it up.

At this point I am probably better off doing this shit myself.

Another example, 95% of my work is fixing bugs. ChatGPT or Bard can’t do shit about that.

All it is moderately useful for is to give you a code snippet about something that you don’t understand. Say, I asked Bard to give me an example of how to use PID on Arduino and it looked reasonable.

But when you ask for something more complex, you get plausible-looking code that is completely broken and not working. Good to impress people who don’t know shit about programming, useless for actual work.

If AI improves I am sure it will give me code that looks even better and it will have more subtle and hard to find catastrophic bugs.

Programming isn’t hard at all. I’m just dumb.

In theory:

Programming isn’t hard at all.

Figuring out exactly what the problem is, is hard.

Once that’s done, a monkey could do the coding.

In practice:

Programming is a bitch.

The people with the problem have no clue. You have to interrogate them to get tiny parts of the problem. Then attempt to fit together all the little bits of problem understanding into a big picture to build on.

At least one user ‘doing the needful’…since way before our incompetent brahmin brothers got here in force.

Nobody will understand the whole problem when you are forced to start laying out entities etc.

Nobody will understand the whole problem when you are forced to start coding.

‘They’ will tell you to ‘get agile’ when they usually mean ‘waste time on scrum rituals’.

In the end you will hate your clients/users.

They deserve to _suffer_.

The mark of an experienced programmer.

@HaHa

I agree.

But you also forget that everyone will tell you that you’re a bad communicator because you communicate too much. While if you have a really complex problem that takes a while to figure out, and you know from experience that they don’t even have the patience to hear you out, you’ll be told that you don’t communicate enough. If you then try to explain it anyway, you’ll be told that you communicate too much again. If you then just drop all the communication about the problem, and start explaining in terms of *when* it will be fixed, you’ll be told that you’re being condescending and an asshole developer. And be asked for a precise time and date when you will have it finished.

Can’t win that, never.

Bottom line is: many people are now looking at ChatGPT as a way to bypass those ‘asshole’ software developers. Thinking the task will be done quicker if they had developers that just do what they tell them.

But basically they have no clue how much developers protect them against themselves. And they will find out to their own detriment.

Software development is not about writing lines of code. That’s the easy part.

I wish with all my heart that software development would only be about writing lines of code. But the last time software development was only about writing lines of code for me, was in University.

If people start using ChatGPT en masse instead of actual software developers, we will see a decline in work for software developers. But not even 3 years later, the demand will go up *sharply*. Because now they have a product that makes them money, but their software is actually costing them money. And they need real and experienced software developers ASAP.

But of course, by that time, all software developers will have switched to doing consultancy work. And they’re all going to safeguard their pensions. Meaning: they will pay for their mistakes.

I don’t really feel too concerned. Maybe I’ll get a few months of holiday out of it. :P

ChatGPT is nothing more than rapid prototyping.

ChatGPT isn’t a prototyping tool. It’s a code repository obfuscator and consolidator.

ChatGPT just scraped the internet.

Its answers are as good as the average answer on stackoverflow (not very) because that (and GitHub etc.) is where it copied them from. I’d suggest only scraping the top rated answers, but apparently they like to return the wrong ones too.

ChatGPT will never be better than the average code available for scraping. Which is to say, complete shit/Tata/Infosys/EDS.

It’s at a local maximum. Getting better at coding will require doing a complete restart and throwing away the statistical word salad approach.

AI might be better for systems architecture than programming.

https://www.interviewbit.com/blog/system-architecture/

Can’t wait until there is a plugin featuring Visio integration for ChatGPT, I bet Microsoft is working on it right now.

As for programming, if the model is a level 7 programmer (with 10 being the best), everybody in the world up to level 7 (probably 95% of the population) can benefit from it by asking what they need at a certain stage of writing the program: initial program, improvement, extension of functionality, switching to or from the use of a library, explanation, changing all variables to a dog species theme, adding or removing comments. And they can do so in such a way (with the right prompt, which is essential) that the AI adapts the response to their level. Since GitHub is full of mediocre code (according to some comment), it means that we (mediocre programmers) are going to get a lot of use out of ChatGPT and its brothers/sisters.

At least if you know exactly what you want to happen, but you switch languages and forget what they have built in, they can probably remind you of the syntax and that you don’t need to write your own sort function.
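As a small illustration of that last point, here is roughly what the "you don't need to write your own sort" reminder looks like in Python. The names and ages are made up for the example; only `sorted()` and `list.sort()` are real built-ins.

```python
# Hypothetical data: (name, age) pairs invented for this example.
people = [("Ada", 36), ("Linus", 28), ("Grace", 45)]

# sorted() returns a new list; key= selects the field to sort on.
by_age = sorted(people, key=lambda person: person[1])

# list.sort() sorts in place; reverse=True gives descending order.
names = [name for name, _ in people]
names.sort(reverse=True)

print(by_age)
print(names)
```

Other languages have their own equivalents, which is exactly the kind of detail that is easy to forget when switching.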

I’ve been working with computers my entire career. Through this I have only learned to hate them. Every step of how we build and program computers is slow, tedious, error-prone, and ripe for automation.

I want programming to be as easy as Scratch. I don’t want to continually have to learn new languages and infrastructure as technology changes, I just want to make applications. I can’t count how much time I’ve wasted learning a language only to have a business leader suddenly decree that our teams switch to another. The basic programming concepts have not changed, but for some reason I have to continually keep learning a different way of writing them. It’s bonkers.

That’s exactly why Visual Basic 6 was so beloved and a great success.

It was a fine RAD tool that allowed all types of users to unfold their creativity and visions.

All criticism aside, it was fine prototyping software, really.

It’s unbelievable how time makes you forget how bad some things were. As someone who wasted a bit of time in VB6, and its unholy sibling, Visual Basic for Applications, I can tell you: they were trash. As was COM, which was in fact the VB object model. The only reason we got so many years of this stuff is because Bill Gates was so fond of his own Basic heritage.

There were companies that sold modules for various tasks.

Things are worse now: Javascript

Anywhere outside the browser.

Who does that? How did they stay alive this long? Seems like they would drown the first time it rained and they looked up at the sky with gaping mouths.

I’m not scared of programming AI.

I’m scared of marketing AI as seen in social media. We’ve trained machines to psychoanalyze and manipulate human beings – it’s terrifying.

You have AIs stealing Kias, distributing child abuse material, and inciting riots at the US Capitol.

And of course the profiteers hide behind it – “oh it’s just the algorithm. It just optimizes for engagement!”

I just want my AI to do something other than manipulate me.

Didn’t think to make this editable – to be clear, an AI that blindly tries to feed people things that are as engaging as possible is absolutely an exploitative, dangerous machine.

“I want programming to be as easy as Scratch.”

It’s been over 200 years of industrial economy. You’d think after such a long time we’d figure out a way to build stuff easily. However, machine design is still hard, still requires knowledge and experience. There are so many intricacies involved in getting things right that I doubt engineers (including software engineers) will be out of work anytime soon.

For an excellent example, see this article: “Story of one half-axle”

https://www-konstrukcjeinzynierskie-pl.translate.goog/wybor-redakcji/31-zyczenie-redakcji/wybor-redakcji-2010/113-historia-jednej-poosi?showall=1&_x_tr_sl=auto&_x_tr_tl=en&_x_tr_hl=pl&_x_tr_pto=wapp

The oldest engineering field (civil) is the most cookbook, having been practiced for millennia.

But even the guys that do nothing but repeatedly design the same old drainage (just for example) have to _understand_ the cookbook and its limits.

That was a wonderful read, thanks for the link!

I do want to think for myself.

An assistant program which makes recommendations is something else, though.

A bit like the online help in Visual Studio 6, which highlights commands and explains syntax.

Personally, I think the danger isn’t AI becoming better at something, but users/devs losing understanding of a given matter.

Education is priceless. Imagination and curiosity, too. As long as we understand at least the principles of our technology, we’re okay.

(Though it’s highly preferable that we stay better at this than just that.)

What I want from a programming assistant: something to make me nice coffee, do the housework, wash my clothes, cook me food etc while I do the interesting stuff. That would assist my programming greatly.

I don’t want one, and certainly not in the present state of so-called artificial intelligence.

Writing code for a specific method isn’t a problem – as long as you can find the documentation for the language and libraries you are using. No AI needed.

Writing a bunch of methods that work together to accomplish a task isn’t a problem, as long as the task is defined. No AI needed.

Writing a large program that carries out many tasks simultaneously on the same data from multiple users gets hairy – and no AI at present can help with the systems design issues that come up.

Large systems with multiple data sources used in different ways by multiple users with multiple tasks and goals that all interact is where the real difficulties lie. Where multiple people and multiple data sources and multiple external systems all work together you get effects that you can’t readily predict, and your AI will be completely clueless because it doesn’t understand the systems. If you’ve let some automaton write the pieces of your system, then you will be completely clueless, too, when all the interactions of all the bits and pieces conspire to throw your system into total lockup.

The largest problems we’ve had where I work have all been systems problems, not problems with “write a method to do X.”

If you can’t handle “write a method to do X,” how are you going to build a system that handles X thousand different methods and concepts?

Even if you can find the documentation and write the code yourself for a specific method, the AI may be able to do it in a fraction of your time. Present AI is often not good enough, but we’re just at the start of this development, and progress is still rapid. Compare the current set of AI tools to what we had 2 papers ago.

Right. And the AI says use method Y of library X, only library X doesn’t have and never had a method Y.

Chatbots CHAT. They are not programmers and they do not understand the words they string together.

You’ve hit on something.

Chatbots will never replace programmers, but middle managers are already _done_.

As a part-time programmer, what I have enjoyed about ChatGPT is the instant boilerplate code without having to google what libraries exist that might possibly enable me to do what I need. I can get a small project off the ground pretty quick and skip the research and go right to development.

“I can get a small project off the ground pretty quick and skip the research and go right to development.”

With AI, that’s what is going to happen with most projects regardless of their size.

What do I want in a programming assistant? Its absence. If I don’t know what I’m doing better than machine learning trained on badly written code, I need to keep reading the code and thinking until I do. If you don’t understand why an assistant is telling you to do something, you’re an idiot if you go ahead and do it.

i dont even like using an ide. just give me my preferred text editor for the platform.

Yep. Good ol’ Notepad++ in Windoze, and Geany in Linux. The only IDE that worked very well was Delphi. I liked that IDE.

I, like above, don’t like instant boiler-plate as it may not be the ‘best’ way of approaching a problem. I don’t know about you guys, but I went to school and was introduced to many different ways of solving problems with different data structures and such. Eye opening. All part of earning a CS degree. So having a ‘mind-less’ machine suggest ‘solutions’ and the person ‘programming’ is clueless because he/she doesn’t have the CS background to ‘self-think’ solutions to a problem …. Well, I see a problem there :) . Don’t you? Dangerous.

The post above has chatbots nailed: “they do not understand the words they string together.” Which is the crux of the problem. Yet my fear is people will be swayed by the ‘results’, which all goes back to ‘who’ programmed the machine in the first place and what is ‘fed’ into the machine. Huge political and social consequences…. In my mind.

As an aside: 25 years since Decksandrumsandrockandroll?! Get off my lawn!

“Dynamite, the steam engine, cars, TV, and the Internet were all predicted to “ruin everything” at some point in the past.” And each of them DID ruin everything. If by “ruin” you mean make everything we knew obsolete, leaving us with no reliable map of the world we live in. I expect nothing less of AI. ChatGPT may just be figuring out what sequence of syllables comes next that will result in a positive response, but that is also how baby humans learn. Learning how sentences work comes first, then understanding, then application of that understanding to other situations. The belief that computers will never understand what they are doing, nor contribute any original idea, is naive.

You can not mention History Repeating without linking to their wonderful music video:

https://youtu.be/yzLT6_TQmq8

Obligatory programming content – strictly a hardware guy (started out with S-100 systems) but always loved the odd languages. FORTH & Lua. Loved VB6. Somewhat competent with C++

fig forth 8080 software technology led the way to compiler to binary, avoiding assembler, with C,?

IBM OS/360 FORTRAN compiler writers went from high-level to machine code in the ~1970s?

That is the last link in the letter — maybe you are reading text only?

I looked all through the article before posting the link.

Wondering if that last paragraph was not added a little later in an edit.

I do not believe the link was there when I first read it – looked and did not see…

Once the dust settles, I think improvements to static analysis and formal validation will be the *actual* innovations in this field. Sure, an AI can generate some boilerplate code. But it cannot tell you what the best approach to a problem would be.

Well part of the review process.

https://ai.googleblog.com/2023/05/resolving-code-review-comments-with-ml.html

Current AI can already do much more than generate boilerplate code. Granted, still full of errors, but once the dust settles, these errors will be minimized. Google is already experimenting by letting the AI actually execute the program it came up with. Put that in a feedback loop where it can figure out what went wrong, and then a) fix the error, b) update training material for next version.
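To make that feedback loop concrete, here is a minimal sketch of the idea, not a description of what Google actually does. `generate` is a hypothetical stand-in for a model call and `fake_model` is a toy that fails once and then succeeds; only the execute, capture the error, and retry structure is the point.

```python
import traceback
from typing import Callable, Optional, Tuple


def run_candidate(source: str) -> Optional[str]:
    """Run candidate code; return None on success, else the traceback text.

    Note: exec() gives no sandboxing. A real system would isolate this step.
    """
    try:
        exec(compile(source, "<candidate>", "exec"), {})
        return None
    except Exception:
        return traceback.format_exc()


def repair_loop(
    generate: Callable[[str, Optional[str]], str],
    prompt: str,
    max_rounds: int = 3,
) -> Tuple[Optional[str], int]:
    """Ask for code, run it, and feed any error back into the next request."""
    error: Optional[str] = None
    for round_no in range(1, max_rounds + 1):
        source = generate(prompt, error)
        error = run_candidate(source)
        if error is None:
            return source, round_no
    return None, max_rounds


def fake_model(prompt: str, error: Optional[str]) -> str:
    """Toy stand-in for a model: buggy first attempt, fixed once it sees an error."""
    if error is None:
        return "result = 1 / 0"
    return "result = 1 / 1"


source, rounds = repair_loop(fake_model, "divide one by something")
print(rounds, source)
```

Note that "it ran without raising" is a much weaker check than "it does what was asked", so a loop like this only catches the loud failures.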

I have tried using the different “AI” models to build a simple C++ program that has some “People” struct and manipulates the data in different ways: editing, printing, saving to disk, restoring... Something a junior university student would master in a couple of weeks. The models never get it right. Segfaults left and right. It’s pretty amazing that something so simple can be “so hard” after throwing a ton of money at training machines to “learn” this.

Out of 100 random people from the street, how many would do better?

0% .. but out of, let’s say, 50 C++ first year students.. who would do better? ALL OF THEM :D

The ability of AI to outperform median humans is already most of the battle won. Keep in mind that the AI was not optimized for programming, but rather trained with general knowledge. Within 5 years, the AI will outperform the median of the first year students, and that’s a pessimistic estimate.

I guess this is somewhat off topic, but I’d never heard that Propellerheads song. I immediately found it on Youtube. What a great tune. Thanks, Al, for mentioning it in the article.

The last thing I want is for some stochastic blackbox process to generate stackoverflow jumble code that will presumably go into production. (No, don’t be cute and say that’s how compilers work. This is different and you know it :)

What I really want is better, more contextual error debug output. Don’t just print out useless error messages into a log, tell me how the error that caused expensive downtime happened with respect to the codebase it stemmed from and the comments that define what that code is trying to accomplish at a high, conversational level.

So far the few times I’ve asked our new Oracles what a specific error means with respect to a function, I’ve gotten some absolute trash replies that just wasted my time when I could have been reading through formal documentation and learning more along the way.

My initial experiments with LLMs were disappointing – I got equal amounts of worthless hallucination and useful code. But things have improved noticeably over the last few months.

I experimented a bit with ChatGPT as a code generator to bootstrap a new project, and I’ve been pleasantly surprised. I had an idea way out of left field, asked it how to approach the part I know the least about (an unfamiliar file format), and it produced some sample code using a library that I didn’t know about. And that library actually exists, so this is promising.

I’m also using GitHub Copilot at work, and it occasionally surprises me with useful autocomplete suggestions. It’s obviously wrong half the time, but that doesn’t cost me anything, and when it’s right it saves some time. Overall, it’s moderately helpful, and I suspect it’s going to get better over time.

What do I hope for?

Parallel product & test development: I want to write unit tests, and watch it write code that satisfies those tests in real time in another text editor pane. Or, I want to write code, and watch it create or modify the tests in real time. Either way would be nice, and I’d probably adapt my habits to get the most out of it. But, being able to alternate on-the-fly would be ideal.

Turn docs into code: I want to point the assistant at the documentation for a protocol or a REST / GraphQL / whatever service, and stub out the API that I want, and have it generate code that implements that API for that protocol or service.

For example, at home I’d love to point it at a wire-format protocol document, stub out the API, and have it generate code. That low-level stuff, at the boundary of hardware and software, is just not my cup of tea, which is why I’ve been putting off what would otherwise be a fun project for a couple years now.

(On a related note, if anyone wants to get J1850 VPW working reliably on the Macchina M2 you’ll have my undying gratitude. The hardware allegedly supports it, and someone took a stab at it a while back, but there’s been no progress for some time. There’s also an Arduino library for the same protocol, but it’s not very reliable.)
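The tests-first half of that wish list can be sketched in a few lines. In this sketch the tests act as the spec, and the function below them is the sort of thing the assistant would be asked to fill in. `slugify` and its expected behavior are invented for illustration; they do not come from any real project.

```python
import unittest


class TestSlugify(unittest.TestCase):
    """Written first: these tests are the specification."""

    def test_lowercases_and_joins_words(self):
        self.assertEqual(slugify("Hello World"), "hello-world")

    def test_drops_punctuation(self):
        self.assertEqual(slugify("What's new?"), "whats-new")

    def test_collapses_whitespace(self):
        self.assertEqual(slugify("  a   b  "), "a-b")


def slugify(text: str) -> str:
    """Hypothetical implementation written to satisfy the tests above."""
    # Keep only letters, digits, and whitespace, then join words with hyphens.
    kept = "".join(ch for ch in text.lower() if ch.isalnum() or ch.isspace())
    return "-".join(kept.split())


# Run the suite programmatically so the result can be inspected.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(TestSlugify)
result = unittest.TextTestRunner(verbosity=0).run(suite)
print(result.wasSuccessful())
```

Going the other way (code first, tests generated) would use the same pieces in the opposite order.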

If I had a wish that would mainly be to have Microsoft out of that equation.

A close second would be that we stop calling language models “intelligent” just because… investor money.

Otherwise, an LLM could be very useful, especially as a fake news generator.

It would be nice if we could program a chatbot to coherently, convincingly, and endlessly re-re-re-re-explain the difference between software development and magic.

The biggest problem programmers deal with is the lack of well-defined problems. All we usually get is a list of a few key things the program should deliver if everything goes right. We have to find the other deliverables by trial and error, along with the key dependencies, failure conditions, and any fundamental assumptions of the problem domain. There are many different ways to solve the problem, each full of leaky abstractions that add implicit dependencies and success/failure conditions. There are an infinite number of similar deliverables that aren’t what we want. And let’s not forget that the deliverables we do want will evolve as we gain experience using the program in the problem space.

The idea of a tool that dips into that universe of alternatives and selects code that will meet our mostly-undefined needs isn’t technology. It’s magic. It’s a wish for something that knows what we want before we know it ourselves.

“Ah” says the hopeful wish-maker, “we just need a way to tell the tool what we want.”

Excellent systems for doing that already exist: they’re called ‘programming languages’. Bad programming aside, the source code for a program is probably the most efficient description of the problem we want to solve and the result we want the computer to deliver. That’s what Alan Perlis meant with:

‘When someone says “I want a programming language in which I need only say what I wish done,” give him a lollipop.’

We use iterative development cycles to build tools that are just good enough to help us learn what problem we need to solve next. The path of development tracks our own evolving knowledge of the problem domain, the problems we want to solve, and the deliverables we want.

We’ve had a not-infinite-but-suitably-large number of monkeys trying to go straight from ‘vague ideas’ to ‘deliverable ready to ship’ generating about 60% of the trade press for decades, and the problem of ‘solving a problem correctly before we actually know what the problem is’ remains unsolved.

Because it’s not a solvable problem.

I have found ChatGPT to be fantastically helpful. For a year I’ve tried to make a model rocket flight computer, with limited success. I’ve had to learn about electronics in general, how to solder, what a microcontroller is, how to code. Absolutely everything, from scratch. The most difficult thing was the coding. The difficulty gap between writing a program to flash an LED or read a temp sensor, and a program to use an MPU6050, a BMP180, and an SD card writer, seemed insurmountable.

Until ChatGPT, that is. I gave it my three working programs, which were examples. It combined them and produced code which worked perfectly. It even added a feature I wanted (timestamps on the card data).

To say I am impressed is a massive understatement. It made a dream possible. A daft dream (deciding out of nowhere to build and fly a rocket with a flight computer so I could pretend to be sat in the Apollo firing room. Before my fiftieth birthday.) But a dream nonetheless.

Good luck with your plan to be a federal felon.

You are building a guided missile. Ten years federal minimum. Same as building a machine gun. No warhead required, guidance is sufficient.

that is, i am afraid utter bollocks. but it’s been nice chatting.

Good luck.

Best to beat someone up on your first day.

like telling someone utter twaddle on their first day?

Eva Vlaardingerbroek.

https://i.pinimg.com/736x/15/3a/5a/153a5a9476c5e0730f8124f36aa4c897.jpg

“Calculators were going to ruin math classes.” Are you claiming they didn’t? Can you explain the increase in math morons if it wasn’t teaching kids how to be addicted to calculators? Many don’t seem to understand the concepts because they didn’t need to understand in order to push the buttons.

If it was just the calculator, they’d be able to function even if they were dependent on a calculator. But the ones who have trouble with math don’t know what to do with the calculator either beyond the basic functions. And the ones that can get pretty far in math but don’t understand what they’re doing aren’t new either. Without the calculator, they’d be the ones who can fill an entire page mechanically working through formulas without ever realizing they made a mistake, because they only understand how to follow a set of steps.

That said, at the lower levels of math a calculator is as much of a learning-destroying shortcut as a computer with google search would be at higher levels. It’s just that while a four-function could interfere with learning to multiply if you never went without it, and a scientific might interfere with learning about other operations, a graphing calculator can help visualize functions when solving equations. A slide rule has been argued to do some of the same things as the first two, though not quite so bad as you get a grasp of what’s happening at least as you manipulate it. The same has been said of an abacus, to be fair.

At higher levels, something with a solver can help once you’re ready to skip mechanically solving for things in favor of the next thing, and something programmable with a library of functions can be nice once you’re moving into either advanced enough theory or practical problems. (Eventually you’ll end up on the computer for a lot of things.) There the important part is really just knowing what you’re talking about and what you want to do rather than being able to actually perform every step from memory. At least in my opinion, I neither want nor need to do a Fourier transform or something manually as long as I have an idea what a function looks like going both ways, and what that means for a filter or whatever.