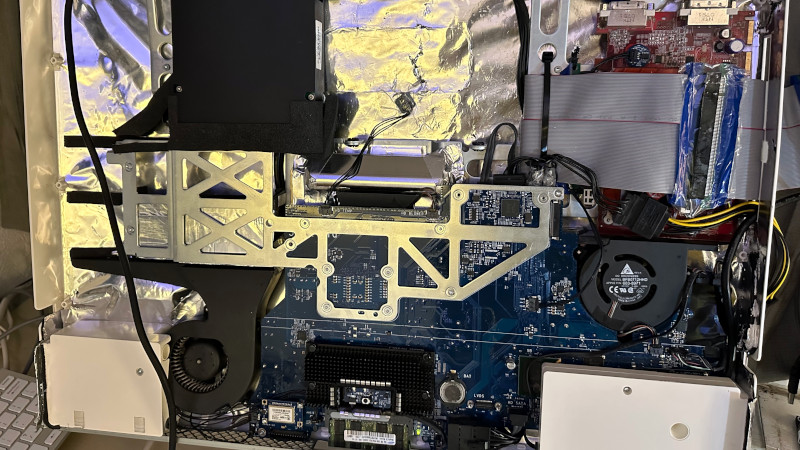

Over its long lifetime, the Apple iMac all-in-one computer has morphed from the early CRT models through those odd table-lamp machines into today’s beautiful sleek affairs. They look pretty, but is there anything that can be done to upgrade them? Maybe not today’s ones, but the models from the mid-2000s can be given some surprising new life. [LowEndMac] have featured a 2006 24″ model that’s received a much more powerful GPU, something we’d have thought to be impossible.

The iMacs from that era resemble a monitor with a slightly chunkier back, in which reside the guts of the computer. By then the company was producing machines with an x86 processor, and their internals share a lot of similarities with a laptop of the period. The card is a Mac Radeon model newer than anything the machine would ever have been used with, and it sits in a chain of mini PCIe to PCIe adapters. Even then it can’t drive the original screen, so a replacement panel and power supply are taken from another monitor and grafted into the iMac case. Along with a RAM and SSD upgrade, this makes it about the most upgraded a 2006 iMac could be.

Of course, another approach is to simply replace the whole lot with an Intel NUC.

About as ‘upgradable’ as the engine in a modern car.

Going to need to change the drivetrain, dashboard, steering wheel, pedals, wiring harness, brakes and all the computers too. Then you get to start looking for bugs.

Fool’s errand.

@Jenny List said: “Of course, another approach is to simply replace the whole lot with an Intel NUC.”

But… In July 2023, Intel announced that it would no longer develop NUC mainboards and matching mini PCs. They subsequently announced that NUC products will continue to be built by ASUS, under non-exclusive license.[1]

So a new-model NUC that is exclusive to Intel is no more. But you can obtain a new ASUS NUC [2], or a NUC made by anyone who chooses to build one, because as of July 2023 the Next Unit of Computing (NUC) concept is no longer exclusive to Intel.

1. Next Unit of Computing (NUC)

https://en.wikipedia.org/wiki/Next_Unit_of_Computing

2. ASUS NUC Mini PCs

https://www.asus.com/us/displays-desktops/nucs/all-series/

She did not say it had to be a *new* NUC. Still an upgrade. :)

About the iMac’s screen. I believe it’s simply an LVDS screen. You could have bought an HDMI to LVDS adapter, or a DisplayPort to LVDS adapter, and just used the original (quite good quality) screen. That would have saved a bunch of trouble and space.

I used an old Apple iMac 4K (3840×2160) panel with a DisplayPort to LVDS converter for a while. Wasn’t too pretty ;).

Would be nice if Apple just included a display input with those things that’d still work even if the built-in computer bites it, to prevent e-waste. Oh well, Apple’s gonna Apple.

> Oh well, Apple’s gonna Apple.

When was the last time you met a Samsung device which was upgradeable, and not destined for e-waste?

Asus? HTC? …?

Fill in the name of any company above, and you’ll get ‘XXX’s gonna XXX’.

Perhaps the problem isn’t the company, but the economic model. After all, they’re all capitalists, and capitalists are gonna capitalize.

In all fairness to capitalism, it was never meant to manage resources for future generations. Capitalism rewards exactly one thing: making as much money as possible. To that end, Apple, Samsung, etc. are all doing precisely what they’re supposed to do under capitalism: they ensure they can make the most money by locking consumers into a never-ending forced upgrade cycle, which necessitates devices becoming e-waste.

The question for us is: How do we ensure that recycling and device longevity are rewarded, rather than simply making money?

They used to! There were models that had that feature, before the 5k model.

I actually used the inner computer as a media server while using the monitor with a PC, and it worked quite nicely. The power draw wasn’t trivial though.

On the iMacs with Thunderbolt (not including this one), they did. It only works over Thunderbolt, so you have to use another Mac, but you could use them as standalone external displays.

Huh. I’m writing this on a 2014 27-inch iMac with 5K Retina display. It has a 4GHz quad-core i7 and 32GB of RAM. Bought used. I can’t see upgrading for a very long time. But I’m not a gamer.

I like this for the simplicity of the modifications performed. However, for getting the most out of your eGPU, dosdude1 has a video detailing the removal of the GPU from a 2011 17-inch MacBook Pro and then wiring it to an x8 PCIe slot at https://www.youtube.com/watch?v=nq2XmgtzLE0. That doesn’t change the fact that it’s exactly what bothers LowEndMac: it requires soldering of components, and that’s not for everyone.

I have a broken 2014 iMac waiting for its guts to be replaced though, and for the sake of simplicity I’ll take the NUC route. Or Framework. Or ZimaBoard. Or …

fwiw the 2009-2011 iMacs all have relatively normal MXM-A or MXM-B graphics cards, and thanks to OpenCore and some vBIOS modders you can run them with only a few caveats (such as breaking target display mode) depending on the GPU. I’ve got a Radeon Pro WX4150 4GB MXM-A card from an HP EliteBook (the WX4150 being essentially an RX470) in my mid-2010 27″ iMac, replacing the broken stock Radeon HD 6670. the only niggles were flashing a new vBIOS and installing a 0.3mm copper shim on the GPU die to improve heatsink contact.

there’s a very lengthy thread on the macrumors forum on this topic, and iirc some people are working out GPU upgrades on the 2006-2008 Intel iMacs like the one in the linked LowEndMac article.