[Jcparkyn] clearly had an interesting topic for their thesis project, and was conscientious enough to write up a chunk of it and release it into the wild. The project in question is a digital pen that uses some neat sensor fusion to combine the inputs from a pen-mounted gyro/accelerometer with data from an optical tracking system provided by an off-the-shelf webcam.

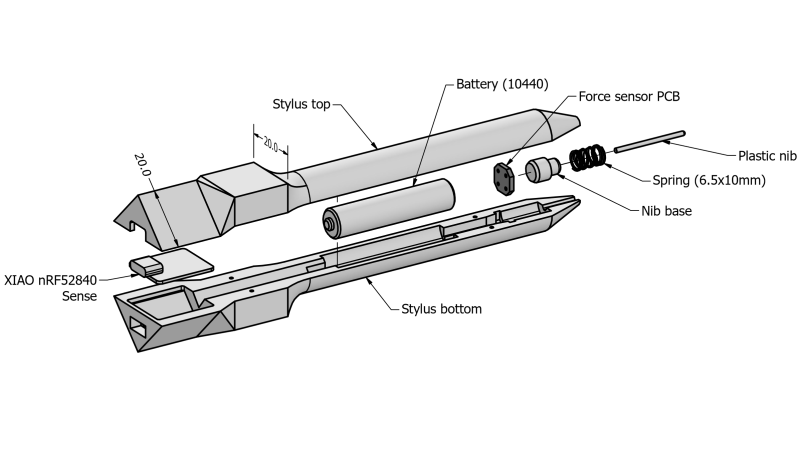

The result is a six-degrees-of-freedom (6DOF) tracking system, with the pen-mounted hardware tracking orientation and the webcam tracking the 3D position. The pen itself is quite neat, with an ALPS/Alpine HSFPAR003A load sensor measuring the contact pressure transmitted to it from the stylus tip. A Seeed XIAO nRF52840 Sense is on duty for Bluetooth and hosting the needed IMU. This handy little module deals with all the details needed for such a high-integration project and even manages the charging of a single 10440 lithium cell via a USB-C connector.

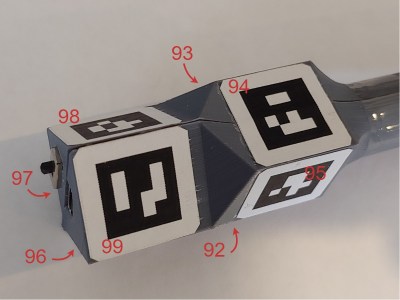

Positional tracking uses Visual Pose Estimation (VPE) assisted by ArUco markers mounted on the end of the stylus. A consumer-grade (i.e. uncalibrated) webcam is all that is required on the hardware side. The software uses the familiar OpenCV stack to compensate for the effects of the webcam's rolling shutter, followed by Perspective-n-Point (PnP) to estimate the pose from the corrected image stream. Finally, a coordinate space conversion is performed to determine the stylus tip position relative to the drawing surface.
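To make that last coordinate conversion step concrete, here is a minimal sketch in plain Python. It assumes PnP has already produced a rotation matrix and translation for the marker block in the camera frame, and that the drawing surface's pose in the camera frame is known. The function names, the tip offset, and the example numbers are all hypothetical and are not taken from the project's code.

```python
# Sketch only: rigid-body transforms from the marker frame to the surface frame.
# All names and values here are illustrative assumptions, not the project's API.

def mat_vec(m, v):
    """Multiply a 3x3 matrix (list of rows) by a 3-vector."""
    return [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]

def transpose(m):
    """Transpose a 3x3 matrix; for a rotation matrix this is its inverse."""
    return [[m[j][i] for j in range(3)] for i in range(3)]

def tip_in_camera(r_pen, t_pen, tip_offset):
    """Map the tip offset (given in the marker frame) into the camera frame."""
    rotated = mat_vec(r_pen, tip_offset)
    return [rotated[i] + t_pen[i] for i in range(3)]

def camera_to_surface(p_cam, r_surf, t_surf):
    """Express a camera-frame point in the drawing-surface frame."""
    diff = [p_cam[i] - t_surf[i] for i in range(3)]
    return mat_vec(transpose(r_surf), diff)

# Example: marker block rotated 90 degrees about the camera z axis,
# tip located 15 cm along the marker frame's x axis (made-up numbers).
r_pen = [[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]
t_pen = [0.1, 0.2, 0.5]
tip_offset = [0.15, 0.0, 0.0]

# Surface frame aligned with the camera, 0.5 m in front of it (also made up).
r_surf = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
t_surf = [0.0, 0.0, 0.5]

tip_cam = tip_in_camera(r_pen, t_pen, tip_offset)
tip_surf = camera_to_surface(tip_cam, r_surf, t_surf)
```

In this convention, the z component of `tip_surf` is the tip's height above the surface, which is the sort of quantity a drawing application would care about.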

The sensor fusion is taken care of with a Kalman filter, smoothed with the typical Rauch-Tung-Striebel (RTS) algorithm before being passed on to the final application. This process runs in Python using the NumPy module, as you would expect, but is accelerated using the Numba JIT compiler.
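For readers who haven't met the filter-then-smooth pattern, here is a deliberately tiny sketch: a scalar random-walk Kalman filter followed by an RTS backward pass, in plain Python. The project's real filter fuses IMU and camera data over a much larger state, so the model, the function names, and the noise values `q` and `r` below are illustrative assumptions only.

```python
# Sketch only: 1D Kalman filter plus Rauch-Tung-Striebel smoother.
# State model: x[k+1] = x[k] + noise (variance q); measurement z = x + noise (variance r).

def kalman_filter(zs, q, r, x0=0.0, p0=1.0):
    """Forward pass. Returns filtered and predicted means and variances."""
    xs_f, ps_f, xs_p, ps_p = [], [], [], []
    x, p = x0, p0
    for z in zs:
        # Predict: a random walk keeps the mean, but uncertainty grows by q.
        x_pred, p_pred = x, p + q
        # Update: blend prediction and measurement using the Kalman gain.
        gain = p_pred / (p_pred + r)
        x = x_pred + gain * (z - x_pred)
        p = (1.0 - gain) * p_pred
        xs_p.append(x_pred)
        ps_p.append(p_pred)
        xs_f.append(x)
        ps_f.append(p)
    return xs_f, ps_f, xs_p, ps_p

def rts_smooth(xs_f, ps_f, xs_p, ps_p):
    """Backward pass. Refines each estimate using the measurements after it."""
    xs_s, ps_s = list(xs_f), list(ps_f)
    for k in range(len(xs_f) - 2, -1, -1):
        c = ps_f[k] / ps_p[k + 1]  # smoother gain (state transition is 1)
        xs_s[k] = xs_f[k] + c * (xs_s[k + 1] - xs_p[k + 1])
        ps_s[k] = ps_f[k] + c * c * (ps_s[k + 1] - ps_p[k + 1])
    return xs_s, ps_s

# Made-up noisy readings of a value near 1.0.
zs = [1.0, 1.2, 0.9, 1.1, 1.0]
xs_f, ps_f, xs_p, ps_p = kalman_filter(zs, q=0.01, r=0.1)
xs_s, ps_s = rts_smooth(xs_f, ps_f, xs_p, ps_p)
```

The smoother needs samples from after the instant it is refining, so a live application would presumably apply it over a short trailing window and accept a little latency in exchange for cleaner strokes.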

Motion tracking is not news to us; we’ve seen many an implementation over the years, such as this one. But digital input pens? Why aren’t they more of a thing?

Thanks to [Oliver] for the tip!

This pen had some similar functionality (and a roller/encoder too) 7 or so years ago.

https://www.indiegogo.com/projects/01-world-s-first-dimensioning-instrument#/

(I actually bought one, and have never remembered that I have it when a suitable task came up)

23 years ago I had something called C-Pen. It used an optical sensor to convert writing into text, either storing it in its limited internal memory or sending it via RS-232. It could also scan bar codes. Due to the limited hardware it used Palm-style letter shapes. I think it was made from 1998 onwards, but I’m not really sure…

I got quite good at Graffiti on a string of Palm PDAs. I even have it installed on my Android phone, but aside from trying it once I’ve not used it.

I was going to say that the sensor fusion would be the tricky point, but the demo video shows that they already have that developed to an extremely impressive level (I do love the main.py that only points to app/app.py, gave me a laugh but I’m sure there’s good reason when considering rearchitecture for future development). There’s definitely a little room for improvement, especially for the pen orientation (it’s a little jittery for applications like 3D object manipulation, etc.), but the writing looks to already be in really great shape.