Let’s say your CAD workflow is starving for spatial awareness. Your fingers yearn to push, twist, and orbit, not just click. Enter the Nebula Mouse: a 6-DOF DIY marvel that blends 3D printing, magnets, and microcontroller wizardry into a handheld input device emulating the revered 3Dconnexion SpaceMouse, at a hacker price. It’s wireless, RGB-lit, powered by a chunky 1500 mAh cell, and fully configurable through standard apps. The catch? You print and build it yourself, with a little help from [DoTheDIY]’s design files.

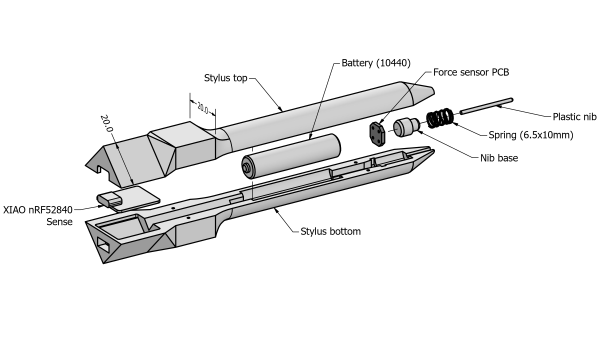

This isn’t some half-baked enclosure from Thingiverse. The Nebula’s internals are built with the kind of precision that has you filing plastic for hours just to get the weights to fit. Hall effect sensors track movement along all six axes, and a Seeed XIAO nRF52840 handles Bluetooth duty. It’s hefty (280 g), deliberate, and smartly designed: auto-wake, USB-C, even a diffused LED bezel for night-time geek cred. Just beware that screw lengths matter: put a 20 mm screw in the wrong hole and you’ll hear the soft crack of PCB grief. There’s no open firmware either; you get compiled code only, unlocked per build via Discord.
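
Since the Nebula’s firmware is closed, we can only guess at how it turns magnet displacement into cursor motion. The sketch below shows one common approach, under loudly stated assumptions: six Hall readings are compared against a rest snapshot, multiplied by a 6×6 calibration matrix to get three translations and three rotations, passed through a deadzone, and packed into two SpaceMouse-style HID reports (report 1 for translation, report 2 for rotation, each three little-endian int16 values). That report layout is the one many open DIY SpaceMouse clones reproduce; whether the Nebula uses it is unknown. The names `to_six_dof`, `hid_reports`, `K`, `rest`, the deadzone of 8, and the ±350 clamp are all hypothetical.

```python
import struct

def to_six_dof(raw, rest, K, deadzone=8):
    """Map six raw Hall readings to (tx, ty, tz, rx, ry, rz).

    raw, rest: six sensor readings, live and at rest (hypothetical layout).
    K: hypothetical 6x6 calibration matrix, one row per output axis,
       one column per sensor, e.g. fitted by least squares from known motions.
    """
    # Displacement of each sensor from its rest value.
    delta = [r - r0 for r, r0 in zip(raw, rest)]
    axes = []
    for row in K:
        v = sum(k * d for k, d in zip(row, delta))
        # Zero out small values so sensor drift at rest doesn't move the view.
        if abs(v) < deadzone:
            v = 0
        axes.append(v)
    return axes

def clamp(v, lo=-350, hi=350):
    """Round to an integer and limit to an assumed +/-350 axis range."""
    return max(lo, min(hi, int(round(v))))

def hid_reports(axes):
    """Pack six axes into two assumed SpaceMouse-style HID reports.

    Report ID 1 carries translation, report ID 2 carries rotation,
    each as three little-endian signed 16-bit integers.
    """
    t = [clamp(v) for v in axes[:3]]
    r = [clamp(v) for v in axes[3:]]
    return bytes([1]) + struct.pack("<3h", *t), bytes([2]) + struct.pack("<3h", *r)
```

With an identity matrix, a rest value of 512 on every sensor, and live readings of `[520, 505, 700, 512, 512, 100]`, `to_six_dof` yields `[8, 0, 188, 0, 0, -412]` (the −7 falls inside the deadzone), and the rotation report clamps −412 to −350. A real build would fit `K` from recorded pushes and twists rather than assume sensors map one-to-one onto axes.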

In short: it’s not open source, but it is deeply open-ended. If your fingers have been itching since last month’s SpaceMouse teardown, this might be what you’re looking for.

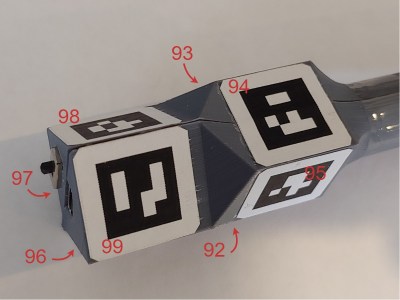

stylus. A consumer-grade (i.e. uncalibrated) webcam is all that is required on the hardware side. The software utilizes the familiar OpenCV stack to unroll the effects of the webcam

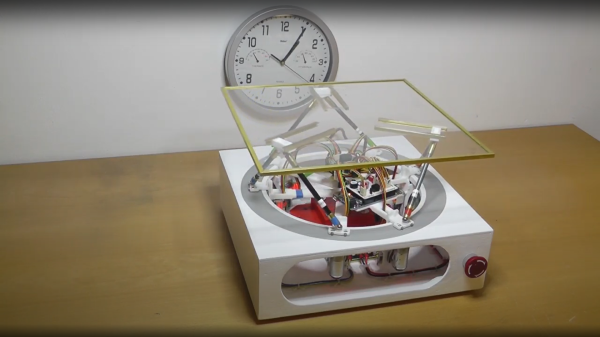

series of videos from a few years ago, showing the construction and operation of such a beast. This is a very neat mechanism made up of six geared motors mounted at the ends of arms, engaging with a large internal gear. The inner end of each arm rides on the central shaft on its own bearing. With the addition of the usual six linkages, twelve ball joints, and a few brackets, a complete platform is realised.