Last week, we wrote about the Leica M11-P, the world’s first camera with Adobe’s Content Authenticity Initiative (CAI) credentials baked into every shot. Essentially, each file is signed with Leica’s private key so that any changes to the image, whether edits to the photo itself or to the metadata, are tracked. The goal is to prove not only ownership, but also that photos are real: not tampered with or AI-generated. At least, that’s the main selling point.

Although the CAI has been around since 2019, its adoption is far from widespread. Only a handful of programs support it, although this list includes Photoshop, and it’s unlikely anybody outside the professional photography space was aware of it until recently. This isn’t too surprising, as it really isn’t relevant to the casual shooter. When I take a shot to upload to Instagram, I’m rarely thinking about whether or not I’ll need cryptographic proof that the photo wasn’t edited; usually adding #nofilter to the description is enough. Where the CAI is supposed to shine, however, is in the world of photojournalism. The idea is that a photographer can capture an image that is signed at the time of creation and maintains a tamper-proof log of any edits made. When the final image is sold to a news publisher or viewed by a reader online, they are able to view that data.

At this point, there are two thoughts you might have (or, at least, there are two thoughts I had upon learning about the CAI):

- Do I care that a photo is cryptographically signed?

- This sounds easy to break.

Well, after some messing around with the CAI tools, I have some answers for you.

- No, you don’t.

- Yes, it is.

What’s The Point?

There really doesn’t seem to be one. The CAI website makes grand yet vague claims about creating tamper-proof images, yet when you dig into the documentation a bit more, it all sounds quite toothless. Their own FAQ page makes it clear that content credentials don’t prove whether or not an image is AI generated, can easily be removed from an image by taking a screenshot of it, and don’t really tackle the misinformation issues.

That’s not to say that the CAI fails in its stated goals. The system does let you embed secure metadata; I just don’t really care about it. If I come across a questionable image with CAI credentials on a news site, I could theoretically download it and learn, quite easily, who took it, what camera they used, when they edited it and in which software, what shutter speed they used, etc. And thanks to the signature, I would willingly believe all of those things are true. The trouble is, that doesn’t tell me whether or not the image was staged, or if any of those edits obscure some critical part of the image, changing its meaning. At least I can be sure that the aperture was set to f/5.6 when that image was captured.

Comparing Credentials

At least, I think I can be sure. It turns out that it isn’t too hard to misuse the system. The CAI provides open-source tools for generating and verifying signed files. While these tools aren’t too difficult to install and use, terminal-based programs do have a certain entry barrier that excludes many potential users. Helpfully, Adobe provides a website that lets you upload any image and verify its embedded Content Credentials. I tested this out with an image captured on the new CAI-enabled camera, and sure enough it was able to tell me who took the image (well, what they entered their name as), when it was captured (well, what they set the camera time to), and other image data (well, you get the point). Interestingly, it also added a little Leica logo next to the image, reminiscent of the once-elusive Blue Check Mark, that gave it an added feel of authenticity.

I wondered how hard it would be to fool the Verify website — to make it show the fancy red dot for an image that didn’t come from the new camera. Digging into the docs a bit, it turns out you can sign any old file using the CAI’s c2patool — all you need is a manifest file, which describes the data to be encoded in the signed image, and an X.509 certificate to sign it with. The CAI website advises you to purchase a certificate from a reputable source, but of course there’s nothing stopping you from just self-signing one. Which I did.

Masquerading Metadata

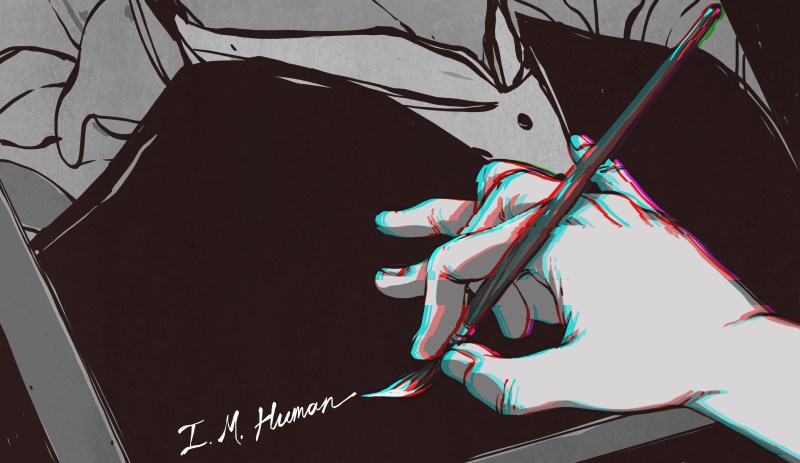

I used openssl to create a SHA-256 certificate, then signed it as “Leica Camera AG” instead of using my own name. I pointed the c2pa manifest file at my freshly minted certificate set, pasted in some metadata I had extracted from a real Leica M11-P image, and ran c2patool. After some trial and error in which it kept rejecting my fake certificate for some reason or another, it finally spit out a genuine fake image. I uploaded it to the Verify tool and, lo and behold, not only did the website say that my fake had been taken on a Leica camera and signed by “Leica Camera AG,” but it even sported the little red Leica logo.

One of the images above was taken on a Leica M11-P, and the other on a Gameboy Camera. Can you tell the difference? Adobe’s Verify tool can’t. Download the original left image here, the right image here, then head over to https://contentcredentials.org/verify to try for yourself.

Of course, a cursory inspection of the files with c2patool would reveal the signature’s public key, and it would be a simple matter to compare that key to Leica’s key to find out that something was amiss. Surprisingly, Adobe’s Verify tool didn’t seem to do that. It would appear that it just string matches: if it sees “Leica” in the name, it slaps the red dot on there. While there’s nothing technically wrong with this, it does lend the appearance of authenticity to the image, making any other falsified information easier to believe.
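To make the difference concrete, here is a minimal Python sketch contrasting a name-based badge with a check against a pinned certificate fingerprint. Everything in it is an assumption for illustration: the `Cert` class is a toy stand-in rather than a real X.509 parser, the byte strings are placeholders, and nothing here is known about how the Verify site is actually implemented. A real verifier would more likely validate the chain up to a trusted root than pin a single certificate.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Cert:
    """Toy stand-in for an X.509 certificate (hypothetical, not a real parser)."""
    common_name: str
    der_bytes: bytes  # the encoded certificate, which includes the public key

    def fingerprint(self) -> str:
        # SHA-256 over the encoded certificate identifies this exact cert.
        return hashlib.sha256(self.der_bytes).hexdigest()


# Placeholder bytes: a real check would load the vendor's actual certificate.
GENUINE = Cert("Leica Camera AG", b"placeholder-bytes-for-the-real-certificate")
FORGED = Cert("Leica Camera AG", b"placeholder-bytes-for-a-home-made-certificate")

# Fingerprints the verifier has decided to trust ahead of time.
PINNED = {GENUINE.fingerprint()}


def badge_by_name(cert: Cert) -> bool:
    """Name-only check: anyone can put any string in a certificate."""
    return "Leica" in cert.common_name


def badge_by_fingerprint(cert: Cert) -> bool:
    """Stricter check: show the badge only for a pinned certificate."""
    return cert.fingerprint() in PINNED


for label, cert in (("genuine", GENUINE), ("forged", FORGED)):
    print(label, badge_by_name(cert), badge_by_fingerprint(cert))
```

Both certificates pass the name check, while only the pinned one passes the fingerprint check, which is the gap the fake image slipped through.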

Of course, I’m not the only one who figured out some fun ways to play with the CAI standard. [Dr. Neal Krawetz] over at the Hacker Factor Blog recently dove into several methods of falsifying images, including faked certificates with a method a bit more straightforward than the one I worked out. My process for generating a certificate took a few files and different commands, while his distills it into a nice one-liner.

Secure Snapshots?

So, if the system really doesn’t seem to work that well, why are hundreds of media and tech organizations involved in the project? As a consumer, I’m certainly not going to pay extra for a camera just because it has these features baked in, so why are companies spending extra to do so? In the CAI’s perfect world, all images are signed under their standard when captured. It becomes easy to immediately tell both whether a photograph is real or AI-generated, and who the original artist is, if they’ve elected to attach their name to the work. This serves a few purposes that could be very useful to the companies sponsoring the project.

In this perfect world, Adobe can make sure that any image they’re using to train a generative neural network was captured and not generated. This helps to avoid a condition called Model Autophagy Disorder, which plagues AIs that “inbreed” on their own data — essentially, after a few generations of being re-trained on images that the model generated, strange artifacts begin to develop and amplify. Imagine a neural network trained on millions of six-fingered hands.

To Adobe’s credit, they tend to be better than most other companies about sourcing their training data. Their early generative models were trained solely on images that they had the rights to, or were explicitly public domain or openly-licensed. They’ve even talked about how creators can attach a “Do Not Train” tag to CAI metadata, expressing their refusal to allow the image to be included in training data sets. Of course, whether or not these tags will be respected is another question, but as a photographer this is the main feature of Content Credentials that I find useful.

Other than that, however, I can’t find many benefits to end users in Content Credentials. At best, this feels like yet another well-intentioned yet misguided technical solution to a social issue, and at worst it can lend authenticity to misleading or falsified images when exploited. Misinformation, AI ethics, and copyright are complicated issues that require more than a new file format to fix. To quote Abraham Lincoln, “Don’t believe everything you read on the internet.”

These all sound like UI problems; nothing in the “attack” presented above appears to actually break the chain of trust. I don’t care much about this stuff, but if I were, say, running the photo desk at Reuters, then I would absolutely care about it. The ability to have an image signed to show that it came from a specific device isn’t a bullet-proof solution to “fake news”, but it’s a step in the right direction. Do I want it on my camera? Nope, but creating news images for a wire service isn’t my job. That doesn’t mean the use case is invalid.

Well……

Yes, technically you retain the ability to verify whether the image was actually signed by a Leica camera.

But the idea that Adobe would put together a verification tool where verification amounts to “does the image have a valid signature and does the certificate it was signed with have ‘Leica’ in the CN” is mind-boggling in this day and age. The whole point of the chain of trust is that you _can_ verify that it was _actually_ taken with a Leica camera, not that someone can generate a certificate with a name including ‘Leica’.

I get your perspective, and you make a valid point. While the average user might not be concerned about these issues, for certain professional use cases like Reuters, ensuring the authenticity of images becomes crucial. It’s a balancing act between usability and security. Thanks for sharing your thoughts!

While I agree that DRM systems like this are unsound, it does seem like this is an issue with the “verifier” tool and not the standard itself.

This is not a DRM, it is simply yet another cryptographic signing scheme.

It is no more fundamentally “unsound” than, oooh, https on the WWW!

And exactly like https (rather, the underlying crypto being used, as it isn’t limited to https) you can create your own certificates and use them to sign anything you want. The only trick is to have a way to look up the certificate on a trusted register – which is where the Adobe verification and/or “drawing arbitrary conclusions from the string in the certificate” (like putting up a Leica logo) are failing.

If I was working at some reputable news company I would also not just trust a red dot, but news agencies seem to have been degrading a lot over the last 20 years, and anything published in either a banana republic, the USA or any dictatorship (even if there is a “president” on top) deserves a lot of extra scrutiny. Leica accepting self signed certificates also looks like a gaping hole.

But with today’s technology, I guess it would be relatively easy to take a picture with such a camera of a poster hanging on a wall. It would need some proper lighting and such, and that is not trivial to set up, but I guess anybody with some photography experience and the budget for a decent printer, lighting and some extra equipment can set that up in a way that the picture looks genuine. Especially when you exploit the fact that news pictures do not have to be of extreme resolution, in focus, well lit and such.

I also have a feeling that ever more people are getting their “news” from YouTube, Facebook and TikTok. And whenever an election occurs, the more intelligent people are swept off the table by the sheer numbers of those masses who are manipulated and misled by a few bad actors.

This reminds me of a scene in The Lost Metal (spoilers)

There is a very primitive film ‘moving picture’ of the capital city having been destroyed, ash falling, fires burning. The antagonists used this film to convince victims that the world had ended. The protagonists discover that it was filmed using a miniature.

I think many of us would agree, the matte paintings and miniature effects used in the last century often had a far more believable result than modern CGI does.

“I think many of us would agree, the matte paintings and miniature effects used in the last century often had a far more believable result than modern CGI does.”

Much like anything it depends upon the skill of the artists.

Wait, so Adobe’s verification tool doesn’t run the signer cert up to a CA? What’s the point then? It still makes me feel like if we wanted an actual secure protocol from this it would have come from the TCG.

I suppose there is an unavoidable analog hole. You take your fake picture, print it out in really high quality, and take a photo of the printout with your crypto signing camera, carefully arranging the lighting and camera settings so the aperture, ISO and speed are right for the alleged scene. If the metadata includes focus distance, then that will be wrong (unless you print really big), but there are probably ways around that, like using a manual lens that doesn’t send focus data to the camera, or sticking a diopter filter in front of an automatic lens to shift the focus.

With modern 8K HDR televisions, this is probably pretty easy to do and get the lighting information in a reasonable range, at least for indoor scenes.

Wouldn’t you be able to tell? My camera is 42mp, while an 8k TV is only 30mp. And you couldn’t use the whole of the TV screen, since the aspect ratios are different.

There are a few different solutions that could be used here. The simplest and cheapest is to use a diffuser or a soft lens to prevent the camera from ‘seeing’ the screen pixels. A more sophisticated solution would be to use a long exposure with a motorized tripod, blanking the screen during tripod moves, though the exposure values are going to be highly suspicious. There’s also the tried and true technique of aligning and calibrating arrays of multiple projectors to achieve resolutions beyond what a single projector is capable of.

Alternatively, you could probably break into the signal chain with a high performance FPGA and inject your chosen image directly.

Your camera isn’t _really_ 42 Mpix. The Bayer pattern requires a bit of resampling and averaging to get the image out, and the camera is almost never designed to take advantage of the “full” resolution because of moire effects. The picture has to be blurred below what the sensor can record, otherwise you get weird beat patterns.

Bayer images are generally considered as full-resolution monochrome with lower-resolution color data, even though the actual data is RGGB. Btw we’d be comparing a 6480×4320 screen (assuming perfect crop) and an 8000×5320 sensor most likely. But anyway, some modern cameras have sensor-shift modes that make it so that you *do* have color data from all four color filters for every single pixel in the output. And some also intentionally lack OAA/OLPFs in case you expect them to perform poorly and want to avoid anything that may dampen high spatial frequency signals worse than the lens itself will.

For most pictures, you can get away with a color resolution far less than the brightness resolution, so I would choose to assume that adjacent pixels are similarly colored and so if they vary in value, it’s most likely a lightness variation rather than a color variation. It should help that if you’ve got enough dynamic range, 14-15Ev being possible now in single images without hdr bracketing, that even something strongly colored will have a significant component of the other two colors. I imagine that in general you can make good a significant portion of your rated resolution, even if there’s a different better set of reasons.

You have to account for the Kell factor, which is typically somewhere between 0.7 and 0.9, when processing the image to avoid the screen-door effect. That number is the effective line resolution, and translated to effective megapixels, it would be between 50 and 80% of your nominal. You still get 42 or whatever Mpix out of the sensor, but the information they contain is blurred to somewhere between 20 and 34 Mpix, which even in the best case is just barely sharper than the 8K television.
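The arithmetic behind those figures can be checked with a short Python sketch. It assumes, as the comment appears to, that the Kell factor applies along both image axes, so the pixel count scales with its square; the 42 MP sensor and the 6480×4320 crop are the numbers quoted in this thread, and 7680×4320 is the standard 8K UHD panel size.

```python
def effective_megapixels(nominal_mp: float, kell: float) -> float:
    """Scale a nominal pixel count by a Kell factor applied along both axes."""
    return nominal_mp * kell ** 2


SENSOR_MP = 42.0                 # nominal sensor resolution quoted in the thread
TV_8K_MP = 7680 * 4320 / 1e6     # full 8K UHD panel
TV_CROP_MP = 6480 * 4320 / 1e6   # 3:2 crop of that panel, as quoted above

for kell in (0.7, 0.9):
    mp = effective_megapixels(SENSOR_MP, kell)
    print(f"Kell {kell}: {mp:.1f} effective MP")

print(f"8K panel: {TV_8K_MP:.1f} MP, 3:2 crop: {TV_CROP_MP:.1f} MP")
```

Under these assumptions the sensor lands at roughly 20.6 to 34.0 effective MP, against about 33.2 MP for the full panel and about 28.0 MP for the 3:2 crop.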

Besides, with CMOS sensors you have such heavy noise reduction algorithms anyways that you’d be lucky to retain 50% of the actual image information. Whatever detail you think you’re seeing at the individual pixel level is mostly image processing artifacts and distortion.

What I can find written about Kell factor seems to think that with World–>CCD/CMOS–>LCD–>Eyes the factor should be greater than 0.9 and possibly 1, unlike with CRT’s. Some of the references to the contrary may be old enough to be from when CMOS sensors had less than full coverage, given the context clues. And it doesn’t seem to be related to LCD–>CMOS directly, though I am not certain by any means. But I’m thinking no matter if there’s this factor, the screen may still be distinguishably sub-ideal, unless you can explain further. I would definitely disagree about the noise reduction and pixel peeping – the ones that have heavy algo’s are known to eat stars and disliked for it by astrophotographers. The sensors I’ve had have had visible noise in low light, but also visible detail otherwise when circumstances permit. One field where there’s good information available is digital photomicroscopy, where they do calculate and test the limits of resolution, and I don’t think they talked about Kell factor there.

Go take a 42MP picture of your 8k TV and see if you can crop a single TV pixel out clearly so we can see the edges.

I suspect it’s harder than you’d think.

The edges of the TV’s pixels are smaller than the pixel… Of course you’d need more than the TV in order to see them.

If the certificates were also tagged with geolocation (inc GPS time) that would solve some of the difficulties (or make it necessary to take an encrypted GPS spoofing device with the camera).

Anyway, don’t be so racist! Some of us in Norfolk are proud of our 6th digits! :-)

I believe they are – certainly Canon’s previous signing unit did.

Wow that didn’t take long. I knew that overpriced crap was a gimmick, but I didn’t expect for it to be exposed quite this fast

Sounds like while this one isn’t great, and there’s always ways to take a real picture that’s misleading or fake – such as by staging things or paying body doubles, even without taking a picture of a screen – the vulnerability isn’t in the crypto itself?

My cameras’ raw files bundle a jpeg inside which shows the original image using the in-camera settings at time of capture. If I was a publisher trying to do something along these lines, I’d want to see a crypto-verified version of this original preview image alongside any later edited one, so I could just look at it and visually confirm that the original looks as if it is substantially the same. Just like your gameboy vs leica side by side. Failing that, I’d like to look at the raw itself, though naturally as a photographer you’d like to keep your raws and release only the final versions generally, and you won’t put that in your final release because of the size – but the little crypto-signed preview? Maybe.

You can DIY signed media, just generate a checksum and push it onto the blockchain, that is all it takes to be able to prove a given data object existed in a given state at a given point in time. There is also rfc3161. The point being that the oldest provable object version is the real one, any derived data can’t be proven to be older, that establishes a form of provenance that an automatic audit can detect and changes/variants can be mapped out on a timeline. This means that if you take a shot of a politician sniffing a hamburger and make it look like he is sniffing a person you will not get away with it as substantial parts of the data will be a match for another object that is known to be older. You can still fool fools with your fake image, there is no cure for stupid.
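As a rough illustration of the "oldest provable version wins" idea, the following Python sketch hashes each file and records the earliest time a digest was anchored. The `TimestampLog` class is hypothetical and simply trusts the time it is given; in practice that time would have to come from an external service such as an RFC 3161 timestamp authority or a public ledger. The sketch also only orders exact byte-for-byte versions; spotting that an edited image shares content with an older one would need some form of image comparison that is not shown here.

```python
import hashlib
from typing import Dict, Optional


def digest(data: bytes) -> str:
    """SHA-256 checksum of the file contents, as a hex string."""
    return hashlib.sha256(data).hexdigest()


class TimestampLog:
    """Hypothetical log mapping each digest to the earliest anchored time."""

    def __init__(self) -> None:
        self._first_seen: Dict[str, int] = {}

    def anchor(self, data: bytes, unix_time: int) -> str:
        d = digest(data)
        previous = self._first_seen.get(d)
        # Keep only the earliest time at which this exact content was seen.
        if previous is None or unix_time < previous:
            self._first_seen[d] = unix_time
        return d

    def first_seen(self, data: bytes) -> Optional[int]:
        return self._first_seen.get(digest(data))


log = TimestampLog()
original = b"placeholder bytes of the original photo"
edited = b"placeholder bytes of the edited photo"

log.anchor(original, 1_700_000_000)
log.anchor(edited, 1_700_086_400)  # anchored a day later

# The original can be shown to predate the edit; the reverse cannot.
print(log.first_seen(original) < log.first_seen(edited))
```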

Even if they fix all the security bugs, I expect there will be people (or governments) who will figure out how to extract the secret key directly from cameras so that they can fake a signature on any image they want. Unless it’s a cloud based service, the secret key has to be stored on the camera and people are really good at figuring out how to read those keys.

Encryption is only as safe as the secret keys that are used.

Sadly this article only said anything about Adobe’s tool, and nothing about the camera end or how well thought out it is. Every camera should have a unique private key, which is itself derived from Leica’s.

Extracting the private key of one camera will (should) not let you spoof another camera, only that one.

Of course after Chinese customs has “examined” your camera, you have to throw it away…

I would also expect a decent camera system to have a cellphone connection, so it can get a timestamp from an external service. That is the only way that you can prove that the photo was not taken/altered after that timestamp. A camera could also have a secure non resettable clock module inside it which could combine with later external timestamps to give confidence that the photo was taken/altered by the camera at the time it claimed.

Now defeating this means hacking into the camera before it writes the signed image out to the memory card. This means hacking the camera firmware, but even then the signing might happen inside the image processor, not the UI processor, and this would likely be harder again to hack. Again if there is a secure signing and timestamping module, then hacking the firmware does not let you sign a new image with an earlier date.

Now it would further be possible to SHA checksum the image as it is read out, along with the secure (signed) local timestamp and signed GPS position, and then sign the overall SHA checksum in the image sensor module.
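A minimal Python sketch of that idea follows: hash the pixel data together with the capture time and position, then authenticate the digest with a key held by the sensor module. Note the loud assumptions: `SENSOR_KEY` and all function names are hypothetical, and HMAC is used only as a standard-library stand-in. A real module would use an asymmetric signature with a private key in tamper-resistant hardware so that verifiers never hold the secret.

```python
import hashlib
import hmac
import struct

# Hypothetical per-sensor secret; HMAC is only a stand-in for a real
# asymmetric signature produced inside the sensor package.
SENSOR_KEY = b"hypothetical-key-stored-inside-the-sensor-package"


def capture_digest(pixels: bytes, unix_time: int, lat: float, lon: float) -> bytes:
    """Hash the pixel data together with the capture time and GPS position."""
    h = hashlib.sha256()
    h.update(struct.pack(">Q", len(pixels)))  # length prefix avoids ambiguity
    h.update(pixels)
    h.update(struct.pack(">Qdd", unix_time, lat, lon))
    return h.digest()


def sign_capture(pixels: bytes, unix_time: int, lat: float, lon: float) -> bytes:
    """Produce an authentication tag over the combined digest."""
    d = capture_digest(pixels, unix_time, lat, lon)
    return hmac.new(SENSOR_KEY, d, hashlib.sha256).digest()


def verify_capture(tag: bytes, pixels: bytes, unix_time: int,
                   lat: float, lon: float) -> bool:
    """Recompute the tag and compare in constant time."""
    expected = sign_capture(pixels, unix_time, lat, lon)
    return hmac.compare_digest(tag, expected)


tag = sign_capture(b"raw sensor readout", 1_700_000_000, 52.63, 1.30)
print(verify_capture(tag, b"raw sensor readout", 1_700_000_000, 52.63, 1.30))
print(verify_capture(tag, b"raw sensor readout", 1_600_000_000, 52.63, 1.30))
```

Because the time and position are hashed along with the pixels, changing any one of them afterwards invalidates the tag, which is why back-dating an image would require compromising the module that holds the key.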

Now you would need to hack the secure module firmware inside the image sensor packaging.

There is at least one such sensor that has been made, almost a decade ago now. It was not very high resolution, and not very fast, by today’s standards, and was for a very specific job, but its design was such that if you could produce the original ceramic image sensor package intact in court, then the images were pretty hard to contest.

Mostly what this does is verify the time an image was locked, and what camera signed it. It stops post editing or faking. You can still pre-fake images and just photograph them from a high res screen as long as you can get the camera at the time you want to reveal the fake image.

So they’ve learned absolutely nothing from how web browsers have failed for ages at displaying certificate information in a way that doesn’t confuse the user and actually increases security.

And that’s before someone extracts the actual certificate from a camera, which is going to happen. Assuming it’s not just part of the firmware (which would obviously be a disaster), even extracting a TPM module from an actual M11-P or a similar camera would only require getting hold of a single camera. Which, depending on what you are planning to do, might even make sense at full price.

My Nikon Z 9 is possibly getting C2PA through a firmware upgrade. I don’t assume the hardware was built with a TPM because Nikon only joined the standard later.

There’s a really good overview of C2PA / ProofMode, and what it’s designed to do, in Episode 748 of Floss Weekly for anyone interested:

https://twit.tv/shows/floss-weekly/episodes/748

What we really need to know these days is when the original image was taken and if it has been modified after that. That is what you need to weed out deep fake or AI generated images from real ones.

Absolutely fascinating that a simple story about “Adobe can not even run a bog-standard cryptographic signature system” has people talking about faking stuff, AI generated images etc etc.

The only meat in this is that Adobe are not doing it properly.

What is being left out is that you – yes, you, the reader or the photographer or anybody else – could, if you actually care about signing your photos, use any of the methods that already exist. For example, just generate a GPG signature and send it out with the image: anyone can then tell if the image was edited and, if – IF – they trust you to stick in the correct metadata (such as the EXIF block) then they will also know the f-stop, GPS, AI program used to generate it, everything and anything you feel like putting in. BTW, talking of trust, do not blindly trust the EXIF data on any photograph, it can easily be edited and I have images where even the camera firmware put in incorrect values (ok, that was running a mod, but it was still straight out of the camera).

The *ONLY* thing Adobe could bring to the party would be an accurate and secure validation and verification of the signatures. Which, it appears, they have not.

Given that failing of Adobe, any talk of AI or fakes etc is totally irrelevant, the only added implication of the article is that everyone who has signed up for it, especially if they are boasting it as a Good Thing, has totally failed their due diligence in the matter and are making a laughingstock of themselves.

This is a really small nitpick: C2PA doesn’t claim to be “tamper-proof”. They claim to be “tamper-evident.”

However, as you pointed out, your alterations show no evidence of tampering, so C2PA fails to do what it claims.

I REALLY wish you would put a link TO the article, not take the lazy way out with this stupid generic link.

To be clear this was the result of the Verify site being historically permissive in its checking of certificate chains, to enable testing and experimentation. It’s no longer the case.