As I’m sure many of you know, the x86 architecture has been around for quite some time. It has its roots in Intel’s early 8086 processor, the first in the family. Indeed, even the original 8086 inherits a small amount of architectural structure from Intel’s 8-bit predecessors, dating all the way back to the 8008. The 8086 evolved into the 186, 286, 386, and 486, and then the chips got names: the Pentium would have been the 586.

Along the way, new instructions were added, but the core of the x86 instruction set was retained. And a lot of effort was spent making the same instructions faster and faster. This has become so extreme that, even though the 8086 and modern Xeon processors can both run a common subset of code, the two CPUs architecturally look about as far apart as they possibly could.

So here we are today, with even the highest-end x86 CPUs still supporting the archaic 8086 real mode, where the CPU can address memory directly, without any redirection. Having this level of backwards compatibility can cause problems, especially with respect to multitasking and memory protection, but it was a feature of previous chips, so it’s a feature of current x86 designs. And there’s more!

I think it’s time to put a lot of the legacy of the 8086 to rest, and let the modern processors run free.

Some Key Terms

To understand my next arguments, you need to understand the very basics of a few concepts. Modern x86 is, to use the proper terminology, a CISC, superscalar, out-of-order Von Neumann architecture with speculative execution. What does that all mean?

Von Neumann architectures are CPUs where both program and data exist in the same address space. This is the basic ability to run programs from the same memory in which regular data is stored; there is no logical distinction between program and data memory.

Superscalar CPU cores are capable of running more than one instruction per clock cycle. This means that an x86 CPU running at 3 GHz is actually running more than 3 billion instructions per second on average. This goes hand-in-hand with the out-of-order nature of modern x86; the CPU can simply run instructions in a different order than they are presented if doing so would be faster.

Finally, there’s the speculative keyword causing all this trouble. Speculative execution means running the instructions in a branching path before it is clear whether they should be run in the first place. Think of it as running the code in an if statement before knowing whether the condition for said if statement is true, and reverting the state of the world if the condition turns out to be false. This is inherently risky territory because of side-channel attacks.
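To make the "run first, revert later" idea concrete, here is a toy model in Python. It is only a sketch: the names `speculate`, `cache_footprint`, and the register dictionary are invented for illustration, and real CPUs do this in hardware rather than by copying state. The one detail worth noticing is that the register state is rolled back while the stand-in for cache state is not, which is roughly the kind of leftover trace that side-channel attacks look for.

```python
import copy

# Stands in for microarchitectural state (e.g. cache contents) that is
# NOT restored when speculation is rolled back. Hypothetical name.
cache_footprint = set()

def speculate(state, condition, body):
    """Run body before the condition is known, then commit or roll back."""
    snapshot = copy.deepcopy(state)  # checkpoint of the architectural state
    body(state)                      # speculative work happens here
    if condition(snapshot):          # the condition resolves "late"
        return state                 # guess was right: keep the results
    return snapshot                  # guess was wrong: discard the changes

def body(regs):
    regs["rax"] += 1
    cache_footprint.add("line_for_rax")  # side effect that survives rollback

regs = {"rax": 41, "rbx": 0}
taken = speculate(dict(regs), lambda s: s["rbx"] == 0, body)
not_taken = speculate(dict(regs), lambda s: s["rbx"] != 0, body)

print(taken["rax"], not_taken["rax"], cache_footprint)
```

In the not-taken case the register value is back where it started, but `cache_footprint` still records that the speculative path touched something.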

But What is x86 Really?

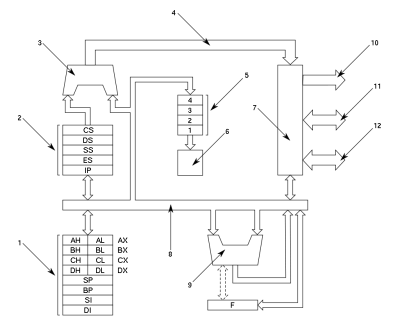

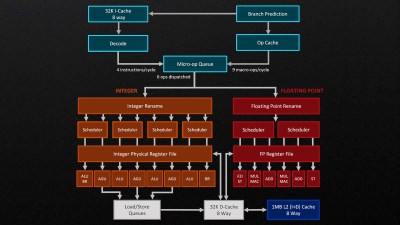

Here, you can see block diagrams of the microarchitectures of two seemingly completely unrelated CPUs. Don’t let the looks deceive you; the Zen 4 CPU still supports “real mode”; it can still run 8086 programs.

The 8086 is a much simpler CPU. It takes multiple clock cycles to run instructions: anywhere from 2 to over 80. One cycle is required per byte of instruction and one or more cycles for the calculations. There is also no concept of superscalar or out-of-order here; everything takes a predetermined amount of time and happens strictly in-order.

By contrast, Zen 4 is a monster: Not only does it have four ALUs, it has three AGUs as well. Some of you may have heard of the Arithmetic and Logic Unit before, but the Address Generation Unit is less well known. All of this means that Zen 4 can, under perfect conditions, perform four ALU operations and three load/store operations per clock cycle. This makes Zen 4 a factor of two to ten faster than the 8086 at the same clock speed. If you factor in clock speed too, it becomes closer to roughly five to seven orders of magnitude. Despite that, Zen 4 CPUs still support the original 8086 instructions.

Where the Problem Lies

The 8086 instruction set is not the only instruction set that modern x86 supports. There are dozens of instruction sets from the well-known floating-point, SSE, AVX and other vector extensions to the obscure PAE (for 32-bit x86 to have wider addresses) and vGIF (for interrupts in virtualization). According to [Stefan Heule], there may be as many as 3600 instructions. That’s more than twenty times as many instructions as RISC-V has, even if you count all of the most common RISC-V extensions.

These instructions come at a cost. Take, for example, one of x86’s oddball instructions: mpsadbw. This instruction is six to seven bytes long and compares how different a four-byte sequence is in multiple positions of an eleven-byte sequence. Doing so takes at least 19 additions but the CPU runs it in just two clock cycles. The first problem is the length. The combination of the six-to-seven byte instruction length and no alignment requirements makes fetching the instructions a lot more expensive. This instruction also comes in a variant that accesses memory, which complicates decoding of the instruction. Finally, this instruction is still supported by modern CPUs, despite how rare it is to see it being used. All that uses up valuable space in cutting-edge x86 CPUs.
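For a feel of how much work hides inside that one instruction, here is a rough Python sketch of its core operation: sliding a four-byte block across an eleven-byte window and taking the sum of absolute differences at each of the eight positions. This is a simplification, not a faithful emulation; the real instruction also takes an immediate operand that selects which parts of its inputs to use, and the function names `sad4` and `mpsadbw_like` are made up for this example.

```python
def sad4(window, block):
    """Sum of absolute differences between two 4-byte sequences."""
    return sum(abs(w - b) for w, b in zip(window, block))

def mpsadbw_like(src11, block4):
    """Compare block4 against the 8 overlapping 4-byte windows of src11."""
    if len(src11) != 11 or len(block4) != 4:
        raise ValueError("expected an 11-byte source and a 4-byte block")
    return [sad4(src11[i:i + 4], block4) for i in range(8)]

src = bytes(range(11))        # 0, 1, 2, ..., 10
blk = bytes([2, 3, 4, 5])
result = mpsadbw_like(src, blk)
print(result)  # → [8, 4, 0, 4, 8, 12, 16, 20]
```

The zero at index 2 marks the position where the block matches the window exactly, which is the sort of question this instruction was built to answer for video encoding and similar workloads.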

In RISC architectures like MIPS, ARM, or RISC-V, the implementation of instructions is all hardware; there are dedicated logic gates for running certain instructions. The 8086 also started this way, which would be an expensive joke if that were still the case. That’s where microcode comes in. You see, modern x86 CPUs aren’t what they seem; they’re actually RISC CPUs posing as CISC CPUs, implementing the x86 instructions by translating them using a mix of hardware and microcode. This does give x86 the ability to update its microcode, but only to change the way existing instructions work, which has mitigated things like Spectre and Meltdown.

Fortunately, It Can Get Worse

Let’s get back to those pesky keywords: speculative and out-of-order. Modern x86 runs instructions out-of-order to, for example, do some math while waiting for a memory access. Let’s assume for a moment that’s all there is to it. When faced with a divide that uses the value of rax followed by a multiply that overwrites rax, the multiply must logically be run after the divide, even though the result of the multiply does not depend on that of the divide. That’s where register renaming comes in. With register renaming, both can run simultaneously because the rax that the divide sees is a different physical register than the rax that the multiply writes to.
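Register renaming is easier to see than to describe, so here is a minimal sketch in Python. It assumes a made-up three-operand instruction format of (operation, destination, sources), which is not how real x86 divide and multiply are encoded, and the `rename` function and `p0`, `p1`, ... physical register names are purely illustrative.

```python
from itertools import count

def rename(program, arch_regs):
    """Give every register write a fresh physical register."""
    fresh = count()
    # Each architectural register starts out mapped to its own physical one.
    mapping = {reg: f"p{next(fresh)}" for reg in arch_regs}
    renamed = []
    for op, dest, srcs in program:
        phys_srcs = [mapping[s] for s in srcs]  # sources read the current mapping
        mapping[dest] = f"p{next(fresh)}"       # the write gets a brand new register
        renamed.append((op, mapping[dest], phys_srcs))
    return renamed

program = [
    ("div", "rcx", ["rax", "rbx"]),  # reads rax
    ("mul", "rax", ["rsi", "rdi"]),  # overwrites rax
]
renamed = rename(program, ["rax", "rbx", "rcx", "rsi", "rdi"])
for instruction in renamed:
    print(instruction)
```

After renaming, the divide reads `p0` while the multiply writes `p6`, so the two no longer touch the same physical register and nothing forces the multiply to wait.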

This acceleration leaves us with two problems: determining which instructions depend on which others, and scheduling them optimally to run the code as fast as possible. These problems depend on the particular instructions being run and their solution logic gets more complicated the more instructions exist. The x86 instruction encoding format is so complex an entire wiki page is needed to serve as a TL;DR. Meanwhile, RISC-V needs only two tables (1) (2) to describe the encoding of all standard instructions. Needless to say, this puts x86 at a disadvantage in terms of decoding logic complexity.
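To illustrate how little logic a fixed-width encoding needs, here is a short Python sketch that pulls the fields out of a RISC-V R-type instruction. The bit positions follow the base RISC-V instruction format; the function name `decode_rtype` and the dictionary layout are just choices made for this example, and it handles only the R-type format.

```python
def decode_rtype(word):
    """Split a 32-bit RISC-V R-type instruction into its fixed fields."""
    return {
        "opcode": word & 0x7F,          # bits 6:0
        "rd":     (word >> 7) & 0x1F,   # bits 11:7
        "funct3": (word >> 12) & 0x7,   # bits 14:12
        "rs1":    (word >> 15) & 0x1F,  # bits 19:15
        "rs2":    (word >> 20) & 0x1F,  # bits 24:20
        "funct7": (word >> 25) & 0x7F,  # bits 31:25
    }

fields = decode_rtype(0x002081B3)  # encoding of: add x3, x1, x2
print(fields)
```

Every field sits at the same bit position regardless of the instruction, so the register numbers are available before the decoder even knows which operation it is looking at. An x86 decoder, by contrast, first has to work out where the instruction ends.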

Change is Coming

Over time, other instruction sets like ARM have been eating at x86’s market share. ARM is completely dominant in smartphones and single-board computers, it is growing in the server market, and it has even become the primary CPU architecture in Apple’s devices since 2020. RISC-V is also progressively getting more popular, becoming the most widely adopted royalty-free instruction set to date. RISC-V is currently mostly used in microcontrollers but is slowly growing towards higher-power platforms like single-board computers and even desktop computers. RISC-V, being as free as it is, is also becoming the architecture of choice for today’s computer science classes, and this will only make it more popular over time. Why? Because of its simplicity.

Conclusion

The x86 architecture has been around for a long time: a 46-year long time. In this time, it’s grown from the simple days of early microprocessors to the incredibly complex monolith of computing we have today.

This evolution has taken its toll, though, by restricting one of the biggest CPU platforms to the roots of a relatively ancient instruction set, which doesn’t even benefit from small code size like it did 46 years ago. The complexities of superscalar, speculative, and out-of-order execution are heavy burdens on an instruction set that is already very complex by definition and the RISC-shaped grim reapers named ARM and RISC-V are slowly catching up.

Don’t get me wrong: I don’t hate x86 and I’m not saying it has to die today. But one thing is clear: The days of x86 are numbered.

What’s the point? How does x86’s continued existence and evolution prevent you from using your favorite architecture?

It takes up space on the die, decreasing power and heat margins. It also includes obsolete or unused instructions that add to instruction set complexity and open up security vulnerabilities.

That may all be true. But again, why does x86 have to die for you to go use your preferred ISA?

“X has to die” rants have to die ;-)

It doesn’t take up space. The space and power are for caches because getting the data to the core is the hard problem.

I’m with [Josiah David Gould]. Those who don’t want to use a feature on such a successful line of processors as x86 are loud and have no substance.

Those who are using x86 features such as 16 bit real mode in production are wealthy and powerful.

Fortunately, Intel has decided to listen to the loud ones.

Intel has tried new architectures several times, and the results have been spectacular and expensive failures. One even got the nickname Itanic. Consumers have rejected departures from X86.

He doesn’t mention some of the benefits. There is a LOT of code out there that still runs unmodified. The CISC with RISC microcode has benefits too. An instruction cache holds more instructions, because many of them are 1 or 2 bytes. Memory bandwidth is better utilized during fetch. And considering the instruction latencies, I’d say fetch and decode times are negligible. Most of the points raised made things more difficult for Intel in the past but don’t have any impact today, because they’ve already been developed, and have no impact on developers or users. A lot of CISC instructions are easier to use than the equivalent combos in RISC, and most people don’t code at that level anyway.

Kinda reminds me of all that “X11 needs to die”, yet wayland is a bit of a mess.

Don’t even get me started on pulse audio/pipewire/etc. I still use just straight alsa, everything else causes me issues.

Nobody wants x11 to die. It -is- dead. It’s limping along with the occasional emergency patch but has otherwise been abandoned.

@arcdoom Except X11 is not dead. And it will probably survive for a pretty long time in one form or another. Sorry, wayland at this point is a mess. It is a perfect example of the ‘second system effect’.

It’s not just a bit of a mess, it straight up doesn’t work. KDE 6 switched to it as a default and broke half my stuff :P

I agree that there are absolutely no real or valid and demonstrable reasons to use Wayland over X11. Wayland takes away so many of the X11 features that were never promoted. Xdmx was amazing and could have been so useful to everyday users, and network transparency was handled so much better than screen scraping and stupid approaches like sharing a whole desktop. That aside, the x86 thing is silly. Yes, it’s antiquated and being held back by its origins. But, as we are seeing with ARM, all that needs to happen is that a new CPU architecture with better features/efficiency comes along and people will move that way. There is NO Intel processor that can compete with ARM for power efficiency and still provide decent response time. The Celeron is and always has been a completely worthless joke. You can’t run anything useful at a decent speed and have long battery life on it. It is either long battery life and you feel like you’re on a 486, or OK performance, and crappy battery life. And there is a reason for that. The ARM CPU was designed with extremely high power efficiency from the start so that the CPU would be cool enough to use a cheaper housing. This, completely by accident, put ARM into the perfect position for beating Intel when it comes to low power devices. This is why every iPhone, iPad and Android phone/tablet runs on ARM. Any x86 based CPU would always need to be recharged every hour and anytime the user did anything the device would be over 120F. When iOS first happened and I realized it had MacOS X under the hood, I was predicting a move to ARM back in 2010. It only made sense: Design for a smaller system and scale up over time. Intel, unless they decide to completely forgo x86 for mobile CPUs and start with power efficiency as the first step, is always going to be trying to stuff 10 pounds of circuitry into a 2 pound bag. It won’t work. But when you scale up, the benefits all around are great.
I got a Samsung ARM based laptop (sadly saddled with Windows 11) and it gets by without being plugged in for about 10 hours for normal usage (listening to music while browsing the web or working on documents). If I watch a movie, the life will drop down to 4-6 hours. No Intel based laptop can do that unless it has a really large and heavy battery pack. My Samsung notebook also has no fans, is super thin and light. The way laptops should be built everywhere today. Is the laptop a powerhouse? No. But it is far better than any Celeron based “mobile” devices. So I don’t think Intel needs to die right away. It will, eventually, because there are already better options. And with x86 emulation, no one needs to know. The x86 emulation in Windows 11 for ARM works well enough (and would certainly be better in Linux if it were even needed). I am ready to jump ship to ARM for good, once Linux compatible laptops and desktops become more standard.

the main point here is code execution. gaming example: GPUs have up to like 16k cores and every single one doing its small part of job, resulting in a perfectly smooth gameplay, there is no such thing as “gpu bottleneck”. cpu’s, on the other hand, are like skyscraper built on top of usual house built on top of shack built on top of a cave. and any action u do goes through multiple translations and is done on a few but strong cores. which is bad for gaming coz it leads to situations where u get like 10 to 15 fps in crowded games on the currently best gaming cpu 7800x3d. its absurd. and the problem is that whole world is struggling to get better performance since everyone is still doing things with x86 in mind, the most used architecture, like literally everything outside of phones is made for this, 99.9% of home pc’s are x86. if u make a game code for an arm architecture u will have no one to sell it to.

so, u are wrong, its not an evolution, its stagnation, that prevents technology from evolving. if not for capitalism, we could have literally 40-50 times stronger CPU’s by now.

GPU execution units are relatively dumb. It’s just that there are many of them that work in parallel. The CPU is an entirely different animal. Comparing one to another is, well, apples to oranges.

I’m no fan of the x86 ISA and would love for Intel to expose the microcode, but even I will admit that there are some benefits to CISC, e.g. lower memory and consequently memory bandwidth utilization.

Game performance probably has more to do with the software engine it’s using than the ISA on which it runs.

That backwards compatibility that is mentioned as unnecessary is the main reason people game on PC. The GPU is also almost always the bottleneck when it comes to fps, and what’s with the capitalism jab? Capitalism is the only reason you have a computer to begin with.

Single core execution is not a processor choice, it is a programmer choice.

Most games (and applications in general) run on one core because of a, history, b, laziness and c, some things are difficult to parallelize.

Or rather a &c, and d, management sees no profit benefit in implementing parallelization as an afterthought.

It’s catching up now with some 32, 64, 128 etc. core counts, but in general if “only” the increased transistor count had been spent on adding more cores then programming in general would have been forced to learn better multi thread utilization.

Using 8 cores instead of only one is a 800% boost, buying a new generation cpu with a breathtaking 17% IPC uplift is a.. 17% boost.

Why, it is our fortune that all those communist “countries” that are/were so much more advanced than the “west”, excluding Cuba of course, delivered upon us all the modern advances in technology. Why, the United States alone would never have had the modern CPUs and GPUs that you use to play your games in mommy’s basement with. And how amazing was it they bestowed upon us, free of charge of course, the CAT scan, MRI and dozens of other medical advancements. And, OH MY GOD! The very Internet and world wide web that you enjoy at this very minute would have never been possible, even today, if the Soviet Union had not invented it. I am so in debt to communism and other collectivist nations right now I actually feel so guilty enjoying my liberty, freedom and peace.

Yeah, right. Take a look around your room, or, mommy’s basement. Go ahead, I mean it, LOOK! NOW! Everything you see and enjoy, you ungrateful child, is made possible by that stagnant, mean, greedy Capitalism. EVERYTHING!!! Regardless what your equally childish and spoiled professor/s said. You would likely not even exist if it was not for Capitalism. And neither would the Internet that you use to spread childish comments with.

Now, you have a nice day, comrade.

If it weren’t for capitalism we wouldn’t have computers. It’s got a serious downside, but we would not have better machines without it.

Workers invented, developed, and produced computers not capitalists.

1. ARM CPUs are *not fundamentally different* from x86 CPUs

2. GPUs do *not* have “16k cores”, they have 16k ALUs. An ALU isn’t a core.

3. A GPU does have the equivalent of a CPU core – if you take a look at GPU chip architecture diagram, you can easily identify structures in the GPU diagram with similar size&function to the size&function of a CPU core

4. GPU threads are much *simpler* than CPU threads

I believe the best way to put it is that the continuation of x86 has become a technological burden. Its continued evolution and baggage detract from what industrial forerunners could be making instead of x86. Effectively, microarchitecture developers are a finite resource and too many are toiling away to prop up x86 which results in stunted growth of microarchitectures.

Here is a question to ask. Why has the x86 architecture managed to stick around? Why did SeaMicro fail to gain market share? What about Cavium’s Thunder X2? Why aren’t we all using some sort of Pi derived system for our home computing needs? Why hasn’t Windows on ARM displaced Intel and AMD?

People have been taking swings at x86 for decades, including Intel and AMD. Why is this ISA so stubborn? Reverse compatibility of the ISA is something people often point to but that’s not entirely correct. But there is a sort of reverse compatibility in x86 that does keep it entrenched. But it’s one that was inadvertently created by IBM and Compaq. The legacy of that has had huge consequences for the computing industry.

> Why has the x86 architecture managed to stick around?

It’s a long story full of market manipulation, failed projects, and manufacturing capabilities. x86 has never really had a technological advantage. 8086 was released as a stopgap for what would eventually become iAPX432, which failed spectacularly due to its insane complexity. 20-ish years later, Intel repeated the same mistake again with Itanium as AMD developed x86-64. Judged on design merits, x86 is terrible. But being to market earlier and in larger quantities than the competition resulted in an early win with IBM, and today we are stuck with that legacy.

Finally, it is worth noting the reason there has been a renewed interest in alternative architectures: power. x86 has *never* been power or space-efficient at a hardware level, and as shrinking process nodes have reached a point of diminishing returns, we are hitting the limits of what x86 can do. Combine that with the massive attack surface inherent in developing a CPU with an ISA as complex as x86, and it is also rife with security vulnerabilities.

>an early win with IBM, and today we are stuck with that legacy.

You’re getting close.

Did you look into any of the various pushes to get ARM in the Datacenter?

I’ll give you another hint: the current AI boom/bubble demonstrates part of why x86 sticks around and why SuperMicro has seen its stock price soar.

Security vulnerabilities are a red herring IMO. There will always be a vulnerability that can be found and/or exploited and lets not pretend side-channel attacks were easy to find.

> the current AI boom/bubble demonstrates part of why x86 sticks around

x86 is *not* used for a majority of the compute tasks in this case. The processors of course do manage the accelerators, but a vast majority of the computing is performed separately.

> Security vulnerabilities are a red herring IMO. There will always be a vulnerability that can be found and/or exploited

This is a fair point, and I agree in principle. However, the complexity associated with x86 and the complex interactions between instructions make it particularly vulnerable. Yes, side channel attacks and vulnerabilities will always exist, but that doesn’t mean reducing the attack surface is a moot point.

Intel caused its own failure with the Itanium, it was faster than the Pentiums at the time, however only with native code. They also botched the emulator for X86 which would be slow and buggy. If they spent more time on making that better there might be more acceptance. They also way overpriced the thing, because they were Intel and thought they could.

It might have still taken over if AMD didn’t manage to hire all the DEC Alpha people HP laid off when HP decided to go all in on Itanium. Those punky engineers who really did make the fastest CPUs in the world managed to create the backward compatible 64bit Athlon and Opteron processors which showed incredible speed increases over the Pentium 4 (which had too many pipeline stages, and used emulation as well) in both 32 and 64 bit applications.

Intel was saved by their Israeli unit which was still developing traditional X86 cpus for embedded purposes.

> Why hasn’t Windows on ARM displaced Intel and AMD?

It kinda has… Most people are doing their “computing” on mobile devices, which are, in fact, ARM devices (Some sort of Pi derived system, but backwards – Pis are derived from the mobile computing landscape).

The “most people” are doing the equivalent of watching TV and playing solitaire on their mobile devices. For content and programming cell phones and most mobile devices are crap and the processors in them are really going to waste. Honestly I almost wish there was a good way to do mass idle computing on all the unused cores of these billions of cell phones in the world but it would get the public up in arms about battery use/life.

I don’t know if x86 should go forward or away but:

“Why has the x86 architecture managed to stick around?”

Success of a product is a function of many variables. A lot depends on marketing, big players’ decisions, human habits, bonds to legacy solutions, politics etc. Look how long a transition from one Windows to another takes (by now the Windows 10 market is twice as big as Windows 11) and this transition is fairly painless. Now compare that to Apple’s transition to x86 and from it. Look how quickly people embraced the Chromebook with its cloud philosophy and limited software but were completely sure that switching from MS Office to Open Office is impossible because of a lack of features, a different GUI, etc. The win of Linux over FreeBSD/Minix was also due a lot to legal issues rather than technical ones.

“Why aren’t we all using some sort of Pi derived system for our home computing needs?”

Actually we do – tablets, cellphones and Chromebooks took a lot of that load. Plus there are people that use actual Raspberry Pi as their desktop. But some apps are simply tied to the platform through Windows/MacOS. You can run Linux+Gimp on Raspberry Pi or Chromebook natively. Is there any way to do the same for Windows+Photoshop? Even that snapdragon laptop would require emulation software for PS and this is already stability and efficiency loss. Yet we see that both Apple and MS are looking for some other option.

Be serious, comparing a Raspberry Pi with Gimp to a modern PC with Photoshop is like comparing a Model T Ford with a Ferrari. No home user is going to like the experience, never mind pros who use these tools.

If you want to use an ARM chip run PS on a modern M-series Mac. It blows the x86 out of the water.

@L

Context is clear – software is tied to the hardware platform through operating system.

Heavy Photoshop users probably prefer fruity platforms not Windoze.

Continuation of x86 has allowed people to get stuff done, instead of having to port everything to new architecture every few years.

Apple seems to manage change well.

Apple controls the hardware and software. Mac OS makes up 16-19% of the desktop market. These two factors allow Apple to move to a different architecture far more easily than the 71-73% Windows, and 2.1-2.4% Linux users who have to coordinate everything between OS OEM, hardware OEM, hardware retailer, and all the software application creators large and small.

Even with Apple’s closed system they struggled going from PPC to X86. It was not clean, it was just a long time ago and people forget the pain. They did much better this time going to ARM, but there have been people that had to wait several years to move to new hardware.

Going from PPC to x86 was cake compared to when Apple went from 68K to PPC.

They were still running emulated 68K code in the network stack and the file system two major OS releases later. The MacIdiots never stopped talking shit about how great Apple was. I suppose they had time, constantly waiting for their machines to reboot.

Apple has never written a good OS. They simply bought Next’s after failing for more than a decade. MacOS8 was supposed to be preemptive/protected memory, but what eventually shipped as 8 was slightly warmed over 7. Worse than DOS.

Tell that to the people whose $27,000 cheese grater is an expensive doorstop in a couple of years.

Not as well as one would have us believe.

Because Apple has like 3 programs they need to update because no one bothers writing software for them

I totally agree

Are you seriously wondering what the point of making a more efficient ISA is, or do you not understand the difference between arguments and opinions?

He skipped the most important part. Our BIOS, EFI, and schedulers are all built around the x86 8 bit limits. This is why Macs are faster with their custom M series chips vs the Intel chips they replaced, despite being less capable in specs.

Your computer still has to obey IRQ tables because of x86, instead of using a modern scheduler.

Uhm, what? Since when does modern UEFI run in real mode? LoL, have you ever even looked at bootloader code?

I think you’ve already figured out it’s not literal death being advocated for here, ISAs aren’t biological and aren’t alive, so for one to “die” you already have to be searching for some figurative, metaphorical meaning.

Congratulations on finding a nonsensical one and objecting to that, but I don’t see how it’s productive. OP was pretty clearly using it as shorthand for something like “stop feeding it attention, stop adopting it by default, as a given; its implementations are already spending significant resources that could be put to better use and that’s only going to get worse.”

That’s VERY shallow. ISA is at the foundation of the problem, but it’s about much more than just ISA.

PC platform is full of cruft everywhere.

um, title seems extra harsh, even for Hackaday clickbait.

This 100%

It’s time for windows to die as well, but unfortunately the fact that it would be right does not make it any more forthcoming.

The problem was never really Windows, the problem has always been Microsoft. Sadly, we missed the chance to escape Microsoft’s evil when the ruling that they are a monopoly was overturned.

Yup, because they’ve been taking pages straight out of the MSFT playbook. Browser embedding, anyone?

“it is the single largest OS used in the business world.”

Only for desktop/laptop computers. When it comes to servers and embedded devices, Linux dominates. You may not know it but Linux runs on most embedded devices and they are absolutely everywhere.

Indeed. Visit the server room of a multinational bank, and it’s all Red Hat Linux boxes, which have quickly replaced other Unixes.

Oh, and even desktop Linux has hit 4% Ref: https://arstechnica.com/gadgets/2024/03/linux-continues-growing-market-share-reaches-4-of-desktops/

Linux is not a UNIX. It never has been and it never will be. Calling Linux a UNIX Is like calling Windows with Cygwin a UNIX. It’s just ignorant and wrong.

Not a single person has ever said a word or even known that the spreadsheet/document/whatever I gave them was done on linux, in Libreoffice.

Teams is stupid, and Outlook is simply email, which anything can do. Next absurd irrelevant argument why “Windows with all its spying is totally the best guiz”?

The only current *slightly* valid argument is video game compatibility, but Steam’s Proton is quickly eating that away.

I see this point raised often by triumphant Linux evangelists but why should anyone making a choice about which OS to run on a personal computer care?

It’s like saying I should stop using a standard oven for home baking because industrial bakeries use an enormous conveyor oven. I don’t want the complications and wouldn’t benefit from those features.

You missed the point entirely because I was merely pointing out what businesses use since the parent post overlooked it. You can keep using Windows 95 for all I care.

Computer operating systems aren’t ovens. Linux is perfectly user-friendly as is. It’s possible to have one OS that’s good for basically everything and that’s what Linux is.

Lots of stupid people do other stupid things too. Just because a ton of people do it doesn’t mean it is right or good.

I don’t trust the business world at all. Do you?

Despite the architecture’s flaws, from a preservation standpoint x86 is very important. Software compiled and written for x86 is a vast majority of historical software vs other cpu architectures. Aside from console emulation, I use wine and dosbox as often as I do modern software.

It is not imperative that the CPU hardware is preserved though, because they can be emulated.

Not the cpu architecture itself, but its entire history and infrastructure which contains just as much value. This is why I’m running old x86 executables mainly on x64 cpus, even though arm is an option it’s just not fast enough. And it is sort of helpful for emulation purposes: preserving the knowledge base for x86 requires access to the physical hardware. Much in the manner that Near debugged BSNES code side by side a real SNES. Undocumented features and bugs could be discovered in this way, making the emulator more cycle accurate.

The suggestion isn’t that your x86 hardware be taken away. The desire is for new processors to be ARM or RISC-V, but just like with your BSNES emulator running alongside a SNES, your Bx86 emulator could run alongside an x86.

“It is not imperative that the CPU hardware is preserved though, because they can be emulated.”

Yeah, great. This solution sounds so easy, but is it?

The problem with emulation though is that most emulation projects are being done by hobbyists, without support from industry.

They’re doing great, but these individuals don’t have access to the confidential documents of CPU manufacturers.

So they have to figure out things through trial and error.

To give an idea, let’s have a look at the history of emulation (SNES in particular).

It took ages to get accurate emulation done.

Yes, there’s PCem/86Box, but compatibility issues still exist and emulation isn’t fully complete (tops out at Pentium II).

That’s not noticeable for the layman running Windows 98SE to play CS 1.6 or UT, maybe, but once niche software for special applications comes into play, it might.

x86 emulation, with all those SIMD extensions and an accurate 80-bit FPU, isn’t trivial.

Moving the responsibility over to hobbyist programmers isn’t the solution.

It’s the duty of the PC industry to maintain backwards compatibility, rather.

In this day and age, it’s important that we can continue to use our existing information from the past decades.

Money thinking mustn’t be allowed to get in the way of preserving our digital legacy.

It’s not just about efficiency, but also about having future replacement parts for our infrastructure.

A working digital infrastructure is as important as having clean tap water.

Even if we’re going for a replacement technology eventually, the deployment takes years if not decades.

It’s not feasible to replace software and hardware in the time frame that intel imagines.

Also, what about the replacement technology (ARM, RISC-V etc) – how long will it stay compatible? How will it compare to x86 here?

If x86 no longer truly is x86, in all its entirety, then it has lost its way and purpose. IMHO.
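On the 80-bit FPU point above, here is a minimal C sketch of why an emulator cannot simply map x87 values onto host doubles. It assumes the host `double` is IEEE 754 binary64 with a 53-bit significand, while the x87 extended format carries 64 bits. The function names are made up for illustration, and whether `long double` is the 80-bit format at all depends on the compiler and target.

```c
#include <float.h>
#include <stdint.h>

/* 2^53 + 1: the smallest positive integer a 53-bit significand cannot hold. */
#define ODD_ABOVE_2_53 9007199254740993ULL

/* Returns 1 if a host double keeps the value exactly, 0 if it gets rounded. */
int double_keeps_value(void) {
    volatile double d = (double)ODD_ABOVE_2_53; /* volatile forces a real store */
    return (uint64_t)d == ODD_ABOVE_2_53;
}

/* Returns 1 if this platform's long double keeps the value exactly.
   That is the case when long double has at least 54 significand bits. */
int long_double_keeps_value(void) {
    volatile long double ld = (long double)ODD_ABOVE_2_53;
    return (uint64_t)ld == ODD_ABOVE_2_53;
}

/* Significand width of long double on this platform
   (64 for the x87 extended format, 53 where long double is just double). */
int long_double_mantissa_bits(void) {
    return LDBL_MANT_DIG;
}
```

On a typical x86-64 Linux toolchain `long_double_mantissa_bits()` reports 64. Where `long double` is no wider than `double`, an emulator that wants bit-accurate x87 results has to do the extended-precision arithmetic in software.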

Well while wine is not an emulator (™), DOSBox is !

Should we point out that the software we use for designing and creating these microchips all run on Windows on x86 CPUs? Yes, even Apple’s M chips are designed on Windows machines running on x86 architecture.

Source? LoL

Somewhat relevant: x86S (where the “S” stands for Savings. Wait, no, Simplified.) is doing away with real mode.

https://www.intel.com/content/www/us/en/developer/articles/technical/envisioning-future-simplified-architecture.html

Thanks for pointing to this.

i’ll just leave this here.

https://en.wikipedia.org/wiki/Intel_80376

We’ll most likely end up with this in the near future since no one is using real mode anymore.

Maybe time to keep some old cpus around for good old games !

https://www.phoronix.com/news/Intel-X86-S-64-bit-Only

VMs exist.

Finally someone brought that up as I read through this flame war. With x86S and AVX10 there is some short-term relief in the divergence field.

https://m.youtube.com/watch?v=C_CJn1ZsTJc

“Somewhat relevant: x86S (where the “S” stands for Savings. Wait, no, Simplified.) is doing away with real mode.”

Ah, okay. I thought S stands for Stupid. Or Sexy. No big difference, maybe. 😂

No.

Bring back z80 instructions and everything back to 8008 so that future chips can be truly universal instead.

Grrrr……No Bring back the 6502!!! SOOOO much better. (See us gray-hairs did this same thing when we were your age).

Of those *nix boxes mentioned above, at those mega corps and government data centers, how many are running virtual/emulated S/360 or VAX/PDP systems? We are still seeing job openings for COBOL and FORTRAN programmers, so there is a hint.

ISAs come and go; we all need to get over it. Just make sure you have a solid emulator that runs on the new architecture at full speed before you migrate away. Yes, Apple’s move from PPC to x86 was rough. However, when Xbox did the move in reverse they had minimal issues, as Microsoft had experience from their “Virtual PC” that worked fairly well on the PPC vintage Macs.

Nah. 65x is the other side. Apple needs to support that plus PowerPC in their new chip while Intel supports 8008 on up. We’ll just forget about that whole period that Macs ran Intel chips. See how it works now?

Of course, go far back enough and they do converge. Both chips should also function as a 555!

“Grrrr……No Bring back the 6502!!! SOOOO much better. (See us gray-hairs did this same thing when we were your age).”

Forget 6502. The 65816 was waaay better, I think.

It allowed 16-Bit i/o and access of up to 16 MB.

It also supported segments, just like x86! 😁

“No.

Bring back z80 instructions and everything back to 8008 so that future chips can be truly universal instead.”

Actually, the NEC V20 (8088 pinout) and V30 (8086 pinout) had an i8080 emulation mode.

So your demand isn’t unrealistic, at all.

We already had been there.

In an ideal world, the leading x86 manufacturers of the 386/486 days (Intel, AMD, C&T, Cyrix/TI) would have adopted that i8080 emulation mode.

It’s not Z80 yet, but close.

I’ve read articles like this since the 90s. As you point out, x86 CPUs translate their instructions, and in effect, if you look at die space, that’s not a huge part of the design. x86 code can also be smaller because of its design, meaning you need less instruction cache, which is for sure large and power hungry.

I’m not arguing that x86 is better (I think it’s kinda dumb and random personally). I’m just saying it doesn’t matter and these articles are like arguing that Navaho is a better language than Russian.

I’m sure one day another isa will beat out x86 be it some kind of arm or mips or risc-v. But the reasons will have little to do with the actual instructions and more to do with implementation and business things like availability and licensing.

“I’ve read articles like this since the 90s.”

Also those in Byte Magazine, by any chance? 😃

They essentially said that GUIs like Windows and Macs don’t use much “math”, but rely heavily on making API calls (file open dialog, draw a window, text i/o, mouse events etc).

Things that can be emulated rather quick with little overhead.

The code blocks involved can also be pre-compiled (native DLLs or dynarec).

Products like Insignia SoftWindows made use of these tricks.

Products like databases, DTP software, terminal emulators (VT-52, CEPT, VT-100) etc. could be run at near native speed that way.

In a similar way, Windows 11 for ARM can easily run x86 and x64 user mode code (Win32/64 applications).

>RISC-V, being as free as it is, is also becoming the architecture of choice for today’s computer science classes, and this will only make it more popular over time. Why? Because of its simplicity.

Translation: Just dumb everything down for the new kids and corral them with ARM-only architecture. Apple says so!

MIPS was also an architecture largely used in education and look at its current prominence. IMHO articles like this completely miss the point. A computer is not an ISA.

Heck, a computer isn’t even just a collection of CPU, RAM, and peripherals. A computer is a collection of hardware and IP where fundamental choices are being made about how responsibility will be divided in an ecosystem.

I pee on Imaginary Property

Eh? RISC-V isn’t Arm.

Even if RISC-V were Arm, I would gladly reduce the cognitive load on “the new kids”. Complicated isn’t better. This just sounds like “I struggled so you should have to as well” nonsense.

” x86 architecture […] has its roots in Intel’s early 8086 processor.”

Nope. It originates from Datapoint 2200 personal computer built using TTL chips.

> ARM

Yes, let’s turn desktops into larger smartphones

Linux has been running happily on a bunch of different architectures for a long time now. If you take for example Debian (Which is the root of many derived distributions, including Ubuntu and Mint), then it also runs on several ARM variants, Mips, PowerPC, S390x and more.

https://www.debian.org/ports/index.en.html

Won’t give in until there’s overclocking, adjustable voltage, and standardized ATX interfaces with UEFI. Bugger off here SoC shill

Discussing the merits of CISC or RISC is meaningless today, CISC won. The complexity of an x86 is partially due to hardware adapting to poorly written software that needs to be accelerated, and it does a great job at that. In a perfect world modern cpu would behave closer to modern gpus, but parallel computing is sadly not well supported by most programming languages or execution environments (browsers).

“CISC won”. I suppose you wrote that from your vantage point at the end of time. We mere mortals who have to live our lives will continue to debate the merits of various practices whose outcomes are not yet fixed.

Parallel computing just doesn’t neatly fit a lot of problem spaces. Your “In a perfect world modern cpu would behave closer to modern gpus” is analogous to wandering around with a square hole lamenting the existence of all the sloppy round pegs. It’s almost as though CPUs and GPUs exist to solve different types of problems.

Until Apple brought us the new M-series ARM chips I might have agreed but they breathed new life into the old RISC vs CISC thing

Cool, now try to run any non-apple-optimized workload on your M chips. See how that works for you.

I’m not sure that 8086 real mode is that much of a barrier to optimization. It’s not like you can run it at the same time, and switching back into it requires some unholy magic that isn’t expected to preserve much. It can basically just be emulated at this point.

The direct memory access with no memory protection isn’t such a big deal, basically all systems start up with a flat memory map and you have to set up protection, including RISC-V

Decode complexity is a real issue though

Good point. The original 8086 had about 29,000 transistors, way fewer than the 275,000 the 80386 had.

In comparison to modern x86 CPUs, that’s nothing.

The tiniest caches found inside modern x86 CPUs use way more silicon.

In my opinion, the removal of 8086 compatibility, v86 and the dumbing down of the ring scheme (ring 3 and x64 ring 0) has other reasons.

To me, it’s more like marketing, not to say propaganda.

It looks like a justification to ship an inferior product/design that’s easier to maintain/produce for the manufacturer.

The manufacturer doesn’t do it for us, but solely to its own advantage.

It’s like those shiny big packages in the grocery store that contain mostly air.

They look nice and worthy at first glance, but a closer look at the actual weight/content says otherwise sometimes.

Windows RT and Windows 10 S mode weren’t very successful, either.

Users don’t like crippled versions of soft- and hardware.

Let’s just look at how often home users asked for Windows XP Professional or Windows 7 Professional not that long ago.

It seems a little unfair to lump speculative execution in as though it’s an x86 sin. It is a thing that basically any remotely recent x86 does, and it does leave you open to all the cool side channel attacks; but it’s also something that basically everything else that’s focused on performance rather than pure die area or absolute minimum power draw also does; and x86 doesn’t have to do it if you don’t mind performance sucking.

Even PowerPC did speculative execution – so quite right not to lump it in as an x86 sin.

For most common folks, as long as it looks and feels like Windows, and runs the apps they like, they really do not care too much about what is going on under the hood. That is more about politics and marketing. When x86 stops being generationally faster, they are going to have to do something if they keep wanting to see new hardware.

Also, a lot of things obviously start with scientific people or hardcore gamers. For years the option was to move to a different hardware platform. It is sad that DEC got caught up in the whirlwind of the Compaq to HP thing, as the Alpha chip really looked like a screamer, had a Windows port, and a thumper to run Windows apps. It was like two of the three moons of Venus had aligned, but the third one did not and things just fizzled.

The reason why X86 is prevalent is the math instructions it can do. There’s a reason why it works on desktop systems and why RISC is a poor choice for desktops and workstations. RISC has different math registers. When you have more instructions like X86/CISC (complex instruction set computing), you have more complexity in mathematical calculations you can do. We’ve had RISC desktops before, and none of them have successfully done the workload of an X86 CISC.

RISC CPUs like RISC-V, ARM, etc. are for niche use cases specific to their platform and use case. Mainly battery life for ARM. You don’t just solve complex equations on an RISC processor for high level computing. You simply can’t. If you look at common c libraries like glibc, bsd-libc, visualc, or even musl, you will quickly see where X86 instruction sets allow for complex calculations in computational languages to perform better multitasking and utilize SIMD instructions to leverage capabilities better in the same space.

If you try to push a RISC to perform the same calculations as a CISC, the power usage and thermal energy skyrocket nullifying the purpose of RISC architecture. If you’ve ever used any high end phone like an ASUS ROG, RedMagic, Motorola, or Samsung, when you push those phones into the 3.0 GHz range in continual usage, they get hot quickly, and it’s not the battery, it’s the SoC.

So does X86 need to die? No. And it likely won’t ever. It’ll be expanded into x86_128 in due time when complexity in calculations and the need for longer hashing algorithms demand it. It’s an instruction set that is able to expand and evolve itself without the need for dropping a subset entirely.

There’s RISC life beyond ARM/RISC-V

“You don’t just solve complex equations on an RISC processor for high level computing. You simply can’t. ”

patently, provably silly and false. for the entirety of the 80s/90s/early 00s high performance workstations, servers, and all supercomputers were based on anything BUT x86. go read the TOP500 wiki article. it took x86 practically 20+ years to break into the list (or see any real scientific or professional use) because it was absolutely useless for anything large-scale or complex, and is only there now because the other companies bowed out. POWER/SPARC/PA-RISC/MIPS/Alpha were at the heart of every system that wasn’t designed as cheap consumer trash. cheap consumer trash gave x86 its ubiquity, volume, and price benefits that nobody else could keep up with.

What? On an ARM Cortex-M4 with FPU, you can do:

vmov.f32 s1, s0

vsqrt.f32 s1, s1

vmul.f32 s2, s0, s1

3 assembler instructions to run a C operation like “a *= sqrtf(a)”, with the s registers being the single-precision floating-point registers.

It’s not the instruction set. x86 processors are designed for PCs and servers, and ARM/RISC-V processors are designed for mobile and embedded. The only reason they match this way is because they historically established these niches. The processor architects don’t want a phone that crunches numbers like a supercomputer with a very short battery life. There are ARM processors for supercomputers too like the Fujitsu A64FX. The Chinese Loongson processors which appeared in supercomputers are MIPS-based.

The Loongson processors suck ass though. Although admittedly that’s probably not because they’re MIPS-based.

Cinebench 2024 on M4 Max disagrees

Arguments based on “simplicity” or “wasted die area/heat” are dead ends. The L1 and L2 caches of a modern processor (ARM, AMD64, RISC-V, etc.) are several times the size of the traditional CPU. The complex branch predictors far outstrip the size of the incremental sizes of microcode or logic to implement the older x86 instructions. In short, transistors are free and a few transistors for rare instructions simply cost nearly nothing in power or area. (To be fair, design validation is a cost, but the tests already exist, so that is a sunk cost.)

Any meaningful conversation will revolve around the performance and power needs of the intended application. When a usage model needs performance, designs will tend to select ARM64; when a usage model needs power conservation, designs will tend to select ARM; and when a usage model is sensitive to the cost of a license, designs will tend to select RISC-V or older ARM cores. A conversation that waves its hands about nonspecific costs is just fanboi ranting.

If you like hard numbers, look up the U-Wisconsin paper from about a decade ago. In their analysis, they concluded that the overhead of the miscellaneous AMD64 instructions was about 5% (from memory). In today’s world of 5-4-3-2nm, that amounts to a fly speck, and even the leakage due to unused transistors is trivial. And the performance lag of RISC-V means that those usage models must be highly-very-really-double-plus sensitive to cost (which RISC-V chips are not demonstrating in the marketplace).

In short, if you are concerned about a few thousand transistors needed for miscellaneous AMD64 instructions, then your usage model cannot tolerate large L2 caches (and therefore does not need much in performance).

If someone wants to drag AVX into the conversation, then they need to consider GPUs as the alternatives rather than alternative CPUs. Modern GPUs are *massive* and *power-hungry* in the extreme, especially when you consider the recent incursions of stacked memory, so that leaves debates about 5% transistor costs in the weeds and dust.

P.S. Sorry about the rant post.

You wrote a lot of what I wanted to say; I think you did mean to say AMD64 instead of “ARM64” that first time?

Basically, and this is just my mental picture of the situation… if you start with a simple CPU with a minimal set of operations, then you speed it up as far as you can go without adding instructions, either you add so many cores in parallel that what you have actually built resembles a GPU, or you will eventually run out of things to do with die space except for adding cache I guess, once things switch as fast as they can. If you don’t want super high performance, great! You need less silicon and your tiny processor may consume less power when things aren’t sized for the silly switching speeds I previously alluded to.

If you *do* want more performance, especially on average, maybe you decide to look at typical use cases and bolt on some acceleration for that. If nothing else, you might decide to add hardware encoders and decoders for video, because you figure that’ll get used often enough to help. Maybe some stuff that’s good for compression, cryptography, complex math, manipulating vectors, matrices, etc. Maybe you look at how people like to be able to virtualize things so they can safely run something else while they are running their own code, so you add that. You notice that a lot of performance bottlenecks are in moving data around, so you add some memory related functions to let code get fed its data better. Maybe you look at how software is structured and coded to see what you could improve, and you realize that coding ahead of time to make the best use of resources is great and all, but you can probably whip something up on the die that will help optimize the code after the fact, or even address annoying cases where the code really can’t do anything to avoid wasting resources, but the processor can. Maybe you sometimes have the ability to compute the next step already, so you let the processor decide to do things out of order and not tell anyone. I can’t be terribly specific with x86, as it’s a bit past how far I went into this subject, but hopefully that rambling gives some idea what the chain of rationalizations could be.

Agreed. I was about to write the same.

Good points and info. The only thing I’d add is that the current serious RISC alternative isn’t RISC-V, it’s ARM, and ARM chips have arguably caught up for many use cases.

ARM stands for “Acorn Risc Machine”

It is old and proprietary.

RISC-V is the open-source modern-day replacement.

David Horn – old-timer

In a way this reminds me of the ‘Rust’ programming Language argument. Got to get rid of ‘C/C++’ . Rust is ‘the’ language of today. It’s ‘modern’ :rolleyes: . Even though, Rust is just a very minor language in the scheme of things.

I don’t see a ‘need to die’. Let the market take care of what ‘dies’ and what ‘lives’. Having choices is a good thing. If a new ‘whiz-bang’ processor comes out that screams past the x86 … It will take market share.

As for Windoze applications, it seems they have ‘mostly’ both feet in the Windows world which is mostly written for the x86 architecture (mostly). To me, this is where Linux has a great edge. You can run Linux on SBCs, desktops, servers, etc. — all with different hardware underneath and all the applications will run just fine within limitations of the hardware of course. Ie. LibreOffice runs across the board (and in this case Windows also), or gcc, or KiCad, or…. recompile source on new machine and run.

The fact that most Linux stuff can still be built from source goes a long way for porting to a new architecture, but for certain prominent workloads that take forever to build from source (ex. pytorch) and have dependencies that are hard to set up for a build, there’s still a lot of inertia that needs to be overcome (ex. binary builds for non-x86 architectures are lacking in certain large projects). It’s certainly getting better though. ARM binaries for Linux are quite common, which greatly reduces the need to put up with building something as the only option.

I don’t think you’ve looked at many recent Windows programs. Switching to ARM (32 or 64) builds involves adding a platform target in visual studio, even in C++ it usually doesn’t require that you conditionalize any code. .NET pretty much never does. There’s also an option to build arm64 binaries (arm64ec I think) that can be linked to static or dynamic x86 libraries which are run using some fast rosetta-like binary translation intended for programs with parts in assembly language or that link to libraries with no port so it can be done in stages.

Most linux programs will build for Windows as well, although how well they run usually depends on what windowing system they built upon. Usually QT is the fastest if it’s a new enough version, and KDE-based stuff like Krita is reasonably fast (but absolutely crap-lousy in speed when loading a large image from disk because it does single threaded conversion to its internal linear srgb colorspace and didn’t bother implementing color management via the insanely fast system built into Windows).

Linux stuff seems to be harmed the most by Python if it uses it for internal scripting, which from what I understand is only because of the GIL preventing the program from accessing it with more than one thread… so you call out to python and it hangs the GUI until it’s done if the python was supposed to update something, which it usually is. Thankfully python is finally removing that abomination, now they just need to remove the Python parts of their huge collection of mostly C++ modules and they’ll really have a language going. ;-)

Linux command line tools are usually very easy to build as long as they didn’t do something stupid like require pointless text templating for header files. Then they’re effectively impossible since I won’t install msys2 / cygwin / mingw32 on any of my machines since they’re annoying hacks that took more effort than just conditionalizing code to use windows function equivalents for threading and system calls. If Perl was more or less trivially buildable with visual studio on Windows almost 20 years ago (and surprisingly very few modules failed to build), there’s basically no excuse for anything else. Perl is not only more complicated than every other command line utility combined, it’s usually a single module away from BEING every other command line utility. Including Perl…

The downside is that recent Visual Studio produces slow and bloated code.

Just for fun, compare this to pure i386 and Win32 applications compiled in Visual C++ 2.0, Borland C++ or Delphi 3.

These oldies have far fewer dependencies, too.

They don’t have to go through ~5 layers of different frameworks.

Even using third-party frameworks like SDL is a cleaner/more straightforward approach than current VS.

On the bright side, such frameworks can support vintage compilers, too.

Linux (the Unix offshoot) has the same problem Unix did: people don’t like its interface. The fact that it runs on the same hardware attests to that; people just like MS Windows better, and it has more applications written for it. Yes, I know Linux has things like Libre etc., and they’re FREE, yet Office is preferred at its premium. Same goes for Linux itself: people buying used hardware with NO OS will, by a vast majority, install Windows on it rather than Linux or any other OS; in fact it’s like 8-9 of every 10. And that comparison can be made for x86 vs everyone else as well in the desktop/laptop world of things. Hell, I gave my nephew a laptop (12800H) where I installed Ubuntu and added Libre, VLC, GIMP, and Steam. The very first thing he did after trying Ubuntu for a few days was install Windows, because he felt gaming was better, because he couldn’t play everything he had, and he said Libre was OK but Office is better. You can geek it out here (and I have seen these arguments since the 386 and Windows 3.0), but the end users have decisively shown year in and year out what they think is best, and that’s even true of those who buy used hardware with no OS installed, which just makes that whole OEM argument moot in the REAL world. ;-)

This is like arguing we should switch to the metric system. We probably should–but we won’t. The social problems are harder than the engineering problems.

Ehhh… this is like arguing that a few hold outs still using PDP-8s in North America means the world can’t change. The world is using the metric system. We can change processor architecture.

Any architecture that wants to be able to take over from x86 must include some sort of emulator, almost as fast as native CPU use, which lets one still run precompiled x86 .exe file binaries under Wine and/or in virtual machines. If an alternative CPU architecture can’t support the legacy precompiled binary software people actually want to use, then no-one will want that architecture. Until I can: install a normal Linux distro on some fancy new architecture, and download all the usual Linux tools without having to recompile them myself, and run legacy Windows .exe x86 binaries under Wine natively within my Linux OS, and run existing virtual machines (originally made on x86 computers) from within this Linux on-not-x86 environment… I’ll be sticking with x86.

I’ve worked with SPARC emulation on x86. While the big-endian vs little-endian difference doesn’t help emulation performance, the latest IA processor still isn’t able to emulate the most recent SPARC processor, even though the most recent SPARC processor came out in 2017. Some operations are fast, but some are slow. It doesn’t help having to emulate the OS as well; this impacts the speed of I/O operations. For old software with a small user base, it is not financially viable to port to another OS, let alone another processor.
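To make the endianness cost concrete, here is a minimal C sketch of what an emulator has to do for every multi-byte access to a big-endian guest's memory (as with SPARC) on a little-endian host. The helper names and the flat `mem` array are hypothetical, not taken from any particular emulator.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Load a 32-bit word from big-endian guest memory.
   Assembling the value byte by byte gives the same result on any host. */
uint32_t guest_load_be32(const uint8_t *mem, size_t mem_size, size_t addr) {
    assert(mem_size >= 4 && addr <= mem_size - 4); /* stay inside guest RAM */
    return ((uint32_t)mem[addr]     << 24) |
           ((uint32_t)mem[addr + 1] << 16) |
           ((uint32_t)mem[addr + 2] << 8)  |
            (uint32_t)mem[addr + 3];
}

/* Store a 32-bit word into big-endian guest memory, most significant byte first. */
void guest_store_be32(uint8_t *mem, size_t mem_size, size_t addr, uint32_t value) {
    assert(mem_size >= 4 && addr <= mem_size - 4);
    mem[addr]     = (uint8_t)(value >> 24);
    mem[addr + 1] = (uint8_t)(value >> 16);
    mem[addr + 2] = (uint8_t)(value >> 8);
    mem[addr + 3] = (uint8_t)value;
}
```

Each swap is cheap on its own, and compilers can often turn this pattern into a single byte-swap instruction, but it sits on the path of every guest load and store, which is one reason the byte-order mismatch never helps.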

I’ll stick with x86_64 as well. Not for the applications, as the Linux applications I use run across any of the CPU architectures I use. Windows free around here, so no worry about .exe files. Anyway, the reason for me is VMs… With my 12-core, 24-thread CPU and 64GB of RAM I can run several VMs and applications all at the same time. And the VMs are snappy. Can’t do the same on my ARM SBC RPI-5s. If I was not a power user, an RPI-5 8GB class machine would work fine (email, browsing, LibreOffice, Chess, some video, etc.). I’d miss the flexibility too (amount of RAM, SSDs, video cards, etc.). For the time being the X86_64 just ‘wins’: relatively cheap, and lots of general (as opposed to special purpose) performance.

“Any architecture that wants to be able to take over from x86 must include some sort of emulator [..]”

True. NexGen and Transmeta tried to provide this aspect, about 30 years ago already.

They were flexible and powerful enough to “simulate” x86 on a silicon level and adapt to changes in the instruction set.

I wish they were still with us.

“Any architecture that wants to be able to take over from x86 must include some sort of emulator [..]”

according to the marketing, digital’s fx!32 could run i386 corel draw almost twice as fast as a pentium pro – on windows nt4 alpha.

the fine print specifies that the comparison was between a pentium pro 200mhz vs an alpha 21164a 500mhz, the rest of the system had “similar specs”.

as was common for dec/digital they had the technical talent and great execution, but no sales talent.

point being: this problem was solved 25 years ago. powerpc, alpha & mips all had a windows nt4 version, but strangely enough i386 with its “inferior” cisc architecture still came out on top. i blame sun for the good enough mindset with their “sunos might crash but at least it doesn’t take 30minutes to boot like your lisp machine” :-D

that was also ibm’s sales pitch to me with lpar: performance overhead is 0.5-2% maximum and your aix can boot in 120 seconds.

i did love the concept of transmeta’s take, in particular that they tried to get it working in the other direction: by default they translate i386 to their own isa, but could bypass the whole translation layer if they detected native code. emphasis on “tried”, iirc they never got that beyond a proof of concept.

i guess you could compare it to a hardware version of how llvm works: language decoder -> intermediate code -> machine code

Us NexGen ers are still around although hiding.

CISC has its place…but to have a discussion about this, you will have needed to have, at some point, provided a hand optimized assembly version of some code that beat the compiler by a large margin for multiple hardware architectures. Otherwise, we’d be speaking different languages….and the background is far too large for a comment! You may be able to get the gist of it by looking up some hand optimized routines for your favorite architecture and see the reasons for the optimizations provided.

The x86 of the future will be made of transparent aluminum.

But would it still run Doom?

To point out a mistake in the article:

“In RISC architectures like MIPS, ARM, or RISC-V, the implementation of instructions is all hardware; there are dedicated logic gates for running certain instructions. The 8086 also started this way”

The original 8086 very definitely had microcode. http://www.righto.com/2022/11/how-8086-processors-microcode-engine.html?m=1 Furthermore, it is an implementation detail so there’s no reason someone couldn’t make a microcoded RISC-V core.

“The complexities of superscalar, speculative, and out-of-order execution are heavy burdens on an instruction set that is already very complex by definition”

For the sake of clarity, many ARM and RISC-V cores do exactly the same things, they just have a less complex instruction set to work with.

To add to my previous post (that is awaiting moderation), some ARM cores have micro-instructions much like a microcoded core, just implemented differently.
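For anyone unsure what "microcoded" means in the posts above, here is a toy C sketch: each architectural instruction is a row in a small ROM of micro-operations, and the core just steps through the row. The instruction names, micro-ops, and ROM layout are invented for illustration and do not reflect the 8086's actual microcode format.

```c
#include <assert.h>
#include <stdint.h>

#define MEM_WORDS 16

/* Micro-operations: the small steps the hardware actually performs. */
typedef enum { UOP_END, UOP_READ_MEM, UOP_ADD_ACC, UOP_WRITE_MEM, UOP_TMP_TO_ACC } micro_op;

/* Architectural ("macro") instructions visible to the programmer. */
typedef enum { INSN_LOAD, INSN_ADD_TO_MEM, INSN_COUNT } macro_insn;

/* Microcode ROM: one row of micro-ops per instruction, terminated by UOP_END. */
static const micro_op microcode_rom[INSN_COUNT][4] = {
    [INSN_LOAD]       = { UOP_READ_MEM, UOP_TMP_TO_ACC, UOP_END },            /* acc = mem[addr]  */
    [INSN_ADD_TO_MEM] = { UOP_READ_MEM, UOP_ADD_ACC, UOP_WRITE_MEM, UOP_END } /* mem[addr] += acc */
};

typedef struct {
    int32_t acc;            /* programmer-visible accumulator */
    int32_t tmp;            /* internal latch, invisible to the programmer */
    int32_t mem[MEM_WORDS];
} toy_cpu;

/* Execute one instruction by stepping through its microcode row.
   Returns the number of micro-ops that were executed. */
int execute(toy_cpu *cpu, macro_insn insn, unsigned addr) {
    assert(insn < INSN_COUNT && addr < MEM_WORDS);
    int steps = 0;
    for (const micro_op *u = microcode_rom[insn]; *u != UOP_END; u++, steps++) {
        switch (*u) {
        case UOP_READ_MEM:   cpu->tmp = cpu->mem[addr]; break;
        case UOP_ADD_ACC:    cpu->tmp += cpu->acc;      break;
        case UOP_WRITE_MEM:  cpu->mem[addr] = cpu->tmp; break;
        case UOP_TMP_TO_ACC: cpu->acc = cpu->tmp;       break;
        case UOP_END:        break;
        }
    }
    return steps;
}
```

Nothing in this structure depends on the instruction set being CISC or RISC; the ROM rows would simply be shorter for a simpler ISA, which is the sense in which microcode is an implementation detail.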

That was a great article! Enjoyed

@Julian Scheffers said: “I think it’s time to put a lot of the legacy of the 8086 to rest, and let the modern processors run free.”

M-kay… Just as long as the big tall mountain of still useful legacy x86 code out here still runs fine in emulation.

Some day, we will appreciate the architecture of 80085.

Some day, we will appreciate the architecture of 809386.

What was the 4004, chopped liver? :-)

4004 was a completely different, unrelated architecture.

What happens when we ask an LLM to craft a future x86 processor and contemplating every instruction becomes trivial?

Yeah we went through 65 years of improvements.

Never expect it to get simpler. It never has.

That’s very unlikely to happen. x86 unified the CPU world as we know it, and now history is repeating itself, with CPUs whose code isn’t compatible with one another (ARM).

Actually x86 did die several years ago. Our current reality is just a speculative branch that will be tidied up when the behemoth architecture finally notices.

Adam Savage, is that you?

the architectural arguments presented are a little shallow. CISC didn’t win, RISC didn’t win, everything is somewhere in between and everything has tradeoffs.

that said, yes, x86 DOES need to die – because it is *deeply* spiritually and aesthetically ugly.

Silly argument! 46 years’ worth of code base is exactly why it sticks around: 46 years of platform adaptation and iteration. Are there better architectures? For sure, and there’s nothing stopping any company from switching to ARM, RISC-V, or a rutabaga if they choose, but software and hardware availability and support are more important to most companies than trying to reinvent the wheel.

Yes this! The whole reason I use x86 is the backwards compatibility. That other cpu architectures might theoretically be better is meaningless if they don’t run the software you want to use.

This is why open source is important. Linux is Linux on any processor and any hardware, and as long as you have a compiler, any software can run. PCI(e) can be used. So… the problem is Microsoft and closed source again.

The “open source is important and closed source is a problem” argument is moot when it comes to CPU architecture. For both Linux and Windows, it would be possible to design a low-level VM with a JIT that translates abstract VM instructions into CPU instructions (with translation caches), where a VM instruction has direct access to memory. Emulation overhead would then be about 1-2% when running user-space applications.

I have a feeling we need to move x86 to a software/microcode stack accelerated by a different arch. Although I think we’re already there, it’s just that the ME and microcode aren’t open. 🤷🏼‍♂️

This might be something like what I was thinking of. Although increasingly onerous anti-copy/anti-cheat seems to be part of the problem.

https://hackaday.com/2024/03/28/hybrid-binaries-on-windows-for-arm-arm64ec-and-arm64x-explained/

The complications of x64 may be worth the backward compatibility afforded in an era where you can fit 32 very complicated out-of-order, register-renaming, micro-op-translating Pentium Pro CPUs in a single square millimeter.

We ended up with x86 not because it was a good architecture, but because it had corporate muscle behind it.

Even the original 8086 was a dog’s breakfast, if we’re going to be honest (the weird 20-bit segmented addressing alone was a source of much hilarity), and the instruction set was typical Intel fare – clunky and lacking any elegance whatsoever.
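For anyone who hasn’t run into the 20-bit segmented addressing mentioned above, here is a minimal sketch of how real-mode addresses are formed: the 16-bit segment is shifted left by 4 bits and added to the 16-bit offset. The function name `physical_address` is made up for illustration, and the wrap at 1 MiB assumes an original 8086 with only 20 address lines.

```python
def physical_address(segment: int, offset: int) -> int:
    """Real-mode 8086 address: (segment << 4) + offset, truncated to 20 bits."""
    if not (0 <= segment <= 0xFFFF and 0 <= offset <= 0xFFFF):
        raise ValueError("segment and offset must each fit in 16 bits")
    # The 8086 has only 20 address lines, so the sum wraps around at 1 MiB.
    return ((segment << 4) + offset) & 0xFFFFF


# Two different segment:offset pairs that point at the same physical byte.
a = physical_address(0x1000, 0x0000)
b = physical_address(0x0FFF, 0x0010)
print(hex(a), hex(b), a == b)
```

Because segments overlap every 16 bytes, many segment:offset pairs alias the same byte, which is a large part of where the hilarity came from.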

By contrast, Motorola’s 68K family was elegant, and ran a LOT faster than x86 until it was sidelined by the corporate muscle behind x86/DOS/Windows.

To keep it going, Intel put a bag on the 8086 to make the 286, then put a bag on the 286 to make the 386, a smaller bag on the 386 to make the 486, another bag on the 486 to make the Pentium, etc. Now, the x86 is a rococo monstrosity that no rational mind starting with a clean sheet would dream up.

The only reason it’s sticking around has already been mentioned. Thanks to being backed by lots of corporate muscle, lots of software was written for it, and replacing that software with rewrites for more rational architectures is going to be too painful and expensive for most people.

As for mathematically intensive code, since the x87 math coprocessors implemented IEEE 754 (and so did Motorola’s 68881/68882), persuading a more rational architecture to do the same shouldn’t be that hard, surely?

“We ended up with x86 not because it was a good architecture, but because it had corporate muscle behind it.”

And I always thought it was because of all those thousands of cool shareware, freeware and public domain programs written by hobbyists that I had gotten on my shovelware (shareware) CDs when I was younger..

This is very immature to say something needs to die. x86 is not going to die until a non-hypocritical ISA shows up. x86 is CISC and proud of it. ARM is CISC but pretends to be RISC and also price gouges with the licensing. Look buddy, SIMD instructions are a CISC feature, not a RISC feature. It was introduced with the Pentium, not with the toy ARM CPUs. RISC-V is very silly too with its 32-bit long instructions instead of fully embracing 64-bit.

I also saw how some people complain about “security”. Skill issue and non-issue because of TPM, UEFI, and other measures. Also some people WANT bare metal access so please shove off.

Chip area is an issue? Grow up. Logic does not make use of chip area. Cache makes use of most of the chip area. Read your history!

SIMD instructions were first used in the ILLIAC IV in 1966, 12 years before the 8086 was released, or 27 years before the Pentium was released. Read your history!

And why is RISC-V “silly” for having 32-bit long instructions? RV64 gets by just fine with 32-bit instructions. There’s only so many bits an instruction needs to do its job, after all. RV64 has 32 64-bit registers, so the source and destination register fields need only 5 bits to encode them (15 bits total for instructions with 2 source registers and 1 destination register). And there’s maybe a few hundred possible operations that need to be done, so like 9 or 10 bits for the operation code should be plenty. And conditional branch instructions (you know, for if/else type of statements) typically need to branch only plus or minus a few kilobytes, so that’s like 12 bits for that field (some of those bits overlap some of the operation bits and/or the source or destination register bits). And unconditional jumps (including subroutine calls) typically happen within a few megabytes, so 21 bits is plenty. You need a longer (absolute) jump than that (which is rare)? Load a register (you have plenty of those to play with) with the target address and jump to it.
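To make the bit budget above concrete, here is a rough sketch that packs one RISC-V R-type instruction (`add x3, x1, x2`) into a 32-bit word. The field layout (funct7, rs2, rs1, funct3, rd, opcode) follows the base ISA’s R-type format, where the operation is spread across opcode, funct3, and funct7 rather than sitting in one 9- or 10-bit field; the helper name `encode_r_type` is just something I made up for this example.

```python
def encode_r_type(funct7: int, rs2: int, rs1: int, funct3: int, rd: int, opcode: int) -> int:
    """Pack the six R-type fields into one 32-bit instruction word."""
    # (value, width in bits, position of the field's lowest bit)
    fields = [
        (funct7, 7, 25),
        (rs2, 5, 20),
        (rs1, 5, 15),
        (funct3, 3, 12),
        (rd, 5, 7),
        (opcode, 7, 0),
    ]
    word = 0
    for value, width, shift in fields:
        if not 0 <= value < (1 << width):
            raise ValueError(f"field value {value} does not fit in {width} bits")
        word |= value << shift
    return word


# add x3, x1, x2: opcode 0110011, funct3 000, funct7 0000000
word = encode_r_type(funct7=0b0000000, rs2=2, rs1=1, funct3=0b000, rd=3, opcode=0b0110011)
print(f"{word:#010x}")
```

Three 5-bit register fields take 15 bits, exactly as described, and the remaining 17 bits are left to select the operation.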

Maybe you meant to say that x86_64 is silly for having 8-, 16-, 24-, 32-, 40-, 48-, 56-, 64-, 72-, 80-, 88-, 96-, 104-, 112-, and 120-bit long instructions?

I know it’s a little more complicated, but if an 8086 has, let’s say, 30,000 transistors, I don’t think it would cost too much, in size and power, to put something like that logic in current multi-billion-transistor chips.

Or emulate it in microcode :D

Other architectures have tried to get around speculative and superscalar execution of code designed for scalar execution. Delay slots, prepare-to-branch instructions, VLIW: nothing helped for general-purpose processors.

All forced into speculative superscalar execution under the weight of backwards binary compatibility. Except it only got uglier, especially for Itanium. Even for ARM it isn’t pretty.

I remember reading this exact argument back in the 90’s, about how the new RISC CPUs would take over from the 8086 and 68000 families. Clearly x86 did NOT fade away, but then Acorn’s RISC Machine became ARM, and in different ways they both became widespread.

68k was a dead end though :(

Brainiacs vs Speed Demons. RISC engines were meant to have an advantage when they could execute any instruction in a single cycle. Unfortunately when the Brainiacs like x86 could execute a complex instruction in a similar instruction time, there was no compelling benefit to RISC.

Should we point out that the software we use for designing and creating these microchips all runs on Windows on x86 CPUs? Yes, even Apple’s M chips are designed on Windows machines running on x86 architecture.

So? If you’re building a ship to escape a dying world, you have to build the ship on the dying world. Doesn’t make the dying world any less dying.

Unironically, “x86 needs to die” articles need to die.

The thing about x86 is that it’s complicated in a complicated way, so proper review of the architecture’s disadvantages and advantages cannot be summed up in a four screen article. Ironically, there are still major errors here in this article, so big that I can see them myself – the microcode section is nonsense. I’m pretty sure modern A-level ARM and IBM Power cores do utilize microcode to some extent, and I’m certain 8086 was already absolutely full of it.

A somewhat uncomfortable fact for the fans and the makers of modern RISC processors is that certain x86 / x86-64 problems are conjoined with optimization opportunities going beyond bypassing the problems themselves. Oh, you don’t want speculative memory accesses? Well those are on ARM too, hope you like your memory bubbles. To reap full efficiency benefits of not dealing with the “arcane things x86 does”, you need to go in a heavily (really heavily, not PC-equivalent heavily) multi-threaded direction instead of individual thread performance. Which is definitely a choice one can make, but it comes with its own problems when it comes to software creation, especially without the harder-on-hardware, softer-on-software TSO memory model that x86 settled on quickly.

Meanwhile, it’s not like people behind the RISC architectures agree on many things beyond having 32-ish base registers, which Intel officially wants to bring to x86-64 anyway. RISC-V’s adjustable design even effectively disagrees with itself on whether instruction coarseness should be static or not, taking ISA encoding efficiency losses both ways. Though personally, I find RISC-V an overall mess approaching parity with x86-64 but without any of the historical justification, so maybe don’t take it from me.

“IBM Power cores do utilize microcode”

Not just to some extent: Power _uses_ microcode, as far as I know (IBM support colleagues often update it). :D

x86 ISA always sucked.

Long live x68000

Complex instructions exist because people want to perform complex operations. x86 keeps the conversion of that complex instruction set to the RISC cores on the CPU die itself. RISC-V/ARM/etc require that conversion to be performed in software (which is ultimately run… on the CPU). You juggle around where and when that conversion needs to occur, but it must occur nonetheless, because a CPU unable to actually perform the operations one needs of it is little more than expensive sand.

Not really …

The “conversions” at compile time in software are static. The conversions at runtime in “hardware” are dynamic. The latter provide yet another opportunity to optimize execution.

And let’s not forget that many of the recent RISC-V CPUs support both compressed instructions, where the most common operations take only 16 bits, and macro-op fusion, where multiple instructions in a specific sequence are combined into a single logical operation inside the CPU. For example, a CPU can recognize the 2 (or 3?) instructions required to load a full 32-bit value into a register as a single macro-op and perform it as a single operation inside the CPU, taking fewer clock cycles than executing each instruction one at a time. (CPU designers and compiler writers are working together to ensure they use the same instruction sequences for macro-ops, otherwise they’ll work but be slower than necessary.) And often compressed instructions and macro-op fusion can work together (a compressed instruction decodes directly to a regular 32-bit uncompressed instruction). Modern x86_64 CPUs do something similar with macro-op fusion, but it’s limited or complicated by the fact that there are so many more combinations of instructions.
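As a rough illustration of the instruction pair being fused here: on RV32, a full 32-bit constant is typically loaded with `lui` (upper 20 bits) followed by `addi` (a sign-extended 12-bit immediate). The sketch below splits a constant into those two immediates. The function names are invented for this example, and it only models the arithmetic, not any particular core’s fusion logic.

```python
def split_for_lui_addi(value: int) -> tuple[int, int]:
    """Split a 32-bit constant into (lui immediate, addi immediate)."""
    value &= 0xFFFFFFFF
    lo = value & 0xFFF
    # addi sign-extends its 12-bit immediate, so values >= 0x800 act as negative.
    if lo >= 0x800:
        lo -= 0x1000
    # Compensate in the upper part so that (hi << 12) + lo gives back the value.
    hi = ((value - lo) >> 12) & 0xFFFFF
    return hi, lo


def rebuild(hi: int, lo: int) -> int:
    """What the lui + addi pair leaves in a 32-bit register."""
    return ((hi << 12) + lo) & 0xFFFFFFFF


hi, lo = split_for_lui_addi(0xDEADBEEF)
print(f"lui  x5, {hi:#x}")
print(f"addi x5, x5, {lo}")
print(hex(rebuild(hi, lo)))
```

A core that fuses the pair can treat it as a single “load 32-bit immediate” operation internally, which is why it matters that compilers emit the sequence in the form the hardware expects.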

The compressed instructions actually bring the average RISC-V code size down to or _smaller_ than the average x86_64 code size (and around the same size as Arm Thumb/Thumb2 code, though RISC-V doesn’t have to switch to and from compressed mode). And if I’m not mistaken, there’s a more recent extension for compressed instructions being worked on that delivers even smaller average code sizes. So CISC doesn’t win on code size anymore, to the dismay of some of its proponents.

And the “complex operations” that psuedonymous mentioned are almost always done with many simple instructions (move this, add that, etc.), even on x86_64. x86_64 does have complex instructions, but they’re niche, and x86_64 has so many different operations that do niche things and carries around the baggage of instructions for all the niche things that Intel and/or AMD thought would be good ideas to add at the time (such is the sad history of how x86 grew to become what it is today).

Y’all are a bunch of geeks! Well, so am I, but this is not primarily a technical problem. It is an economic issue.

On one hand, the x86 architecture has a truly massive amount of both software and hardware that go with it. But note that it is, in some sense, an accident of history that the x86 is the predominant architecture in several markets. Had IBM chosen a Motorola 68K processor for the IBM PC, which they were considering, we’d be having this argument about that architecture. The 68K didn’t have as much baggage as the x86 since Motorola discarded most of the 6800, 6809, etc ISAs and the 68K was a bit more RISC-y, but I have no doubt it would have gone down the same path as the x86.

Now, the other hand. RISC ISAs are much simpler than the x86 ISA. This translates directly into fewer gates, which means smaller dies (the actual silicon part of a chip), which means more dies per silicon wafer. Since silicon wafers of a given size cost about the same, regardless of what it’s being used for, the cost of the silicon wafer is divided up more ways, resulting in cheaper processors.
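The wafer arithmetic above can be sketched in a few lines. Every number here is made up purely for illustration (a 300 mm wafer, a hypothetical wafer cost of 10,000, and two hypothetical die sizes), and the estimate ignores edge loss, scribe lines, and yield, so treat it as a back-of-the-envelope sketch rather than real fab economics.

```python
import math


def gross_dies_per_wafer(wafer_diameter_mm: float, die_area_mm2: float) -> int:
    """Crude upper bound: wafer area divided by die area (no edge loss, no yield)."""
    wafer_area = math.pi * (wafer_diameter_mm / 2) ** 2
    return int(wafer_area // die_area_mm2)


def cost_per_die(wafer_cost: float, wafer_diameter_mm: float, die_area_mm2: float) -> float:
    """Spread one wafer's cost evenly over every die that fits on it."""
    return wafer_cost / gross_dies_per_wafer(wafer_diameter_mm, die_area_mm2)


WAFER_COST = 10_000.0  # hypothetical cost, arbitrary currency units
for die_area in (100.0, 80.0):  # hypothetical die sizes in mm^2
    dies = gross_dies_per_wafer(300.0, die_area)
    print(f"{die_area:.0f} mm^2 die: {dies} dies, {cost_per_die(WAFER_COST, 300.0, die_area):.2f} each")
```

Shrinking the die by a fifth in this toy model raises the die count from 706 to 883, so the same wafer cost is split across roughly 25% more chips.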

Fewer gates also mean lower power consumption. The x86 has some pretty sophisticated ways of saving power. As I understand it, it will power down the circuitry used to implement some of the complex but infrequently used instructions. You can do the same on a RISC processor, say, for vector instructions, so the RISC chip is pretty much always going to have lower power consumption.