Lots of people swear by large language model (LLM) AIs for writing code. Lots of people swear at them. Still others may be planning to exploit their peculiarities, according to [Joe Spracklen] and other researchers at UTSA. At least, the researchers have found a potential exploit in ‘vibe coding’.

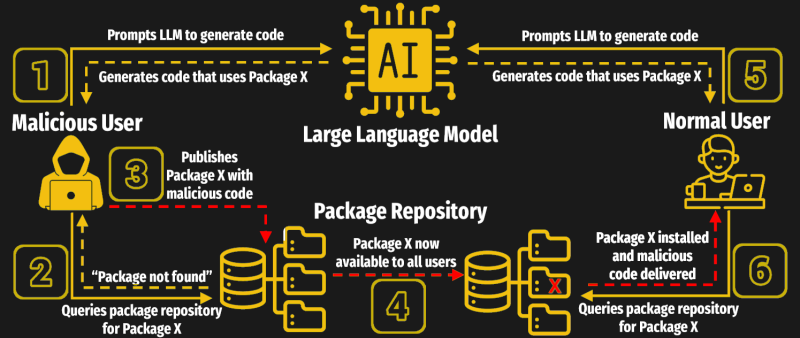

Everyone who has used an LLM knows they have a propensity to “hallucinate”: that is, to go off the rails and create plausible-sounding gibberish. When you’re vibe coding, that gibberish is likely to make it into your program. Normally, that just means errors. If you are working in an environment that uses a package manager, however (like npm for Node.js, PyPI for Python, or CRAN for R), that plausible-sounding nonsense code may end up calling for a fake package.

A clever attacker might be able to work out which false package names an LLM tends to hallucinate, then publish real packages under those names as a vector for malicious code. It’s more likely than you think: while CodeLlama was the worst offender, the most accurate model tested (ChatGPT4) still generated these false packages at a rate of over 5%. The researchers were able to come up with a number of mitigation strategies in their full paper, but this is a sobering reminder that an AI cannot take responsibility. Ultimately it is up to us, the programmers, to ensure the integrity and security of our code, and of the libraries we include in it.
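To make that last point a little more concrete, here is a minimal sketch of one way to check generated Python before anything gets installed: pull out the modules it imports and flag any that are not on a list you have vetted yourself. This is our own illustration, not one of the mitigations from the paper. The `TRUSTED_MODULES` set, the function names, and the made-up `fastjsonx` package are all hypothetical, and the standard-library check relies on `sys.stdlib_module_names`, which only exists in Python 3.10 and later.

```python
import ast
import sys

# Hypothetical allowlist: third-party top-level modules you have vetted yourself.
TRUSTED_MODULES = {"numpy", "requests"}


def imported_modules(source):
    """Return the set of top-level module names imported by Python source code."""
    found = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            for alias in node.names:
                found.add(alias.name.split(".")[0])
        elif isinstance(node, ast.ImportFrom):
            # Relative imports (level > 0) point at local code, not a package index.
            if node.level == 0 and node.module:
                found.add(node.module.split(".")[0])
    return found


def unvetted_modules(source, trusted=TRUSTED_MODULES):
    """Return a sorted list of imported modules that are neither stdlib nor trusted."""
    # sys.stdlib_module_names exists on Python 3.10+; on older versions this
    # falls back to an empty set, so stdlib imports would be flagged too.
    stdlib = getattr(sys, "stdlib_module_names", frozenset())
    return sorted(imported_modules(source) - set(trusted) - set(stdlib))


if __name__ == "__main__":
    # "fastjsonx" is a made-up name standing in for a hallucinated package.
    generated = "import os\nimport numpy as np\nfrom fastjsonx.core import load\n"
    print(unvetted_modules(generated))
```

On Python 3.10 or newer, the sample at the bottom should flag only the made-up `fastjsonx`. Keep in mind that import names do not always match the name you install (the `yaml` module comes from the PyYAML package, for example), and that a static scan like this will not catch an install command the LLM suggests in prose, so treat it as a first filter rather than a guarantee.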

We just had a rollicking discussion of vibe coding, which some of you seemed quite taken with. Others agreed that ChatGPT is the worst summer intern ever. Love it or hate it, it’s likely this won’t be the last time we hear of security concerns brought up by this new method of programming.

Special thanks to [Wolfgang Friedrich] for sending this in to our tip line.

Stop checking my vibes.

Vibe coding is here to stay; we need to create some kind of whitelist for trusted packages.

Good idea. I’ll get ChatGPT to make one.

Better idea: restrict LLMs to only generate code that uses known libraries. Simply not including unknown packages solves the problem.

Define “known” when an attacker can add packages at any time.

I would imagine it is possible, though not easy, to create a Turing-complete machine using known packages. That doesn’t solve the problem; it obfuscates it, which may be even worse because it appears secure.

What about the people who only know vibe coding though?

The new Idiocracy coding bunch that is coming… Wait for it ….

I.e., don’t think, just let the AI do it…. :rolleyes:

Not just that. There needs to be a reining in of the ‘cult of update’ that keeps pushing new versions with little to no oversight and in spite of what breaks. These things have led to exploits in real projects, and to other attempts as well.

Article in The Reg today:

https://www.theregister.com/2025/04/12/ai_code_suggestions_sabotage_supply_chain/

LLMs are not going far enough. Humans need libraries and levels of abstraction to keep things simple and manageable. But if we were faster and could keep more in our heads, then we would not need libraries. Just write everything in machine code right there and then, or in whatever the lowest common deployed level of technology is.

If the Vibe Coder is no longer checking the output, then why bother with programming languages and libraries?

Michael Townsen Hicks, James Humphries, & Joe Slater, in a paper in “Ethics and Information Technology,” suggest that the term “hallucination” as applied to LLMs is inaccurate. “We … argue that describing A.I. misrepresentations as bullshit is both a more useful and more accurate way of predicting and discussing the behaviour of these systems.”

I’ve read that paper; it makes some good points. The language we use to describe things has pointed subconscious effects on how we perceive them.

Link to paper for those who are interested:

https://link.springer.com/article/10.1007/s10676-024-09775-5

On the language note, “Politics and the English Language”, a short-ish essay by Orwell, has some interesting analysis of how vagueness in language is easily exploited. Also worth a good read.

Great article by Orwell. Link here:

https://files.libcom.org/files/Politics%20and%20the%20English%20Language%20-%20George%20Orwell.pdf

I’m not a fan of the term “hallucination,” either; personally, I prefer “confabulation”, which is just a polite and high-falutin’ way to say bullshitting. That said, “hallucination” is the term of art employed by the researchers, so we use it here to avoid confusion.

I think we will eventually need some gate-keeping for the package repositories. It seems every few weeks there is a newsworthy typosquatting or hijacked package on npm or PyPI. When was the last time a malicious package got into Debian?

> When was the last time a package got into Debian?

FTFY XDDDDDDDDDDDDDDDDDDDDDDDDDD

What the hell are the users for, then?

To misuse and break it in ways we could never have foreseen. ;)

Starting to see a lot of the following lately:

“I asked [LLM] and it said this.”

“That’s wrong, here’s some non-AI sources which prove it.”

“Hmmm… no, I asked [LLM] the same question again and it still contradicts you. It must be correct.”

AI cults and other fanciful things are certainly not far off. Would be a pretty good subject for a short story.

And then there’s the reverse, said with the same fervor against AI, a.k.a. handwaving. People are just binary about a lot of things.

People are also usually very binary against things that are just wrong. You could say for instance people are pretty black and white against murder.