“If there’s one thing the Commodore 64 is missing, it’s a large language model,” is a phrase nobody has uttered on this Earth. Yet, you could run one, if you so desired, thanks to [ytm] and the Llama2.c64 project!

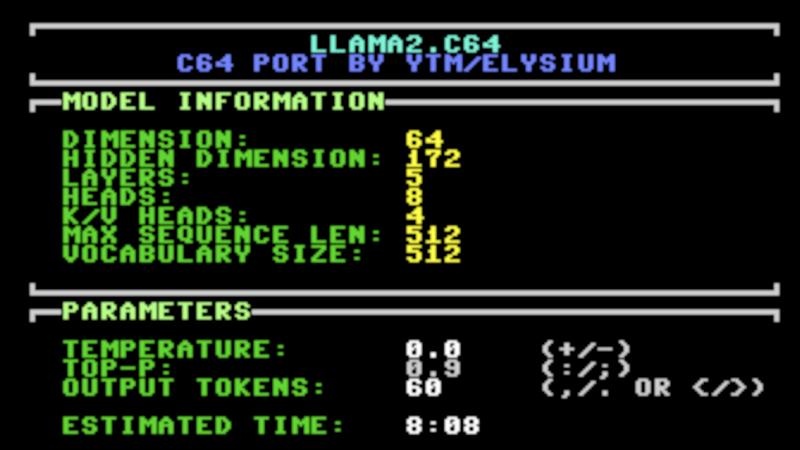

[ytm] did the hard work of porting the Llama 2 model to the most popular computer ever made. Of course, as you might expect, the ancient 8-bit machine doesn’t really have the stones to run an LLM on its own. You will need one rather significant upgrade, in the form of 2 MB of additional RAM via a C64 REU (RAM Expansion Unit).

Now, don’t get ahead of things—this is no wide-ranging ChatGPT clone. It’s not going to do your homework, counsel you on your failed marriage, or solve the geopolitical crisis in your local region. Instead, you’re getting the 260 K tinystories model, which is a tad more limited. In [ytm]’s words… “Imagine prompting a 3-year-old child with the beginning of a story — they will continue it to the best of their vocabulary and abilities.”

It might not be supremely capable, but there’s something fun about seeing such a model talking back on an old-school C64 display. If you’ve been hacking away at your own C64 projects, don’t hesitate to let us know. We certainly can’t get enough of them!

Thanks to [ytm] for the tip!

LLMs as the FPGAs of our time.

C64s as the FPGAs of our time.

Very cool, uses the REU, but apparently uses 32-bit floats. Should prolly switch that over to 16- or 8-bit floats.

Also: “You will receive one output token approximately every 8 minutes.” 😆

The C64 doesn’t have float hardware, so you’d probably be better off with 16- or 8-bit fixed-point ints.
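
For anyone wondering what that fixed-point suggestion would look like, here is a minimal sketch in Python of Q8.8 arithmetic (8 integer bits, 8 fractional bits in a 16-bit word). It is only an illustration of the general technique, not how Llama2.c64 does its math; the format choice and the names `to_fixed`, `fixed_mul`, and `fixed_dot` are assumptions made up for this example.

```python
FRAC_BITS = 8             # assumed Q8.8 format: 8 integer bits, 8 fractional bits
ONE = 1 << FRAC_BITS      # the integer that represents 1.0


def to_fixed(x: float) -> int:
    """Convert a float to Q8.8, rounding and saturating to the signed 16-bit range."""
    q = round(x * ONE)
    return max(-32768, min(32767, q))


def to_float(q: int) -> float:
    """Convert a Q8.8 integer back to a float (for inspection only)."""
    return q / ONE


def fixed_mul(a: int, b: int) -> int:
    """Multiply two Q8.8 values using integer operations only.

    The raw product carries 16 fractional bits, so it is shifted
    right by FRAC_BITS to return to Q8.8. The result is not saturated.
    """
    return (a * b) >> FRAC_BITS


def fixed_dot(weights, inputs) -> int:
    """Dot product of two Q8.8 vectors, accumulating in a wide integer
    and rescaling once at the end to limit rounding error."""
    acc = 0
    for w, x in zip(weights, inputs):
        acc += w * x
    return acc >> FRAC_BITS
```

For example, `to_float(fixed_mul(to_fixed(1.5), to_fixed(2.0)))` gives `3.0` without a single floating-point multiply in the hot path. The trade-off is range and precision: Q8.8 only covers roughly -128 to 128 in steps of 1/256, so a real port would have to pick the format per tensor.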

This is how I describe all LLMs: “Imagine a 3-year-old genius. How far would you trust them?” A bit concerning how many companies are turning to them for content and customer service.

I personally trust LLMs more than most of the people on this planet.

It is not biased by one source or one agenda.

“But the companies can inject biased info into it” – well, don’t use that one. Like you don’t want to use Google as a search engine. (Actually, who uses web search anymore?)

Aren’t LLMs as biased as their training data? I’ve read dozens of stories of sexist or racist AIs that were inadvertently fed the wrong training data. I think [Miles]’s 3-year-old metaphor is apt: you can teach the AI to believe what you want.

“seeing such a model talking back on an old-school C64 display”

It could use S.A.M. Reciter to say it.