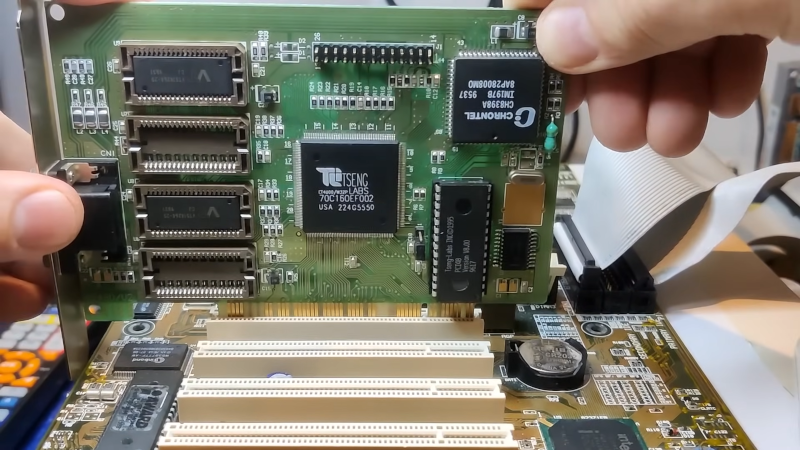

These days, video cards are virtually supercomputers. When they aren’t driving your screen, they are decoding video, crunching physics models, or running large language models. But it wasn’t always like that. The old video cards were downright simple. Once PCs gained more sophisticated buses, video cards got a little better. But hardware acceleration on an old-fashioned VGA card would be unworthy of the cheapest burner phone at the big box store. Not to mention, the card is probably twice the size of the phone. [Bits and Bolts] has a look at several old cards, including a PCI version of the Tseng ET4000, state of the art in the late 1990s.

You might think that’s a misprint. Most of the older Tseng boards were ISA, but apparently, there were some with the PCI bus or the older VESA local bus. Acceleration here typically meant dedicated hardware for handling BitBlt and, perhaps, a hardware cursor.
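For readers who haven’t met the term, here is a minimal Python sketch of what a BitBlt engine does, assuming a simple linear framebuffer with one byte per pixel. The function name `bitblt`, its parameters, and the optional raster-operation callback are illustrative assumptions, not the ET4000’s (or any card’s) real register interface; an accelerator runs the equivalent loop in hardware so the CPU doesn’t have to.

```python
from typing import Callable, Optional

# Hypothetical raster op: rop(src_pixel, dst_pixel) -> new pixel, e.g. XOR.
RasterOp = Callable[[int, int], int]


def bitblt(fb: bytearray, pitch: int, sx: int, sy: int, dx: int, dy: int,
           w: int, h: int, rop: Optional[RasterOp] = None) -> None:
    """Copy a w x h rectangle from (sx, sy) to (dx, dy) in a linear 8 bpp framebuffer.

    pitch is the number of bytes per scanline.
    """
    # Walk rows bottom-up when moving down, so overlapping source rows
    # are read before they get overwritten.
    rows = range(h - 1, -1, -1) if dy > sy else range(h)
    for row in rows:
        src = (sy + row) * pitch + sx
        dst = (dy + row) * pitch + dx
        line = fb[src:src + w]  # slicing makes a copy, so same-row overlap is safe
        if rop is not None:
            old = fb[dst:dst + w]
            line = bytearray(rop(s, d) & 0xFF for s, d in zip(line, old))
        fb[dst:dst + w] = line


# Usage: on a 4x4 screen, copy the top-left 2x2 block to the bottom-right corner.
screen = bytearray(16)
screen[0:2] = b"\x01\x02"
screen[4:6] = b"\x03\x04"
bitblt(screen, pitch=4, sx=0, sy=0, dx=2, dy=2, w=2, h=2)
```

The row order matters: when the destination overlaps the source further down the screen, copying bottom-up keeps source rows from being overwritten before they are read. Hardware blitters generally need a similar copy-direction choice.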

It is fun watching him test these old cards and work on them under the microscope, too. Since the PCI bus was new when this board was introduced, it apparently had some bugs that made it incompatible with certain motherboards.

We recall being blown away by the color graphics these boards provided when they were new. Now, of course, you wouldn’t see graphics like this even in a cheap video game. Still, it’s fun to take a walk down memory lane with these old boards.

[Bits and Bolts] definitely has a hobby. We love that these were high-tech in their day, but now designing a VGA card is well within reach for anyone adept at using FPGAs.

What hardware acceleration? Isn’t an “old-fashioned VGA card” basically just a double-ported RAM and a DAC? A bit of control logic to push bytes into the RAM, and that’s it?

“Old-fashioned VGA” doesn’t even use double-ported RAM. But the S3 Trio, the ViRGE, and others were the first to have some 2D acceleration, like copying rectangular blocks of memory (BitBlt), painting and filling, video layers…

The first was the 1987 IBM 8514 (terrible hardware interface). ATI (1991, Mach8) cloned the 8514 down to register-level compatibility. S3 (1991, 911?) also derived its design from the 8514, but with tweaks, so it was not 1:1 compatible. Others, like Tseng (1992, W32) or Cirrus Logic (1993, 5426, very primitive), incorporated custom blitting engines.

FIRST was the 1984 “IBM Professional Graphics Controller,” which was three full-length PCBs sandwiched into a two-slot form factor, containing as much computing power as the entire original IBM PC (including its own 8088 CPU and 320 KB of RAM, potentially as much as or even more than the computer it was installed in!). It could run its own unique display at 640×480 with 256 colors (out of a palette of 4096, making it better than VGA, which came out three years later). It was used to accelerate CAD programs by doing all the video calculations itself instead of on the host computer.

Before that there were cards from various small manufacturers based on the NEC 7220. I programmed one from Frontier Technologies in the early 1980s.

For home computers, 1982’s VIC-II says “hi.” It didn’t do advanced blitting stuff but the movable sprites were a huge deal.

There were also special solutions for CAD before this, such as the external “Vectrix VX384” display controller device.

AutoCAD supported it, for example.

https://tinyurl.com/ym4u2vcz

In terms of IBM standards, though, I think you’re correct.

I always wondered if the PGC could be “upgraded” with a NEC V20, just like a PC/XT, by the way!

Unless it uses the one instruction that the 8088 has and the V20 lacks, it should be compatible.

(Info: Some PC BIOSes were V20-incompatible because they relied on that instruction and needed a V20 patch; others measured timings, such as that of the PIT, and weren’t aware that the V20 is faster per clock cycle, so they concluded the timer ICs were broken.)

Anyway, I think we owe the PGC something.

It introduced the 640×480 resolution with square pels (pixels) that VGA later became so famous for.

Well, besides 320×200 256c (mode 13h).

The IBM 8514/A was like a successor to the PGC in some ways.

Which in turn was followed by XGA and XGA-2 (still 8514/A API compatible).

Another standard of the 80s was TIGA, by the way.

It used the TMS34010 and TMS34020 programmable graphics processors.

Windows 3.1 has a driver for it, just like OS/2 1.x had one for 8514/A.

However, that TIGA driver is just middleware that talks to the real TIGA driver on the TIGA board’s driver diskette.

Anyway, there’s one thing to keep in mind: Performance is not only about speed!

Performance and power also have to do with offloading computing work to dedicated hardware.

While early accelerators were quite capable, they weren’t necessarily the fastest.

For example, the ET-4000 and many “dumb” VLB graphics cards were often faster at displaying bitmaps than intelligent graphics boards.

So what’s the point, you may ask.

Quite often, it’s about multitasking and quality.

An intelligent graphics board can handle font rendering, scaling, and drawing GUI items, at any supported resolution and color depth, without burdening the main processor and system RAM all the time.

Good point about “dumb” VLB cards sometimes being the better choice. Just before the VLB standard came out (first proprietary implementations in mid-1992, first real VLB slots in late 1992, a dead technology by 1995 with PCI taking over), Dell (VP Charlie Sauer), together with Intel, did a demo at Fall Comdex ’91 presented by Intel CEO Andy Grove himself, which tells you how important it was. Intel called it JAWS; Dell called it Processor-Direct Graphics:

https://www.youtube.com/watch?v=u7VNl4Np7Vo

Three months later, at NeXTWORLD in January ’92, Steve Jobs presented the first NeXTstep x86 port running on this very same Dell PowerLine 450DE/2 DGX Graphics Workstation.

The March 10, 1992 Computer Chronicles episode 9×18, “Intel 486,” also has a demo by Charlie Sauer: https://archive.org/details/intel486?start=379.4

The crazy thing about JAWS was that the graphics subsystem wasn’t even a full-blown graphics chip, just a dumb Inmos G332 framebuffer (self-clocking DAC) directly on the CPU card.

NeXTWORLD September 1993 https://blackholeinc.com/Library/93%20Sept.html has this to say

That’s MCGA hardware, basically. ^^

https://www.swiat-owocow.pl/lang/en/1285.html

VGA had a sophisticated CRTC that could be re-programmed for various frequencies and video modes.

To make things more complicated, most VGA cards other than the original IBM VGA (aka “IBM PS/2 Display Adapter”) were really Super VGA cards.

Many could even emulate CGA and Hercules at the register level, thanks to a DOS-based mode utility on the driver diskette.

https://www.minuszerodegrees.net/5162/cards/5162_cards.htm

Some higher-end models, such as the ATI Mach8 or Mach32, even had an IBM 8514/A-compatible graphics processor.

They could handle complex drawings on their own.

The popular S3 Trio32/64 or Trio64V (but not the ViRGE) had a 2D core that was derived from this technology, I think.

https://en.wikipedia.org/wiki/IBM_8514

The demo (demoscene production) “Countdown” supported S3 mode directly.

https://www.pouet.net/prod.php?which=1524

By comparison, the Tseng ET-4000AX had an 8514/A software emulator (for DOS) on the driver diskette.

This was good enough to play Mah Jongg -8514-.

https://www.classicdosgames.com/game/Mah_Jongg_-8514-.html

The memory could be addressed as four planes, with selection of which planes should take part in processor I/O. This allowed some speedup when rendering bitmap text in graphics mode.
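A toy Python model of that plane selection, deliberately simplified to VGA write mode 0 with the latches, Bit Mask register, and Set/Reset logic all left out (assumptions of this sketch). On real hardware the selection is done by the Sequencer’s Map Mask register; here it is just the `map_mask` attribute, and `PlanarVRAM` and `draw_glyph_row` are hypothetical names. It shows the text-rendering speedup: with a black background, one CPU write of a font row byte, with the mask set to the text color, draws eight pixels of a glyph at once.

```python
class PlanarVRAM:
    """Toy model of VGA 16-color planar memory: write mode 0 only,
    ignoring the latches, Bit Mask register, and Set/Reset logic."""

    def __init__(self, bytes_per_plane: int = 65536) -> None:
        self.planes = [bytearray(bytes_per_plane) for _ in range(4)]
        self.map_mask = 0x0F  # bit n set = CPU writes reach plane n

    def write(self, offset: int, value: int) -> None:
        # A single CPU write lands in every enabled plane at once.
        for n in range(4):
            if self.map_mask & (1 << n):
                self.planes[n][offset] = value & 0xFF

    def pixel(self, offset: int, x_in_byte: int) -> int:
        # Each pixel's 4-bit color takes one bit from each plane;
        # bit 7 of a byte is the leftmost of its eight pixels.
        shift = 7 - x_in_byte
        return sum(((self.planes[n][offset] >> shift) & 1) << n for n in range(4))


def draw_glyph_row(vram: PlanarVRAM, offset: int, glyph_bits: int, color: int) -> None:
    """Draw one 8-pixel font row in `color` with one write (assumes a black background)."""
    vram.map_mask = color & 0x0F
    vram.write(offset, glyph_bits)


vram = PlanarVRAM(bytes_per_plane=80)
draw_glyph_row(vram, offset=0, glyph_bits=0b10000001, color=10)
```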

Not true dual-ported; that was expensive stuff at that scale. Tseng Labs used MDRAM, where each RAM chip internally has a set of independent, small 32 kB DRAM blocks.

As long as the CPU and the display circuitry are not accessing the same block, it appears as if the memory were dual-ported. Only if both try to access the same block at the same time is one of them held up (I presume that would be the CPU). The more blocks, the less likely the two are to clash.

So, almost as good as dual ported, very close, at a fraction of the cost.
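The “more blocks, fewer clashes” point can be checked with a quick simulation. This Python sketch makes a strong simplifying assumption, that the CPU and the display fetch each pick a bank uniformly at random every cycle (real display refresh reads memory sequentially), so it only illustrates the trend: the clash rate comes out near 1/N for N banks.

```python
import random


def conflict_rate(num_banks: int, trials: int = 100_000, seed: int = 1) -> float:
    """Estimate how often a CPU access and a display fetch hit the same bank.

    Toy assumption: each side picks a bank uniformly and independently per cycle.
    """
    rng = random.Random(seed)
    clashes = sum(
        rng.randrange(num_banks) == rng.randrange(num_banks) for _ in range(trials)
    )
    return clashes / trials


for banks in (1, 4, 32):
    print(banks, round(conflict_rate(banks), 4))  # trends toward 1/banks
```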

Really enjoyed watching him do the reflowing. Nice.

“We recall being blown away by the color graphics these boards provided when they were new. ”

Had an early PowerVR card. The graphics were something else for the time, even if a niche piece of silicon.

Oh, for the days of 8-bit ISA VGA cards!

Mono, CGA, EGA, 8-bit ISA VGA, 16-bit ISA VGA, 32-bit VLB VGA, 32-bit PCI, and beyond! How times have changed.

I wonder what ever happened to video card makers OakTek and Trident.

Graphics went 3D, and only a few managed the transition. AFAIK, Trident’s graphics assets were sold to XGI, while Oak got acquired.

Hercules. If I remember correctly, it was monochrome but with variable brightness levels, while the original monochrome video cards were just black and white, a purely digital signal.

They’re basically DSPs (digital signal processors) and use SIMD technology (single instruction, multiple data).

Before graphics accelerator cards or GPUs such as the 3dfx Voodoo, ATI Rage II, Nvidia GeForce 256, etc. became common, it was up to the CPU to do such things.

For a short time in 1996/1997, people speculated whether MMX (a SIMD extension for integer math) could do such things on the main processor instead.

That was the time when Photoshop filters, MPEG-2 software decoders, and WinModem drivers used MMX.
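To show what “SIMD for integer math” means in practice, here is a Python emulation of one MMX instruction, PADDUSB, which adds eight unsigned bytes packed into a 64-bit register in a single operation, clamping each lane at 255 instead of letting it overflow into its neighbor. The loop only spells out the per-lane semantics (the function name `paddusb` is just a label for this sketch); the point of MMX was that the hardware handles all eight lanes at once. Saturation is what makes it handy for pixel work such as brightening an image without wraparound.

```python
def paddusb(a: int, b: int) -> int:
    """Emulate MMX PADDUSB: add eight packed unsigned bytes, saturating at 255.

    a and b are 64-bit values, each holding eight independent 8-bit lanes.
    """
    result = 0
    for lane in range(8):
        shift = 8 * lane
        total = ((a >> shift) & 0xFF) + ((b >> shift) & 0xFF)
        result |= min(total, 0xFF) << shift  # clamp instead of carrying into the next lane
    return result


# Brighten eight 8-bit pixels by 0x20 in one "instruction".
pixels = 0x00_10_F0_FF_00_7F_E0_01
brighter = paddusb(pixels, 0x20_20_20_20_20_20_20_20)
```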

ET4000 was state of the art in the early 1990s. I would have liked an Orchid Pro Designer II to replace my Orchid Pro Designer in 1991. 15-bit color was high stakes! Even by the mid-1990s, that was old hat.
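For context, “15-bit color” means 5 bits each for red, green, and blue, or 2^15 = 32,768 colors. A minimal Python sketch of packing and unpacking such a pixel, assuming the common 0RRRRRGGGGGBBBBB layout with the top bit of the 16-bit word unused; the exact layout on any particular card of that era is an assumption here, and the function names are hypothetical.

```python
def pack_rgb555(r: int, g: int, b: int) -> int:
    """Pack 8-bit R, G, B into a 16-bit word laid out as 0RRRRRGGGGGBBBBB."""
    return ((r >> 3) << 10) | ((g >> 3) << 5) | (b >> 3)


def unpack_rgb555(word: int) -> tuple:
    """Expand back to 8 bits per channel, copying the top bits into the low bits."""
    channels = ((word >> 10) & 0x1F, (word >> 5) & 0x1F, word & 0x1F)
    return tuple((c << 3) | (c >> 2) for c in channels)


print(2 ** 15)  # distinct colors available with 5 bits per channel
```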

The ET-4000AX was popular in the demoscene, I think, and it was known for its good performance at the time.

In part, that’s because it had a FIFO buffer for the ISA bus interface, I think.

By the early-to-mid 90s, it was the standard VGA card, so to speak: the “you can’t go wrong with that” kind of model.

Compatibility with VGA wasn’t perfect, though. Some games had issues with the ET-4000 (Keen IV, etc.).

Also, the later ET-4000W32 differed from the original ET-4000AX (and similar models), I think.

It had GUI acceleration but dropped the ISA card’s hardware emulation of Hercules and CGA.

Nowadays we do know, however, that the ET-4000 wasn’t the very best.

Cards such as the Trident 8900D (not the B!) or Cirrus cards had similarly good performance.

Some even beat the ET-4000 (on the ISA bus)!

Speaking of SVGA game compatibility, there were two important types: the ET-4000AX and the Paradise PVGA.

Games by Magnetic Scrolls supported 800×600 16c resolution for Paradise/Western Digital (and V7 VEGA?).

Games by Legend Entertainment supported 800×600 16c resolution for the ET-4000AX.

In principle, that’s just about BIOS video modes of each card, though.

In 16-color modes, the graphics memory is usually planar, just like in standard VGA mode 12h (640×480 16c).

So these games can be patched for VESA VBE mode 6Ah (same as 102h).

Magnetic Scrolls’ Wonderland, for example, was successfully patched for VBE that way.

Last but not least, there were a few VESA VBE games that ran in 640×400 256c.

Just like the 800×600 16c games, they will run with the basic 256 KB of VGA memory.

That is, provided a VBE TSR is loaded, and UniVBE refuses to work with 256 KB of VGA RAM!

So the VBE utility of the chip maker must be used instead.

Ideally in a later VBE 1.1 or VBE 1.2 version. Many applications have trouble with VBE 1.0.
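The 256 KB point is easy to check with a little arithmetic. This Python sketch computes the single-screen framebuffer size for a few modes, labeled with their standard VESA VBE mode numbers (100h, 101h, 102h); the helper name `framebuffer_bytes` is just for illustration. It shows why 640×400 was the 256-color mode that fit, while 640×480 at 256 colors does not.

```python
VGA_RAM_BYTES = 256 * 1024  # a basic 256 KB VGA card


def framebuffer_bytes(width: int, height: int, bits_per_pixel: int) -> int:
    """Bytes needed to hold one full screen in the given mode."""
    return width * height * bits_per_pixel // 8


modes = {
    "VBE 102h, 800x600, 16 colors": (800, 600, 4),
    "VBE 100h, 640x400, 256 colors": (640, 400, 8),
    "VBE 101h, 640x480, 256 colors": (640, 480, 8),
}
for name, (w, h, bpp) in modes.items():
    need = framebuffer_bytes(w, h, bpp)
    print(f"{name}: {need} bytes, fits in 256 KB: {need <= VGA_RAM_BYTES}")
```

The first two modes need 240,000 and 256,000 bytes, both under 262,144; 640×480 at 256 colors needs 307,200.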

I remember a three-card set for IBM’s PGA color graphics “card.” It was their forerunner to VGA, with the same resolution, but it was digital, not analog. We have come a long way…

Hi! The PGC/PGA was way interesting indeed!

It used RGB, but not the usual RGB H/V of the later VGA/MCGA card.

https://en.wikipedia.org/wiki/Professional_Graphics_Controller

Some of the old stock of official PGC monitors was later modified for VGA, though.

Drawing bitmaps via the PGC was tedious, though. It used a ring buffer for commands, etc.
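For readers unfamiliar with the term, a ring buffer is a fixed-size queue whose read and write positions wrap around. A generic Python sketch of a host-to-board command ring follows; the class name `CommandRing`, the 256-byte default size, and the keep-one-slot-empty convention are illustrative assumptions and do not reproduce the PGC’s actual buffer layout.

```python
from typing import Optional


class CommandRing:
    """Fixed-size byte ring buffer: the host writes commands, the board reads them.

    One slot always stays empty, so head == tail unambiguously means "empty".
    """

    def __init__(self, size: int = 256) -> None:
        self.buf = bytearray(size)
        self.head = 0  # next position the host writes
        self.tail = 0  # next position the board reads

    def free(self) -> int:
        return (self.tail - self.head - 1) % len(self.buf)

    def put(self, data: bytes) -> bool:
        """Queue data if it all fits; otherwise return False so the host can retry."""
        if len(data) > self.free():
            return False
        for byte in data:
            self.buf[self.head] = byte
            self.head = (self.head + 1) % len(self.buf)
        return True

    def get(self) -> Optional[int]:
        """Dequeue one byte, or return None if the ring is empty."""
        if self.head == self.tail:
            return None
        byte = self.buf[self.tail]
        self.tail = (self.tail + 1) % len(self.buf)
        return byte
```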

It’s a very interesting story, all in all.

The PGC monitor predated the PS/2 design language and fits the PC/AT much better.

That’s why retro and vintage computing fans do like the PGC monitor, I think.

IBM 5175 Monitor conversion: https://tinyurl.com/nhfp274f

YT Video: https://www.youtube.com/watch?v=XUS5yiu96i4

Sorry, wrong link! This one is about the monitor conversion.

https://tinyurl.com/4wa7vda4

Nobody else remembers the Bitchin’fast!3D²⁰⁰⁰? Just me? OK.

https://www.vogons.org/viewtopic.php?t=59199

I thought that with ‘memory lane’ in the title, we were going to get an in-depth look at the memory controller or RAM chip specifications. I was disappointed.

I remember my family’s second PC, a beige-box 486 that we got in 1991, had an ISA Tseng ET4000 in it, which was quite capable for accelerating Windows 3.0 (and later 3.1) and running at a reasonably high resolution for the time, especially after we upgraded it to a whopping 1MB of VRAM and could run at 1024×768 at 256 colors!

Tseng Labs’ ET4000 was the best in its day. We had small PCs, megabytes of RAM, and you could get the most frames out of Doom in DOS. I had a VESA local bus version; a mate had PCI. We all chased this card to build the most killer gaming PCs.

My server up in the attic boasts an Asus M5A79 motherboard running an FX-8350 CPU, but it has an S3 Trio graphics card :) (because the PCIe slots are all in use).

    04:06.0 VGA compatible controller: S3 Graphics Ltd. 86c764/765 [Trio32/64/64V+] (prog-if 00 [VGA controller])
        Control: I/O+ Mem+ BusMaster- SpecCycle- MemWINV- VGASnoop- ParErr- Stepping- SERR- FastB2B- DisINTx-
        Status: Cap- 66MHz- UDF- FastB2B- ParErr- DEVSEL=medium >TAbort- <TAbort- SERR- <PERR- INTx-
        Interrupt: pin A routed to IRQ 7
        NUMA node: 0
        IOMMU group: 10
        Region 0: Memory at fd800000 (32-bit, non-prefetchable) [size=8M]
        Expansion ROM at 000c0000 [disabled] [size=128K]

The reason is simple: the board refuses to boot without a monitor/video card installed… To be fair, this was also true for the previous motherboard in there (still an AM3-socket board), and I do know that you can ‘skip’ missing-monitor errors, but without a VGA adapter, the thing just beeps.

The funny bit is that the BIOS is one of those graphical (semi-)UEFI BIOSes, but the S3 Trio can’t render it and just shows a black screen :)

The story of Tseng Labs is a curious one. I’m surprised nobody has bothered to talk at length about it.