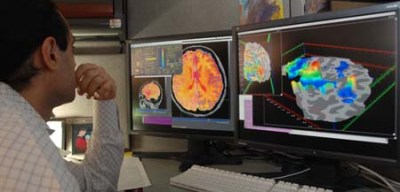

Among brain researchers there’s a truism that the reason people underestimate how much unconscious processing goes on in their brains is that they’re not conscious of it. And while there is a lot of unconscious processing, the truism also points out a duality: your brain does both processing that leads to consciousness and processing that does not. As you’ll see below, this duality has opened up a scientific approach to studying consciousness.

Are Subjective Results Scientific?

In science we’re used to empirical test results, measurements made in a way that is verifiable, such as a reading from a calibrated meter that can be taken again and again by different people. But what if all you have to go on is what a person says they are experiencing, a subjective observation? That doesn’t sound very scientific.

That lack of non-subjective evidence is a big part of what stalled scientific research into consciousness for many years. But consciousness is unique. While we have measuring tools for observing brain activity, how do you know whether that activity is contributing to a conscious experience or is unconscious? The only way is to ask the person whose brain you’re measuring. Are they conscious of an image being presented to them? If not, then it’s being processed unconsciously. You have to ask them, and their response is, naturally, subjective.

Skepticism about subjective results, along with a lack of tools, held back scientific research into consciousness for many years. It was taboo to even use the C-word until the 1980s, when researchers decided that subjective results were okay. Since then, there’s been a great deal of scientific research into consciousness, and this is a sampling of that research. And as you’ll see, it’s even saved a life or two.
