Most electronic components available today are just improved versions of what was available a few years ago. Microcontrollers get faster, memories get larger, and sensors get smaller, but we haven’t seen a truly novel component for years or even decades. No electronic component is more interesting, or has more novel applications, than the memristor, and now memristors are available commercially from Knowm, a company on the bleeding edge of putting machine learning directly onto silicon.

The entire point of digital circuits is to store information as a series of ones and zeros. Memristors also store information, but do so in a completely analog way. Each memristor changes its own resistance in response to the current going through it: ‘writing’ a positive voltage lowers the resistance, and ‘writing’ a negative voltage puts the device back into a high-resistance state.
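To make that behavior concrete, here is a minimal toy model in Python. It is only a sketch, not Knowm's device model: the class name `ToyMemristor`, the linear blend between two resistance limits, and every constant (`r_on`, `r_off`, `rate`) are assumptions chosen for illustration. It shows just the three properties described above: positive pulses lower the resistance, negative pulses raise it again, and the state persists when no voltage is applied.

```python
from dataclasses import dataclass


@dataclass
class ToyMemristor:
    """Illustrative two-terminal device; all constants are made-up values."""
    r_on: float = 1e3      # ohms, lowest resistance (assumed)
    r_off: float = 100e3   # ohms, highest resistance (assumed)
    rate: float = 50.0     # state change per volt-second (assumed)
    state: float = 0.0     # 0.0 = high-resistance, 1.0 = low-resistance

    @property
    def resistance(self) -> float:
        # Linear blend between the two limits, weighted by the stored state.
        return self.r_on * self.state + self.r_off * (1.0 - self.state)

    def apply(self, volts: float, seconds: float) -> float:
        """Apply a voltage pulse, update the state, return the current in amps."""
        current = volts / self.resistance
        # Positive voltage raises the state (lower resistance), negative
        # voltage lowers it; the state is clamped to the range [0, 1].
        new_state = self.state + self.rate * volts * seconds
        self.state = min(1.0, max(0.0, new_state))
        return current


if __name__ == "__main__":
    m = ToyMemristor()
    print(f"initial:         {m.resistance:.0f} ohms")
    for _ in range(5):
        m.apply(0.5, 0.004)    # positive write pulses
    print(f"after + pulses:  {m.resistance:.0f} ohms")
    m.apply(0.0, 1.0)          # idle: nothing changes
    print(f"after idle:      {m.resistance:.0f} ohms")
    for _ in range(5):
        m.apply(-0.5, 0.004)   # negative pulses undo the write
    print(f"after - pulses:  {m.resistance:.0f} ohms")
```

Because the state moves in proportion to voltage multiplied by time, the resistance can sit anywhere between the two limits rather than at just two levels, which is what makes the storage analog.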

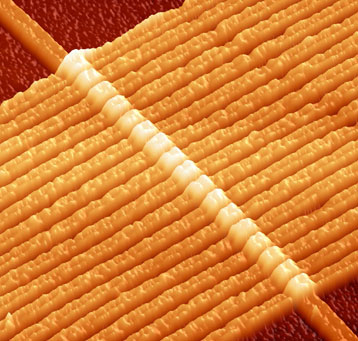

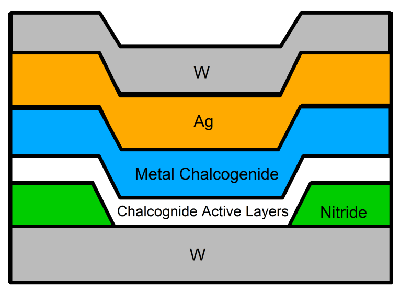

This new memristor is based on research done by [Dr. Kris Campbell] of Boise State University – the same researcher responsible for the silver chalcogenide memristors we saw earlier this year. Like these earlier devices, the Knowm memristor is built using silver chalcogenide molecules. To lower the resistance of the memristor, a positive voltage ‘pulls’ silver ions into the metal chalcogenide layer. The silver ions stay in this chalcogenide layer until they are ‘pushed’ back out by a negative voltage. This gives the memristor its core functionality – being able to remember how much current has gone through it.

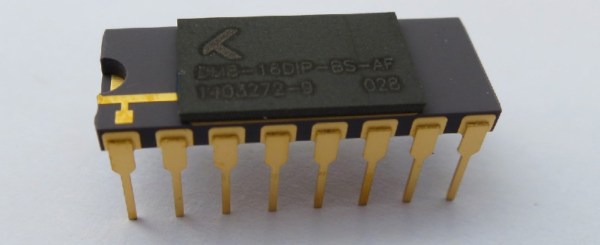

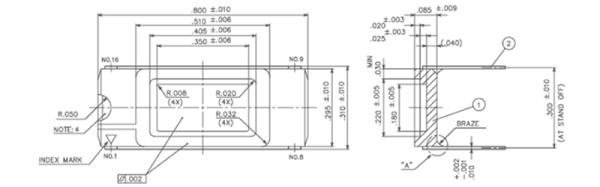

This technology is different from the first memristors made by HP in 2008, and has allowed Knowm to create functional memristors on silicon with a relatively high yield. Knowm is currently selling a ‘tier 3’ memristor part, in which only two of the eight devices fail QC testing. A ‘tier 1’ part, with all eight memristors working, is available for $220 USD.

As for applications, Knowm is using this technology in something they call Thermodynamic RAM, or kT-RAM. This is a small coprocessor that allows for faster machine learning than would be possible on a computer with a more traditional architecture. The kT-RAM uses a binary tree layout with memristors serving as the links between nodes.

While it’s much too soon to say if a kT-RAM processor will be better or more efficient at performing machine learning tasks in real life, a machine learning coprocessor does have a faint echo of the machine learning silicon developed during the 80s AI renaissance. Thirty years ago, neural nets on a chip were created by a few companies around Boston, until someone realized these neural nets could be simulated on a desktop PC much more efficiently. The kT-RAM is somewhat novel and highly parallel, though, and with a new electronic component it could be just what is needed to push machine learning directly into silicon.