Apple recently patched the Psychic Paper 0-day. This was a frankly embarrassing flaw that [Siguza] discovered in how iOS processed XML data in an application’s code signature, and it gave him access to any entitlement on the iOS system, including running outside the sandbox.

Entitlements on iOS are a set of permissions that an application can request. These entitlements range from com.apple.private.security.no-container, which grants the sandbox escape mentioned above, to platform-application, which tells the system that this is an official Apple application. As one would expect, Apple controls entitlements with a firm grip, and only allows certain entitlements on apps hosted on its official store. Even developer-signed apps are extremely limited, with only two entitlements allowed.

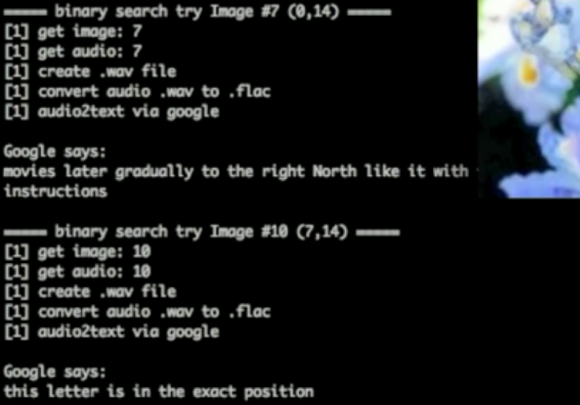

This system works via an XML property list (plist) document that is part of the signed application. XML is a relative of HTML, but with a stricter set of rules. What [Siguza] discovered is that iOS contains four different XML parsers, and they deal with malformed XML slightly differently. The kicker is that one of those parsers does the security check, while a different parser is used when the permissions are actually applied. Is it possible that this mismatch could contain a vulnerability? Of course it is.
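To make the idea of a parser mismatch concrete, here is a minimal Python sketch. It is a toy, not Apple's code: the two "parsers", the `skip` parameter, the one-entry allowlist, and the sample document are all assumptions made up for illustration. Both parsers strip `<!-- -->` comments, but they disagree on where to start looking for the closing `-->` after a malformed `<!--->`.

```python
import re

# Hypothetical allowlist for the checker; chosen only for illustration.
ALLOWED = {"get-task-allow"}


def strip_comments(text: str, skip: int) -> str:
    """Remove <!-- ... --> comments from text.

    `skip` is how far past the start of '<!--' this toy parser advances
    before it begins searching for the closing '-->'.
    """
    out = []
    i = 0
    while True:
        start = text.find("<!--", i)
        if start == -1:
            out.append(text[i:])
            break
        out.append(text[i:start])
        end = text.find("-->", start + skip)
        if end == -1:
            break  # unterminated comment swallows the rest of the input
        i = end + 3
    return "".join(out)


def granted_keys(text: str, skip: int) -> list:
    """Return the names of all keys set to <true/> outside of comments."""
    body = strip_comments(text, skip)
    return re.findall(r"<key>(.*?)</key>\s*<true/>", body)


def checker_approves(text: str) -> bool:
    # Toy "security check" parser: skips all four characters of '<!--'.
    return set(granted_keys(text, skip=4)) <= ALLOWED


def enforcer_grants(text: str) -> list:
    # Toy "enforcement" parser: skips only '<!', so '<!--->' closes itself.
    return granted_keys(text, skip=2)


# Malformed document: the two parsers disagree on what '<!--->' means.
DOC = (
    "<dict>"
    "<!--->"
    "<key>com.apple.private.security.no-container</key><true/>"
    "<!-- -->"
    "<key>get-task-allow</key><true/>"
    "</dict>"
)

if __name__ == "__main__":
    print("checker approves:", checker_approves(DOC))
    print("enforcer grants: ", enforcer_grants(DOC))
```

In this sketch the checker treats everything from `<!--->` through the later `-->` as one long comment, so it only ever sees the harmless key and approves the document. The enforcer treats `<!--->` as a complete, empty comment and goes on to grant the key the checker never saw.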
