When all else fails, there’s amateur radio, and handwritten notes. Both ham radio and clear thinking helped rescue a mother and her son from a recent California camping trip gone wrong. While driving to the campsite in the Stanislaus National Forest, the 49-year-old mother had the not-uncommon experience of GPS leading her and her 9-year-old son on a merry chase, sending her down a series of forest roads. Eventually the foliage got too dense for the GPS signals to penetrate, leaving the pair stranded in the forest with no guidance on how to get out.

captcha (18 Articles)

Hackaday Links: January 5, 2025

Good news this week from the Sun’s far side as the Parker Solar Probe checked in after its speedrun through our star’s corona. Parker became the fastest human-made object ever (aside from the manhole cover, of course) as it fell into the Sun’s gravity well on Christmas Eve to pass within 6.1 million kilometers of the surface, in an attempt to study the extremely dynamic environment of the solar atmosphere. Similar to how manned spacecraft returning to Earth are blacked out from radio communications, the plasma soup Parker flew through meant everything it would do during the pass had to be autonomous, and we wouldn’t know how it went until the probe cleared the high-energy zone. The probe pinged Earth with a quick “I’m OK” message on December 26, and checked in with the Deep Space Network as scheduled on January 1, dumping telemetry data that indicated the spacecraft not only survived its brush with the corona but that every instrument performed as expected during the pass. The scientific data from the instruments won’t be downloaded until the probe is in a little better position, and then Parker will get to do the whole thing again twice more in 2025.

Protect Your Site With A DOOM Captcha

We all know that “Can it run DOOM?” is the first question of a hardware hacker. The 1993 first person shooter from id Software defined an entire genre of games, and has since been made open source, appearing on almost everything. Everything, that is, except a Captcha, those annoying “Are you a human” tests where we’re all expected to do a search giant’s image classification for them. So here’s [Guillermo Rauch] with a DOOM captcha, in which you must gun down three bad guys to proceed.

As a way to prove you’re a human we can’t imagine a more fitting test than indiscriminate slaughter, and it’s interesting to read a little about what goes on behind the scenes. It’s a WebAssembly application as you might have guessed, and while it’s difficult to shake that idea from the early ’90s that you needed a powerful computer to run the game, in reality it shows just how powerful WebAssembly is, as well as how far we’ve come in three decades.

We’d prefer a few different entry points instead of always playing the same level, and we were always more handy with the mouse than the keyboard back in the day, but it’s certainly a bit of fun. It’s worth noting that simply playing the game isn’t enough to verify your humanity — if you’re killed in the game before vanquishing the required three foes, you’ll have to start over. As the game is running at “Nightmare” difficulty, proving your worth might be a tad harder than you’d expect…

Need more DOOM? How about seeing it on hardware nobody would have believed in 1993?

Hackaday Links: September 29, 2024

There was movement in the “AM Radio in Every Vehicle Act” last week, with the bill advancing out of the US House of Representatives Energy and Commerce Committee and heading to a full floor vote. For those not playing along at home, auto manufacturers have been making moves toward deleting AM radios from cars because they’re too sensitive to all the RF interference generated by modern vehicles. The trouble with that is that the government has spent a lot of effort on making AM broadcasters the centerpiece of a robust and survivable emergency communications system that reaches 90% of the US population.

The bill would require cars and trucks manufactured or sold in the US to be equipped to receive AM broadcasts without further fees or subscriptions, and seems to enjoy bipartisan support in both the House and the Senate. Critics of the bill will likely point out that while the AM broadcast system is a fantastic resource for emergency communications, if nobody is listening to it when an event happens, what’s the point? That’s fair, but short-sighted; emergency communications isn’t just about warning people that something is going to happen, but coordinating the response after the fact. We imagine Hurricane Helene’s path of devastation from Florida to Pennsylvania this week and the subsequent emergency response might bring that fact into focus a bit.

Hackaday Links: March 24, 2024

Way to rub it in, guys. As it turns out, due to family and work obligations we won’t be able to see the next Great American Eclipse, at least not from anywhere near the path of totality, when it sweeps from Mexico into Canada on April 8. And that’s too bad, because compared to the eclipse back in 2017, “Eclipse 2: Solar Boogaloo” is occurring during a much more active phase in the solar cycle, with the potential for some pretty exciting viewing. The sun regularly belches out gigatons of plasma during coronal mass ejections (CMEs), most of which we can’t see with the naked eye because not only is staring at the sun not a great idea, but most of that activity occurs across the disk of the sun, where the background light obscures the view. But during the eclipse, we (oops, you) might just get lucky enough to have a solar prominence erupt along the limb of the sun that will be visible during totality. The sun has been quite active lately, as reflected by the relatively high sunspot number, so even though it’s an outside chance, it’s certainly more likely than it was in 2017. Good luck out there.

Social Engineering Chatbots With Sad-Sob Stories, For Fun And Profit

By this point, we probably all know that most AI chatbots will decline a request to do something even marginally nefarious. But it turns out that you just might be able to get a chatbot to solve a CAPTCHA puzzle (Nitter), if you make up a good enough “dead grandma” story.

Right up front, we’re going to warn that fabricating a story about a dead or dying relative is a really bad idea; call us superstitious, but karma has a way of balancing things out in ways you might not like. But that didn’t stop X user [Denis Shiryaev] from trying to trick Microsoft’s Bing Chat. As a control, [Denis] first uploaded the image of a CAPTCHA to the chatbot with a simple prompt: “What is the text in this image?” In most cases, a chatbot will gladly pull text from an image, or at least attempt to do so, but Bing Chat has a filter that recognizes the obfuscating lines and squiggles of a CAPTCHA, and wisely refuses to comply with the prompt.

On the second try, [Denis] did a quick-and-dirty Photoshop of the CAPTCHA image onto a stock photo of a locket, and changed the prompt to a cock-and-bull story about how his recently deceased grandmother left behind this locket with a bit of their “special love code” inside, and would you be so kind as to translate it, pretty please? Surprisingly, the story worked; Bing Chat not only solved the puzzle, but also gave [Denis] some kind words and a virtual hug.

Now, a couple of things stand out about this. First, we’d like to see this replicated; maybe other chatbots won’t fall for something like this, and it may be the case that Bing Chat has since been patched against this exploit. Second, if [Denis]’ experience stands up, we’d like to see how far this goes; perhaps this is even a new, more practical definition of the Turing Test: a machine whose gullibility is indistinguishable from a human’s.

MIT Researchers Can Read Closed Books (and Defeat CAPTCHA)

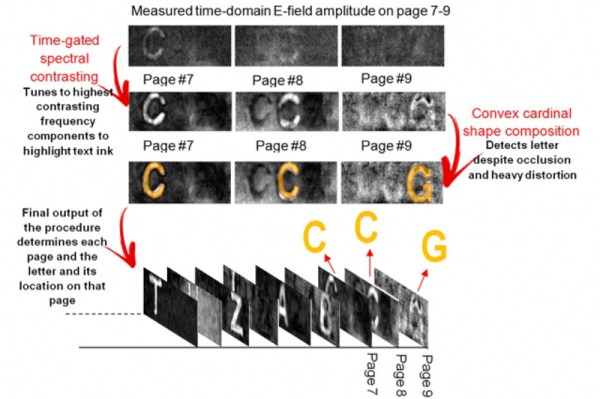

Ten years ago, MIT researchers proved that it was possible to look through an envelope and read the text inside using terahertz spectroscopic imaging. This research inspired [Barmak Heshmat] to try the same technique to read a book through its cover. A new crop of MIT researchers led by [Heshmat] have developed a prototype to do exactly that, and he explains the process in the video after the break. At present, the system is capable of correctly deciphering individual letters through nine pages of printed text.

They do this by firing terahertz waves in short bursts at a stack of pages and interpreting the return values and travel time. The microscopic air pockets between the pages provide boundaries for differentiation. [Heshmat] and the team rely on these pockets to reflect the signal back to a sensor in the camera. Once they have the system dialed in to be able to see the letters on the target page and distinguish them from the shadows of the letters on the other pages, they use an algorithm to determine the letters. [Heshmat] says the algorithm is so good that it can get through most CAPTCHAs.
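The role of travel time can be illustrated with a little arithmetic. What follows is only a simplified time-of-flight sketch, not the MIT team's algorithm: it assumes each echo comes from a single flat boundary, that the pulse moves at the speed of light divided by an assumed refractive index, and that boundaries repeat at a made-up page pitch of 100 micrometers. The function names `echo_depth` and `nearest_boundary` are ours, chosen for illustration.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s


def echo_depth(round_trip_s: float, refractive_index: float = 1.0) -> float:
    """Depth of a reflecting boundary, given a pulse's round-trip time.

    The pulse travels down to the boundary and back, hence the factor of 2.
    refractive_index is an assumed average value for the stack of pages.
    """
    return C * round_trip_s / (2.0 * refractive_index)


def nearest_boundary(depth_m: float, page_pitch_m: float) -> int:
    """Index of the page boundary closest to a depth (1 = first gap below the surface)."""
    return round(depth_m / page_pitch_m)


if __name__ == "__main__":
    PAGE_PITCH_M = 100e-6  # hypothetical spacing between air gaps, not from the article

    # Synthesize the round-trip times we would expect from the first three gaps,
    # then recover which gap each echo came from.
    for k in (1, 2, 3):
        round_trip = 2 * k * PAGE_PITCH_M / C
        depth = echo_depth(round_trip)
        print(f"echo after {round_trip * 1e12:.3f} ps -> boundary {nearest_boundary(depth, PAGE_PITCH_M)}")
```

With these assumed numbers the echoes from neighboring pages arrive less than a picosecond apart, which gives a feel for why short bursts and precise timing matter for telling one page from the next.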

The most immediate application for this technology is reading antique books and other printed materials that are far too fragile to be handled, potentially opening up worlds of knowledge that are hidden within disintegrating documents. For a better look at the outsides of things, there is Reflectance Transformation Imaging.
