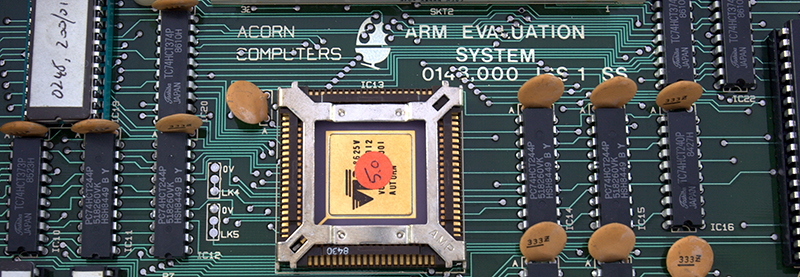

By all accounts, the ARM architecture should be a forgotten footnote in the history of computing. What began as a custom coprocessor for a computer developed for the BBC could have easily found the same fate as National Semiconductor’s NS32000 series, HP’s PA-RISC series, or Intel’s iAPX series of microprocessors. Despite these humble beginnings, the first ARM processor has found its way into nearly every cell phone on the planet, as well as tablets, set-top boxes, and routers. What made the first ARM processor special? [Ken Shirriff] posted a bit on the ancestor to the iPhone.

The first ARM processor was inspired by a few research papers at Berkeley and Stanford on Reduced Instruction Set Computing, or RISC. Compared with the Intel 80386 that came out the same year as the ARM1, the ARM had only a tenth of the number of transistors, used one-twentieth of the power, and implemented only a handful of instructions. The idea was that using a smaller number of instructions would lead to a faster overall processor.

This doesn’t mean there isn’t interesting hardware on the first ARM processor; for that you only need to look at this ARM visualization. In terms of silicon area, the largest parts of the ARM1 are the register file and the barrel shifter, each of which has a very important function in this CPU.

The first ARM chip makes heavy use of registers – all 25 of them, holding 32 bits each. Each bit in a single register consists of two read transistors, one write transistor, and two inverters. This memory cell is repeated 32 times vertically and 25 times horizontally.

The next-largest component of the ARM1 is the barrel shifter. This is just a device that allows binary arguments to be shifted to the left and right, or rotated any amount, up to 31 bits. This barrel shifter is constructed from a 32 by 32 grid of transistors. The gates of these transistors are connected by diagonal control lines, and by activating the right transistor, any argument can be shifted or rotated.
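To make the shift and rotate operations concrete, here is a minimal software sketch of what a 32-bit barrel shifter computes. The function names `lsl`, `lsr`, and `ror` are chosen for illustration only; the real hardware does this with the transistor grid described above, not with arithmetic.

```python
MASK32 = 0xFFFFFFFF  # the ARM1 works on 32-bit words


def lsl(value, amount):
    """Logical shift left: bits pushed past bit 31 are dropped."""
    return (value << amount) & MASK32


def lsr(value, amount):
    """Logical shift right: zeros enter from the top."""
    return (value & MASK32) >> amount


def ror(value, amount):
    """Rotate right: bits leaving bit 0 re-enter at bit 31."""
    amount %= 32
    value &= MASK32
    return ((value >> amount) | (value << (32 - amount))) & MASK32


print(hex(lsl(0x80000001, 1)))  # 0x2
print(hex(lsr(0x80000001, 1)))  # 0x40000000
print(hex(ror(0x80000001, 1)))  # 0xc0000000
```

The difference between a shift and a rotate shows up in the top and bottom bits: the shift discards them, while the rotate wraps them around to the other end of the word.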

In modern terms, the ARM1 is a fantastically simple chip. For one reason or another, though, this chip would become the grandparent of billions of devices manufactured this year.

Programming ARM gives me cancer. It’s just like browsing /b/.

I’m a die hard x86 coder and gamer, other architectures for me are just pure BS.

Could you elaborate please?

Yeah, it’s ARM rubbish, that’s why it managed to be the leading mobile processor

^Probably not.

That’s funny. I have held the exact opposite sentiment ever since having access to an Acorn Archimedes with an Intel 80486 coprocessor at college. The ARM was the programmer’s lipstick to the ‘486 pig. (And it could run Windows 3.1 in a window.)

I prefer MIPS though and quite like Sparc (from undergraduate CS). My first professional w/s was a PARISC based Apollo. Nice machine but something like 20x the cost of a PC at the time.

Ditto. Of course, since it was much easier to write an optimizing C compiler for ARM, you tended not to do much ASM to begin with. Code in C, profile, and then hand optimize the few bits that actually need it.

The secret to purchasing Unix workstations “back in the day” was to get them used. I worked for the academic computing department back when I was in college (late 80s/early 90s) and got to be good friends with our SGI service engineer. He hooked me up with an R3000 Indigo in ’93 for about $3k. A chunk of change to be sure, but it did include a 20″ display, and the box did go for about $20k to the previous owner two years earlier.

Going from 68K assembly to 386 assembly is like a root canal that just won’t end.

RISC processors really never were meant to be programmed by humans. They were meant to be programmed by compilers which would optimize things like register usage. I’m a very old skool guy myself, learned on the 8080A and transitioned to x86 but I do understand the philosophy at work here and I have to admit it’s elegant.

Love doing assembly on ARM. Not mentioned above is that the barrel shifter will shift a value in a register by any number of places in 1 cycle, and can be combined with any other instruction. Multiplication and all kinds of cool tricks become only a couple instructions if not just one. Also in pre-thumb ARM every instruction is conditional – say goodbye to all those branch instructions to jump over one instruction. Also, everything can include pre or post increment or decrement. So simple and so clever! Sure made the 65816 look ridiculous. If [papal stick] had used 6502 in the early days then ARM would be very natural and x86 would be goofy.
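As a rough sketch of the "multiplication in one instruction" trick mentioned above, the following models an ARM-style add or reverse-subtract whose second operand passes through the barrel shifter first. The helper names (`add_shifted`, `rsb_shifted`, `times5`, and so on) are hypothetical and only mirror the general form `ADD Rd, Rn, Rm, LSL #k` and `RSB Rd, Rn, Rm, LSL #k`; this is not cycle-accurate in any way.

```python
MASK32 = 0xFFFFFFFF  # keep results in 32 bits, like a register would


def add_shifted(rn, rm, shift):
    """Model of ADD Rd, Rn, Rm, LSL #shift: Rd = Rn + (Rm << shift)."""
    return (rn + ((rm << shift) & MASK32)) & MASK32


def rsb_shifted(rn, rm, shift):
    """Model of RSB Rd, Rn, Rm, LSL #shift: Rd = (Rm << shift) - Rn."""
    return (((rm << shift) & MASK32) - rn) & MASK32


def times5(x):
    return add_shifted(x, x, 2)  # x + 4x, one instruction


def times7(x):
    return rsb_shifted(x, x, 3)  # 8x - x, one instruction


def times10(x):
    # Two instructions: multiply by 5, then shift left by one place.
    return (times5(x) << 1) & MASK32


print(times5(7), times7(6), times10(7))  # 35 42 70
```

Multiplying by a constant of the form 2^k + 1 or 2^k - 1 therefore needs no multiplier at all, which is the kind of shortcut the comment is describing.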

The 32 bit National Semi part is pretty cool. Registers are all in RAM like stacks in C so context switching is fast and every task can have a full set of everything. At least that is what I recall from the data books. I have an NIB developers kit I picked up at the little Fry’s in Sunnyvale in about 1986. The Google would know, but I think it failed because National made a set of boards to fit the PDP-11 family as the main reason to buy them and DEC took legal action of some sort. Not sure, but I remember something like that.

Some time before 1990, VLSI, who did the early ARMs for Apple, I think for an ARM based Apple II prototype (wish that had been a green light) and the Newton experiments. The VLSI ARM7500 is like a PC and they made a motherboard that had PC slots and all the connectors. Here are some photos of the 7500 and the NS32000 http://regnirps.com/Apple6502stuff/arm.htm Don’t buy anything on that site! It is ancient and being moved and revised/modernized.

Not positive, but I think you can trace the decline of RISC for big systems to the Pentium Pro group in Oregon developing efficient out-of-order execution and blowing everyone away, including their fellow Silicon Valley Intel researchers.

I feel the same way about Intel. I see ARM as the future and don’t want to spend any time on low level x86.

Thumb, Thumb2, interworking, super-interworking … ugh. I think much of that has *finally* gone away now that there has been some convergence.

RISC had immediate benefits even at the time, but I believe those benefits have grown as compilers have matured and gotten better at generating efficient code. The increased “granularity” of RISC operations as opposed to CISC ones has made more room for optimization. And RISC designs usually have vastly more registers, which leads to fewer memory operations (this doesn’t count quite so much when RISC registers are implemented as a memory window, as I believe is/was the case with SPARC).

Not only have compilers improved, but also the CISC CPUs have been optimized to handle the instructions quickly.

At the same time, modern ARM Cortex has become much more complex than the first ARM1 that this article is talking about.

Absolutely true to be sure, but ARM parts are still much simpler and use less power than their CISC counterparts.

Also, the Cortex M0 only has about 12,000 gates IIRC, so that’s only about twice the number of transistors as the original ARM1.

I program x86 and ARM in C, and that makes it equally simple :)

The complexity of a modern Intel CPU isn’t really due to CISC. It’s the superscalar, out of order execution, branch prediction, register/memory renaming, exception handling, and many other modern features that’s making stuff complicated (and fast). And of course, the floating point units aren’t exactly small or simple either.

If you wanted to make an ARM chip as capable as an i7, it would likely suck just as much power and have similar complexity, because you’d need to do all the same things. The only difference would be a tiny piece of the chip where you did instruction decoding.

When was it, the Pentium era I think, that Intel switched to RISC and microcode? The “complex” machine instructions go through the microcode and are turned into a collection of simpler instructions. Didn’t last long. Then there is the whole IA-64 line of Itanium chips. Totally different RISC code, has to emulate the old x86 instruction set. Which means the compilers need a ton of work so code could run natively on the IA-64 instruction set. On the other hand, the AMD line of x86-64 kept the same old core and bolted on the 64-bit instructions, so already compiled and distributed software ran just as fast and the stuff targeting the new abilities of the chip could be added later.

Personally, I prefer to code in C++ or higher level languages on x86-64, and C or lower on ARM. In straight C or C99, I always feel that there is pretty close to a one instruction per line of code if the code is written right; and that feels more natural on a chip where I know what those instructions are. x86-64 is so complex that even “++i; ” for a counter loop could be in the asm right after the previous instruction, or it might get filed away earlier or later depending on all of the out-of-order stuff.

Modern ARMs are all superscalar, and out of order execution is what separates the top two tiers. You can even get big.LITTLE chips with quads of both types – hardkernel.com will sell you a “raspi”-like SBC which runs Ubuntu quite a lot faster than the Broadcom chips will.

Not as cheap as a raspi, obviously, but even more hacker-friendly.

Not Raspberry Pi or Samsung Galaxy S6 Ancestor, but iPhone? So what exactly makes this more an iPhone ancestor vs any other ARM device?

I think they just meant ‘one device everyone has heard of/may not have, but can identify with’.

Gets more clicks :)

Yeah, it’s not like Apple invented the ARM processor. It was a collaboration between Acorn, Apple, and VLSI. I guess VLSI wouldn’t count, as you don’t see TSMC cited as ‘creating’ samsung’s newest Flash or AMD’s newest cards, though. Oh, and Acorn doesn’t exist any more.

So yeah, of the companies that created ARM, only Apple still exists. HORRIBLE CLICKBAIT AND THE AUTHOR SHOULD BE ASHAMED.

Well ARM610 was a collaboration with Apple, used in their Newton… ARM1 was entirely without Apple’s help

The division of Acorn which developed ARM — conveniently named ARM — is very much still around, although with an altered acronym. I think UC Berkeley also is knocking around somewhere.

What involvement did Apple have with the ARM1 (the subject of this article)?

Apple sent the Acorn guys to the Western Design Center to look at 65816. They saw what a short term useless processor it was and decided they could do better on their own. Part of Apple was looking for something better for Apple II and part was trying to kill the Apple II line.

@Comedicles, “They saw what a short term useless processor it was and decided they could do better on their own”

That’s not what I heard Steve Furber say at the BBC Micro @30 event. What he said was that they went to the US and asked Intel whether they could use the core of an 80286, but with their own bus design. Intel refused. Then they visited WDC and realised that if one person can design a whole CPU by himself, then they could certainly do the same. Steve Furber never said the 65816 was a useless short-term CPU. Of course, it wasn’t very short term, since it’s still in production 30 years later.

One of the Acorn design goals was to create a high bandwidth system, hence the 32 bit bus on the ARM. The 65816 is still restricted by an 8 bit databus, so it wasn’t very good. Yes, it’s still being produced, but nobody’s buying it.

@comedicles No, Apple were not even in the picture when the Acorn people went to WDC. Apple got involved much later. Acorn were looking for a wider CPU for their own post-6502 offerings, had tried the 32000 and were not impressed by the memory bandwidth efficiency. In a time before caches, memory bandwidth was seen to be the limiting factor. Apple were not interested in the ARM cpu until the ARM cpu existed.

(Accidentally flagged your comment, oops)

Also phones have driven the ARM market more than probably any other application. They had few inroads to set top boxes, unlike ST20 and MIPS for example.

STM used to do set-top boxes based on SH4 and Broadcom did based on MIPS.

Nowadays both use ARM for most of their set-top boxes.

ST20TP2, ST20TP3, ST20TP4, STi5512, STi5514, STi5516, STi5510 from STM were all ST20 (Transputer). They accounted for something like 25 or 30 SD boxes in the UK. MIPS were used in 4 boxes (NEC EMMA/EMMA2), ARM just had 1 box. I’ve heard of SH4 and PowerPC boxes but never here. When things went high def they went MIPS in a big way. 3 boxes used MIPS and 1 used ARM, but in terms of box numbers MIPS boxes shipped over ARM probably more than 10:1. IIRC STM solutions now mostly use MIPS but we don’t see those here any more, Broadcom mostly still use MIPS and Conexant use ARM. BT’s DSL service used ARM last I knew. MIPS are in the only boxes now in production for pay sat TV and they are from Broadcom. This may be quite region specific.

I can’t prove it, but I would posit that if the iPhone (counting all generations) is not the single largest consumer of ARM processors in history, it’s easily in the top 10. Last time I checked, the iPhone was closing in on three quarters of a billion units.

Most hard disc controllers are ARM based that I’ve heard about. That could compete.

Every Android phone also has an ARM processor and there are something like three android phones for every iPhone worldwide.

Around the release of the first iPhone, someone estimated that it actually had ~6 ARM cores. Of course, most of them were little dinky cores in various dedicated ASICs.

Certainly the SoC in iPhone (and smartphones in general) account for a huge number of ARM cores, but there are probably even more under the covers of all sorts of devices.

I can answer this… ARM was used in the last of the Psion devices. Epoc32 was the first(ish) mobile phone o/s, I can still remember the thrill of epoc32 booting for the first time, Symbian launching, and reading the spec for “Linda”, which you’d recognise as being the definition of the smartphone… RIP Symbian.

I have (and still use) a Psion 5mx, which is an ARM handheld with a surprisingly good keyboard. It can write to CF card storage, which I use to transfer text files from it to modern systems.

I used to have Psion 5MX booting Debian Woody from CF, best pocket computer ever… connected to the internet using IR and some Sony Ericsson phone (T68i if I remember). those old ages using GPRS :D

This is very interesting to me, and after some time of personal study, ‘feel’ I am getting close to the heart of the concept– But, to admit and be honest, the concept of hardware ‘registers’ still confounds me a bit… A ‘physical’ variable, a pre-defined tagged ‘switch’ of sorts ? Or it is probably my first suggestion that relates to the confusion as you don’t have to have ‘memory’, to have a register, but, yet it is kind of like that…

I thought some savvy HaD user might be able to clarify and provide a better analogy around this point.

All of your data (variables etc) has to live somewhere in a physical piece of memory. There’s no magic going on, a computer’s just a machine and data has to go somewhere. When you allocate a variable, you’re just attaching a certain significance – from your point of view, a name – to some location in that memory, so that you can access it when you need it.

The rub is that making RAM big — so you can fit all your stuff in it — also makes it slower. A register is a much smaller piece of memory inside the processor, big enough for perhaps one variable (depending on your data type), but which you can access much more quickly.

A modern CPU has a whole hierarchy of different types of memory, from the very small and fast registers to the slow but large RAM, and the intermediate levels are called cache. Your compiler and some clever hardware in the CPU conspire to hide all this complexity from you, giving the illusion (most of the time) that your memory is both large and fast, when really it can only be one of those things.

It should be noted that, while obviously there are physical limits (more delay as you get further from the CPU, larger memory spaces needing more addresses, etc), the key limit is money. You probably could have 16GB of RAM that was as fast as the CPU registers, or at least the L1 cache, but it would be insanely expensive. It’s the same reason why you put up with having a hard drive, which is super slow compared to DDR3 RAM (4GB/s vs 100MB/s write), but also super cheap ($5/GB vs $0.05/GB). This image shows the hierarchy of memory: http://computerscience.chemeketa.edu/cs160Reader/_images/Memory-Hierarchy.jpg

“Physical variable” is a good way to think of it. In assembly code, the registers have names that never change. You can load values into the registers from code, from memory, from other registers. You can manipulate the values in the registers with arithmetic. You can also compare values in registers. Every operation requires putting values into a register and manipulating it there. Furthermore, registers can be used as pointers, indices, etc.

Luckily, compilers for higher level languages handle all these details. So, when you write i++, some compilers might actually store the value of i in memory and actually load it into a register each pass through the loop to increment it. But, optimizing compilers will pick a register to use for i and simply increment the register, without involving physical memory. Involving memory requires using a pointer to the memory location (in a register), and then loading the value from memory into another register, manipulating the value while in the register, and then saving the value back into memory (using the other register that holds the value of the memory location). Meanwhile, incrementing the register takes a single atomic instruction (and basically every processor has physical optimizations that make incrementing super fast).

If you need i later but you want your loop to be fast, you could increment through i in a register (fast) and then save the final value to memory (only one memory operation outside of the loop), and then you can retrieve i later on and use that register for some other purpose in the meantime. … The main idea is that registers are where all the magic happens, while memory is just storage.
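The difference between keeping i in memory and keeping it in a register can be sketched with a toy model that simply counts memory accesses. Everything here is an illustrative assumption: the dictionary stands in for RAM, the local variable `reg` stands in for a CPU register, and the function names are made up for this example.

```python
def loop_counter_in_memory(n):
    """Unoptimized style: load i, increment it, store it back, every pass."""
    memory = {"i": 0}          # stand-in for RAM
    mem_ops = 0
    for _ in range(n):
        reg = memory["i"]      # load from memory into a register
        mem_ops += 1
        reg += 1               # the arithmetic happens in the register
        memory["i"] = reg      # store the result back to memory
        mem_ops += 1
    return memory["i"], mem_ops


def loop_counter_in_register(n):
    """Optimized style: i lives in a register for the whole loop."""
    memory = {"i": 0}
    reg = memory["i"]          # one load before the loop
    mem_ops = 1
    for _ in range(n):
        reg += 1               # no memory traffic inside the loop
    memory["i"] = reg          # one store after the loop
    mem_ops += 1
    return memory["i"], mem_ops


print(loop_counter_in_memory(1000))    # (1000, 2000)
print(loop_counter_in_register(1000))  # (1000, 2)
```

Both versions end with the same value of i; the only thing that changes is how often the slow path to memory is used.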

In some cases, the distinction between registers and memory is blurred. Take, for instance, AVR microcontrollers. Their registers share the same address space as the I/O registers. It’s a different memory space than the flash space where the code lives and also the RAM (this is a modified Harvard architecture, after all), but it is an address space shared with other functions (namely all of the I/O).

I tend to think of the registers as a bucket, or the surface of my desk. I could go out to the mailbox every time I wanted a single envelope (the HD), I could keep a pile of letters that need to be worked on in a bin near the front door (RAM), and I can keep a small pile that I know isn’t junk near my desk. But if I need to really work at one or two items, they’ll be on the surface of my desk. Even without memory (all the letters come in one at a time as machine code instructions would) I would keep the one or two pieces of data that I need to work on directly right on my desk while the next instruction of what to do to that data showed up.

No, the transistors of the register aren’t necessarily any different than the ones that make up the different levels of cache, but they are close to “where the instructions happen” so they are faster to access. The limited space available on the chip itself determines how many registers you can have.

As for how to really grok a register, best I can recommend is programming in assembly. I learned MIPS asm in uni (couldn’t tell you what version, it was emulated on x86 PCs), and got a very good low level understanding of how the CPU works. If you want to focus on x86, I’ve been reading http://www.amazon.com/Assembly-Language-Introduction-Computer-Architecture/dp/019512376X and, while the SASM C library is nowhere to be found, the descriptions of how the hardware all clumps together are easy enough to understand. Won’t help if your problem is just registers, and it won’t help if you really need to write code that is calling BIOS for system level stuff; it’s all a high overview of the very tiny parts of the CPU.

Don’t confuse a CPU register with a GPIO register. They have some similarities but the CPU registers do not have a memory address.

A register in a CPU is a set of logic gates that can hold a word of data, whatever the system’s word size is. Data is transferred (read) from memory to the register or from the register to memory (written). There are usually several of the registers, like the Arithmetic Logic Unit (ALU) or the Program Counter (PC), maybe a Stack Pointer (SP), Status Register that shows the result of an operation was true or false, positive or greater than and such. These registers have extra circuitry to perform logical functions on the data, like AND, OR, NOT, shift, compare, add, set a flag if it is zero.

Even chips that have the “registers” in memory have to transfer from RAM to an ALU and back to RAM in order to do anything. In x86 you see MOV instructions. Move memory contents from here to there. The data still passes through the ALU. In ARM you do it in two parts. Move to a register and then move from the register (or to a different register).

In ARM and RISC in general, there are a bunch of general purpose registers in the CPU/ALU and the ALU can operate on any of them. It is like a little patch of 16 memory locations where you stuff things if you need to operate on them or on each other. ARM has 16 you can use at a time. Other RISC chips had 64 or more ALU registers.

In comparison, 6502 (Apple II, BBC Micro, Commodore 64) has 1 ALU register and 2 (X and Y) that can be involved in operations plus PC and stack pointer and the usual house keeping. 6502 is RISC like in that it is what is called a One-Address Machine, or what some awkwardly call a load-store architecture. You read from memory to a register with an instruction and write to memory with an instruction. 8080/x86 (IMSAI 8080, IBM PC) is a two-address architecture. There can be a source and destination specified in an instruction. It still does the same things but to a programmer it is as if you can move memory contents directly from one location to another, which isn’t true. The abstraction of the instruction obscures the process and makes it a bit harder for a programmer to conceptualize what is really happening.

A register is a row of flip-flops, each of which is made out of a bunch of logic gates, with some of their outputs connected to their inputs so they can “remember” their state. This is what “SRAM” is made of, “DRAM” uses fewer transistors and stores data as charge on a capacitor (that slowly leaks and needs to be refreshed every so often) so more data can be fit in.

Getting back to the flip-flops, they are usually either “latches”, or “edge triggered”, both of which refer to how they are set when data is “written”.

They essentially just serve as toggle switches whose output can be set when needed, to hold information.
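For anyone who wants to see the "outputs connected to their inputs" idea in action, here is a small sketch of a textbook SR latch built from two cross-coupled NOR gates, simulated in software. This is a generic illustration, not the actual ARM1 register cell, and the class and method names are made up for the example.

```python
def nor(a, b):
    """Two-input NOR gate on 0/1 values."""
    return int(not (a or b))


class SRLatch:
    """One bit of storage: two NOR gates feeding each other."""

    def __init__(self):
        self.q = 0       # stored bit
        self.q_bar = 1   # its complement

    def step(self, set_, reset):
        # Re-evaluate the feedback loop until the outputs stop changing.
        for _ in range(4):
            q = nor(reset, self.q_bar)
            q_bar = nor(set_, self.q)
            if (q, q_bar) == (self.q, self.q_bar):
                break
            self.q, self.q_bar = q, q_bar
        return self.q


latch = SRLatch()
print(latch.step(1, 0))  # 1: set the bit
print(latch.step(0, 0))  # 1: inputs released, the bit is remembered
print(latch.step(0, 1))  # 0: reset the bit
print(latch.step(0, 0))  # 0: still remembered
```

A row of 32 such cells, written and read together, is one way to picture a 32-bit register.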

How they are used and exposed to the programmer is defined in the Instruction Set Architecture of the machine.

There’s a good, intro level book called “But How Do It Know?” that I’d refer you to, if you’d like to pursue this further.

The register file in the ARM doesn’t use real flip-flops. They are made from two weak cross coupled inverters. A bigger transistor is used to change the state by brute force.

A register is a memory location _inside_ the processor. If your variable lives in a register, it is accessed with maximum speed. Otherwise, to access your variable you need to wait until the data stored in your variable is transported from the memory to the processor core. This will take _a_lot_ of time compared to a register access. Please look at the table from this Peter Norvig article:

http://norvig.com/21-days.html

You might be amazed by how long it will take to fetch the data from places other than registers.

The reason ARM was so successful was the licensing of the core. That allowed other companies to take the core and create their own chips with additional support hardware around it for custom/low cost applications or to implement the core in an FPGA. At the time everyone else was selling “family of chipsets” targeted at generic markets. This distinction was very important in the case of cell phones where cost is extremely important even down to fractions of a cent.

That fits in with my earlier comments about compiler development. By making the machine language widespread across architectures, they effectively put all of the compiler development wood behind a single arrowhead.

^across manufacturers, not architectures

The main reason was its power efficiency, and that from its beginning.

The core licensing success came later after their cooperation with Apple, DEC, and Intel.

The ARM (Acorn/Advanced RISC Machine) processor was designed in some respects as a 32-bit replacement for the 8-bit 6502 processor, used in the Acorn BBC Micro. It had the stated design aim to run BASIC code as fast as machine code ran on other architectures, the comparison being with the IBM PC x86. Improving the power efficiency was something pushed later, through things like the AMULET project, but the RISC architecture generally proved easier to make lower power than the CISC x86. This low power use made it very attractive for portable devices. In the Raspberry Pi the Broadcom SoC used supposedly came out of set-top boxes, and in one story came from having a powerful video processor, then realising a corner of the chip could be used to sneak an ARM processor in, rather than the CPU being a separate chip. The SoC on the latest iPhone is a 64-bit ARM, for reasons that are not completely obvious…

The reason ARM caught on is the same reason Microsoft made fools of IBM. Licensing.

Microsoft held onto the licensing rights for both DOS and Windows, they didn’t care WHAT you ran it on or who made the system, if you were willing to pay for a copy, ENJOY. This is something NOBODY else was even CONSIDERING in the 1980’s or even most of the early 1990’s!

No one company “makes” ARM processors. You license the design and make it however you want to make it. You hand ARM Holdings a token downpayment, with a promise of a reasonable skim off the top, and boom you can make as many of them as you can sell in whatever configuration fits your needs. Compare this to Intel or AMD where, thanks to past issues (that let AMD and Harris outsell Intel in the early ’80s on Intel’s design), these days if you want one of their processor designs, you’re going to them to have it manufactured!

If you are already a chip manufacturer but lack the resources to have a design team create a processor architecture from the ground up, having an organization like ARMH say “just license our reference design” is ridiculously appealing, which is why Samsung, Freescale, Broadcom, Allwinner, Applied Micro, nVidia, Texas Instruments and even Apple license flavors of the ARM architecture to put their own spins on it.

ARMH operates on a brilliant business model! They design the architecture, but leave it up to others to manufacture it under a license.

As to the performance side, there is such a thing as “fast enough” — I’ve got a friend who’s always harping on how an ARM7 at 1 GHz doesn’t even deliver the computing power of a 450 MHz Pentium II, and I have to keep pointing out that processing per clock is NOT what modern ARM is about. It’s processing per WATT.

Which is where some of the Atom offshoots from Intel — like the J1900 I put into my workstation (knocking $30/mo off my power bill since that rig is on 24/7) are very quickly closing that gap. See how the short-lived netbooks so quickly went from arm+linux to intel+windows, to the point that at the peak of netbook sales nobody gave a flying purple fish about the ARM ones.

… but that’s been Intel’s game all along, they just outlast their competitors — they pick one, they figure out what they are good at, then they stay the course to bury them. NEC, Harris and Fujitsu couldn’t keep up with the design specs needed to compete with Intel, and it’s a miracle AMD made it out of the pre-Pentium era and they only did so by being cheaper and doing a few innovations of their own. A64 being the pinnacle of that, as well as lowering design costs by focusing on processing per clock more than clock speed as Intel did in the P4 era.

Since Yonah dropped all those years ago, Intel has burned past AMD on the per clock measurement, and has eliminated the processing per dollar gap at both the high and the low end. With the focus on low power making things like the 4790 consume two thirds the power of a quad Core 2 from three processor generations back, or the low end surface mounts sipping power like an ARM so that you are seeing Intel powered tablets at the checkout line at grocery stores…

… well, it’s getting kind of clear who they’re setting their sights on next.

Yeah I’m pretty sure the iPhone’s(itouch/ipod) ancestor (and device it was copied from) was the iPaq and similar PDA devices, many of which used the ARM processor long before Apple decided to get in the game..Let’s bring some noise and love to the device which utilized this chip before having an Apple ‘was a thing’ (for some..) and didn’t mean ya had a portable device…

The iPaq came 10 years later than the Newton MessagePad!

The thing about Apple is they don’t need to be first. When you ask an engineering manager at Apple when they will bring out a PDA, phone, car, etc., they say “When we can do it right”. Maybe not such a bad way to work. The company has over $600,000 income per employee!

The Symbolics Sunstone architecture was also RISC but the company stumbled and fell, cancelling it as it was being taped out – else you might all be coding in LISP now… (never hire a CEO to asset strip your IP)

DEC Alpha

RISC-V is going to take over the world within 10 years. Just wait and see.