Your quad-copter is hovering nicely 100 feet north of you, its camera pointed exactly on target. The hover is going so well that all the RC transmitter controls are in the neutral position. The wind picks up a bit and now the ‘copter is 110 feet north. You adjust its position with your control stick, but as you do the wind dies and you overshoot the correction. Another gust pushes it away from target in more than one direction as frustration passes your lips: ARGGGHH!! You promise yourself to get a new flight computer with position hold capability.

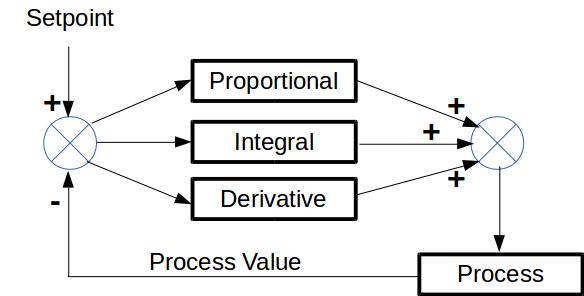

How do multicopters with smart controllers hold their position? They use a technique called Proportional – Integral – Derivative (PID) control. It’s a concept found in control systems of just about everything imaginable. To use PID your copter needs sensors that measure the current position and movement.

The typical sensors used for position control are a GPS receiver and an Inertial Measurement Unit (IMU) made up of an accelerometer, a gyroscope, and possibly a magnetometer (compass). Altitude control would require a barometer or some other means of measuring height above ground. Using sensor fusion techniques to combine the raw data, a computer can determine the position, movement, and altitude of the multicopter. But calculating corrections that will be just right, without over- or undershooting the goal, is where PID comes into play.

We won’t discuss the sensors or fusion in this article, only the PID process since it is the widely applicable mainstay of control systems. We’ll use flying the copter manually to get an intuitive idea of how PID works. But keep in mind the example is illustrative and not 100% accurate since we humans do much more than simple PID when at the controls.

Let’s start with some terminology and symbol definitions for the math. The position where you want the copter to hover is the setpoint (SP). That’s 100 feet away. The current position is the process value (PV). That’s 110 feet after it drifted. The difference between the setpoint and the process value is the error (e). That’s 10 feet of drift. The output of the calculations is the control output (C), which in turn changes the control inputs to the system.

There are three parameters that control how a PID loop reacts to the error, one for each of the PID terms. These are called gains: Kp, Ki, and Kd for proportional, integral, and derivative, respectively.

Proportional Control

Once again you’ve got the copter hovering nicely at 100 feet away with no control inputs. The wind picks up and it’s now at 110 feet. You add control input to bring it back. How much input? What if it had gone to 120 feet? You’d probably add more input for 120 feet than 110 feet because you want to get it back to 100 feet quickly. That is proportional control. The farther the copter moves away, the more input you use to bring it back.

The math is simple. Calculate the error as the difference between the setpoint and process variable and multiply the result by the proportional gain:
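Using the symbols defined above, and taking the error as setpoint minus process value (the same sign convention the class code later in the article uses), the proportional step can be written as:

$$
e = SP - PV, \qquad C = K_p \, e
$$

For the drifted copter, e = 100 - 110 = -10 feet. The negative sign just means the correction points back toward you.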

The table and chart show the movement of the copter away from the setpoint and the recovery. Notice that the copter stabilizes at 106 feet and not the setpoint. This offset is inherent to the math of proportional control.

The spreadsheet implements a full PID process. To demonstrate only the proportional gain all that is required is to set the integral and derivative gains to zero which causes the integral and derivative terms to be zero.

Proportional gain by itself can be useful if the system doesn’t generate large errors. Typically systems require at least proportional and integral (PI) or proportional and derivative (PD) control.

Integral Control

You bring the copter back to 100 feet despite the wind blowing. Now the controls are no longer in a neutral position, but require some input to hold the distance at 100 feet. Bringing the copter back to the setpoint and holding it there requires the integral part of PID.

The integral looks at the history of the errors over time by maintaining a summation of the error. The integral equations require a variable, the summation (Si), which is the sum of the previous errors each multiplied by Ki. The proportional component is calculated as above and added to the integral component.
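In symbols, one way to write the PI update described above, where the arrow means the stored summation is updated on every cycle:

$$
S_i \leftarrow S_i + K_i \, e, \qquad C = K_p \, e + S_i
$$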

An alternate form, more typically shown in discussions, maintains a summation of the errors and multiplies the summation by Ki. This form leads to a problem, called a bump, if the value of Ki is changed while the PID is operating. This probably won’t happen in our systems but is an issue for long-running processes like those in a refinery.

Again, the chart shows the copter’s movement away from the setpoint and the recovery. Notice that the copter is stabilized in 9 seconds using PI whereas it required around 12 seconds using only P. The integral tends to get you back to the setpoint more quickly.

One potential problem with the integral is integral windup. If an extremely large error occurs or the setpoint is changed by a large amount the summation can build to a large value. This may result in a control value that exceeds the capabilities of a system. For a copter, the control value might attempt to drive the motors to 120% of their capability. Removing this accumulation can take a long time and can introduce overshoot, i.e. the copter returns to the 100 foot setpoint and keeps going. The two simplest ways of handling this are to either limit the value the summation can reach or to disable the integral until the process has stabilized. For our projects, limiting the summation is probably the easiest.
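As a minimal sketch of the first option, limiting the summation, the helper below clamps the running sum after each update. The function name and the `limit` parameter are hypothetical. In practice the limit would be picked from the usable output range of the motors.

```cpp
#include <algorithm>

// Adds Ki * error to the running sum, then clamps the sum to +/- limit so it
// cannot wind up beyond what the actuators can deliver. `limit` is a hypothetical
// tuning value, not something defined in the article.
double accumulateWithLimit(double integral_sum, double error, double ki, double limit) {
    integral_sum += ki * error;
    return std::max(-limit, std::min(integral_sum, limit));
}
```

The caller stores the returned value as the new summation, so a long stretch of large error can push the integral term only as far as `limit` in either direction.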

Derivative Control

Again you start bringing the copter back from its position at 110 feet but you don’t know the strength of the wind. As the copter begins to return you watch how quickly it is returning and increase the input if the copter is not returning as quickly as you like. You’re judging the amount of distance the copter is covering. Another way of stating this is you are looking at the change in the error. That’s the derivative of the error.

The calculation of the derivative introduces another variable, the previous error (ep). The difference between the current error and the previous error is multiplied by the derivative gain. The PD is calculated by adding together the P and the D value. The current error is saved as the previous error for use in the next cycle of calculations.
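Written with the same symbols, a sketch of the PD update described above (the time between cycles is ignored here):

$$
D = K_d \,(e - e_p), \qquad C = K_p \, e + D, \qquad e_p \leftarrow e
$$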

The chart shows the movement of the copter under PD control. Here again, with no integral, the copter does not return to the setpoint but stops at about 104.5 feet, an improvement over P only. The time to a stable position is again around 12 seconds.

Cycle Time

The next logical step is to show you a full PID but there is another factor to consider, the time between processing cycles. I left this out above for clarity. The equations above will work okay if the PID is processed at regular intervals, perhaps being driven by an interrupt. But if the interval is not constant an adjustment must be made to the integral and derivative calculations. This requires the use of the time elapsed between each PID calculation. This is the delta time (dt). After adding the dt factor, a minimal change, the PID control value is just the sum of the previous calculations:
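Reconstructed from the descriptions in the earlier sections, with the derivative taken as current error minus previous error as the Derivative Control section describes, the full update with dt would read:

$$
\begin{aligned}
e &= SP - PV \\
S_i &\leftarrow S_i + K_i \, e \, dt \\
D &= K_d \, \frac{e - e_p}{dt} \\
C &= K_p \, e + S_i + D \\
e_p &\leftarrow e
\end{aligned}
$$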

The integral is now multiplied by dt. As a result, the summation is now the error over a period of time. If the time in error is long the integral becomes larger to more quickly force the copter back to the setpoint. If the time is small only a small correction is added.

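Similarly, the derivative is divided by dt, which turns the change in error into a rate of change per unit time. With a dt of less than one second this also amplifies the derivative's contribution to the control value.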

The chart shows the full PID handling the copter deflection and return. Since the integral term is being used the copter returns to the setpoint and is stable at about 9 seconds. But it is reasonably close to the setpoint at 7 seconds, which is better than with the earlier approaches.

The dt I used is 0.5 seconds but when it was put into the chart the copter only moved to 105 feet. I doubled the speeds of the wind and copter so the movement was 110 feet to make it easier to compare all the charts.

PID Tuning

Setting up a PID for optimal control of a process is a black art. Adjusting the three gains is tuning. Determining good PID gains is difficult because they interact and not always in a predictable manner. There are a number of papers and discussions that suggest how to tune a PID. The concept of auto-tuning has been around as long as PIDs but there is no universal way of doing this.

If you look at the gains in the four charts above you’ll see that each of them uses different values. These are the optimal values I could obtain for each set of calculations by experimenting with different gains. I had two goals. First, to get the copter stabilized as quickly as possible. Second, to not overshoot the setpoint. The first is almost always the goal in tuning. The second is arbitrary but it helped limit the number of tests I needed to do. Some processes like the copter can tolerate overshoots. Others cannot and an overshoot could actually result in damage to the overall system.

PID Class Code

The code for a very basic PID class, at least enough to start you off, is a straightforward implementation of the equations. The class declaration is:

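    //-------------------------------------------------------------------------------------------------
    // Minimal PID controller: holds the three gains, the setpoint, and the running state.
    class PID {
    public:
        PID(const double p_gain, const double i_gain, const double d_gain, double setpoint);

        // Returns the control output for the latest reading; time_interval is dt since the last call.
        const double calculate(const double current_value, const double time_interval);

    private:
        double mProportionalGain;
        double mIntegralGain;
        double mDerivativeGain;
        double mSetpoint;

        double mIntegralSum {0};    // running sum of Ki * error * dt
        double mPreviousError {0};  // error from the previous call, used by the derivative
    };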

The class has just two public member functions. One is the class constructor to initialize the gains for the PID calculations and the setpoint value. The other performs the PID calculations.

The actual class code for the constructor and calculation is:

    //-------------------------------------------------------------------------------------------------
    PID::PID(const double p_gain, const double i_gain, const double d_gain, double setpoint) :
        mProportionalGain(p_gain), mIntegralGain(i_gain), mDerivativeGain(d_gain), mSetpoint(setpoint) {
    }

    //-------------------------------------------------------------------------------------------------
    // time_interval must be greater than zero because the derivative divides by it.
    const double PID::calculate(const double current_value, const double time_interval) {
        const double error = mSetpoint - current_value;

        const double proportional = mProportionalGain * error;

        // Accumulate Ki * error * dt so a later change to Ki does not rescale the history.
        const double integral = error * mIntegralGain * time_interval;
        mIntegralSum += integral;

        // Rate of change of the error: current minus previous, per unit time.
        const double derivative = mDerivativeGain * (error - mPreviousError) / time_interval;
        mPreviousError = error;

        return proportional + mIntegralSum + derivative;
    }

I used this code to duplicate the results from my spreadsheet but wouldn’t turn it loose on a robot without more work. A more useful set of code is the Arduino PID library developed by [Brett Beauregard]. In his project blog, [Brett] explains the code, especially the changes introduced to make it more robust and useful. An interactive PID simulation based on [Brett’s] code is on [Raul’s] Coding Laboratory blog.

We’ve mainly considered the disruption to a system caused by environmental factors. The PID is also useful in changing the actions of a system. We positioned our copter with a setpoint of 100 feet away from us. The PID, actually multiple PIDs, interact to keep it at that location. The multiple PIDs are controlling the tilt, rotation, height, and bearing, correcting for errors as they occur. If we decided the copter should be 120 feet from us and 20 feet higher the controller may actually change the setpoints and let the PID correct the error to the new setpoint.

The PID is a workhorse for controlling systems that any hacker working with robots or other interactive systems should know. The example code here is just a starting point to provide a feel for how the algorithm works.

Are there any predictive control algorithms out in the open for quadcopter control? PID is nice and stable, yet slow. Since the behavior of the craft can be estimated from current conditions, a predictive algorithm might be higher performance…

If it’s slow, then you’re doing it wrong.

I believe that taulabs was working on an LQR controller for quadcopters a little while ago.

Well you can make it arbitrarily fast (within the stability region) as long as you permit enough overshoot.

Exactly. And MPC can get around to some degree.

I believe Kalman filters are thrown around in quadcopter controls fairly regularly.

Kalman filters and others, like complementary filters, are used as part of the sensor fusion process. I’d be surprised if they are used in PID since their purpose is to combine the readings from multiple sensors to offset the errors caused by the sensors.

True, but nothing to do with the flight regulation.

This is a pretty nice introduction to the concept of PID controllers. I do hope anyone reading this understands that this is all a little simplified (although none of it is wrong).

What I think is missing is the “why” of the derivative controller: it’s there to provide dampening! If you try to increase the proportional and integral gains to get it to respond more quickly, the system will, sooner or later, start to oscillate. The derivative controller is used to dampen this oscillation, without drastically slowing the controller. It allows you to use more aggressive gains. It does this by actually reducing the actuator value if the error is decreasing quickly, to prevent overshooting, and increases the actuator value if the error is increasing.

One problem with derivative control though, is that it tends to amplify sensor noise if the gain is high or the sensor is particularly noisy. It’s pretty common to low-pass filter the error value before calculating the derivative, to eliminate most of the sensor noise and quantization noise before calculating the derivative.

Also, there is a rather practical way to get reasonably good gains quickly. It’s called the Ziegler-Nichols method:

https://en.wikipedia.org/wiki/Ziegler%E2%80%93Nichols_method

Other than that, the common way is to increase the proportional and integral gains until oscillation starts, then reduce the gain by approximately one third, add a little derivative gain, and try to increase the proportional and integral gains a little more. It does require a process that does not spectacularly blow up when it overshoots or starts to oscillate.

Thanks, that cleared things up for me immensely, as the derivative going to zero when the error isn’t changing made no sense as a correction. Using it as damping on another correction makes sense now. I still remain baffled on the integral. It seems like if I have something off target and it returns to the target, the integral is still positive and it should thus overshoot. Indeed it should stay overshot until the area on either side of the target point is equal so the integral goes to zero. Can you explain why the graph shown doesn’t depict that?

All this time I thought IMU was Inertial Measurement Unit. Go figure. Everything is getting smarter.

Good catch it is an Inertial Measurement Unit. Guess I lost my bearings for a moment.

The only thing missing is the time variable. Change in x with respect to time, for example. It isn’t with respect to hair color or with respect to y. That, and they are not derivatives, they are differences. And not integrals, they are sums. They are only integrals and derivatives in the continuous math used to derive the PID and analyze its nature and prove it works – these were analog systems in the beginning with op-amps doing the math and pots setting the gains. This means all the tricks and pitfalls of discrete mathematics get involved in a digital PID. As for tuning, there are iterative tuning methods out there, but if anyone wants to have some fun, I think an auto-tuner based on a Simplex would be pretty cool in the DIY space (it apparently has been done http://electronics.stackexchange.com/questions/50049/how-to-implement-a-self-tuning-pid-like-controller but doing a simplex yourself is a great experience). Wish I had the time. Today I would guess auto-tuning is done by Kalman filter, which should be darn good but is definitely beyond the scope here or the ken of most of us.

Nice overview of PID. I was happy to see integrator windup discussed. I might suggest a slightly more sophisticated approach, which is to apply a variable gain <= 1 to the error measurement before it is added to the running total. This gain is decreased smoothly as the value of the integral approaches its maximum/minimum value. Simply setting a max/min integral (which leaves this gain as 1 all the time, but then abruptly drops it to 0 when the max/min sum is reached) introduces a discontinuity into the controller, which can affect stability.

The anti-windup trigger doesn’t reset the summation to zero, it limits the summation to a maximum value. You might implement it with:

if (abs(sum + (error * Ki)) < limit) sum += error * Ki;

That may look inefficient but the compiler should optimize it to be efficient.

I never once suggested that the anti-windup resets the summation to zero. What I said was, the amount that is added to the summation should have a gain applied to it that decreases smoothly as the summation limit is approached. It’s called integrator saturation. If you simply apply a hard limit to the summation once the max value is reached, you make the integral term of the controller C1 discontinuous (that is, the first derivative of the integral will have discontinuity). Because the integral is included in the output control signal arithmetically, that in turn makes the control signal C1 discontinuous, which will be a source of instability. The best way (from a smoothness standpoint) is to use an exponential gain on the instantaneous error before it is added to the running summation. When the absolute value of the summation is far less than the limit, this gain should be close to unity. However, as the summation nears its limit, the gain decreases exponentially to zero. The exponential function is C-infinite continuous, so all of its derivatives are smooth, and then the smoothness of the output control signal will be limited by your sampling rate and the filtering applied to the derivative term. It may seem like a subtle point, but the smoothness of control signals can be very important.

“Setting up a PID for optimal control of a process is a black art.”

It’s not. It’s just that PID is not an optimal solution – it’s just a very good solution, which means you can spend hours and hours tuning it for one thing just to have it fail when the conditions change.

^^^ This. PID is used all over the place because it works “well enough” in so many situations and because it is so simple to understand – not because it provides the “best” control.

I disagree. I feel that if your PID is failing either you’re using it incorrectly or applying it somewhere it really shouldn’t be. I feel a PID is pretty straight forward as to what it can and can’t do. What would you suggest to replace the PID?

I’ve tried to understand PID controllers before, and nothing has explained it as clearly as this. Raul’s simulator didn’t work for me, maybe because I tried using Safari. Is there any way you would be willing to share the Excel file? I thought about trying to just recreate it, but I’m a little confused about how the wind speed affected the parameters (among other things).

PID for getting rid of PIO. Pilot Induced Oscillation. But when a poorly tuned PID is your pilot, it can PIO your vehicle into the ground real quick.

Interesting stuff; I’ve been looking at acrobatic FPV quads, and those guys spend a lot of time discussing PID gains. Interestingly, most of them disable or just don’t include stuff like GPS, barometers, compass and accelerometer; they just want stable and fast angular rate changes and thrust. You can see the quads hunting for the commanded rate of change in some videos where the PID’s are tuned well. Lots of entertaining crashes too. :)

“const double derivative = mDerivativeGain * (mPreviousError – error) / time_interval;”

shouldn’t it be like : error – mPreviousError ???

I just took a masters controls course mainly focused around PID. I have a suggestion, something to stress for the readers, and a comment.

The suggestion would be to include a graph of a PID functioning (with many points). Readers who don’t know how a PID system works won’t realize it can look like any transfer function or that it can be tuned so finely. Wiki PID controller if you’d like to see a basic graph (it’s still a little rough but it gets the point across).

The stress for the reader is that PID controllers are entirely up to you. You can change settling time, set points, and other function parameters to respond to a certain overshoot percentage, rise time, etc. If your correction is slow, you change that, if your correction undercorrects, you can fix that!

My comment is how transfer functions play in with PID controllers. For those of you adventurous ones, you can easily create a PI or PID controller (normally you only need a PI controller tbh) using a transfer function. When you make your own PID controller you can use the settling time, overshoot, and other variables as direct equation inputs to determine the proper PID input to get YOUR PERFECT CURVE.

Recommended reading: Nise, Control Systems Engineering. There is a 7th edition free as a download, 6th edition free as well.