For the last three and a half billion years, evolution has built sensors. The nerves on your fingertips are just as good as any electronic touch sensor, a retina is able to detect a single photon, and the human ear is more finely tuned than the best microphones.

At the 2016 Hackaday SuperConference, Dr. Christal Gordon, educator and engineer, talked about the hardware behind our wetware. While AI researchers are still wondering if they have to define consciousness, there’s still a lot that medicine, psychology, and neuroscience can teach us about building better hardware with simple tools, just like nature has been doing for billions of years.

Processing Data In Hardware

Christal’s talk focused on two senses, vision and hearing. The physical hardware you have for these senses, your eyes and ears, has unique features that allow for very advanced processing right at the first layer of hardware.

Anyone with a basic education has a pretty good idea of how the human eye works. Light enters the pupil, goes through a lens, shines on the retina ‘sensor’, and information travels up the optic nerve to the brain. There’s nothing wrong with this explanation of how the eye works, but like everything you learn in school, it doesn’t go into the details that make the human vision system so amazing.

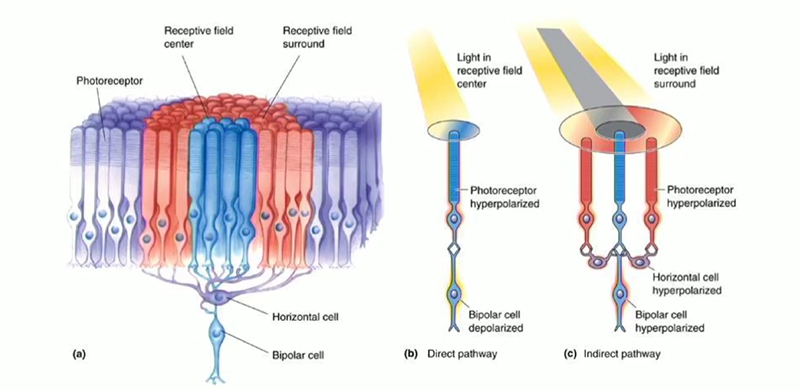

Contrary to that simplified explanation, not all image processing happens in the brain; some of it happens in the retina itself. On the retina, groups of rods and cones are wired together in circular patterns: the center of each pattern sends a very strong signal to the brain, and the rods and cones surrounding the center send the opposite signal.

What does this weird wiring setup get you? In the language of image processing, you get a Mexican Hat function. Practically, you get edge detection and noise rejection, built directly into the hardware.
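To make the edge-detection claim concrete, here is a minimal sketch in Python. It is not from the talk: it approximates the Mexican Hat with a difference of two Gaussians (a narrow excitatory center minus a wide inhibitory surround), and the kernel radius, the two sigma values, and the test signal are all assumptions picked for illustration.

```python
import math

def mexican_hat_kernel(radius=6, sigma_center=1.0, sigma_surround=2.0):
    """Difference-of-Gaussians stand-in for a Mexican Hat (assumed parameters)."""
    def gaussian(x, sigma):
        return math.exp(-x * x / (2 * sigma * sigma))

    xs = range(-radius, radius + 1)
    center = [gaussian(x, sigma_center) for x in xs]
    surround = [gaussian(x, sigma_surround) for x in xs]
    c_sum, s_sum = sum(center), sum(surround)
    # Normalize each lobe to unit area so the whole kernel sums to zero:
    # uniform illumination then produces no output at all.
    return [c / c_sum - s / s_sum for c, s in zip(center, surround)]

def slide(signal, kernel):
    """Slide the kernel over the signal, keeping only fully overlapping positions.
    The kernel is symmetric, so this equals a convolution."""
    n = len(kernel)
    return [sum(signal[i + j] * kernel[j] for j in range(n))
            for i in range(len(signal) - n + 1)]

# A made-up 1-D "image": a dark region followed by a bright region.
signal = [1.0] * 20 + [5.0] * 20
kernel = mexican_hat_kernel()
response = slide(signal, kernel)

for i, r in enumerate(response):
    print(f"{i:2d} {r:+.3f}")
```

In the flat regions the center and surround cancel and the response sits at zero. Only the positions where the window straddles the step produce a signal, which is the edge detection described above.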

Eyes and vision processing are one thing, but what about audio? The 8th-grade biology class explanation of the ear tells us the eardrum vibrates, making very small bones in our ear vibrate, which in turn makes tiny hairs in the snail-like cochlea vibrate. These vibrations are sent to the brain. Simple enough.

Following this explanation, all the audio processing then happens in the brain. This isn’t the case, though; the cochlea is finely tuned to different frequencies, and these frequencies translate into phonemes of speech. Through its own physical arrangement, the cochlea automatically separates a sound into bins of distinct frequencies. Your ear effectively has an FFT in hardware, and a few researchers have already taken this idea of discrete filters and put it into ASICs.
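As a rough software analogy for those frequency bins, the sketch below measures how much of a sound lands in each of a handful of fixed frequencies. It is not a model of the cochlea and not taken from the talk: it is a naive single-frequency DFT evaluated once per bin, and the sample rate, the tone frequencies, and the list of bins are all assumptions chosen so the numbers come out cleanly.

```python
import cmath
import math

SAMPLE_RATE = 8000  # Hz, assumed
NUM_SAMPLES = 800   # 0.1 s of audio, so bins on multiples of 10 Hz are exact

def tone(freq, amplitude=1.0):
    """Generate a sine tone at freq Hz."""
    return [amplitude * math.sin(2 * math.pi * freq * n / SAMPLE_RATE)
            for n in range(NUM_SAMPLES)]

def bin_amplitude(samples, freq):
    """Amplitude of the component at freq, via a single-frequency DFT."""
    acc = sum(s * cmath.exp(-2j * math.pi * freq * n / SAMPLE_RATE)
              for n, s in enumerate(samples))
    return 2 * abs(acc) / len(samples)

# A made-up sound: a 440 Hz tone plus a quieter 1000 Hz tone.
sound = [a + b for a, b in zip(tone(440), tone(1000, 0.5))]

# The "filter bank": each entry plays the role of one tuned spot on the cochlea.
bank = [200, 440, 1000, 2000]
spectrum = {freq: bin_amplitude(sound, freq) for freq in bank}

for freq, amp in spectrum.items():
    print(f"{freq:5d} Hz: {amp:.3f}")
```

The 440 Hz and 1000 Hz bins light up with the amplitudes that went in, while the 200 Hz and 2000 Hz bins stay essentially silent. The ear does this with mechanics rather than arithmetic, and all of its bins respond at once.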

You can do a lot with silicon and sensors, but for most applications evolution already has a solution. In most cases, evolution has come up with a better solution, and we’re happy Christal could speak at the 2016 Hackaday SuperConference on making hardware smarter with this biologically inspired approach to sensing.

Adaptive too.

There’s more going on with the ear than meets the eye, or the high school textbook description.

https://en.wikipedia.org/wiki/Otoacoustic_emission

The hairs in the cochlea are actually mechanical amplifiers that generate sound in response to sound. The effect is loud enough to be recorded from the outside of the ear.

Otacon confirmed

So they’re actually regenerative receivers?

So eyes and ears have already built in passive filters!

Please change your pictures. The light comes from the opposite side; it does not shine directly on the sensors. Yes, there are species with direct “lighting” like in the pictures (octopus), but the “standard” path of light is the opposite, from bottom to top. Yes, from an engineering point of view this does not make sense, but this is nature (and reality).

“The nerves on your fingertips are just as good as any electronic touch sensor, a retina is able to detect a single photon, and the human ear is more finely tuned than the best microphones.” all of which are in the same body. And yet he says they evolved.

Well, it would certainly appear that way.

The world is like, a bazillion years old, man.

An over-exaggerated statement about the age of the world does not even imply evolution.

I dispute the claim that ears are more finely tuned than microphones…

Same goes for the single photon detected by human retina… only PMTs are capable of that kind of sensitivity…

There’d be no need for NVG equipment if our eyes were that sensitive :P

Yes, it can detect a single photon:

http://math.ucr.edu/home/baez/physics/Quantum/see_a_photon.html