What are the evocative sounds and smells of your childhood? The sensations that you didn’t notice at the time but which take you back immediately? For me one of them is the slight smell of phenolic resin from an older piece of consumer electronics that has warmed up; it immediately has me sitting cross-legged on our living room carpet, circa 1975.

!["Get ready for a life that smells of hot plastic, son!" John Atherton [CC BY-SA 2.0], via Wikimedia Commons.](https://hackaday.com/wp-content/uploads/2016/12/656px-early_1950s_television_set_eugene_oregon.jpg?w=342)

There are many small technological advancements that have contributed to this change over the decades. Switch-mode power supplies, LCD displays, large-scale integration, class D audio and of course the demise of the thermionic tube, to name but a few. The result is often that the appliance itself runs from a low voltage. Where once you would have had a pile of mains plugs competing for your sockets, now you will have an equivalent pile of wall-wart power supplies. Even those appliances with a mains cord will probably still contain a switch-mode power supply inside.

History Lesson

Mains electricity first appeared in the homes of the very rich at some point near the end of the nineteenth century. Over time it evolved from a multitude of different voltages supplied as AC or DC, to the AC standards we know today. Broadly, near to 120 V at 60 Hz AC in the Americas, near to 230 V at 50 Hz AC in most other places. There are several reasons why high-voltage AC has become the electrical distribution medium of choice, but chief among them are ease of generation and resistance to losses in transmission.

The original use for this high-voltage mains power was in providing bright electric lighting, which must have seemed magical to Victorians accustomed to oil lamps and gas light. Over the years as electrical appliances were invented there evolved the mains wiring and domestic connectors we’re all used to, and thereafter all mains-powered appliances followed those standards.

So here we are, over a hundred years later, with both 21st century power conversion technology, and low power, low voltage appliances, yet we’re still using what are essentially 19th-century power outlets. Excuse me for a minute, I need to hire a man to walk in front of my horseless carriage with a red flag — I’m off to secure some banging tunes for my phonograph.

Given that so many of the devices we use these days have a low power consumption and accept a low voltage, surely it’s time to evolve a standard for low voltage power distribution in the home? Not to supplant the need for mains sockets entirely, after all there are times when 3 kW on tap is quite handy, but to do away with the need for all those power cubes and maybe allow the use of other low voltage sources if you have them. Does it still make sense to send the power to our houses the 19th century way?

The Old Plugs

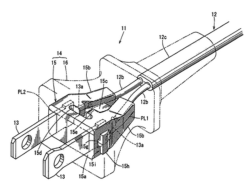

There will be some among you who will rush to point out that the last thing we need is Yet Another Connector System. And you’d be right, with decades of development time behind them you’d think that the connector industry would already have something for low voltage and medium power in their catalogue. The IEC60309 standard, so-called CEE-form connectors, for example has a variant for 24 V or below. Or there are connectors based on the familiar XLR series that could be pressed into service in this application. You might even be positioning USB C for the role.

Sadly there are no candidates that fit the bill perfectly. Those low-voltage CEE-forms for example are bulky, expensive, and difficult to source. And the many variants of XLR already have plenty of uses which shouldn’t be confused with one delivering power, so that’s a non-starter. USB C meanwhile requires active cables, sockets, and devices, sacrificing any pretence of simplicity. Clearly something else is required.

Oddly enough, we do have a couple of established standards for low voltage power sockets. Or maybe I should say de facto standards, because neither of them is laid down for the purpose or indeed is even a good fit for it. They have just evolved through need and availability of outlets, rather than necessarily being a good solution.

The USB A socket is our first existing standard. It’s a data port rather than a power supply, but it can supply 10 W of power as 5 V at 2 A, so it has evolved a separate existence as a power connector. You can find it on wall warts, in wall sockets, on aeroplane seats, trains, cars, and a whole host of other places. And in turn there are a ton of USB-powered accessories for when you aren’t just using it to charge your phone, some of which aren’t useless novelties. It’s even spawned a portable power revolution, with lithium-ion battery packs sporting USB connectors as power distribution points. But 10 W is a paltry amount for more than the kind of portable devices you are used to using it with, and though the connector is fairly reliable, it’s hardly the most convenient.

Our other existing standard is the car accessory socket. 120 W of power from 12 V at 10 A is a more useful prospect, but the connector itself is something of a disaster. It evolved from the cigarette lighter that used to be standard equipment in cars, so it was never designed as a general purpose connector. The popularity of this connector only stems from there being no alternative way to access in-car power, and with its huge barrel it is hardly convenient. About all that can be said for it is that a car accessory plug has enough space inside it to house some electronics, making some of its appliances almost self-contained.

The New Plug?

So having torn to pieces the inadequate state of existing low voltage connectors, what would I suggest as my solution? Given a blank sheet, how would I distribute low voltage?

First, it’s important to specify voltages and currents, and thus look at the power level. Where this is being written the wiring regulations do not apply to voltages under 50 V, so that sets our upper voltage limit. And while it’s tempting to pick a voltage near the top of that limit it also makes sense to stay with one more likely to be useful without further conversion. So while part of me would go straight for 48 V I’d instead remain with the familiar 12 V.

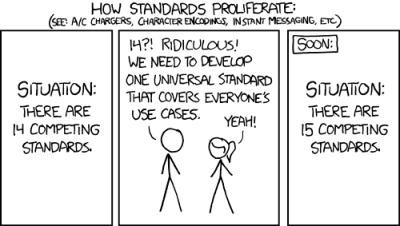

Looking at our existing 12 V standard, the car accessory socket delivers 120 W, that should be enough for the majority of low voltage lighting and appliances. So 12 V at 10 A per socket is a reasonable compromise even if the car socket isn’t. We thus need to find a more acceptable socket, and given that a maximum of 10 A isn’t a huge current, it shouldn’t be too difficult to imagine one. If I had a plug and socket manufacturer at my disposal and I was prepared to disregard the XKCD cartoon above I’d start with a fairly conventional set of pins not unlike the CEE-form connector above but without the industrial ruggedisation, and incorporate a fuse to protect the appliance cable from fire. Those British BS1363, fused-mains-plug habits die hard. In the socket surround I’d probably also incorporate a 5 V regulator for a USB outlet.

![These are best kept out of sight. Smial (Own work) [FAL], via Wikimedia Commons.](https://hackaday.com/wp-content/uploads/2016/12/atx-netzteil.jpg?w=400)

We do not however want to replace a load of wall warts with a load of switch mode PSU boxes. The point of the sockets described here is to take away other paraphernalia, not to just deliver power. Clearly some form of cabling is required. At this point there has to be a consideration of topology, will there be one cable per socket, and if not how many sockets will go on one cable? If the former then 16 A insulated flex from our distribution point with a single 10 A fuse per cable would suffice with enough margin to allow for sensible cable runs, while if the latter then something more substantial would be required. There are flexible insulated copper busbar products from the domestic low voltage lighting industry that would suffice, for example.
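To get a feel for what "sensible cable runs" means at 12 V, here is a minimal Python sketch of the voltage dropped along a one-socket cable carrying the full 10 A. The 1.5 mm² conductor size is an assumption standing in for the 16 A flex, and the run lengths are purely illustrative; neither comes from any wiring standard.

```python
# Resistivity of copper at roughly 20 degrees C, in ohm-metres.
COPPER_RESISTIVITY = 1.68e-8


def cable_drop(current_a, run_length_m, area_mm2):
    """Voltage dropped along a two-conductor copper cable.

    Current flows out and back, so the conductor length is twice the run.
    """
    area_m2 = area_mm2 * 1e-6
    resistance = COPPER_RESISTIVITY * (2 * run_length_m) / area_m2
    return current_a * resistance


def drop_report(supply_v=12.0, current_a=10.0, area_mm2=1.5,
                runs_m=(2, 5, 10, 20)):
    """Return (run length, volts dropped, percent of supply) per run.

    area_mm2=1.5 is an assumed size for the 16 A flex, not a quoted figure.
    """
    rows = []
    for run in runs_m:
        drop = cable_drop(current_a, run, area_mm2)
        rows.append((run, drop, 100 * drop / supply_v))
    return rows


if __name__ == "__main__":
    for run, drop, percent in drop_report():
        print(f"{run:>3} m run: {drop:.2f} V dropped ({percent:.1f}% of 12 V)")
```

Under these assumptions a 10 m run loses a little over 2 V at full load, which suggests that the one-cable-per-socket topology only stays comfortable if the distribution point is close to the sockets it feeds.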

</rant>

This has been an epic rant, a personal manifesto if you will, for a future with slightly safer and more convenient power outlets. Somehow I don’t expect to see a low-voltage home distribution system like this emerge any time soon, though I can keep hoping.

Your vision for low-voltage power distribution may differ from mine, for example it may include some smart element which I have eschewed. You might even be a mains-voltage enthusiast. Please let us know in the comments.

I’ll just leave this here:

http://s3files.core77.com/blog/images/0truepowerusocket.jpg

I have a few of those. But I assume they contain always-on switching power supplies that constantly consume power. That’s wasteful.

And often very noisy, RFI wise, especially when nothing is connected.

Make them manually switchable.

It would be pretty straightforward to design a USB port with a plastic lever inside that operates a microswitch to cut the mains power to the transformer automatically when nothing is inserted.

You do not have to. USB can be used to detect whether something is plugged in. The shield (metal case) of the USB socket is isolated: pull it up to 5 V on the board (100k resistor) and tie it to GND in the cable connector. If nothing is plugged in, the pin will stay at 5 V. When you plug something in, the voltage drops to GND. Done properly, you can feed this straight into the enable pin of the regulator. No control logic required.
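The pull-up scheme in that comment can be modelled in a few lines of Python. This is only a logic-level sketch: the active-low enable input and the half-rail threshold are assumptions, since the comment does not say which polarity the regulator expects.

```python
PULL_UP_OHMS = 100_000   # the 100k resistor from the comment
RAIL_V = 5.0             # pull-up rail


def shield_voltage(plug_inserted):
    """Voltage on the socket shield: grounded by the plug, else pulled high."""
    return 0.0 if plug_inserted else RAIL_V


def regulator_enabled(plug_inserted, threshold_v=RAIL_V / 2):
    """Assumes an active-low enable pin: a low shield turns the regulator on."""
    return shield_voltage(plug_inserted) < threshold_v


def pull_up_current_a(plug_inserted):
    """Current through the pull-up resistor, by Ohm's law."""
    return (RAIL_V - shield_voltage(plug_inserted)) / PULL_UP_OHMS


if __name__ == "__main__":
    for inserted in (False, True):
        print(inserted, regulator_enabled(inserted), pull_up_current_a(inserted))
```

With a plug inserted the resistor passes 50 µA, and with nothing inserted it passes none, so the detection itself costs next to nothing. What still draws power is whatever keeps the 5 V pull-up rail alive.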

And they are probably a fire hazard too. And they probably break down within a year or two. Normal outlets can last for decades…

The two sockets equipped with these in my house have been in operation for a bit over two years so far.

This isn’t to say that they aren’t wasteful, but they are still operating.

YMMV, as brand quality does as well.

We have these in our house as well. Manufactured by the same company as all standard outlets in the country. Potted, and wiring double insulated per standard. If you have a fire hazard then stop buying stuff from China.

According to this: http://the-gadgeteer.com/2010/05/08/truepower-u-socket-wall-outlet-with-two-usb-charge-ports/

“The USB ports aren’t vampires – they don’t pull any power unless something is plugged in for charging.”

I would want to test that claim before taking it at face value. Unless there’s some sort of mechanical or optical switch in the panel that connects the supply to a regulator only when the USB plug is inserted, then by definition, there is always something connected to the mains source to convert the supply to USB voltages, which does pull power.

Agreed, especially based on how that is worded.

“They don’t pull any power unless something is plugged in” –> sounds like they don’t know what they’re talking about.

“They only use 3 nanoamps when no device is connected, so phantom power usage is negligible” –> fact! Sounds like they know what they’re talking about (if of course this is correct)

or

“They use a physical switch to detach the usb ports when nothing is plugged in to conserve energy”

I’ll believe them because they made up a figure, rather than just saying “negligible” :-D

It’s 3.00000 exactly right? :-D

Wow. Until coming to this page, I didn’t think that there were people that existed who worried about their electrical outlets costing a few dollars extra per month on their electric bill because the USB plugs were consuming electricity when not being used. If that is a big deal to you, I would take a step back and look at your life as a whole.

As one person said, “not enough to charge an iPad”. I would add: and not enough to power any laptop or maybe an L.E.D. light fixture. As the author of this rant said, we have a 19th century AC system powering a house full of 21st century devices. He also mentioned why we have this system…AC has lower IR losses when you are distributing across the grid. Tesla was right, Edison was wrong…in 1900. But maybe now we need both. I bet the average home has dozens of those plugs converting 115 vac to 12 or 5 vdc for damn near everything in your house….TV, radio, computers, chargers for phone, camera, dildo (hahaha).

You name it. I envision you plugging in 70 to 80% of all your appliances into a DC socket in 15 to 20 years. And a DC system built into your home for ALL the lights, L.E.D.s maybe built into the baseboards or crown molding…any color any brightness you want coming on automatically when you enter the room, “Alexa lighting to level 8, color warm blue.”

If only there was a standard that set limits on that

It’s only wasteful if you ignore the fact that the standby current of these is so incredibly tiny that putting one in every outlet of the house will, over a whole year, cost less than that half eaten banana you threw away because it felt a bit squashy.
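The argument over standby waste is easy to put numbers on. The Python sketch below converts a standby draw into yearly energy and cost; the 0.1 W draw, the 20 outlets, and the 0.15 per kWh tariff are all hypothetical placeholders, not measurements of any real socket.

```python
HOURS_PER_YEAR = 24 * 365  # 8760, ignoring leap years


def annual_kwh(standby_w):
    """Energy used in a year by a device drawing standby_w continuously."""
    return standby_w * HOURS_PER_YEAR / 1000


def annual_cost(standby_w, price_per_kwh, outlets=1):
    """Yearly cost of that standby draw across a number of outlets."""
    return annual_kwh(standby_w) * price_per_kwh * outlets


if __name__ == "__main__":
    # Hypothetical figures: 0.1 W per socket, 20 sockets, 0.15 per kWh.
    print(f"{annual_kwh(0.1):.3f} kWh per socket per year")
    print(f"{annual_cost(0.1, 0.15, outlets=20):.2f} per year for 20 sockets")
```

Swap in a measured standby figure and your own tariff to see which side of the argument your sockets land on.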

Mmm, maybe not the goal of this post. Here you will still need the 220/110 V cabling behind your wall. We want to avoid that here, I believe.

Did you read anything other than the headline?

Well, this article is what I had in mind when I said I didn’t know of any houses having DC rails. Someone mentioned PoE, but I don’t see that fitting the article’s requirement either, although the negotiation aspect helps a lot in making it safer.

I brought it up in another thread.

This article lacks an explanation of low voltage, power limited regulations that already exist.

No, you won’t be seeing 10Amp circuits. That exceeds Class 2, whose NEC limit is 8 Amps, within 5 seconds.

Power handling systems already exist in USB and PoE and USB 3.1-C. The devices power on and handshake at a low, power limited level. The device identifies its power requirements and the supplying device supplies more voltage or current as necessary.

PoE can already go to 100 Watts if you use versions supplied by specific vendors.

Is RJ45 too uncommon a connector?

What about USB3 ?

Anderson powerpole connectors for the win.

Exactly what I was going to recommend. Small, genderless, available in a variety of colors, easy to install, handle upwards of 45 amps in the most common size shell, and already used in commercial DC applications. The only real problem is a lack of a slick wall plate arrangement to mount them. There are solutions out there, but they’re not the greatest. If there was a market, it would be relatively easy to design a better solution.

The real issue with the whole idea is quality DC power conversion and efficient distribution, even within a house’s footprint. If you’re powering a house by solar, less of a problem. Converting AC mains to DC cleanly and distributing it throughout a building is difficult. Sensitive DC devices are probably still going to need filtering to remove any non-DC junk introduced in the line due to RF, AC interference, and the like.

I think this wall plate arrangement is actually pretty slick: https://powerwerx.com/powerpole-connector-outlet-box-coverplate

DIN Hella connectors like trucks and motorcycles use – rated for 20A/24V – http://www.maplin.co.uk/p/20a-standard-hella-plug-a94gw

that name makes me angry, i hate it when manufacturers use their name to name a plug.

the “Nava Plug”, the “Hella Plug”: it’s the same as calling a car the “bmw car”, no model or series, just the “bmw car”

ISO 4165 is the connector standard and its non-branded name is the “Merit” connector.

/Rant

ps: not having a dig at you dapol2

It’s ok, but I don’t think it would pass the wife test for a lot of people. It’s not exactly a Decora wall outlet. Powerpole inserts for standard wall plates would be far preferable, but there’s no market for them.

Replace one connector block with four to six USB ports with a 60 watts of power.

Agreed. They’re also available in panel mount and outlet plate form already. I just installed some in a utility trailer.

No thanks, I’ll take XT60 over powerpole any day.

XT60 is relatively new and not necessarily high cycle count. The gold plating will wear off.

Anderson powerpole are far more common, spring loaded and make use of the wiping action to keep themselves clean. They are great modular connector and can easily satisfy multiple circuit configurations.

No thanks. I’ll take my hugely customisable click together connector over a single purpose single size connector any day. Want to run 12VDC at 10A? Yay XT60. Want to run it at 100A, can’t do that with XT60. Want to run +/-12V with ground? Can’t do that with XT60. Want to create a variable supply which can handle anything from small 5A to 200A using only the small powerpoles? Can’t do that with an XT60.

there are a lot of sizes for these connectors, and no standard for determining how much power you can pull from them (and you can’t assume that you can pull the full capacity of the connectors, almost nobody has them hooked up to power supplies that can provide that much power)

Once you go to Anderson PowerPoles you never go back!

Yes, I believe Anderson Powerpoles are the best existing connector. Capable of high current (45A in the smallest size), self-wiping contacts, and genderless. They’re widely used by amateur radio operators, among others, for 12V power distribution. I’ve outfitted my vehicles with them, including my camp trailer, and have several 120VAC to 12VDC power supplies outfitted with them, so that I can plug any device needing 12V into any 12V supply.

But even with a good solution to the connector problem, I don’t see too much of a future in widespread 12V household power distribution, because of the issues already mentioned around voltage drop. For a given power level and wire gauge, losses are inversely proportional to the square of the voltage, and directly proportional to the length of the wiring run. 12V is practical for distributing power around something the size of a school bus, but not too much bigger than that. 120V is good for something the size of a large house. We go to kilovolts for something the size of a neighborhood.
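The scaling rule in that paragraph follows from P_loss = I²R with I = P/V. A minimal Python sketch, using a hypothetical 0.2 Ω round-trip wire resistance and a 120 W load purely for illustration:

```python
def line_loss_w(load_w, supply_v, wire_ohms):
    """Power dissipated in the wiring for a given load and supply voltage.

    Current is load_w / supply_v, and the wiring loss is I squared times R.
    """
    current_a = load_w / supply_v
    return current_a ** 2 * wire_ohms


if __name__ == "__main__":
    # 0.2 ohms is an assumed round-trip resistance, not a measured one.
    for volts in (12, 48, 120):
        loss = line_loss_w(120, volts, 0.2)
        print(f"{volts:>3} V supply: {loss:.2f} W lost in the wiring")
```

For the same load and the same wire, moving from 12 V to 120 V cuts the loss by a factor of 100, which is the inverse-square relationship the comment describes. Doubling the run length doubles `wire_ohms` and therefore doubles the loss.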

But just because it’s not ideal to distribute 12V around the entire house doesn’t mean we can’t improve on the status quo. There are a couple of places in my house where I have one beefy 12V supply feeding multiple 12V devices. My main purpose is to cut down on the number of RFI-generating cheap switch-mode wall warts, since I like radios and prefer not to destroy their reception by generating local RFI.

I can also imagine an appeal to having a 12V system that is independent of the grid, backed up by batteries, which could be charged directly by solar. The goal here would be to have some minor creature comforts during utility company power outages. If the length of the runs is kept short, and the power demands kept low (basic LED lighting and a few low-power appliances), the losses might be tolerable. I don’t have such a system in my house, but I do have something like this in my camping trailer.

You missed one of their greatest strengths. They click together like LEGO blocks. You want a 100A circuit but you only have the 30A powerpole connectors? Use 6 of them in a 3×2 formation to create your plug. Best thing is if someone then comes along with a device that only needs 10A they can still plug it in.

110+ kV for transmission, 33-66 kV for subtransmission, kilovolts for local distribution, 240/120 V within the home, and 5-20 V at endpoints for low-power electronics. It’s a pretty good tradeoff between conductor sizing, power losses, and insulation requirements.

Those last few feet have been dominated by a pile of competing standards and solutions, but we’ve been slowly moving towards switch-mode supplies and USB. With the new USB power spec, the final “wall socket” is going to end up being a USB C multi-port charger brick, taking the place of the power strip we all have under our desks. Consumer electronics is already migrating to this at the cellphone & laptop level, with displays next in line. Anything with power requirements over 100W is better off pulling power through the AC wall socket anyway.

The appeal of a 12V battery-backed solar system is lost on me. When I lose power, I want to run my fridge, my heating system, and some lighting. All of these are distributed throughout the house and run off 120VAC. Why in the world would I want to spend money on a 12V backup system that can’t run these? Between distribution losses and power requirements, I think this is why you’ll have such a system in a camping trailer, but never in a house.

lighting works very well at 12V, the fridge can get by for a day or two without power (if you keep it closed)

heating can usually be handled by adding layers (in most areas, in others, you need a non-electrical heating capability, but even a wood-fired house heater needs power for its controls/fans)

having the ability to charge a cell phone is a rather important factor under such conditions.

And with people using computers/tablets/phones for entertainment, it’s really useful to be able to power those.

The other thing is that these items take little enough power that it’s practical to power them from an affordable battery, while powering a fridge/heater/AC from stored power requires a much larger battery system

> the fridge can get by for a day or two without power (if you keep it closed)

> heating can usually be handled by adding layers

>And with people using computers/tablets/phones for entertainment, it’s really useful to be able to power those.

Ah, priorities.

@beaker

> Ah, priorities.

actually, in a disaster situation, entertainment of some kind is rather important. There’s a reason why it’s highly recommended to have a deck of cards in a survival kit. People who have nothing to do go stir crazy and tend to do things that make their situation worse.

never mind the stress on everyone if young kids have nothing to do other than sit quietly all the time.

Distributing DC around the house sounds a good idea, especially if you have many appliances or like making up electronic circuits. 12V is not really practical; 24V could work but there are few devices that work from this voltage. 36V would not be of use. 48V would be good, but appliances are still not widely available. So I have 110/120V around the house, garden workshop, this is far the best. Edison was right after all! Appliances are plenty, just got to make sure that they are not an induction device and do not have a flimsy slow-break switch. Also possible to use 240V tools (at some reduced power), all fed from 9 x 12V lead acid units, with a capacitive charger from 240V AC, or fed from solar panels. Proved a great success.

Power Pole is what I use for all my in-home ham station needs (one large 32 amp low-RFI supply for the whole thing) and it does a great job, as most other radio types are finding out. But there is one glaring fact of physics not being addressed by any low voltage system, namely that if you’ve got something bigger than a large car or truck, any high current loads are going to start heating even 8 or 10 gauge wires, and the losses will climb as the distance between the source and load grows. So really, in-outlet low voltage supplies may be the intermediate solution, but at the end of the day why not just plug the dang thing into the HV outlet, as in a few years (or decades) the whole concept of “mains power” will probably get revised anyway.

North america has USB built in wall sockets and power bars available…

But still, even on a household scale, DC distribution falls prey to the enemy, resistance, and ends up needing more weight of copper deployed per room than even a dozen old school transformer based wall warts. AC also seeeeems easier to keep safe than DC; in environments where DC is the norm, my personal experience is: automotive electrical fires 5, household electrical fires 0.

What is the goal? Is it to have a single high efficiency inverter and reduce losses? Because arguably that’s going to have to be a hell of an inverter to power everything in my house during the day, and be able to throttle down (without significant loss of efficiency) to only charge my phone at night.

Now if you are looking for ~100W the USB power delivery spec can provide that.

Of course the simplest reason not to have an outlet hard wired that only provides 100W is because we have things like hair driers and vacuum cleaners and microwaves that we might want to plug in anywhere in the house, so if we decided we needed a DC line wired to every room in the house we still need to wire an AC line to every room in the house.

Meanwhile small inverters are cheap and easy to build…

While there might be an argument for off grid solar needing to skip the losses of converting to a sine wave and then back, for most people a DC outlet standard isn’t really a practical solution to a problem that doesn’t really exist.

Exactly. as well as long as resistance heating is economic (and it is where hydro is cheap) high voltage AC is the best choice for domestic service.

Yah, most “high efficiency” switch mode PSUs are only that at about 60-80% load, down below 30% and above 80% load they are terrible…

Presuming switch mode wall warts are getting smarter and more efficient, going into milliwatt sleep modes when no power drawn and well matched to device when in use, then central PSU makes no sense.

In the US and EU there were new regulations passed this year that force efficiency standards higher. Over 1W the supply has to be > ~65% efficient at any load. Not great but not that bad either.

You’re forgetting a few important bits –

1. Appliances still need lots of power. Delivering this power at such a low voltage is impractical.

2. AC motors are simply simpler. Lower initial and long term costs. They’re also better for simple applications where all you need is a quick, torque-filled start.

3. Delivering 120W to one fixture is fine, but you don’t want to run a circuit to each fixture.

4. Delivering power at 12V means you need a #14 or #12 circuit wire for every 120W. That is going to get expensive.

5. Those long runs are also more susceptible to voltage drop. Voltage drop is a raw number largely unaffected by the DC voltage applied (only current matters), meaning that if 10A causes a 2V drop, you’re losing a larger percentage of your power to voltage drop at a lower supply voltage (which is lost as heat, in your walls…). There’s a reason why transmission lines are at such high voltages.

6. Current is what will overheat your wiring and start a fire. Lower current is better in general.

Isn’t this one of the many, many, reasons why N. Tesla decided on AC and higher voltages?

DC had problems being transmitted as easily as AC (probably improved these days) and the high voltage gave loads more distance without such losses.

AC had the advantage that if your power source works by spinning a generator (which is nearly everything, even today, except for photovoltaics), then you don’t need to do anything else. It also steps up and steps down very easily and efficiently with transformers, which are mostly just bundles of copper wire. Edison’s DC approach would have required inverters at the power station before sending power out. (Guess who wanted to sell those inverters?)

You can actually transmit high voltage DC just fine–better, in fact, because you don’t need 3 wires for 3 phases, and there’s no skin effect. These days, with copper getting more expensive, it’s starting to make sense.

Indeed.

There are a number of examples of HV DC service connections, generally ship-to-shore or submarine cables to island communities, e.g. the Cook Strait cable.

I believe transmission lines are typically aluminum to save on weight and cost. They have to be slightly larger to account for resistivity but I expect they are significantly cheaper than copper.

Edison didn’t have inverters. The whole grid operated at the same voltage. This is clearly impractical for all but the smallest installations.

Edison had rotary inverters.

Correct me if I am wrong about something here, but I do not think there is any reason you actually need to have three phases with ac transmission. You could just as easily wire your generator to produce single phase power, and transmit that, after all most homes do just fine with a single phase in the US. The difference is that industrial scale motors work better and are easier to design with three phase power. Since industrial usage is the biggest consumer of power the power companies use three phase systems, and consumer households (at least in the US) only use one phase of that power.

Actually, US homes use two phases, 120v outlets only use one phase, but 240v outlets use both phases. If you look in your breaker box, you will see that you have two hot wires, interleaved with each other, a simple breaker connects to one, a 240v breaker (AC, stove, heater, etc) will connect to both.

That’s true. You could run everything off of single phase. But three phase is much more efficient with rotating equipment (motors and generators). I think it’s something to the effect of ~60% efficiency vs 95%+. Motors can be smaller as well due to less torque ripple.

I can’t reply to the below comment, so I’ll just reply to davidelang here. Residential power is NOT two phase. It’s split single phase. That’s a different creature.

One must understand what a “phase” is. When referring to “single phase” or “3 phase” one is not referring to the secondary side (i.e. your home’s power) but to the primary side power. It takes a single phase of HV line to a transformer to create the two phases used in one’s home.

@Joel

Except for a few historic buildings in Philadelphia which do use 2 phase. But definitely not the norm.

Actually a reply to davidelang’s post which does not have a reply option.

US households DO NOT USE 2 phase power, they use 1 phase of the 3 phase power distribution (in my area it is distributed at 7200 volts per phase, 12.47 kV phase-to-phase) that is brought down to 240 volts by the pole/ground mounted transformer and then center tapped to get two 120 volt feeds, which as described above can be paired to run 240 volt equipment (electric ranges and dryers, central and even large window/wall AC units, proper air compressors, welders, etc). If you have power poles near you, the topmost wires will most commonly be a group of 3 wires; if you follow them, one transformer will be hooked to one of the phases, the next transformer down the street will be hooked to another, and the one after that will be hooked to the 3rd phase. It is done like that as 3 phase is the most efficient for transferring power, and hooking transformers to different phase wires balances the load.

The closest analogy would be having +12VDC and -12VDC supplies in a piece of equipment with a common ground: between either the + or – and ground will have 12 volts between it and ground but you can get 24 VDC by using both the + and – connection of the supply. Yes the power distribution is AC so there is no true + and – connector like on a battery, but the concept is similar.

For facilities that may be fed by 3 phase power: any equipment will be powered by either one or two phases (unless it is a 3 phase motor, which will use all 3) and will get 120V single or 208V 2 phase, or possibly 277V single and 480V 2 phase for lighting type circuits or other heavier duty equipment that would benefit from the higher voltages.
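The voltage pairs quoted in this comment all come from the same relationship: in a balanced three-phase system the phase-to-phase voltage is √3 times the phase-to-neutral voltage, whereas the two halves of a centre-tapped split-phase supply simply add. A short Python sketch, with function names chosen here for illustration:

```python
import math


def line_to_line_v(phase_to_neutral_v):
    """Phase-to-phase voltage of a balanced three-phase supply."""
    return phase_to_neutral_v * math.sqrt(3)


def split_phase_v(leg_v):
    """Voltage across both legs of a centre-tapped (split-phase) secondary.

    The two legs are 180 degrees apart, so their voltages add directly.
    """
    return 2 * leg_v


if __name__ == "__main__":
    for v in (120, 277, 7200):
        print(f"{v} V phase-to-neutral -> {line_to_line_v(v):.0f} V phase-to-phase")
    print(f"120 V legs, split phase -> {split_phase_v(120)} V")
```

This is why a 120 V leg pairs with 208 V on a three-phase service but with 240 V in a split-phase house: the first is two phases 120 degrees apart, the second is one phase tapped in the middle.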

Three phase delta is more efficient for power distribution at the cost of having to balance the loadings on each phase.

“AC motors are simply simpler. Lower initial and long term costs. They’re also better for simple applications where all you need is a quick, torque-filled start.”

BLDCs are smaller, more efficient, and getting cheaper; they also have a better torque profile, as torque is under control all of the time.

AC Motors are cheap and their drive mechanisms are also cheap, that is their sole advantage.

In low power applications (fractional kW) BLDC motors are taking over from 1ph and 3ph AC.

But above a few kW AC still reigns, even more so since 3ph electronic drives have become cheaper (they replace big DC motors) and perform very close to BLDC. Their main strength is that they do not require big magnets, which are a reliability concern with regard to temperature and complicate rebuilds (try changing a BLDC bearing!).

I live on board a boat and my life is 12V powered by a bank of deep cycle batteries, charged by the boat’s diesel engine and conditioned by solar panels. Dave’s answer is pretty much spot on. The complication in my life is voltage drop and it is a pure function of distance. The answer is copper and lots of it. I have 100 amp welding cable connecting my solar panels to the regulator and the panels are paired up in series to halve the current and double the voltage to the regulator. I have upgraded the cables to the toilet macerator and the fridge (the 12V DC fridge draws 45W, about 1/3 of the time, which is “a lot” in my life) and the remaining issue is the fresh water pump where I record a drop of almost 1 volt when it is running compared to when it is not. It also draws about 45W when it runs. It’s impossible to avoid 230V entirely – I have a 5kW inverter for vacuum cleaner, dehumidifier, power tools, espresso machine and hair dryer – I run the engine when using it for any length of time – and the inverter draws 20W just in standby – ie., more than the fridge overall. I hate the cigarette lighter sockets but have installed them all over my boat – it is the unfortunate standard and USB chargers, laptop chargers and other equipment all have integrated electronics to step the voltage up or down. This is actually a good thing – they are pretty tolerant of the variable supply voltage from ~10V with dead batteries to 14.8V when on the constant voltage absorption phase of the charging cycle. The biggest step change was switching from 12V halogen bulbs to LED lighting. My first attempt failed because the early LED bulbs were horribly unreliable and suffered from very premature failure, but the present lot are very good and the wiring stays cold!
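A quick back-of-envelope check of the boat numbers above, as a sketch only. It assumes a nominal 12 V supply and rounds "almost 1 volt" up to 1 V; the variable names are mine, not the commenter's.

```python
def average_power(rated_w: float, duty: float) -> float:
    """Average draw of a load that runs at rated_w for a fraction `duty` of the time."""
    return rated_w * duty

fridge_avg_w = average_power(45.0, 1 / 3)   # fridge runs about a third of the time
inverter_standby_w = 20.0                   # drawn continuously while the inverter is on

NOMINAL_V = 12.0                            # assumption: nominal battery voltage
pump_current_a = 45.0 / NOMINAL_V           # current drawn by the 45 W water pump
pump_wiring_ohm = 1.0 / pump_current_a      # resistance implied by a ~1 V drop

print(f"fridge average: {fridge_avg_w:.1f} W vs inverter standby: {inverter_standby_w:.1f} W")
print(f"pump current: {pump_current_a:.2f} A, implied wiring resistance: {pump_wiring_ohm:.2f} ohm")
```

So the fridge averages roughly 15 W, which is indeed less than the inverter's standby draw, and about a quarter of an ohm in the pump circuit is enough to lose a volt.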

PoE is the answer, houses should come with structured cabling cat6 and a PoE switch. Then you can use those outlets simply to power something or put it on the network.

As long as the question is “What only marginally useful DC distribution system could I have for cheap when I already have cat6 run?”

I use PoE for EVERYTHING. I love it more than my family.

PoE doesn’t handle enough power; it’s limited to 12W or 30W (depending on the version).

90W is offered by Linear Devices products. One of the nice things with PoE (apart from having a very high speed data connection integrated within the power connector) is that the ‘hub’ could switch the devices on or off.

The standard doesn’t, but there are non-standard versions by different vendors, up to 90W. The only reason this is not standard is the CAT6 cable, it simply doesn’t have the copper to carry 90W up to 100m, you’d need something thicker and that would push the price…

Up to 90W per plug would fit probably most of portable devices, only downside is the fairly big connector, there would need to be a smaller standardised version for device sockets (wall socket can stay the same).

I would choose 24V-ish AC.

This would allow laptops to charge, and long wire runs without much loss.

You can use a single diode or a bridge to get power, and the generation can be done with two lead batteries and some simple electronics.

Why 24Vish and not steady 24V ?

To accept a simple battery management system.

To accept multiple battery standards.

Say from 20V to 30V.

12V isn’t enough for laptops.

Plus, by using 24V, you can drop the amp.

Or allow more power.

Just my opinion.

A colleague has this with his solar + lead-acid battery in-house network. Uses it also for some lighting.

I’m not your colleague :-) But that’s what my house has. Off-grid, 2.5 kW PV, 1320 Ah lead-acid cells, 24V unregulated lighting and power circuit, 240VAC sine-wave inverter for conventional appliances. 24VDC light bulbs are EXPENSIVE and I’m slowly replacing them with LED. The original owner had the place constructed with dual circuits, but using the same 10 amp 240VAC cabling, i.e. live, neutral and earth, and the earth wire is sealed off on the DC circuits. Yes, it means more cable runs to make sure no DC circuit gets overloaded, but it’s a *lot* cheaper than buying dedicated DC cable. Even running 1 x 24VDC and 1 x 240VAC cable to each room is cheaper when using the same type of cable. The whole thing comes down to two big copper busbars near the batteries, and I could switch an entire DC circuit over to the AC circuit by moving the wire from the DC busbar to the AC, connecting the earth cable, and changing the light switch or socket, if necessary.

I found a big old CRT TV, I think with valves, at a salvage yard. The smell of burnt dust and heated electronics from the back of the box transported me back to sitting in-front of the old Bush TV with four buttons (you had to twist them to tune the channel) during the 70s, happy days flicking between the available channels, ker-chunk.

The main (pun not intended) problem that I see is that existing low voltage devices are currently set up to run on whatever voltage the manufacturer chose. So the best way I can see to make a new standard would be to do something that piggybacks off the existing standards. For example, the new standard connector might run at 5V but at 10 to 20 amps, with an adapter cable to USB A plugs for devices that use a USB plug. Or it might be a more convenient 12V connector and power supply with an inline adapter to an automotive accessory plug. Once this device catches on, manufacturers could start making things that plug into the adapter directly.

I’d try to stick the switch mode power supply in a form factor that could mount inside a standard electrical box, if possible. That would minimize the difficulty in converting existing wiring.

The average house is simply too big for low voltage wiring to be practical.

Even when I’m wiring up largish off-grid solar/battery sites I’ll use an inverter to convert to 230VAC before running cables more than about 15-20 metres.

Add in the fact that you’ll either need to run at least 3 pairs of wires (let’s be generous and say mains, 12V and 5V) or else do DC-DC conversion at the end anyway.

Things like transformers for USB sockets hidden behind sockets are a good space-saver, but there’s no way you would ever want to run 5V all over your house. If you want to run 50V and then convert to 5V then fine, but what the hell would the point be? You’re doing AC-DC and then DC-DC, it’s all going to be much less efficient than what you already have!

https://en.wikipedia.org/wiki/Coaxial_power_connector

Now, if we could just standardize a size…

…and a polarity. I’ve burnt out more negative-tip devices than I should admit.

too right, that’s an exceptionally silly thing about those

There are negative tip devices?

Sorry, there *were*?

Might explain a few faulty devices…!

Yeah, those universal power supplies with the 3, 4.5, 6, 9, and 12 volt output. And I burn out something that should’ve contained at least a diode before the expensive parts…

And then the $30 micro-controller shunts all the reverse polarity voltage to protect that 5c filter capacitor.

This.

I’ve set up my own personal “standards” of polarities, voltages, sizes, even reworked some store-bought devices to abide by them. It’s not practical for the end user, but for me this works well.

the jack connector is for dc :)

Low voltage connectors designed for delicate mouse sized fingers. There are a few things in life that seem to be chasing the “smaller is better” mantra and are causing (me at least) a lot of grief. While certainly better than some of its predecessors, the micro-USB connector that cell-phone chargers seem to have encouraged is still too small. There’s a lot of mechanical stress in constantly inserting that little connector in the little hole. I would vote for setting minimum port and handle sizes for any connector whose duty cycle exceeds once / day. A standard 3.5mm TRS headphone jack is about as small (and short!) as I’d like – very durable. Proven durable. I’m willing to settle for the current USB standards, but barely – do not go smaller, please! I’m getting old and my fingers and eyes won’t be able to perform the micro-surgery needed to keep my gadgets running.

Agreed. Those micro connectors wear out very fast in real life, even if you just leave ‘m plugged in.

For 12V (24V) I prefer the DIN EN ISO 4165 socket. It is smaller and has much better contact reliability. I use it in the garden house and on a portable camping solar system. Only the connector for the panel is XLR. If somebody tries to connect an audio line directly to a small box glued to a car battery and containing a panel meter, it is his own fault. Although probably nothing would be destroyed, as there is no voltage present at the panel-input.

Use a negotiated protocol with existing wiring. It should be possible to build an outlet replacement that signals a main power source to switch the branch circuit between AC and DC power. If there is a conflicting demand – say branch is already on AC and you plug in a DC device – just get a red LED when you plug in the device and it receives no power. You could add this ability to an existing smart outlet for a small cost since it is already able to control the outlet.

These smart outlets would consume power, but they would be made in high volume so a lot of effort can be spent on optimizing their power usage. It is possible to build smart outlets that are active on less than a milliamp of mains current.
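To make the idea concrete, here is a toy sketch of the branch arbitration described above. Everything in it is hypothetical: the `Branch` class, the `Mode` names and the outlet identifiers are invented for illustration, and no real smart-outlet protocol is implied.

```python
from enum import Enum

class Mode(Enum):
    OFF = "off"
    AC = "ac"
    DC = "dc"

class Branch:
    """One branch circuit that the main power source can switch between AC and DC."""

    def __init__(self) -> None:
        self.mode = Mode.OFF
        self.users: dict[str, Mode] = {}   # outlet id -> mode it was granted

    def plug_in(self, outlet: str, wanted: Mode) -> bool:
        """Return True if the device gets power, False for the 'red LED' case."""
        if self.mode is Mode.OFF:
            self.mode = wanted             # first device on the branch picks the mode
        if self.mode is not wanted:
            return False                   # conflicting demand: refuse, supply nothing
        self.users[outlet] = wanted
        return True

    def unplug(self, outlet: str) -> None:
        self.users.pop(outlet, None)
        if not self.users:
            self.mode = Mode.OFF           # idle branch can be renegotiated

branch = Branch()
first = branch.plug_in("kitchen", Mode.AC)    # granted, branch switches to AC
conflict = branch.plug_in("desk", Mode.DC)    # refused while the branch is on AC
branch.unplug("kitchen")
retry = branch.plug_in("desk", Mode.DC)       # granted once the branch is idle
print(first, conflict, retry)
```

The sketch refuses a conflicting request rather than switching the branch, which matches the "red LED and no power" behaviour proposed in the comment.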

The whole “negotiated power” thing only works if all your devices follow the standard. If you get something that doesn’t, boom.

I personally use 12VDC at home for some things, there is a standard plug for RV and truck things, and it works reasonably well.

You can design it so that existing devices don’t need any change. For example, plug in an existing AC device: the socket sees resistance and turns on AC power. New DC devices need some kind of signal; a good one I have seen is embedding a strong magnet between the prongs. When a DC device is plugged in, the outlet sees the magnet and supplies DC.

My thoughts exactly, it should be powered when something is plugged in, providing circuit resistance, and when it detects an open it shuts off. 12VDC is the obvious choice. Perhaps a DC in-wall transformer, with supercap circuit monitoring to close the AC side of the transformer when resistance is present.

A device expecting 12V that can plug into a 120Vac outlet? And needs a special outlet to give it 12Vdc?

Whatever could go wrong there…

Then a glitch causes your smart phone to request 240VAC and it promptly burns down your house

Hmm? Can’t you see several easy solutions for that?

Yup, just write ‘samsung’ on the device, and you can blame the battery instead of your crazy wiring…

This would make for an interesting hacking target…

The problem with low voltage dc rails is the immense size of wiring and cost of the copper to ensure low voltage drop. I mean really at 12V? Come on now, if you want to supply up to 2A of current you would need 12 gauge wire to ensure voltage losses were minimal for only a 20 foot run. Now let’s imagine sending 10 amps at 12V to the whole house, ridiculous! Look at your wire sizes needed to keep the voltage from sagging. There is a reason we use 120 and higher. If I was going to make a DC rail house it would be at 48V so I could at least get some decent power out of it at a couple amps without using ginormous wire sizes.

^This. I worked out that with the 6mm^2 range cable (rated for 50A with 240V AC) that I use for the LED strip lighting in my house (15m cable run), I’ll see 11V of the 12V applied when I reach 600W of lighting. I’m nowhere near 600W though.

DC slip connectors oxidize and since DC current flows thru the middle of conductors they are unreliable. AC current flows on the surface of conductors and so the oxidation is less of a problem making them more reliable.

I don’t think surface effect means anything at 50-60Hz on standard wire gauges.

DC flows through the entire wire (run the skin effect calculation for a frequency approaching zero)

Skin depth for copper at 60Hz is ~8.4mm; AWG12 is only about 2mm in diameter

https://en.wikipedia.org/wiki/Skin_effect
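If anyone wants to run the skin effect calculation themselves, here is a minimal sketch using the standard good-conductor formula, depth = sqrt(rho / (pi * f * mu)). The copper resistivity of 1.68e-8 ohm·m (room temperature) is my assumption.

```python
import math

MU_0 = 4e-7 * math.pi     # permeability of free space, H/m
RHO_COPPER = 1.68e-8      # ohm·m, assumed value for copper near 20 °C

def skin_depth_mm(freq_hz: float, resistivity: float = RHO_COPPER, mu_r: float = 1.0) -> float:
    """Skin depth in millimetres for a good conductor at freq_hz."""
    depth_m = math.sqrt(resistivity / (math.pi * freq_hz * MU_0 * mu_r))
    return depth_m * 1000.0

depth_60 = skin_depth_mm(60.0)
depth_50 = skin_depth_mm(50.0)
print(f"60 Hz: {depth_60:.1f} mm, 50 Hz: {depth_50:.1f} mm")
```

Both results are several times the radius of ordinary house wiring, and the depth grows without limit as the frequency approaches zero, so the current fills the whole conductor in the DC case as well.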

Skin effects can be ignored for both DC and low Hz AC (to a first approximation at least). Oxidation isn’t a problem anyway not even for outdoors installations.

If you want to avoid having a wall-wart for every device, USB outlets are the answer

no, they aren’t ideal, but real standards seldom are.

As others have noted, DC power distribution in the walls doesn’t make sense due to the losses, and the occasional need to plug in a high wattage device.

by the way, in your list of “everything takes less than 100w”, you are leaving out one huge category of devices: computers. Laptop power supplies are pushing 100w and desktops routinely use a lot more.

The current situation with the switching power supply built-in to the outlets is the answer for 90% of things, and using multi-output USB adapters is the real answer for most of the remaining situations.

My work laptop complains if I use a less than 130W brick and will refuse to charge on a 65W one. The adaptor plugged into the dock is 230W or so. My work desk has 3 monitors on it as well. So I need a single outlet that supports, let’s say, 500-600 watts minimum. I skipped the phone, the phone headset, test equipment, speakers, and the occasional additional laptop or two. If everything was pulling power at the same time I could likely push a kilowatt.

At home my desk has a 290W peak desktop and a 300ish watt server, along with a monitor for each. All of this is plugged into a single outlet. On the other side of the wall from the office is the workshop. Hard to run a 2hp bandsaw on really anything other than 240V. Yes they make DC motors large enough, but 1500W ones are still hard to find, expensive, and require external controls.

Want a standard DC connector? Talk to ham radio guys; we have been doing that for decades, and the most recent one is very nice and very cheap.

https://powerwerx.com/anderson-power-powerpole-sb-connectors

Ham radio operators use 12V DC power far more than any other hobby or user on the planet so if you want guidance as to what you should use as a universal, look there.

Also, the same connector scaled up has been a de facto standard for DC for well over 20 years. Forklift batteries and other very large DC power systems have used the Powerpole for a very long time. Most APC UPS’s have them as the battery connector.

I have a smoothed dc psu providing 12v and 5v rails in the comms cabinet area, and two POE injection panels. Anything that needs a dc supply often needs an ethernet connection too and the house is flood wired with cat5, so the patch to the switch just goes via a port on the injection panel and voila, equipment powered.

The only time it gets dodgy is if the device needs more than the usual tiny dc supply delivering sub 2w, then poe is out the window over tiny little cat5e wires.

Poe https://en.wikipedia.org/wiki/Power_over_Ethernet

POE is 12.95W and up to 30W

Trouble is that CAT5e uses thinner wire than CAT6, so CAT5e can’t deliver the full power very far…
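The 12.95W figure is simply what is left after worst-case cable loss. A minimal sketch, using the IEEE 802.3af/at worst-case numbers as I recall them (15.4 W at 350 mA over a 20 ohm loop, and 30 W at 600 mA over a 12.5 ohm loop); treat those inputs as assumptions and check the standard before relying on them.

```python
def power_at_device(source_w: float, current_a: float, loop_ohm: float) -> float:
    """Power left at the powered device after I^2 * R loss in the cable loop."""
    return source_w - current_a ** 2 * loop_ohm

# Assumed worst-case figures for a 100 m channel.
poe_af = power_at_device(15.4, 0.350, 20.0)    # 802.3af
poe_at = power_at_device(30.0, 0.600, 12.5)    # 802.3at ("PoE+")

print(f"802.3af: {poe_af:.2f} W at the device")   # 12.95 W
print(f"802.3at: {poe_at:.2f} W at the device")   # 25.50 W
```

The same formula shows why pushing more current through thin pairs gets expensive quickly: the loss grows with the square of the current.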

I think USB C may be the best new candidate for wall supply ports. It can be configured (by the target) for different voltages and can supply as much current as a low voltage device should want.

The downside is that it’s complex, but as always you can count on the likes of Texas Instruments and ON Semi to reduce the problem to which interface chip to select.

When this concept has been discussed before, 48VDC has always been proposed as the house wiring standard. 48V is the voltage of choice because it’s 2 volts lower than the usual lower limit of “high voltage,” which triggers particular safety regulations, and so on.

The problem really is chicken-and-egg. Houses won’t be built with a whole-house LVDC distribution system until there’s ubiquitous devices available to use such a standard, and they won’t exist until there’s such a standard available. I think USB-C might finally break that logjam.

This is the correct answer, and I can’t wait for AC plugs with USB Type C outputs to start proliferating. USB-C can be negotiated for up to 20V and 5A (100W), which can power most non-appliance devices. Don’t bother shuttling high current DC around the house, the losses in the cable will EASILY negate any efficiency gains. 12AWG wire has a resistance of 1.5 milliohms per foot. If you have a 50 foot run and are trying to provide 12V at 2A, there’s 5% power loss in the cabling alone. God help you if you need more than 12W. When you can build small offline switching supplies at greater than 85% efficiency, there is no point to distributing low voltage DC, and as there already exists (in USB-C) a connector standard with enough capacity for the majority of small devices, the solution seems obvious.

More than 24 watts, I meant. Bah.

I have 12v lighting and power running throughout my house with 900w of solar panels and 5 72 deep cycle batteries.

All my rooms have LED lighting, and every room has 3 12v outlets. Each room has its own fuse at 10a.

And yes I have gone through a lot of wire. A LOT OF WIRE!!!!.

One day I hope to have a story here about my system.

Just so much to do.

And hydro is doing nothing but going up.

And this is the only reason for doing this.

OK we have a lot of power outages. It seems like if a car hits a hydro pole within 10 km (about 6 miles) the power goes out.

What is the gain from low voltage? If you want 10 amps at 12 volts, you still need the same cable cross-section as if it was 230 volts.. :/

Oh, current favorite DC connector ….

https://upload.wikimedia.org/wikipedia/commons/3/3d/SAE_Connector.png

I used these to hack my line trimmer for an external battery and they got quite warm during use. I replaced them with XT60, which are stone cold

Thing that drives me nuts is all the copper wiring we use for things like 3-way switches. It’s insane. Why there isn’t a low-voltage/low-current setup where you run the main power to the device (Say light fixture) and then smaller wiring to the switches is beyond me….

It’s just a simpler design, which keeps the cost down.

Think about a room with 6 pot lights:

With the normal 14-2 romex, you run a single cable to each light in series, then from the last light you run a cable to the switch and then on to the breaker. You’re using 1 material and 1 set of tools.

If you want to use a LV switch, you’d still have to wire the lights in series with 14-2 for power, but then you’d also have to install a controller box (with a microprocessor or solenoid, a step down circuit, etc…) in between the breaker and the lights. Then you’d have to switch materials and run your LV wire to the switch.

14-2 romex costs less than 15 cents per foot. The LV controller would be far more expensive.

And not only that, but you would be inserting another point of failure into your system.

For the vast majority of applications, it’s just not worth it.

If you were an electrical contractor, you’d understand why: money. Time is money. It takes precious time for the electrician to change installation components–wire and tools–to accommodate smaller-gauge wire for switch-legs; it also means (at least) one MORE spool of wire which must be warehoused and hauled to the job site. Translation: it takes more money to do it the way you’d like.

“No matter what they say the reason is, the real reason is always money.”–anon

Because they need to be installable by electricians, not EEs.

In Germany the Speakon connectors are a common sight for electrified bikes and similar DC-powered connections that need a plug that does not fall out when subjected to vibrations. Usually used for PA event/stage loudspeakers the plugs are rated for up to 40A

(https://en.wikipedia.org/wiki/Speakon_connector )

I was going to say the same, NL2s, would be great for DC power. 30A DC for ~$3.50, doesn’t seem too bad.

4-Pin XLRs are pretty nice too.

But really, if you are pushing that kind of current, you really don’t want runs longer than a few feet at low voltages. As others have said, USB-C is really the best for now for home use. 5V for little stuff, no negotiation required. 20V for higher power stuff. Just put a multi-port PSU at your computer desk or in your entertainment center to power all the bits and bobs. If you need more power than USB-C can provide, you are probably better to send 110V the distance.

I suppose I could see an argument for things that need just a few milliamps: clocks, nightlights, etc, but those will take a DC-DC converter anyway, and USB-A would work just as well as a connector standard.

In the commercial A/V world I am seeing more stuff move over to phoenix connectors for in-rack wiring. Quite easy to wire things up that way!

http://www.middleatlantic.com/products/power/dc-power-distribution/dc-power-distribution/pd-dc-125r.aspx

I use NL4s for lots of non-speaker projects, good rugged multipurpose connector.

There are cheaper options, but speakons are just cool, and the panel connectors are available in the standard D size mounting.

I also use Powercons for AC wiring.

RF power transfer anyone? (downside is you have to wear a foil onesie – or is that an upside?)

Oh! look those two people are attracted together!

that “article”/rant is one of the worst i have read on hackaday so far.

the main problem is NOT that there is no standardized connector!

for 10A the voltage drop for your average home installation will be MASSIVE.

at 0,0175 ohm·mm²/m and an average distance of let’s say 15m to the outlet we get 0,35 ohm for the 30m of 1,5mm² cable.

so you have 3,5V of voltage drop.
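The arithmetic behind that figure, as a small sketch. It uses the numbers quoted in this comment (0,0175 ohm·mm²/m for copper, 15 m each way, 1,5 mm² cable, 10 A); the function name is made up.

```python
RHO_COPPER = 0.0175   # ohm·mm²/m, the figure quoted in the comment

def loop_resistance_ohm(one_way_m: float, area_mm2: float, rho: float = RHO_COPPER) -> float:
    """Resistance of the out-and-back conductor pair for a run of one_way_m metres."""
    return rho * (2 * one_way_m) / area_mm2

resistance = loop_resistance_ohm(15, 1.5)
drop_v = 10 * resistance                  # V = I * R at 10 A

print(f"loop resistance: {resistance:.2f} ohm")
print(f"drop at 10 A: {drop_v:.1f} V, i.e. {drop_v / 12:.0%} of a 12 V supply")
```

On a 12 V supply that drop is more than a quarter of the voltage, while on 230 V the same 3,5 V would barely register.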

your only hope to make this come true is that the Trump administration can abolish voltage drop. :-)

and then you still have the nightmarish installation in houses. here in europe you would have the 240V in separate tubing so basically you have to double the whole installation in a house.

building a wall-e type robot with a battery in its belly following you to power your stuff everywhere sounds extremely realistic, cheap and practical in comparison.

the whole article is basically a rant about wanting some magical power outlet with a total lack of technical understanding.

if you dream, at least do it right:

here is a shorter version of the article above, done properly:

i wish there were pixies that could power my device wherever i put it.

If you mean furry pixies that eat sunflower seeds… http://www.otherpower.com/hamster.html

Common existing US home electrical wiring is 10 or 12 gauge. That is 1 ohm per 1000ft. So your 15m run has 0.045ohm resistance, not 0.3ohm. Loss at 10A is 0.45V not 3.5V. US home AC can supply up to 10A 120VAC in a normal outlet. So the current with DC would be similar to the current supplied with AC, the DC system would just supply less power. 48VDC is typically used for runs like this so about 450W is available from DC side. AC supplies up to 1200W.

No it is not. For most circuits it is 14 gauge (1.6 mm diameter), 1 Ohm per 400 feet (120 m). Therefore 15 m run has ~0.125 Ohm resistance.

https://en.wikipedia.org/wiki/American_wire_gauge

yes, you could mitigate the losses by just putting more and more copper in.

anyways you both forgot to factor the way back in. so if you need 15m to get there you need another 15 meters for the pixies to travel back again. so it’s 30m. which makes it 0.25 Ohm. -> 2,5V drop and with that a 20% loss.

so you have to double that number. and the production of the copper cable probably uses more energy than you could save in a lifetime.

in europe we use similar wiring (2,5mm2) too, but mainly for high power devices like the heatpump of my geothermal heating. (16-25A) but you can tolerate the losses at 240V. not at 12V.
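For the gauge argument above, here is a rough sketch that derives resistance from the AWG number instead of quoting table values. It uses the standard AWG diameter formula and an assumed copper resistivity of 1.72e-8 ohm·m; real cable varies with temperature and alloy, and the function names are mine.

```python
import math

RHO_COPPER = 1.72e-8   # ohm·m, assumed for annealed copper near 20 °C

def awg_diameter_mm(gauge: int) -> float:
    """Conductor diameter from the standard AWG formula."""
    return 0.127 * 92 ** ((36 - gauge) / 39)

def awg_ohm_per_m(gauge: int) -> float:
    radius_m = awg_diameter_mm(gauge) / 2 / 1000
    return RHO_COPPER / (math.pi * radius_m ** 2)

def loop_drop_v(gauge: int, one_way_m: float, amps: float) -> float:
    """Voltage drop over the out-and-back path of a run."""
    return amps * awg_ohm_per_m(gauge) * 2 * one_way_m

for gauge in (10, 12, 14):
    drop = loop_drop_v(gauge, 15, 10)
    print(f"AWG{gauge}: {awg_ohm_per_m(gauge) * 1000:.2f} mohm/m, "
          f"{drop:.2f} V dropped over a 15 m run at 10 A ({drop / 12:.0%} of 12 V)")
```

For 14 gauge this gives roughly 0.25 ohm and 2.5 V for the 30 m round trip, in line with the figures in the comment above.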

You are right. There are good reasons why low voltage is not in much use for power distribution.

tl;dr – similar to what markus above said, there are some pesky realities to consider. Just ignoring installation costs and assuming a household bus that magically can match household AC distribution loss, you can decrease system losses by about 4%.

——————————

Below are some fast (think Fermi-type) approximations based on a little Google-fu and some math. Efficiencies are based on the first-page DC-DC and AC-DC converters modules and IC datasheets, which tend to be under optimal conditions.

Amount of wire in an American household: 1 ft of wire per 1 sq foot housing (contractor rule of thumb)

Average American household: 2400 square feet

Given: Copper resistance – 8.26mOhms/meter for AWG14 solid copper wire (a common household distribution level wire)

Total wire resistance for 750m of wire: 6.2 ohms

Average American household electricity consumption: 11,000 kWh/yr

Average American residential electricity cost: ~$0.12 kWh

Amount used for “low voltage” items (residential) – these will be LV bus “candidate” items

* lighting: 11%

* TV and related: 7%

* “other” is 42%, will take 1/4 of that as low-voltage bus compatible (data includes dishwashers, stoves, etc, as well as computers and other small items).

* http://www.eia.gov/energyexplained/index.cfm/data/index.cfm?page=electricity_use

Average watts consumed for candidate items: [11,000,000/(365*24)]*(11%+7%+42%/4) = about 360W

I think I saw a number that there are 24 or so “appliances” that may be LV, so assume replacing 20 devices throughout the house, each consuming an average of 18W

Assume a LV DC-DC buck can achieve 90% average efficiency easily (e.g. 12-to-5 buck, or 24-to-5 buck)

Assume dedicated distributed AC-DC converter achieves 85% avg efficiency (e.g. switcher wall-wart)

Assume a single dedicated high-power distribution AC-DC converter will achieve 95% efficiency (e.g. household LV converter capable of 400W)
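The load figure in the assumptions above can be reproduced in a few lines. This is only a sketch of the same Fermi estimate, using the 11,000 kWh/yr household figure and the percentages listed; the variable names are mine.

```python
HOURS_PER_YEAR = 365 * 24
ANNUAL_KWH = 11_000                        # household consumption used in the estimate
CANDIDATE_SHARE = 0.11 + 0.07 + 0.42 / 4   # lighting + TV + a quarter of "other"
DEVICES = 20                               # assumed number of low-voltage devices

average_household_w = ANNUAL_KWH * 1000 / HOURS_PER_YEAR
candidate_w = average_household_w * CANDIDATE_SHARE
per_device_w = candidate_w / DEVICES

print(f"average household draw: {average_household_w:.0f} W")   # about 1256 W
print(f"low-voltage candidates: {candidate_w:.0f} W")           # about 358 W
print(f"per device: {per_device_w:.1f} W")                      # about 17.9 W
```

That is where the rounded 360W total and 18W per device come from.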

Loss with AC distribution and local AC-DC conversion (current model):

* Current through AWG14 solid wire = P/V = 360W/120V = 3A

* Loss from Cu resistance = I^2/R = 3^2/6.2 = 1.45W

* Loss in local converters = (360W-1.45W)*(1-0.85) = 54W

* Total loss: 54W + 1.45W = 55.5W

* Annual losses cost = (55.5W/1000)*365*24*0.12 = $58.35

Loss with DC distribution (at 12V) and local DC-DC buck

* Current through wire = P/V = 360W/12V = 30A

* Loss from Cu resistance = I^2/R = 30^2/6.2 = 145W

* Loss as Dedicated distribution AC-DC converter = 360W*(1-0.95) = 18W

* Loss in local converters = (360W-145W-18W)*(1-0.90) = 19.7W

* Total loss: 145W + 18W + 19.7W = 182.7W

This is not even factoring in the expected voltage drop across the wire (average about half a volt). Clearly does not work. This is where we assume some special distribution system which reduces wiring loss to AC distribution equivalent (REALLY big aluminum bus bar? unnecessary to deal with at this point).

Loss with DC distribution (at 12V) and local DC-DC buck, with super-duper conductors

Current through wire = P/V = 360W/12V = 30A

Loss from distribution = 1.45W _assumed_

Loss as Dedicated distribution AC-DC converter = 360W*(1-0.95) = 18W

Loss in local converters = (360W-1.45W-18)*(1-0.90) = 34W

Total loss: 1.45W + 18W + 34W = 53.5W

Annual losses cost = (53.5W/1000)*365*24*0.12 = $56.24

In this system, you have saved 2W, or about $2.11 annually.

Buy yourself a gumball for your efforts!

Sweet, so that 95% efficient convertor only has about a 4000 year pay back time, and I thought you’d be waiting until the sun burned out.

While I agree the idea of running AC and DC and the conversion between the two within the walls is an expensive and somewhat scary proposition, I do like the question of how we might be able to eliminate the intermediate power conversion/supply between the wall and the device.

I myself have wondered and looked for solutions of having 5V devices, a la IoT, hanging off the mains for household chores/tasks. In many of my test cases, I do not want a wall socket taken up with these devices and in many not being exposed at all. I haven’t found any all-purpose solution so I am building it myself. (Although any advice given here is greatly appreciated.)

So what I am seeing here is really a question too about transitions. Will we always need AWG14 running through US households? It’s nearly the same question I posed to a Cree engineer. What would an LED light bulb and lamp look like if we did not need to have the bulb retrofit into the current E26 standard? Would the bulb package look different? Could you place the driver in the lamp instead of the bulb? Would that reduce costs? Would that do anything to the lifetime of the bulb or base?

Couldn’t most IoT things be PoE fairly easily? I mean, a Raspberry Pi is powered by a 5V 2A source.

Nice to plot down some numbers, but please use the right formulas :)

Loss from Cu resistance = I^2/R = 3^2/6.2 = 1.45W

Power dissipation in an ohmic restance is I^2 *R.

Apart from that you are stacking frar too many assumptions onto each other which invalidates the whole exersice.

True, I messed up Ohm’s law/power law – Cu loss would be 55.8W in the 120Vac case, not 1.45W.

As for stacking assumptions invalidating the exercise (<— while we are being pedantic, note the correct spelling :D ), note that the calculations started with the preface that this is a Fermi-type approximation, also known as Fermi estimates. These calculations are not exact and never meant to be – they give a ballpark idea of where the numbers are. An order of magnitude off is quite acceptable for these. My assumptions are noted; if there are assumptions you feel are way off (or forgotten), please post (and give the search keywords or link you used).

For reference:

https://en.wikipedia.org/wiki/Fermi_problem

https://what-if.xkcd.com/84/

https://what-if.xkcd.com/106/

One of the biggest errors is the assumption to use the same ball park figures for the 12V wiring as for the 120Vac wiring.

Loss from Cu resistance = I^2/R = 30^2/6.2 = 145W

30^2*6.2 = 5.5kW

And the only thing it prooves is that you do not want to be dependant on 12V power for the whole appartment.

Idle power consumption of a decent dc powerplug is <100mW and this makes the whole idea of DC distribution uninteresting anyway.

And I'm not a native englisch speaker/writer and don't care much about little spelling errors as long as the senence stays in the same order of magnitude.

Yea, I started with the Cu loss calculated for 12V at 14AWG and it was far larger than any other loss (second calculation set). Even more now that I am using the correct equation :). The last calc series I ran assumed the loss was roughly the same as the 120V case to circumvent the series of “what-if’s” involving increasing conductor size to minimize loss. Even neglecting the Cu losses, there seems to be no real gain to providing whole-house LV in parallel with the AC system.

“…sentence stays on the same order of magnitude” :D touche. I’m going to have to remember that one.
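For reference, the copper loss part of this sub-thread re-run with P = I²R. This sketch keeps the estimate's own assumptions (360 W of load and all 6.2 ohms of house wiring treated as one series resistor), which are crude by design.

```python
WIRE_OHMS = 6.2   # total AWG14 wiring resistance from the estimate
LOAD_W = 360.0    # average low-voltage candidate load from the estimate

def copper_loss_w(load_w: float, volts: float, resistance_ohm: float = WIRE_OHMS) -> float:
    """I^2 * R loss in the wiring for a load of load_w supplied at `volts`."""
    current_a = load_w / volts
    return current_a ** 2 * resistance_ohm

loss_120 = copper_loss_w(LOAD_W, 120)
loss_12 = copper_loss_w(LOAD_W, 12)

print(f"120 V: {loss_120:.1f} W lost in copper")   # 55.8 W
print(f" 12 V: {loss_12:.0f} W lost in copper")    # 5580 W
```

The 12 V result is far larger than the load itself, which mostly shows that the single series resistor model stops making sense at that current. The 100x ratio between the two cases holds regardless, since a tenth of the voltage means ten times the current and a hundred times the loss.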

Such an interesting concept, and one I’ve considered myself.

Connector: I like the old center-positive dc barrel jack; it can take large current and easily breaks away if you trip over it. Something of a pain here in the UK because all our mains plugs are at right angles, making the humble vacuum cleaner a trip-wire death trap.

Goal: Eliminate Wall Warts

Method 1:

have a main variable-voltage, high-wattage switching psu near the consumer unit. voltage will drop by different amounts at different sockets due to distance and load, so each socket will have to be on a radial spur to the switching psu and auto-negotiate the voltage.

Method 2:

design an in-wall type of mains socket, 240/120 in, 12v out.

DC barrel jack on the front and will need to be quite a deep unit ~1.5 inch for reasonable power output.

alternate method 2:

Put the dc on the mains!, you can combine ac and dc creating a floating ac voltage with combined negative/neutral. each mains socket will need to isolate, separate and regulate the dc/ac voltage out but should not need a transformer and allow a much slimmer outlet unit.

the dc can be distributed from the consumer unit but will need more advanced protection mechanisms

method 3: put the wart in the middle of the cable so it doesn’t take up space at the socket.

My initial thoughts were to have an intelligent interface, with an initial output voltage of 3.3V at the outlet. The interface would require a third connection to the wall socket (or whatever), which would be used as a 1-wire interface to the power supply for programming purposes. The output of the wall socket would be programmed by an 8 pin processor to set the output voltage, to a max 48V. An initial default 3.3V would power the 8 pin processor for initial setup; the processor would also monitor the incoming voltage and overall current draw of the device to be operated, and send a shutdown command to the wall socket power supply if overcurrent or overvoltage is detected. Unfortunately, this is not in line with the KISS (Keep It Simple Stupid!!!) method of engineering.

The simplest method would be a 48V output with current limiting at the wall socket. It’s a safe voltage, and could be used for high power devices. Secondary voltage and current regulation would be done by each device. A simple yet small connector, able to carry the maximum current efficiently. Long runs of power would not be used due to the voltage drop. Portable 48V supplies could be made available to operate devices far from the usual 110VAC sockets still in place.

John Lightner has a point that I initially thought was a very valid one, but I researched the issue and found that skin effect would not help corrosion. For instance, at 60Hz skin depth copper wire is 8.47mm, indicating the conductor would have to be greater than 1/3 of an inch diameter to realize the effect. DC flows through the entire conductor, not just the middle. Corrosion is caused by poor contact in the connector, which results in overheating because the resistance of the poor connection is not equivalent to that of the wire carrying the current. Skin Effect Reference: https://en.wikipedia.org/wiki/Skin_effect

Correction… For instance, at 60Hz skin depth copper wire is 8.47mm, indicating the conductor would have to be greater than 1/3 of an inch diameter to realize the effect.

Should read: For instance, at 60Hz skin depth copper wire is 8.47mm, indicating the conductor would have to be greater than 2/3 of an inch diameter to realize the effect.
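The 8.47mm and 2/3 inch figures can be reproduced with the standard good-conductor approximation for skin depth, δ = sqrt(ρ / (π f μ)). This is only a sketch: the copper resistivity of 1.7e-8 ohm·m is my assumption (published values vary a little and shift the last digit), and the function name is just for illustration.

```python
import math

MU_0 = 4 * math.pi * 1e-7   # permeability of free space, H/m
RHO_COPPER = 1.7e-8         # assumed copper resistivity, ohm*m


def skin_depth_m(frequency_hz, resistivity=RHO_COPPER, mu_r=1.0):
    """Skin depth in metres, good-conductor approximation."""
    return math.sqrt(resistivity / (math.pi * frequency_hz * mu_r * MU_0))


delta_mm = skin_depth_m(60.0) * 1000.0
# The effect only matters once the conductor radius exceeds one skin depth,
# i.e. the diameter exceeds two skin depths.
diameter_in = 2 * delta_mm / 25.4

print(f"skin depth at 60 Hz: {delta_mm:.2f} mm")
print(f"diameter of two skin depths: {diameter_in:.2f} in")
```

Two skin depths comes out at roughly two thirds of an inch, which matches the corrected figure and is far thicker than any household wiring.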

AC does help with corrosion because electrolytic effects are net zero.

look at what you are proposing compared to what USB-C is already shipping.

While your proposal has higher capacity, USB-C is already out there and will go to the 100w of power that the original article listed as the common cut-off for devices (a figure that will probably drop over time anyway)

don’t try to re-invent the wheel, take advantage of what is already in mass production and being used on devices.

All you need to do is deploy in-wall USB-C power supplies and use them.

My reasoning for the higher voltage is that power capacity is higher, and since everything currently in use is at a significantly lower voltage, voltage drop over the cable to the device becomes irrelevant. A USB-3 hub for instance would take the incoming 48V and regulate down to 5V, 12V, or 20V as desired by each device, and ALWAYS have sufficient power for each output, and not be loading down a computer power supply. It also allows for voltages higher than 20V if the standards change, which possibly could.
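To put rough numbers on the voltage drop argument, here is a small sketch comparing the same load on different bus voltages. The 60 W load and 0.1 ohm round-trip cable resistance are made-up illustration values, not figures from this thread, and the load current is approximated as P / V.

```python
def cable_drop(load_w, bus_v, cable_ohm):
    """Return (current in A, drop in V, drop as a fraction of the bus voltage).

    Approximates the load current as P / V and ignores the effect of the
    drop itself on the load.
    """
    current_a = load_w / bus_v
    drop_v = current_a * cable_ohm
    return current_a, drop_v, drop_v / bus_v


LOAD_W = 60.0     # hypothetical load
CABLE_OHM = 0.1   # hypothetical round-trip cable resistance

for bus_v in (5.0, 12.0, 20.0, 48.0):
    current_a, drop_v, fraction = cable_drop(LOAD_W, bus_v, CABLE_OHM)
    print(f"{bus_v:4.0f} V bus: {current_a:5.2f} A, "
          f"drop {drop_v:.3f} V ({fraction:.2%})")
```

Because the fractional drop scales with 1/V squared for a fixed load, going from 5V to 48V shrinks it by a factor of about 92, which is the point being made about the higher distribution voltage.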

The way I read this article, it is to supply power to devices, and that’s all. It is not addressing any particular standard. A safe power source that can be used for USB, monitors, amplifiers, projects under development, etc. Also, it is not meant as a replacement for the 110VAC mains already in use, and would eliminate the multitude of Wall-Warts, and larger power supplies we currently use. For instance, I have several projects that could use such a system. It would eliminate the five lab supplies that currently take up very valuable bench space. This article has spurred me to build such a system with multiple outputs.

I just researched DC-DC buck converters as well as analog regulators, and found absolutely no reasonably priced supplies that would take 48V. Although I feel that this is the best voltage for distribution, currently there is just nothing out there that is capable of a 48V input. So, my DC distribution project has been reduced to just 24V, which should suffice for most everything I need.

have you looked at surplus telecom equipment? vicor dc/dc converters go for cheap on ebay.

I could do that for sure. I was looking at the bigger picture of manufacturing, which would actually be able to source anything they need. Currently though, there is very little 48v new stock.

Dear Jenny,

I certainly understand that you would want to have a DC power plug… well then how high does the voltage need to be: 48, 24, 12 or perhaps the already dominant 5V? And because you can’t feed your hair dryer with low voltage DC, you always need to have a set of double standards, low voltage DC and high voltage for big users.

Considering the impact of USB and the number of devices using it… well I guess we already have such a candidate, and sure, there is a lot wrong with the USB 2 plug, but it did serve our needs for the last decade and probably will for the next. So end of discussion about the plug.

The true discussion about the wall warts and laptop charger bricks and TV set-top boxes that require an external power supply all comes down to certification. If you want to build a device (for instance a set-top box for your TV) that meets the CE and/or FCC standards, it needs to comply with the rules for the given function. If you place a power supply inside your set-top box, the whole set-top box needs to comply with the high voltage regulations. If you move the power supply outside the set-top box, the set-top box itself no longer needs to comply with the (more complicated) high voltage rules and is therefore much easier to certify. Although this is understandable from a designer’s point of view, the resulting effect is that almost every modern design throws in an external DC power supply (most likely 12V or 5V, which is regulated down inside the device to an even lower, more stable voltage)… The whole situation is completely crazy (perfectly indicated by the image on top of this article); I guess we all share this problem.

And we all know that the power supply (little brick, wall wart, etc.) would have easily fitted in most applications (like the DVD player, the set-top box, the XBOX (whatever version), the WII, the wifi modem/router… and the list goes on and on…)

Going over to a low voltage situation in order to address this problem is most likely not the real solution. The real problem has to do with safety regulations, and shifting this (literally) to the other side of the wall (embedding the DC power supply into the home infrastructure) will be very difficult and expensive to do properly and will never solve the problem completely.

Kind regards, Jan

PS: I liked the comparison with the car and person with a red flag, but honestly, DC power isn’t as easy as it seems.

Do the math about possible losses, do some research about breaking inductive loads, etc. It goes far beyond choosing a voltage and a plug; please think a little bit harder before you rant (though I fully understand).

Dear Jan,

You are bringing up some points and treating them as the whole truth. Certification will dictate some choices – having an external supply allows it to be certified separately, allowing reuse without further certification among designs and exclusion if the base product requires a change. However, an engineer will recognize that it is, as always, a series of tradeoffs. Moving the power brick outside the product decreases heat in the product, removes internal EMI sources, allows certain ergonomic profiles, and even simplifies design (if you can buy a pre-built supply that does what you need that is less development time as well as one less Certification headache). While there certainly are certification advantages to external supplies, it is limiting to think of this as simply a Certification issue. At best, it is usually an economies of market and scale decision.

Low voltage distribution is indeed not the real solution, but again, I think you will find the stopping points are raw economics well before you arrive at safety / certification. LV distribution falls into SELV (Safe Extra-Low Voltage) ratings, which tend to be far less stringent than hazardous voltage levels.

Okay, I have to admit, I let myself go (a little bit). You certainly have a point to which I cannot disagree. Thanks for your comment.

And likewise thank you for bringing up certifications / EMC compatibility / safety. They are a topic that regularly gets lost, forgotten, or glossed over (even (or maybe especially?) in industry), and they are one of the hurdles between an idea that works on the bench and a widespread usable system.

I understand where the author is coming from, low power DC equipment is ubiquitous these days what with our computers, phones and TVs. I actually have a setup on my lab bench designed to cut cable clutter by using a high wattage computer PSU, this single box supplies power to all the lighting (which takes the form of low voltage led and halogen bulbs) and some instruments on my bench. For anything that needs a voltage that isn’t 12v, 3v, or 5v, I just use cheap Chinese DC-DC converters in a 3d printed enclosure. (Btw molex minifit jr is a great connector and we should all be using it)

48V systems for lower power devices would be practical for small/medium electronics, and something similar is already used in PoE systems, but it would be wildly impractical for appliances like electric ovens, heaters, washing machines and dryers. We could have two sets of electrical lines running through the walls, but then what’s the point of creating another standard?

Ever looked at old fashioned telco standby power? 48V supplied by some *huge* 2A wet lead acid cells with bus bars beyond belief – I have no idea what current those battery banks were rated for, but I know that it wasn’t that infrequent for telco engineers to vaporise spanners by accidental short circuiting – supplying 48V to an oven from that sort of rig (NEBS power basically) would require some huge busbars, or wire bundles, but is doable.

If I understand correctly, US electric ovens already have their own separate power supply anyway to deliver 220V 3 phase power separate from the 110V single phase for most accessories. This is just taking that further, although if US ovens are anything like UK ones, you’re looking at 12V at 200A to get near the same power rating.
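As a rough check on that last figure: 12V at 200A implies an oven of about 2.4 kW, and the current needed for the same power at other voltages follows from I = P / V. The voltages in the loop are just illustrative picks, and the function name is made up.

```python
def current_for_power(power_w, voltage_v):
    """Current in amperes needed to deliver power_w at voltage_v (I = P / V)."""
    return power_w / voltage_v


# Power implied by the "12V at 200A" figure in the comment above.
OVEN_W = 12.0 * 200.0

for voltage_v in (12.0, 48.0, 240.0):
    amps = current_for_power(OVEN_W, voltage_v)
    print(f"{voltage_v:5.0f} V -> {amps:6.1f} A")
```

Even at 48V the same oven would still need around 50A, which is why the busbars get mentioned.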

The small sites I helped perform DC upgrades for had two -54v banks of 24 2.3v sealed lead acid cells that weighed 300lbs per cell. The rectifiers were capable of 2000 amps @ -54v in a single rack cabinet. It was lots of fun carrying them up two flights of stairs.

A not uncommon 24V/20A connector is the DIN Hella plug (http://www.maplin.co.uk/p/20a-standard-hella-plug-a94gw) which is present in a lot of modern trucks (at least in Europe) and is also (in 12V guise) fitted to a lot of BMW Motorcycles; connection is a lot more positive than the cigar lighter barrel, while still being fairly manageable.

As for the issues with voltage drop due to cable run, these can to some extent be addressed by changing your wiring standard to a ring-main where every outlet is on a ring, with single-outlet spurs reduced to a minimum. This does require the devices themselves to be fused, as otherwise you’ll lose the entire ring if there’s a fault, but it has worked well enough in the UK for the last 60 years for AC (240V/32A per ring for sockets).

I have a plan…

Install inch by half inch copper bus bars in every wall now while copper is cheaper…

Claim it’s for DC distribution…

Rip it all out and sell it in next commodities bubble…

Enjoy 5 years “free” electricity off the proceeds.

I’d settle for unregulated AC with a low enough voltage that it becomes practical as direct input to a switching PSU, thus eliminating the need for external transformers in most electronics gear. It’s really only the cabling mess that bothers me.

If you are going to build any electronics into a non consumer replaceable wall outlet it had better last for decades. It will have no electrolytic caps or spike vulnerable semiconductors inside it.

Every time I smell a new CFL I smell phenolic or ginger-nutmeg cap juice, yuck.

If your wall outlets aren’t consumer-replaceable, you need better consumers!

I’m guilty of installing wall outlets I’m not real sure will last decades (particularly a GFI with built-in LED night-light in the bathroom), but they cost 20 bucks and take 20 minutes to install — if it goes out in 5 years, I’ll replace it then.

WTF? Why do your outlets have individual GFIs on them? Why not do the entire circuit?

they don’t make GFI breakers for all styles of panels. they also cost a lot more than a couple individual GFI outlets. so if you are in an older house you 1) may not have a choice, and 2) it may be cheaper to replace the (few) outlets that need the protection.