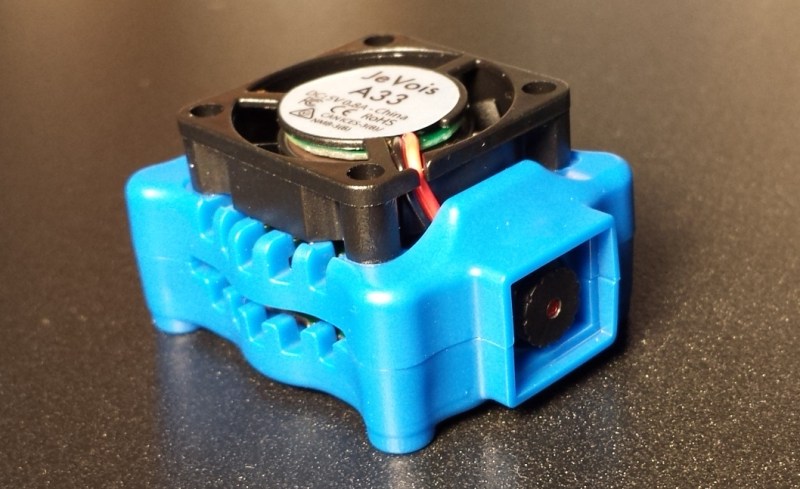

JeVois is a small, open-source, smart machine vision camera that was funded on Kickstarter in early 2017. I backed it because cameras that embed machine vision elements are steadily growing more capable, and JeVois boasts an impressive range of features. It runs embedded Linux and can process video at high frame rates using OpenCV algorithms. It can run standalone, or as a USB camera streaming raw or pre-processed video to a host computer for further action. In either case it can communicate with (and be controlled by) other devices over a serial port.

But none of that is what really struck me about the camera when I received my unit. What really stood out was the demo mode. The team behind JeVois nailed an effective demo mode for a complex device. That didn’t happen by accident, and the results are worth sharing.

The Importance of a Good Demo

When it comes to complex systems, a good demo mode is essentially an elevator pitch for the unit’s capabilities. To a user, it answers “what can this do, and what possibilities does it open for me?”

The JeVois camera’s demo mode succeeded in this by doing several things:

- Make the demo self-contained and easy to start. Require a minimum of parts or setup from the user. After putting the system image onto the included SD card, I only needed to plug it into my laptop and start a camera viewer.

- Make it interactive. Respond to user input immediately, and show the processes at work as much as possible.

- Keep it simple. The demo isn’t the device’s one and only opportunity to explain everything! Leave the user free to focus on absorbing what is being shown; avoid bogging the user down with figuring out an interface or troubleshooting issues.

Demo mode on hardware is frequently an afterthought, if it exists at all, but it deserves attention and polish, if for no other reason than that it is the one part of a product that virtually every user is certain to engage with.

Setup and Demo of JeVois

I had to copy a system image to the microSD card to ensure I had the latest version of the JeVois software, but after that all I needed to do was plug it into a USB port (where my computer recognized it as a USB camera device) and open a webcam viewer. The demo then started automatically. The hardware streams a looping “tour” video blended with live camera images and the output of a number of vision processes running in parallel. To the host OS, it’s just a video stream from a USB camera like any other.

Not every element is flawless; the handwritten number recognition in particular is hit or miss, although the demo acknowledges this. The demo video below was recorded in a white room with sunlight, but things still look on the dim side. Finally, the camera and lens clearly aren’t intended to be changed or adjusted. I’d have preferred a module with an M12 (S-mount) lens, but the camera on JeVois at least makes for a very small package. Regardless, the demo succeeds in easily and concisely showing off what’s possible.

[wpvideo 52uanku3]

The default camera system image comes with a variety of bundled machine vision modules and demos, each anchored to a specific camera setting. For example, one of these modules recognizes ArUco markers. To activate this mode, set the camera to “YUV 640×500” and the module activates immediately. A recording of this mode is below.

[wpvideo 3WacdWRb]
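The “each module is anchored to a camera setting” behavior amounts to a lookup table from requested video mode to module. Below is a minimal Python sketch of that lookup, assuming a `videomappings.cfg`-style line format of `OUTFMT OUTW OUTH OUTFPS CAMFMT CAMW CAMH CAMFPS Vendor Module`. The two sample entries (formats, frame rates, module names) are hypothetical illustrations, not lines copied from the shipped image, so consult the JeVois documentation for the real table.

```python
from typing import NamedTuple, Optional


class VideoMapping(NamedTuple):
    """One mapping: the output mode the host requests -> the module that runs."""
    out_fmt: str
    out_w: int
    out_h: int
    out_fps: float
    vendor: str
    module: str


# Hypothetical entries in the assumed
# "OUTFMT OUTW OUTH OUTFPS CAMFMT CAMW CAMH CAMFPS Vendor Module" layout.
SAMPLE_CFG = """
# comment lines and blank lines are ignored
YUYV 640 500 20.0 YUYV 320 240 20.0 JeVois DemoArUco
YUYV 640 300 60.0 YUYV 320 240 60.0 JeVois DemoSaliency
"""


def parse_mappings(text: str) -> list[VideoMapping]:
    mappings = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        fields = line.split()
        # Fields 4-7 describe the sensor mode and are not needed for the lookup;
        # any trailing fields after the module name are ignored.
        mappings.append(VideoMapping(
            out_fmt=fields[0],
            out_w=int(fields[1]),
            out_h=int(fields[2]),
            out_fps=float(fields[3]),
            vendor=fields[8],
            module=fields[9],
        ))
    return mappings


def module_for(mappings: list[VideoMapping], fmt: str,
               width: int, height: int) -> Optional[str]:
    """Return the module anchored to the requested output mode, if any."""
    for m in mappings:
        if (m.out_fmt, m.out_w, m.out_h) == (fmt, width, height):
            return m.module
    return None
```

Here `module_for(parse_mappings(SAMPLE_CFG), "YUYV", 640, 500)` returns `"DemoArUco"`. The sketch matches on format and frame size only; a fuller lookup would also compare the frame rate, since the same size at different rates could select different modules.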

Details on detected markers are sent over the serial port, so an Arduino or other microcontroller can interface with, and even control, the camera. There are many other bundled modes in the default image, and of course users are free to develop their own.
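On the receiving side, handling those details mostly comes down to splitting text lines into fields. The Python sketch below assumes a JeVois-style “standardized” 2D message of the form `N2 id x y w h`, with ArUco IDs written as `U` followed by the marker number and coordinates centered on the image. The exact format depends on the module and its serial-style settings, so verify it against the JeVois documentation; `Detection` and `parse_n2` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Detection:
    marker_id: int  # numeric ArUco ID, with the assumed "U" prefix stripped
    x: int          # marker center x (assumed image-centered coordinates)
    y: int          # marker center y
    w: int          # marker width
    h: int          # marker height


def parse_n2(line: str) -> Optional[Detection]:
    """Parse one assumed 'N2 U<id> x y w h' line; return None for anything else."""
    parts = line.strip().split()
    if len(parts) != 6 or parts[0] != "N2" or not parts[1].startswith("U"):
        return None
    try:
        marker_id = int(parts[1][1:])
        x, y, w, h = (int(p) for p in parts[2:])
    except ValueError:
        return None  # malformed numbers: ignore the line rather than crash
    return Detection(marker_id, x, y, w, h)
```

On a PC, the lines could come from pyserial (`serial.Serial(port, baudrate).readline()`, decoded to text); the port name and baud rate depend on your setup. The same split-and-convert logic ports directly to an Arduino sketch.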

Other Embedded Machine Vision Cameras

![Pixy [image source: CMUcam.org]](https://i0.wp.com/hackaday.com/wp-content/uploads/2017/05/pixy.jpg?w=453&h=339&ssl=1)

![OpenMV [image source: OpenMV]](https://i0.wp.com/hackaday.com/wp-content/uploads/2017/05/openmv.jpg?w=339&h=339&crop=1&ssl=1)

The CMUcam5 “Pixy” (also funded on Kickstarter), which shipped in 2014, had a clever demo mode. Out of the box, it could recognize colors held in front of it, and it even had headers for hobby servos in a pan/tilt configuration. This allowed the camera to be trained to track a color and then move to follow it, all without involving a computer. It was also possible to view raw or processed video, but that required connecting to a laptop or desktop over USB and running a Pixy-specific program to access video and configuration. The ability to “see what the camera sees” was a great feature that made setup and troubleshooting much easier, even if it was limited.
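The train-then-follow behavior can be illustrated with a generic hue-threshold tracker driving a proportional pan controller. This is a sketch of the general idea, not Pixy’s actual firmware algorithm; the hue tolerance, saturation floor, gain, and 0-180 degree servo range are hypothetical values chosen for illustration.

```python
import colorsys
from typing import Optional

Pixel = tuple[int, int, int]  # (r, g, b), each 0-255


def hue_of(p: Pixel) -> float:
    r, g, b = (c / 255 for c in p)
    return colorsys.rgb_to_hsv(r, g, b)[0]  # hue in [0, 1)


def train(samples: list[Pixel]) -> float:
    """'Teach' a color: the mean hue of sample pixels held in front of the camera.
    (A naive mean; it misbehaves for reds that straddle hue 0/1.)"""
    return sum(hue_of(p) for p in samples) / len(samples)


def matches(p: Pixel, target: float, tol: float = 0.05, min_sat: float = 0.3) -> bool:
    r, g, b = (c / 255 for c in p)
    h, s, _ = colorsys.rgb_to_hsv(r, g, b)
    d = abs(h - target)
    d = min(d, 1 - d)  # hue is circular
    return s >= min_sat and d <= tol  # skip washed-out, near-gray pixels


def centroid_x(image: list[list[Pixel]], target: float) -> Optional[float]:
    """Mean column of all matching pixels, or None if the color isn't visible."""
    xs = [x for row in image for x, p in enumerate(row) if matches(p, target)]
    return sum(xs) / len(xs) if xs else None


def pan_step(angle: float, cx: float, width: int, gain: float = 20.0) -> float:
    """Proportional control: nudge the pan servo toward the blob, clamped to 0-180."""
    error = cx / (width - 1) - 0.5  # -0.5 at the left edge, +0.5 at the right
    return max(0.0, min(180.0, angle + gain * error))
```

Each frame, the tracker finds the blob’s centroid and moves the servo a step proportional to how far off-center it is. Whether a positive error should increase or decrease the angle depends on how the servo is mounted.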

The OpenMV project is an open-source, Python-enabled machine vision module that was a semifinalist for the Hackaday Prize in 2014 and has grown since then. OpenMV have just started shipping their newest M7 cameras, which can even emulate a Pixy in UART mode for compatibility. We’d love to see a good demo to show off its capabilities.

Are there any other new offerings in the realm of embedded machine vision cameras, or particularly good demos? Let us know in the comments. And speaking of the Hackaday Prize, don’t forget that 2017’s iteration is currently underway.

I hope this new system is good. I tried the Pixy camera about 1.5 years ago and it was quite useless. It could only track things if there was incredibly high contrast, and it would incorrectly identify colors on a brown desk as pink or purple… Fortunately, returning it (on Amazon) was very simple.

It’s amazing, doubly so given how inexpensive it is. About the biggest issue I have with it is the low-light performance, which is pretty poor: the sensor is an OV9653, which is quite old, and the lens is tiny. You can boost the gain quite a bit, but don’t expect to do much with it at night in normal home lighting. In daylight or office/task lighting it’s fine.

There is a reason why most industrial machine vision applications use monochrome cameras. Besides throwing out nearly 2/3 of the light due to the Bayer filter, you also have to do some interpolation.
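To make the interpolation point concrete: on a Bayer sensor each photosite records only one of red, green, or blue (half the sites are green, a quarter each red and blue), so the two missing values at every pixel have to be estimated from neighbors. Below is a minimal sketch of the simplest approach, bilinear interpolation of the green channel, assuming an RGGB layout; other sensors use GRBG, BGGR, or GBRG, and real pipelines use more elaborate, edge-aware demosaicing.

```python
def is_green(x: int, y: int) -> bool:
    # RGGB tiling: even rows are R G R G ..., odd rows are G B G B ...
    return (x + y) % 2 == 1


def green_channel(raw: list[list[int]]) -> list[list[float]]:
    """Full-resolution green plane from an RGGB mosaic via bilinear interpolation."""
    h, w = len(raw), len(raw[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            if is_green(x, y):
                out[y][x] = float(raw[y][x])  # measured directly
            else:
                # R or B site: its 4-neighbors are all green, so average them,
                # using fewer neighbors at the image border.
                nbrs = [raw[ny][nx]
                        for nx, ny in ((x - 1, y), (x + 1, y), (x, y - 1), (x, y + 1))
                        if 0 <= nx < w and 0 <= ny < h]
                out[y][x] = sum(nbrs) / len(nbrs)
    return out
```

Half of the green plane is therefore estimated rather than measured, and red and blue fare worse (three quarters estimated each), which is where debayering artifacts come from.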

Frankly, I don’t understand the obsession with color cameras; they tend to overcomplicate processing, have much larger images, and the images themselves are less reliable (color crosstalk, chromatic aberrations, debayering artifacts).

There’s no “obsession” with colour cameras; they’re just cheap and readily available on the market. Nobody wants a monochrome sensor in a phone.

blazingeclipse has a point, though. You don’t need a color image for many applications. I get that more color cameras are made because of the smartphone market, which is nice. I would gladly take a monochrome camera (not in a phone) with the same specs minus the color, at a lower price, if I didn’t need color.

I have one. Works pretty well, but doesn’t do eye tracking. It’s really a kind of demo tool to show some capability, but limited beyond that.

There *is* an eye tracking module in there, it’s configured on one of the greyscale modes.

In any case, the provided modules are just examples; this is intended for people to write and run their own modules on. Your complaint is almost like saying the Arduino is pretty limited because all it does out of the box is blink an LED.

What is inside the JeVois? I wanna see someone doing a Xilinx Zynq-based camera platform.

Website says it has a quad core ARM processor at 1.35 GHz, and two GPU cores. Seems rather capable for the camera it uses.

While it’s very capable, hardware-accelerated processing will be faster and offer closer to realtime performance. No one has really done an FPGA-based camera like the OpenMV, JeVois, or CMUcam5 Pixy.

I don’t know what you mean by “realtime performance”; the JeVois is already able to run at really high frame rates at decent resolutions. None of this is post-processed. The demo runs several different processes simultaneously (saliency, object detection, character recognition, and face recognition) at 60fps, and it’s only using 25% of the (4-core) CPU and none of the GPU.
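For scale, here is the per-frame time budget at the quoted 60fps, and what 25% of a four-core CPU amounts to:

$$
t_{\text{frame}} = \frac{1}{60\ \text{s}^{-1}} \approx 16.7\ \text{ms},
\qquad
0.25 \times 4\ \text{cores} = 1\ \text{core-equivalent}
$$

Taken at face value, the whole multi-algorithm pipeline fits in roughly one core’s worth of compute within each 16.7 ms frame.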

Can the GPUs be harnessed for vision? A lot of the heavy lifting in machine vision is doing mass mathematics on arrays of pixels. GPUs are full of hardware like that.

Probably not as good as an FPGA but a shitload cheaper.

Yes! It comes out of the box with SDK support and at least one example.

Allwinner A33, Cortex A7.

http://jevois.org/doc/Hardware.html

Had a feeling it was an Allwinner; it’s the only chip that can quote that kind of power at low cost. I have mixed feelings about them in general.

I don’t know of any integrated solution, but you can pair an existing Zynq devboard with a MIPI based camera rather easily. I have done it before. You will need to produce FPGA machine vision code though.

Elphel 10393 is based on Xilinx Zynq 7030

JeVois seems like a pretty great robotics camera for only $50!

I’m sold.

Thanks for a very nice review!

For the dark video and poor low-light performance issue, please download and flash the latest microSD disk image from http://jevois.org/start

We have widened the allowed range of autogain in the kernel camera driver and it helps a lot in our testing. Also improved the lens correction parameters which yields more uniform lighting.

The pre-loaded microSD card that ships with the turnkey kits was flashed a while ago and does not yet have this feature.

Please update that old SDK you provide that relies on Ubuntu 16.10. I wish there were better integration and support here.

None of the methods listed here: https://github.com/jevois/jevois/blob/master/INSTALL actually work today, because of outdated information.

A VM containing all SDK, ready to run the examples would be VERY welcome.

Being limited to the demo mode makes the whole jevois experience plain frustrating.

Here is a comparison of OpenMV vs JeVois: https://diyrobocars.com/2017/05/07/first-experiments-with-jevois-computer-vision-module/

The IDE and the ability to run MicroPython make OpenMV a superior product despite its limited hardware: within two hours of unboxing, it’s possible to run code on OpenMV. With JeVois, in two hours it is plain impossible to do anything other than change the config files and play with those. Meh :(

Yes, good timing: incidentally, the INSTALL file you refer to was updated shortly before your post to address compilation issues raised by some users. Please check the history log on GitHub for the updated bits. A VM image is certainly a great idea; coming soon!

Perhaps, but you’re gonna have to write almost everything from scratch on the OpenMV. The JeVois gives you full access to OpenCV’s extensive libraries and community. And (full!) Python support is coming, but it’s trivial to build an image with Python and the OpenCV bindings included *right now* (I’ve done it), and it’s not much harder to write a module with a bit of boilerplate to pipe the frame inputs and outputs through a Python binding to an arbitrary script.

Also, the issues with the SDK are not because it’s ‘old’; there are just a few teething issues around the precompiled release, since Buildroot trees aren’t generally intended to be transplanted. I’ve already submitted pull requests fixing a bunch of the issues, and the rest is coming soon. Even if you build it from scratch, a few hours’ wait while the SDK builds isn’t a big deal, since you only do that once. A product’s superiority isn’t defined by the first two hours; it’s defined by how much you can do with it in the long run.

What you really want is this:

https://unibap.com/product/intelligent-vision-system/

The question is, can you afford it?

I got one of these JeVois cameras during the Kickstarter campaign. I’m more of a mechanical guy, and I’m really impressed with how easy it is to use with no knowledge of OpenCV.

So you can’t see the embedded/linked video in this HaD post? Neither can I – at least not without allowing tons of hanger-on third-party linked scripting sites. So just move along…

I didn’t have any trouble figuring out which sites to temporarily allow, and third party javascript for streaming video seems like a pretty legitimate use to me.

I might order a few as the price was much more reasonable than I expected.

Global or rolling shutter? I ordered one but didn’t see it specified.

We got the JeVois and it works great; however, I only get around 30fps at 640×480. How are you getting such high performance? Custom code? Thanks!

Helps if I add that I was running ArUco.

Be careful, however. I ordered one of these from JeVois via Amazon (their web site said “order from Amazon if you want it before Xmas”), and what I received was an empty box. I’m sure these guys aren’t a scam, since I know people who successfully ordered them (and people in the comments apparently got hold of them). But… you know… caveat emptor. You *may* get an empty box if you order one of these. Maybe they ran out and just decided shipping empty boxes was an acceptable alternative?

Moral of the story here: 100% of the items I bought from these guys were not as described.

YMMV.