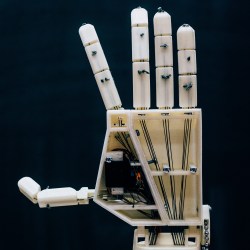

A team of students in Antwerp, Belgium, is responsible for Project Aslan, which is exploring the feasibility of using 3D-printed robotic arms to assist with and translate sign language. The idea came from the fact that sign language translators are few and far between, and it's a task that robots may be able to help with. In addition to translation, robots may be able to assist with teaching sign language.

The project set out to use 3D printing and other technology to explore whether low-cost robotic signing could be of any use. So far the team has an arm that can convert text into finger spelling and counting. It’s an interesting use for a robotic arm; signing is an application for which range of motion is important, but there is no real need to carry or move any payloads whatsoever.
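The article does not describe how Project Aslan's software turns text into finger spelling and counting. As a rough illustration only, such a pipeline could look up a hand pose for each character and then map that pose to actuator targets. Everything below is hypothetical: the names `plan` and `to_servo_angles`, the five-value "curl" pose, the one-servo-per-finger assumption, and the pose values themselves, which are placeholders rather than accurate ASL handshapes.

```python
# Hypothetical sketch of text -> finger spelling. Not Project Aslan's actual code.
from dataclasses import dataclass
from typing import Dict, List, Tuple

# Curl per finger (thumb, index, middle, ring, little):
# 0.0 = fully extended, 1.0 = fully curled. Assumed representation.
Pose = Tuple[float, float, float, float, float]


@dataclass
class Step:
    symbol: str
    pose: Pose
    needs_motion: bool  # True for letters traced in the air rather than held


# Tiny placeholder table covering a few letters and digits (for counting).
# A real table would cover the whole alphabet and 0-9.
POSES: Dict[str, Pose] = {
    "a": (0.0, 1.0, 1.0, 1.0, 1.0),
    "b": (1.0, 0.0, 0.0, 0.0, 0.0),
    "l": (0.0, 0.0, 1.0, 1.0, 1.0),
    "z": (1.0, 0.0, 1.0, 1.0, 1.0),  # starting pose; the Z itself is traced
    "1": (1.0, 0.0, 1.0, 1.0, 1.0),
    "2": (1.0, 0.0, 0.0, 1.0, 1.0),
}

# In ASL fingerspelling, J and Z are signed with movement, not a static pose.
MOTION_LETTERS = {"j", "z"}


def plan(text: str) -> List[Step]:
    """Turn text into a sequence of hand poses, skipping whitespace."""
    steps: List[Step] = []
    for ch in text.lower():
        if ch.isspace():
            continue
        if ch not in POSES:
            raise KeyError(f"no pose defined for {ch!r}")
        steps.append(Step(ch, POSES[ch], ch in MOTION_LETTERS))
    return steps


def to_servo_angles(pose: Pose, max_angle: int = 180) -> List[int]:
    """Map each finger's curl to a servo angle (assumes one servo per finger)."""
    return [round(curl * max_angle) for curl in pose]


if __name__ == "__main__":
    for step in plan("Ab z"):
        print(step.symbol, to_servo_angles(step.pose), step.needs_motion)
```

A static lookup like this handles most letters, but letters such as Z need a motion trajectory rather than a single held pose, which is why the sketch flags them instead of treating one pose as sufficient.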

A single articulated hand is a good proof of concept, and these early results show some promise and potential, but there is still a long way to go. Sign language involves more than just hands: it is performed with both hands, the arms, and the shoulders, and it incorporates motion and facial expressions. Also, most sign language is not finger spelling (which is reserved primarily for proper names or specific nouns), but a robot hand that can finger spell is an important first step toward everything else.

Future directions for the project include adding a second arm, adding expressiveness, and exploring the use of cameras for teaching new signs. The ability to teach different signs is important, because any project that aims to act as a translator or facilitator needs to be able to learn and update. There is a lot of diversity in sign languages across the world. For people unfamiliar with signing, it may come as a surprise that, for example, American Sign Language (ASL) is related to French Sign Language, yet both are entirely different from British Sign Language (BSL). A video of the project is embedded below.

Sign language projects show up fairly regularly in both the hobbyist and academic worlds. We’ve seen puppet-like 3D printed hands used to convert spoken words to ASL, as well as these sensor-covered gloves which aim to convert ASL signs and motions into speech. It’s fascinating to see the progression in technology and approaches taken over time as sensor technology and tools like 3D printing become more advanced.

[via 3DHubs newsroom]

Very nicely done! I released a project years ago for kids to make hands like this from drinking straws and one kid tried to get his to do ASL. While inspired, that wasn’t the right tech. You guys did a wonderful job!

There is just one rather big issue with their approach: it's basically just spelling each word out, letter by letter, so it's not fully utilizing ASL. That would make it very difficult to follow, e.g., a speaker at a conference. Just imagine it: instead of seeing a complete word, you'd only get to see its letters one by one. And I think it wouldn't even be able to sign the letter Z, as it's the only one requiring arm movement.

Proper ASL usage would mainly rely on…let's call them "wordsigns", where one sign stands for a whole word. Only by using those is it possible to translate a spoken talk in real time. The spelling signs are mostly used when there is no sign for a certain word, e.g., names.

Yes, true, but are they far off? It doesn't seem like a big leap to switch to wordsigns where appropriate. The motions of that sample arm look flexible enough to do at least some.

They'd need to build a complete upper torso with a head and two arms, because some signs require combinations of those. From what I remember, even a simple "Thank you" involves interaction between the right hand and the chin.

I believe they wanted to prove the concept before investing all of that time, money and energy into an unproven system. I think now that the one arm is up they will add the second. Then they can add a head and torso to do the more expressive ASL signs.

This hand cannot do a full ASL alphabet. It cannot do R.

I don’t get it.

Why can’t they just have a screen that shows a picture of a hand, or, you know… text?

Probably because this is cool. Also, it can give you a real finger. Well, not really real, but more real than one on screen.

A lot of deaf people can’t read very well.

So you’re saying that someone taught them sign language, but didn’t teach them to read?

Yes. Phonics is difficult if you have never heard speech.

Also, ASL is its own language, only loosely related to English.

Difficult but not impossible, no more so than teaching people to read Chinese; a billion Chinese people do it perfectly well.

Yeah, you can learn language without knowing how to read.

Exhibit A: Children speak, and they don’t know how to read or write! :D

Exhibit B: Koko the gorilla ( https://en.wikipedia.org/wiki/Koko_(gorilla) )

Somebody here proposed making a CGI video, and that's the only alternative to something like this robot, because sign language can't be translated word for word from spoken or written language. Instead, it combines basic words and concepts with signs for place and time, plus additional motions and expressions.

For example (this is the sign language of my country, not ASL, but I don't think there is any major difference), there is no past tense, but you can sign "yesterday" or "before" and then continue with what happened. Giving and getting are the same sign; the exact meaning depends on whether you start with your hands near your body and push them out, or start with your hands far out and pull them in.

The question wasn’t about whether you can, but whether people really teach deaf people to sign but not read. That seems like a huge omission and an outright tragedy.

How are they reading the newspaper? Traffic signs? Closed captions on television?

If it is true that a significant number of deaf people can't read, that is a much more immediate issue.

Some of it is cultural.

Deafness isolates people from the non-deaf, and just like any language barrier you end up with a community that predominantly socialises within itself. Even the schools are separate. English (or whatever the predominant spoken language is) ends up being a second language that you have to learn in school, but isn’t vital for daily use – all their friends are deaf, their teachers are deaf, their parents are not deaf but they learned sign language for their child’s sake. They have deaf-specific sources for news and entertainment. They use just enough English (or whatever) to get by when they have to interact with the non-deaf world, but it’s not something they necessarily do every day. If you don’t use it, you lose it.

>They use just enough English (or whatever) to get by when they have to interact with the non-deaf world, but it’s not something they necessarily do every day

I find that hardly credible.

Don't deaf people watch TV or use the internet? Send text messages, chat online, read books, write emails or just their own diaries? Are you saying they only look at picture books and use Skype, so they could go days without actually reading a full sentence in English?

For them to be so isolated from the surrounding society sounds like bullshit to me.

I understand there are cultural differences, but I am dubious that reading is so rare among the community. There’s a reason closed captions exist, after all.

The original statement was that a lot of deaf people can't read well. It seems commenters are assuming that it was most deaf people…

The point was that it’s a bigger tragedy that a lot of deaf people couldn’t read, than not having a robot hand to perform sign language for them.

And as others have pointed out, the hand isn’t exactly giving them sign language but merely repeating letters, which the deaf people wouldn’t understand anyhow unless they were familiar with reading and the written language, so it’s doubly pointless.

I’m having trouble finding regional statistics, but given the number and content of the articles I’m coming across, I concede that this is a way bigger problem than I imagined.

My experience: I worked as a professor at a local polytechnic and had deaf students. They knew how to read (our language, not English), but their writing was very poor. That wasn't a problem for projects, since they knew how to convey an idea, but it was a problem during exams.

That’s a good question. The answer is mostly that this project is not really trying to solve the problem of how to communicate with Deaf people.

The project is trying to discover two things. Long-term: whether it's possible to replace a signing human (with expressiveness, etc.) with a robot. Short-term: whether 3D printing and other modern techniques can be used to (cheaply) make a robot arm that is capable of signing in the first place. It's still early in the short-term part, but so far the answer seems to be yes.

This could be a very useful text-to-sign app for conferences, meetings and what not.

But I doubt a robot is the best way to do this. I think a CG-hand would be much nicer (cheaper) so you can project it anywhere you like.

I must say: very well done. The robot looks very fast.

Not really, a simple tablet with voice recognition linked to a 3D signing animation would be much easier.

You say “not really”, but if you read their second sentence, you two are in agreement.

I made a robot hand 10 years ago that did American right-handed sign language. Although it only had 10 DOF, it could represent most characters based on keyboard input.

http://www.roboteernat.co.uk/animatronics/robot-hand/

I don’t know where exactly in this discussion to fit this in, but I suspect some people don’t really realize the following: there are individual and cultural and language differences, but the only thing Deaf people are unable to do is hear.

How absolutely inspiring.
