As difficult as it is for a human to learn ambidexterity, it’s quite easy to program into a humanoid robot. After all, a robot doesn’t need to overcome years of muscle memory. Giving a one-handed robot ambidexterity, however, takes some more creativity. [Kelvin Gonzales Amador] managed to do this with his ambidextrous robot hand, capable of signing in either left- or right-handed American Sign Language (ASL).

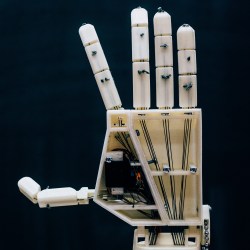

The essential ingredient is a separate servo motor for each joint in the hand, which allows each joint to bend equally well backward and forward. Nothing physically marks one side as the palm or the back of the hand. To change between left and right-handedness, a servo in the wrist simply turns the hand 180 degrees, the fingers flex in the other direction, and the transformation is complete. [Kelvin] demonstrates this in the video below by having the hand sign out the full ASL alphabet in both the right and left-handed configurations.
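
The handedness swap described above can be sketched in a few lines. This is only an illustration, not [Kelvin]'s firmware: the pose layout (a wrist angle plus a list of signed joint angles, with 0 meaning a straight joint) and the `mirror_pose` name are assumptions made for the example.

```python
def mirror_pose(pose):
    """Return the opposite-handed version of a pose.

    `pose` is a hypothetical structure: a wrist angle in degrees plus a
    list of signed joint flex angles, where 0 means the joint is straight.
    """
    return {
        # Turn the whole hand half a revolution at the wrist.
        "wrist": (pose["wrist"] + 180) % 360,
        # Flex every joint the same amount in the opposite direction.
        "joints": [-angle for angle in pose["joints"]],
    }


right_handed = {"wrist": 0, "joints": [30, 45, 0]}
left_handed = mirror_pose(right_handed)
```

Because every joint bends both ways, applying the same transformation twice returns the original pose, so a single table of right-handed letter poses would in principle be enough for both configurations.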

The tradeoff of a fully direct drive is that this takes 23 servo motors in the hand itself, plus a much larger servo for the wrist joint. Twenty small servo motors articulate the fingers, and three larger servos control joints within the hand. An Arduino Mega controls the hand with the aid of two PCA9685 PWM drivers. The physical hand itself is made out of 3D-printed PLA and nylon, painted gold for a more striking appearance.
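
To get a feel for the bookkeeping involved, here is a rough sketch of how 24 servos might be spread across two PCA9685 boards, each of which offers 16 channels of 12-bit PWM. The write-up doesn't say how the servos are actually wired, so the I2C addresses, the 50 Hz refresh rate, the pulse-width range, and the function names are all assumptions for illustration.

```python
CHANNELS_PER_DRIVER = 16       # a PCA9685 has 16 PWM outputs
TICKS_PER_PERIOD = 4096        # 12-bit resolution per PWM period
I2C_ADDRESSES = (0x40, 0x41)   # assumed addresses for the two boards
PWM_FREQ_HZ = 50               # assumed: typical hobby servo refresh rate
MIN_PULSE_US = 500             # assumed pulse width at 0 degrees
MAX_PULSE_US = 2500            # assumed pulse width at full travel


def servo_to_channel(index):
    """Map a servo number (0-based) to an (I2C address, channel) pair."""
    if not 0 <= index < CHANNELS_PER_DRIVER * len(I2C_ADDRESSES):
        raise ValueError(f"servo index {index} out of range")
    board, channel = divmod(index, CHANNELS_PER_DRIVER)
    return I2C_ADDRESSES[board], channel


def angle_to_ticks(angle_deg, travel_deg=180):
    """Convert a servo angle to the PCA9685 'on' duration in ticks."""
    angle = min(max(angle_deg, 0), travel_deg)   # clamp to the travel range
    pulse_us = MIN_PULSE_US + (MAX_PULSE_US - MIN_PULSE_US) * angle / travel_deg
    period_us = 1_000_000 / PWM_FREQ_HZ
    return round(pulse_us / period_us * TICKS_PER_PERIOD)


address, channel = servo_to_channel(23)   # the last of the 24 servos
ticks = angle_to_ticks(90)                # mid-travel position
```

Two boards give 32 channels in total, which leaves a little headroom beyond the 24 servos even if the wrist servo shares a driver with the rest.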

This isn’t the first language-signing robot hand we’ve seen, though it does forgo the second hand. To make this perhaps one of the least efficient machine-to-machine communication protocols, you could also equip it with a sign language translation glove.

sign language: 14 Articles

The Robot That Lends The Deaf-Blind Community A Hand

The loss of one’s sense of hearing or vision is likely to be devastating in the way that it impacts daily life. Fortunately many workarounds exist using one’s remaining senses — such as sign language — but what if not only your sense of hearing is gone, but you are also blind? Fortunately here, too, a workaround exists in the form of tactile signing, which is akin to visual sign language, except that it uses one’s sense of touch. This generally requires someone who knows tactile sign language to translate from spoken or written forms to tactile signaling. Yet what if you’re deaf-blind and without human assistance? This is where a new robotic system could conceivably fill in.

Developed by Tatum Robotics, the Tatum T1 is a robotic hand and associated software that’s intended to provide this translation function. It takes in natural language information, whether spoken, written or in some digital format, and uses a number of translation steps to create tactile sign language as output, whether it’s the ASL format, the BANZSL alphabet or another. These tactile signs are then expressed using the robotic hand, and a connected arm as needed, ideally using ASL gloss to convey as much information as quickly as possible, not unlike with visual ASL.

This also answers the question of why one would not just use a simple braille cell on a hand, as the signing speed is essential to keep up with real-time communications, unlike when, say, reading a book or email. A robotic companion like this could provide deaf-blind individuals with a critical bridge to the world around them. Currently the Tatum T1 is still in the testing phase, but hopefully before long it may be another tool for the tens of thousands of deaf-blind people in the US today.

Sensor Glove Translates Sign Language

Sign language is a language that uses the position and motion of the hands in place of sounds made by the vocal tract. If one could readily capture those hand positions and movements, one could theoretically digitize and translate that language. [ayooluwa98] built a set of sensor gloves to do just that.

The brains of the operation is an Arduino Nano. It’s hooked up to a series of flex sensors woven into the gloves, along with an accelerometer. The flex sensors detect the bending of the fingers and the gestures being made, while the accelerometer captures the movements of the hand. The Arduino then interprets these sensor signals in order to match the user’s movements up with a pre-stored list of valid signs. It can then transmit out the detected language via a Bluetooth module, where it is passed to an Android phone for translation via text-to-speech software.
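
Matching a reading against a pre-stored list of signs could look something like the nearest-template sketch below. The project's actual matching logic isn't described, so treat this as one plausible approach: the template values, the eight-element reading layout (five normalized flex values plus three accelerometer axes in g), and the distance threshold are all made up for illustration.

```python
import math

# Hypothetical templates: five flex values (0 = straight, 1 = fully bent)
# followed by accelerometer x, y, z in g. The numbers are illustrative only.
SIGN_TEMPLATES = {
    "A": (0.1, 0.9, 0.9, 0.9, 0.9, 0.0, 0.0, 1.0),
    "B": (0.8, 0.1, 0.1, 0.1, 0.1, 0.0, 0.0, 1.0),
}


def match_sign(reading, templates=SIGN_TEMPLATES, max_distance=0.5):
    """Return the label of the closest template, or None if nothing is close."""
    best_label, best_distance = None, float("inf")
    for label, template in templates.items():
        distance = math.dist(reading, template)   # Euclidean distance
        if distance < best_distance:
            best_label, best_distance = label, distance
    # Reject readings that don't resemble any stored sign.
    return best_label if best_distance <= max_distance else None


reading = (0.15, 0.85, 0.9, 0.95, 0.9, 0.0, 0.1, 0.95)
detected = match_sign(reading)
```

The rejection threshold matters in practice: without it, every stray hand position between signs would be reported as whichever letter happens to be closest.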

The idea of capturing sign language via hand tracking is a compelling one; we’ve seen similar projects before, too. Meanwhile, if you’re working on your own accessibility projects, be sure to drop us a line!

Learn Sign Language Using Machine Vision

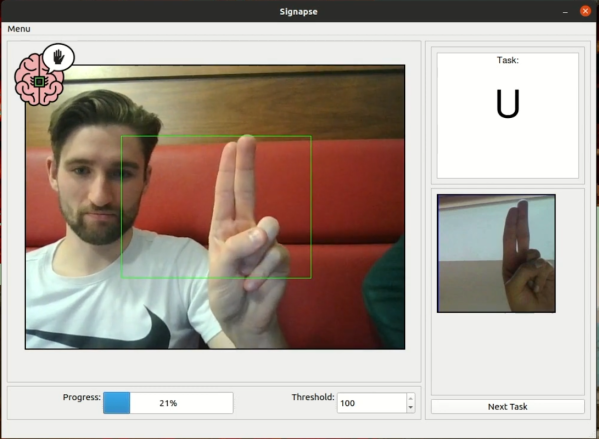

Learning a new language is a great way to exercise the mind and learn about different cultures, and it’s great to have a native speaker around to improve the learning experience. Without one, it’s still possible to learn via videos, books, and software. The task does get much more complicated when trying to learn a language that isn’t spoken, though, like American Sign Language. This project allows users to learn the ASL alphabet with the help of computer vision and some machine learning algorithms.

The build uses a MobileNetV2 computer vision model trained to recognize each sign in the ASL alphabet. A sign is shown to the user on a screen, and the user needs to demonstrate the sign to the computer in order to progress. To do this, OpenCV running on a Raspberry Pi with a PiCamera analyzes frames of the user in real time. The user is shown pictures of the correct sign, and is rewarded when the correct sign is made.
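
The "show a sign, check the attempt" step can be sketched independently of the model itself. The example below assumes the classifier returns one score per letter in alphabetical order and that an attempt only counts above a confidence threshold. The label set, the threshold, and the function names are assumptions rather than details taken from the project.

```python
import string

# Assumed label order: one class per letter, A through Z.
LABELS = list(string.ascii_uppercase)


def top_prediction(scores):
    """Return the (label, score) pair with the highest classifier score."""
    index = max(range(len(scores)), key=scores.__getitem__)
    return LABELS[index], scores[index]


def attempt_is_correct(scores, target, min_confidence=0.8):
    """Accept an attempt only if the top class is the target and is confident."""
    label, confidence = top_prediction(scores)
    return label == target and confidence >= min_confidence


# Stand-in for one frame's model output: strongly predicts "C".
scores = [0.0] * len(LABELS)
scores[LABELS.index("C")] = 0.9
passed = attempt_is_correct(scores, "C")
```

Requiring a minimum confidence keeps a half-formed sign from being waved through just because it scored marginally higher than the alternatives.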

While this only works for alphabet signs in ASL currently, the team at the University of Glasgow that built this project is planning on expanding it to include other signs as well. We have seen other machines built to teach ASL in the past, like this one which relies on a specialized glove rather than computer vision.

Adding Brakes To Actuated Fingers

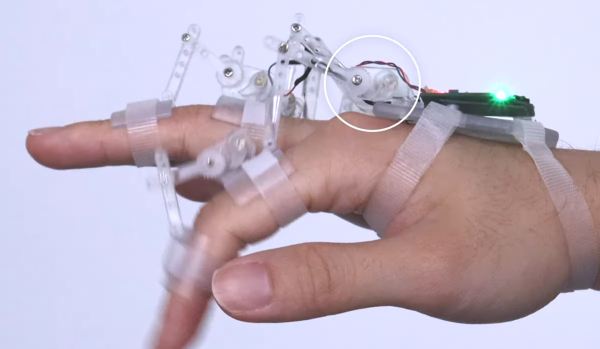

Building exoskeletons for people is a rapidly growing branch of robotics. Whether it’s improving the natural abilities of humans with added strength or helping those with disabilities, the field has plenty of room for new inventions for the augmentation of humans. One of the latest comes to us from a team out of the University of Chicago who recently demonstrated a method of adding brakes to a robotic glove which gives impressive digital control (PDF warning).

The robotic glove is known as DextrEMS but doesn’t actually move the fingers itself. That is handled by a series of electrodes on the forearm which stimulate the finger muscles using Electrical Muscle Stimulation (EMS), hence the name. The problem with EMS for manipulating fingers is that the precision isn’t that great and it tends to cause oscillations. That’s where the glove comes in: each finger includes a series of ratcheting mechanisms that act as brakes which can position the fingers precisely enough to make intelligible signs in sign language or even play a guitar or piano.

For anyone interested in robotics or exoskeletons, the white paper is worth a read. Adding this level of precision to an exoskeleton that manipulates something as small as the fingers opens up a brave new world of robotics, but if you’re looking for something that operates on the scale of an entire human body, take a look at this full-size strength-multiplying exoskeleton that can help you lift superhuman amounts of weight.

This Machine Teaches Sign Language

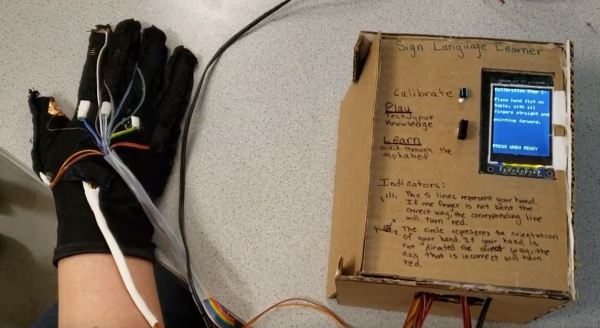

Sign language can, like any language, be difficult to learn if you’re not immersed in it, or at least learning from someone who is fluent. It’s not easy to know when you’re making minor mistakes or missing nuances. It’s a medium with its own unique issues when learning, so if you want to learn and don’t have access to someone who knows the language you might want to reach for the next best thing: a machine that can teach you.

This project comes from three of [Bruce Land]’s senior electrical and computer engineering students, [Alicia], [Raul], and [Kerry], as part of their final design class at Cornell University. Someone who wishes to learn the sign language alphabet slips on a glove outfitted with position sensors for each finger. A computer inside the device shows each letter’s proper sign on a screen, and then checks the sensors from the glove to ensure that the hand is in the proper position. Two letters include making a gesture as well, and the device is able to track this by use of a gyroscope and compass to ensure that the letter has been properly signed. It appears to only cover the alphabet and not a wider vocabulary, but as a proof of concept it is very effective.
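
A check like the one described might boil down to a per-finger tolerance test plus a motion requirement for the two moving letters, which in the ASL alphabet are J and Z. The sketch below is not the students' code: the target values, the normalized 0-to-1 flex scale, the tolerance, and the `motion_detected` flag standing in for the gyroscope and compass logic are all assumptions.

```python
MOTION_LETTERS = {"J", "Z"}   # the two ASL letters that include a movement

# Hypothetical per-finger targets (thumb to pinky), 0 = straight, 1 = bent.
TARGETS = {
    "I": (1.0, 1.0, 1.0, 1.0, 0.0),
    "J": (1.0, 1.0, 1.0, 1.0, 0.0),   # same handshape as I, plus a motion
}


def letter_is_correct(letter, readings, motion_detected, tolerance=0.15):
    """Check finger positions, and the gesture for letters that need one."""
    target = TARGETS[letter]
    if len(readings) != len(target):
        raise ValueError("expected one reading per finger")
    fingers_ok = all(abs(r - t) <= tolerance for r, t in zip(readings, target))
    if letter in MOTION_LETTERS:
        return fingers_ok and motion_detected
    return fingers_ok


readings = (0.95, 0.9, 1.0, 0.9, 0.1)
static_ok = letter_is_correct("I", readings, motion_detected=False)
moving_ok = letter_is_correct("J", readings, motion_detected=True)
```

Since I and J share a handshape, the motion flag is the only thing that tells them apart here, which is presumably why the extra sensors are needed at all.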

The students show that it is entirely possible to learn the alphabet reliably using the machine as a teaching tool. This type of technology could be useful for other applications as well, such as gesture recognition for a human interface device. If you want to see more of these interesting and well-referenced senior design builds, we’ve featured quite a few, from polygraph machines to a sonar system for a bicycle.

3D Printed Robotic Arms For Sign Language

A team of students in Antwerp, Belgium are responsible for Project Aslan, which is exploring the feasibility of using 3D printed robotic arms for assisting with and translating sign language. The idea came from the fact that sign language translators are few and far between, and it’s a task that robots may be able to help with. In addition to translation, robots may be able to assist with teaching sign language as well.

The project set out to use 3D printing and other technology to explore whether low-cost robotic signing could be of any use. So far the team has an arm that can convert text into finger spelling and counting. It’s an interesting use for a robotic arm; signing is an application for which range of motion is important, but there is no real need to carry or move any payloads whatsoever.
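
Turning text into a sequence of hand poses is conceptually simple, as the sketch below suggests. It only covers the front end: it splits a string into letter and digit signs with pauses between words, and leaves the actual servo poses out. The tuple format and the function name are invented for this example and aren't taken from Project Aslan.

```python
def to_fingerspelling(text):
    """Break text into ("letter", X), ("number", n), and ("pause", None) steps."""
    steps = []
    for ch in text.upper():
        if ch.isascii() and ch.isalpha():
            steps.append(("letter", ch))
        elif ch.isascii() and ch.isdigit():
            steps.append(("number", int(ch)))
        elif ch.isspace():
            # Collapse runs of whitespace into a single pause between words.
            if steps and steps[-1] != ("pause", None):
                steps.append(("pause", None))
        # Anything else (punctuation, accents) is skipped in this sketch.
    return steps


sequence = to_fingerspelling("Hi 2")
```

Each step would then be looked up in a table of joint angles for the sign language in question, which is where the real work of the project lies.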

A single articulated hand is a good proof of concept, and these early results show some promise and potential, but there is still a long way to go. Sign language involves more than just hands. It is performed using both hands, arms and shoulders, and incorporates motions and facial expressions. Also, the majority of sign language is not finger spelling (reserved primarily for proper names or specific nouns), but a robot hand that is able to finger spell is an important first step to everything else.

Future directions for the project include adding a second arm, adding expressiveness, and exploring the use of cameras for the teaching of new signs. The ability to teach different signs is important, because any project that aims to act as a translator or facilitator needs the ability to learn and update. There is a lot of diversity in sign languages across the world. For people unfamiliar with signing, it may come as a surprise that American Sign Language (ASL) is related to French Sign Language, while both are entirely different from British Sign Language (BSL). A video of the project is embedded below.
