Hackers enjoy a good theme, and so it comes as no surprise that every time March 14th (Pi Day) rolls around, the tip line sees an uptick in mathematical activity. Whether it’s something they personally did or some other person’s project they want to bring to our attention, a lot of folks out there are very excited about numbers today.

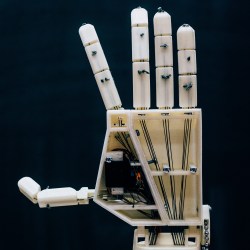

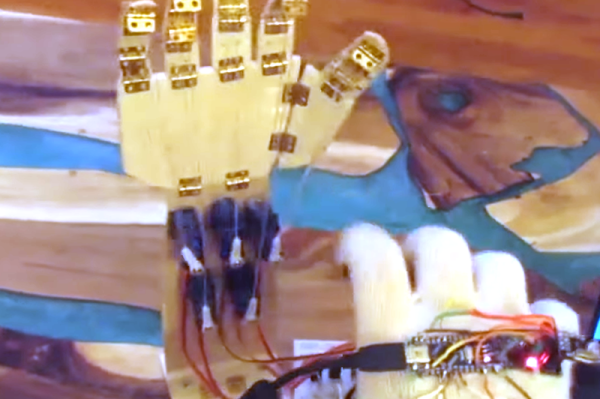

One of our most prolific circumference aficionados is [Cristiano Monteiro], who, for the last several years, has put together a special project to commemorate the date. For 2025, he’s come up with a robotic hand that will use its fingers to show the digits of Pi one at a time. Since there’s only one hand, anything higher than five will be displayed as two gestures in quick succession, necessitating a bit of addition on the viewer’s part.

[Cristiano] makes no claims about the anatomical accuracy of his creation. Indeed, if your mitts look anything like this, you should seek medical attention immediately. But whether you think of them as fingers or nightmarish claws, it’s the motion of the individual digits that matters.

To that end, each one is attached to an MG90 servo, driven by an Arduino Nano with an attached Servo Shield. From there, it’s just a matter of code to get the digits wiggling out the correct value, which [Cristiano] has kindly shared for anyone looking to recreate this project.
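For a rough idea of the logic involved, here is a minimal Python sketch of how a digit might be broken into one or two hand gestures. To be clear, this is not [Cristiano]’s code: the function names are made up for illustration, and splitting larger digits into a full hand of five plus the remainder is our assumption about how the two gestures are chosen, as is showing zero as a closed fist.

```python
# Illustrative sketch only, not the project's actual firmware.
# Assumption: digits above five are shown as 5 followed by (digit - 5).
# Assumption: zero is shown as a single gesture with no fingers raised.

PI_DIGITS = "31415926535897932384"  # first 20 digits of pi, decimal point omitted


def gestures_for_digit(digit: int) -> list[int]:
    """Return the finger counts to show, in order, for one decimal digit."""
    if not 0 <= digit <= 9:
        raise ValueError("digit must be between 0 and 9")
    if digit <= 5:
        return [digit]          # fits on one hand
    return [5, digit - 5]       # two gestures the viewer adds together


def gesture_sequence(digits: str) -> list[list[int]]:
    """Map a string of decimal digits to a list of gesture groups."""
    return [gestures_for_digit(int(ch)) for ch in digits]


if __name__ == "__main__":
    for ch, group in zip(PI_DIGITS, gesture_sequence(PI_DIGITS)):
        print(ch, "->", group)
```

With this scheme a 9 comes out as `[5, 4]`, while a 3 is just `[3]`. On the real hardware, each finger count would then need to be translated into positions for the five servos.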

If you’re hungry for more Pi, the ghostly display that [Cristiano] sent in last year is definitely worth another look. While not directly related to today’s mathematical festivities, the portable GPS time server he put together back in 2021 is another fantastic build you should check out.

A team of students in Antwerp, Belgium are responsible for