Some things don’t sound like they should go together, but they do. Peanut butter and chocolate. Twinkies and deep frying. Bacon and maple syrup. Sometimes mixing things up can produce great results. [Dr. Christal Gordon’s] expertise falls into that category. She’s an electrical engineer, but she also studies neuroscience. This can lead to some interesting intellectual Reese’s peanut butter cups.

At the 2017 Hackaday Superconference, [Christal] spoke about sensor fusion. If you've built systems with multiple sensors, you've probably run into it before, even if you didn't call it that. However, [Christal] brings a perspective on how biological systems fuse sensor data, contrasted with how electronic systems perform similar tasks. You can watch a replay of her talk in the video below.

The precise definition of sensor fusion is taking data from multiple sensors to reduce uncertainty about measurements. For example, an autonomous car might want to measure its position relative to other objects to navigate and avoid collisions. GPS will give you a pretty good idea of where you are. Inertial methods can then refine your position within the GPS margin of uncertainty, although they tend to accumulate errors over longer time periods. By using both sources of data, it is possible to get a better idea of position than by using either one individually.
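One simple way to see why two sources beat one is inverse-variance weighting. This is a static special case of the idea, not something taken from the talk. The sketch below assumes both position estimates are unbiased, have independent errors, and come with known variances; the function name `fuse` and the example numbers are hypothetical.

```python
def fuse(est_a: float, var_a: float, est_b: float, var_b: float) -> tuple[float, float]:
    """Inverse-variance weighted fusion of two independent, unbiased estimates.

    Assumption: each estimate's error variance is known. The more certain
    estimate (smaller variance) gets proportionally more weight.
    """
    w_a = 1.0 / var_a
    w_b = 1.0 / var_b
    fused_var = 1.0 / (w_a + w_b)  # always smaller than either input variance
    fused_est = fused_var * (w_a * est_a + w_b * est_b)
    return fused_est, fused_var


if __name__ == "__main__":
    # Hypothetical readings: GPS says 100 m (sigma 5 m), dead reckoning says 104 m (sigma 2 m).
    est, var = fuse(100.0, 25.0, 104.0, 4.0)
    print(f"fused = {est:.2f} m, variance = {var:.2f} m^2")  # fused = 103.45 m, variance = 3.45 m^2
```

The fused variance is lower than that of either sensor on its own. Fixed weights like these don't capture inertial drift, though, since an inertial estimate's variance grows over time; tracking how uncertainty evolves from step to step is what filters such as the Kalman filter add.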

Another source of data might be a LIDAR or ultrasonic range finder. Fusion correlates all this data to develop a better operating picture of the environment. Navigation isn’t the only application, of course. [Christal] mentions several others, including fusing data to allow robotic legs to run on a treadmill.

One very important sensor fusion tool that [Christal] mentions is the Kalman filter. This algorithm takes multiple noisy sensor inputs with varying degrees of certainty and arrives at an estimate that is more precise than any of those inputs alone.
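A minimal one-dimensional sketch of a Kalman filter might look like the following. It assumes an inertial sensor reports a displacement each step (the predict phase) and a GPS-like sensor reports an absolute position (the update phase), both with known, constant noise variances. The class `Kalman1D` and the parameters `q` and `r` are hypothetical names chosen for illustration; practical navigation filters track multidimensional state with matrices.

```python
from dataclasses import dataclass


@dataclass
class Kalman1D:
    x: float  # current position estimate
    p: float  # variance of that estimate
    q: float  # process noise added per predict step (assumed: inertial drift)
    r: float  # measurement noise variance (assumed: GPS error)

    def predict(self, u: float) -> None:
        """Dead-reckoning step: move by displacement u; uncertainty grows."""
        self.x += u
        self.p += self.q

    def update(self, z: float) -> None:
        """Correct the estimate with a position measurement z; uncertainty shrinks."""
        k = self.p / (self.p + self.r)  # Kalman gain: how much to trust z
        self.x += k * (z - self.x)
        self.p *= 1.0 - k


if __name__ == "__main__":
    # Hypothetical data: the vehicle moves about 1 m per step; GPS readings are noisy.
    kf = Kalman1D(x=0.0, p=1.0, q=0.5, r=4.0)
    for u, z in zip([1.0, 1.0, 1.0], [1.2, 1.9, 3.3]):
        kf.predict(u)
        kf.update(z)
        print(f"x = {kf.x:.3f}, variance = {kf.p:.3f}")
```

The gain `k` is the design choice that does the fusing: when the prediction is uncertain relative to the measurement (`p` large compared with `r`), the filter leans on the measurement, and vice versa.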

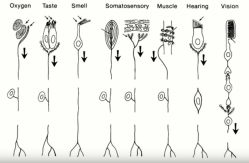

It makes sense that we would look to biological systems for inspiration for sensor fusion. After all, the best fusing system we know of is the human brain. Your brain processes data from a dizzying array of sensors and allows you to make sense of your world. It isn't perfect, but it works pretty well most of the time. Think about the navigation ability of, say, a migratory bird. Now think about the size and weight of that bird. Then realize the bird is self-fueling and can, with a little help, produce more birds. Pretty amazing. Our robots are lucky if they can navigate that well, and they probably can't refuel or rebuild themselves. They are probably much bigger and heavier, too.

There’s only so much you can cover in 40 minutes, but [Christal] will make you think about how our systems resemble biological systems and the ways we can combine data from multiple sources to get better sensor data in machines.

One of the great things about Superconference is being exposed to new ideas from people who have very different perspectives. [Christal’s] talk is a great example, and thanks to the magic of the Internet, you can watch it in your own living room.

Sensor fusion for a better shopping bot.

“Pretty amazing. Our robots are lucky if they can navigate that well and they probably don’t refuel and rebuild plus they probably are much bigger and heavier.”

A terminator crossed with an autobot.

Thanks for the write-ups on the amazing talks at HaD Super Con. It really motivates me to attend the next one.

Glad to hear you’re enjoying them. There were so many great talks I didn’t get to see. I’m enjoying watching them as we work through the coverage. Much more to come!