What if Google Glass didn’t have a battery? That’s not too far-fetched. This battery-free HD video streaming camera could be built into a pair of eyeglass frames to stream HD video to a nearby phone or other receiver using no bulky batteries or external power source. Researchers at the University of Washington are using backscatter to pull this off.

The problem is that a camera which streams HD video wirelessly to a receiver consumes over 1 watt, due to the need for a digital processor and transmitter. The researchers moved the processing hardware into the receiving unit and send the analog pixels from the camera sensor directly to backscatter hardware. Backscatter involves reflecting received waves back to where they came from. By modulating those reflected waves with the video signal, they eliminated the need for the power-hungry transmitter. The full details are in their paper (PDF), but here are the highlights.

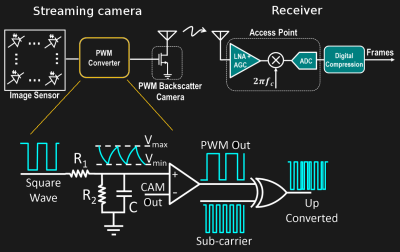

On the camera side, the pixel voltages (CAM Out) form an analog signal that is fed into a comparator along with a triangular waveform. Wherever the triangle wave’s voltage is lower than the pixel voltage, the comparator outputs a 0; otherwise, it outputs a 1. In this way, each pixel voltage is converted to a different pulse width. The triangular waveform’s minimum and maximum voltages are chosen to cover the full range of possible camera voltages.
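The comparator stage can be sketched in a few lines of Python. This is an idealized model, not the researchers’ code: it assumes pixel voltages normalized to 0.0 to 1.0, exactly one triangle period per pixel, and a hypothetical `samples_per_pixel` time resolution. The `pwm_decode` helper is likewise hypothetical; it only shows that, because the triangle ramps linearly, the fraction of each period spent at 0 tracks the pixel voltage.

```python
def triangle(t: float) -> float:
    """Symmetric triangle wave with period 1.0, rising from 0.0 to 1.0 and back."""
    phase = t % 1.0
    return 2.0 * phase if phase < 0.5 else 2.0 * (1.0 - phase)


def pwm_encode(pixels, samples_per_pixel=100, v_min=0.0, v_max=1.0):
    """Idealized comparator: output 0 while the triangle is below the pixel voltage, else 1.

    v_min/v_max are the triangle's limits, chosen to span the camera's voltage range.
    """
    out = []
    for v in pixels:
        for k in range(samples_per_pixel):
            t = (k + 0.5) / samples_per_pixel  # sample mid-points of one triangle period
            tri = v_min + (v_max - v_min) * triangle(t)
            out.append(0 if tri < v else 1)
    return out


def pwm_decode(bits, samples_per_pixel=100, v_min=0.0, v_max=1.0):
    """Hypothetical receiver-side helper: pixel voltage ~ fraction of the period spent at 0."""
    pixels = []
    for i in range(0, len(bits), samples_per_pixel):
        chunk = bits[i:i + samples_per_pixel]
        pixels.append(v_min + (v_max - v_min) * chunk.count(0) / len(chunk))
    return pixels


if __name__ == "__main__":
    pixels = [0.0, 0.25, 0.5, 0.8, 1.0]
    print(pwm_decode(pwm_encode(pixels)))
```

The decoded values come back within a couple of samples of the originals; in this sampled model the finite `samples_per_pixel` limits the precision, whereas the real comparator works in continuous time.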

The sub-carrier modulation with the XOR gate in the diagram is there to address the problem of self-interference: unwanted interference from the transmitter’s own carrier, which is at the same frequency as the backscattered signal. XORing the PWM output with a sub-carrier shifts it to a different frequency, so the receiver can then filter out the interference. The XOR gate is actually part of an FPGA, which also inserts frame and line synchronization patterns.
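Why an XOR gate moves the signal in frequency can be checked numerically. If the 0/1 levels are mapped to -1/+1, XOR becomes multiplication (up to a sign), which is exactly mixing. The sketch below is a simplification under stated assumptions: a slow square wave stands in for the PWM stream, the sub-carrier is a fast square wave, the leaked transmitter carrier is assumed to land near DC at the receiver’s baseband, and the bin numbers are arbitrary.

```python
import cmath


def square_wave(n_samples, period):
    """0/1 square wave with `period` samples per cycle (use an even period)."""
    return [1 if (i % period) < period // 2 else 0 for i in range(n_samples)]


def xor_mix(a, b):
    """What the XOR gate does: combine two 0/1 streams sample by sample."""
    return [x ^ y for x, y in zip(a, b)]


def bin_power(signal, k):
    """Power in DFT bin k after mapping the 0/1 levels to -1/+1."""
    n = len(signal)
    acc = sum((2 * x - 1) * cmath.exp(-2j * cmath.pi * k * i / n)
              for i, x in enumerate(signal))
    return abs(acc) ** 2 / n


if __name__ == "__main__":
    N = 256
    pwm = square_wave(N, 64)   # stand-in for a slow PWM stream (energy near bin 4)
    sub = square_wave(N, 4)    # fast sub-carrier (fundamental at bin 64)
    mixed = xor_mix(pwm, sub)
    print("near DC before:", round(bin_power(pwm, 4), 1))
    print("near DC after: ", round(bin_power(mixed, 4), 1))
    print("at 64 + 4 after:", round(bin_power(mixed, 68), 1))
    # XOR with the same sub-carrier undoes the shift at the receiver.
    print(xor_mix(mixed, sub) == pwm)
```

After mixing, almost nothing is left near DC and the energy sits next to the sub-carrier, which is what lets a filter separate the video from the self-interference.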

They tested two implementations of this circuit design: a 112 x 112 grayscale one at up to 13 frames per second (fps), and an HD one. Unfortunately, no HD camera on the market gives access to the raw analog pixel outputs, so they streamed HD video from a laptop over USB, ran it through a DAC, and then into their PWM converter. The USB limited it to 10 fps.

The result is that video streaming at 720p and 10 fps uses as little as 250 μW and can be backscattered up to sixteen feet. They also simulated an ASIC that achieved 720p and 1080p at 60 fps using 321 μW and 806 μW, respectively. See the video below for an animated explanation and a demonstration. The resulting video is quite impressive for something running on passive power alone.
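A quick back-of-the-envelope check puts those power figures in per-pixel terms. It assumes the usual frame sizes (1280 × 720 for 720p, 1920 × 1080 for 1080p) and ignores blanking and sync overhead; the energy-per-pixel framing is ours, not the paper’s.

```python
RES = {"720p": (1280, 720), "1080p": (1920, 1080)}


def energy_per_pixel_pj(power_w, res, fps):
    """Average energy per transmitted pixel in picojoules (ignores blanking/sync overhead)."""
    width, height = RES[res]
    pixels_per_second = width * height * fps
    return power_w / pixels_per_second * 1e12


if __name__ == "__main__":
    cases = [
        ("prototype, 720p @ 10 fps", 250e-6, "720p", 10),
        ("simulated ASIC, 720p @ 60 fps", 321e-6, "720p", 60),
        ("simulated ASIC, 1080p @ 60 fps", 806e-6, "1080p", 60),
    ]
    for label, watts, res, fps in cases:
        print(f"{label}: {energy_per_pixel_pj(watts, res, fps):.1f} pJ/pixel")
```

This prints about 27.1, 5.8 and 6.5 pJ per pixel, so the simulated ASIC figures are several times more efficient per pixel than the prototype.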

If the University of Washington seems familiar in the context of backscatter, that’s because we’ve previously covered their battery-free (almost) cell phone. They’re not the only ones experimenting with it, though. Here’s where backscatter is being used for a soil network. All of this involves power harvesting, and now’s a great time to start brushing up on these concepts and building your own prototypes: the Hackaday Prize includes a Power Harvesting Challenge this year.

Sweet.

“Backscatter involves reflecting received waves back to where they came from.”

Darn. There goes my tinfoil hat.

B^)

You mean your thoughts can no longer be projected to others?

You can use backscatter to redirect evil waves back to HAARP and the Illuminati!

I tried that once, but I wired it up wrong and the Illuminati waves ended up being reflected back to HAARP. Now I have an infinite social security number and my brain grows on the outside of my skull. Bloody USB “C” cables.

This paper is borderline scam. Read it for some laughs: they invent H.264-level video compression in order for this thing to work, except this compression works by not sending data at all :) All of their demos are fake, oh, I’m sorry, “simulated”.

“no HD camera on the market gives access to the raw analog pixel outputs”

Uhh?

Given that you obviously know of at least one such camera please tell us the make and model.

Or is it just that you can’t understand what that means? Raw analog – unprocessed signals from the analog light sensors, it’s in the paper.

How analog are we talking about? Analog values can’t really be stored digitally as-is; you have to approximate them somehow to be able to store the waveform. It’s sort of like an MP3 versus a WAV file: the MP3 discards tones humans typically cannot hear, while the WAV file is still a sampled digital file, so the analogy has its limits. Many CMOS sensors do the ADC on-chip now because it’s just easier and cheaper to build that way, but CCDs typically don’t share the same architecture.
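The point that a digital copy can only approximate an analog value is just quantization. A minimal sketch of an ideal uniform N-bit quantizer, assuming a normalized 0.0 to 1.0 input range (real ADCs add noise, offset and nonlinearity on top of this):

```python
def quantize(v, bits, v_min=0.0, v_max=1.0):
    """Ideal uniform ADC: return (integer code, reconstructed voltage)."""
    levels = 2 ** bits
    step = (v_max - v_min) / (levels - 1)    # voltage per code (one LSB)
    code = round((v - v_min) / step)
    code = max(0, min(levels - 1, code))     # clip out-of-range inputs
    return code, v_min + code * step


if __name__ == "__main__":
    for bits in (8, 12, 14):
        step = 1.0 / (2 ** bits - 1)
        worst = max(abs(v - quantize(v, bits)[1]) for v in (i / 10000 for i in range(10001)))
        print(f"{bits}-bit: {2 ** bits} levels, worst error {worst:.2e}, half an LSB is {step / 2:.2e}")
```

For in-range inputs the error never exceeds half a step, and each extra bit halves that step; the 8, 12 and 14 bit depths are the ones mentioned in the NEF quote in this thread.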

Have you looked into actively cooled CCDs with off-chip ADCs? Many of them offer a direct path to the raw analog data, though they are somewhat specialized chips.

I’m not entirely sure how direct this needs to be here.

Pulling raw NEF or RAW data isn’t good enough? Does one need to go even closer to the sensor than that here? My assumption is yes, but I haven’t explored the goals of this project in great detail yet.

“The primary benefit of writing images to the memory card in NEF format rather than TIFF or JPEG is that no in-camera processing for white balance, hue, tone and sharpening are applied to the NEF file; rather, those values are retained as instruction sets included in the file. You can change the instruction set as many times as you like without ever disturbing the original image’s RAW data. Another benefit of the NEF file is that depending on the camera, it retains 12-bit or 14-bit data, resulting in an image with a far greater tonal range than an eight-bit JPEG or TIFF file.”

You misquoted. The real quote is:

“no HD camera currently provides access to its raw pixel voltages”

which is something different.

This is followed by:

“we connect the output of a DAC converter to our backscatter hardware to emulate the analog camera and stream raw analog video voltages to our backscatter prototype”

So I conclude that the transmission hardware isn’t emulated.

Excuse me, I meant you quoted a misquote from the HaD writer.

I see your point. I guess I could have been clearer that I was referring to the signals from the light sensor.

“The USB limited it to 10 fps.”

USB 3.0 / USB 3.1 Gen 1 is good for 5 Gbps.

USB 3.1 Gen 2 is good for 10 Gbps.

They couldn’t manage more than 10 fps because USB was a limitation? This needs a bit more elaboration, because it seems unlikely that USB bandwidth is an issue, or if it is, it’s only because they are using an older USB version. I am curious why that would be the case, though. I mean, 10 fps is better than 1 or 2, and if it is not an inherent limitation, that could be promising. Still a bit skeptical about some of the explanations here, though.

The issue is in regards to analog vs. digital. Consider that the switch from analog to digital television allowed the introduction of “sub channels”, because the digital channels took up less bandwidth than the old analog ones did.

They were “emulating” what an HD image sensor’s analog output would be by sending a simulated raw analog signal over USB in the form of a rapid stream of digital packets, which a DAC then converted to an actual analog voltage. That means they were transmitting the video stream’s actual analog waveform… That’s a MASSIVE amount of data. That’s why it saturated the USB link.

That’s also why, when they mentioned using an FPGA to do the job, they got proper resolutions and bandwidth: they were able to create a custom solution that could handle the massive data rates needed to feed the DAC in real time.

There’s a reason that displaying video used to take a dedicated VGA or DVI connector and was not possible over USB at all. It would simply saturate the bus and not work. But fairly recent developments in USB 3, DisplayPort and Thunderbolt 3 allow even multiple 4K images at 60 Hz to be sent. It’s a pretty huge upgrade.

https://en.wikipedia.org/wiki/Thunderbolt_(interface)#Thunderbolt_3

“Thunderbolt 3 was developed by Intel and uses USB-C connectors. It is the first generation to support USB. Compared to Thunderbolt 2, Intel’s Thunderbolt 3 controller (codenamed Alpine Ridge) doubles the bandwidth to 40 Gbit/s (5 GB/s), halves power consumption, and simultaneously drives two external 4K displays at 60 Hz (or a single external 4K display at 120 Hz, or a 5K display at 60 Hz).”

The issue being talked about here is not analog versus digital, because we are not talking about an analog signal. You cannot send an analog signal over USB. USB by definition is a serial digital bus, so it’s just plain wrong to say you are sending a “raw analog” signal over USB. You can’t do it. Incorrect terminology is being used, and it’s causing confusion. What I think is really being talked about is uncompressed versus compressed video. However, make no mistake, it’s still digital we are talking about. And as for the comment about how digital TV broadcasting enabled more channels compared to analog: that’s only the result of compression. Without compression, digital video actually requires FAR MORE BANDWIDTH than analog. It’s the ability to use compression that gives digital the advantage.

So, the question here is why don’t they utilize some form of compression? There are different types of compression and some of them, like MJPEG, would be more suitable for applications like this.

Because video playback from a laptop is not the point of the article.

They had an idea, and in order to build and test a solution they needed a camera…

… a camera that does not exist at a consumer price point. I’m sure an HD camera that outputs analog exists, but it’ll probably set you back thousands of dollars. Besides, have YOU ever spent THAT much money to investigate a new idea of yours that you’re not sure will work???

Remember: the idea is BACKSCATTER of VIDEO (and video is analog before it is digitized), not backscatter of digital data. THAT ONE IS EASY AND WAS DONE LONG AGO; IT’S CALLED RFID TAGS (and can be made to work at very high speeds).

With a digital transmission, you’re sending a data stream that might represent RGB values as three numbers. What they are doing is creating an ACTUAL analog waveform (using a Digital to Analog converter) by feeding the DAC with a “pseudo analog” waveform, by way of a stream of digital values representing the actual waveform…

While sending RGB values would require only three digital values per pixel, sending the pseudo analog signal requires a stream of values representing the actual waveform that an analog source would be expected to generate.

Arguing over technical semantics just comes off as pompous. You know what I meant.

Quote from the paper:

“A low resolution 144p video recorded at 10 fps requires an ADC sampling at 368 KSPS to generate uncompressed data at 2.95 Mbps.

With the latest advancements in ADCs and backscatter communication, uncompressed data can be digitally recorded using low-power ADCs and transmitted using digital backscatter while consuming below 100 µW of power, which is within the power budget of energy harvesting platforms.

However, as we scale the resolution to HD quality and higher, such as 1080p and 1440p, the ADC sampling rate increases to 10-100 MHz, and uncompressed data is generated at 100 Mbps to Gbps.

ADCs operating at such high sampling rates consume at least a few mW. Further, a compression block that operates in real time on 100 Mbps to 1 Gbps of uncompressed video consumes up to one Watt of power.

This power budget exceeds by orders of magnitude that available on harvesting platforms”
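The paper’s numbers follow from treating each pixel as one ADC sample. A quick sketch, assuming 144p means 256 × 144 and 8 bits per sample (both assumptions, but together they reproduce the quoted 368 KSPS and 2.95 Mbps); the HD rows at 30 fps are our own illustration of the “10-100 MHz” and “100 Mbps to Gbps” ranges:

```python
RESOLUTIONS = {
    "144p": (256, 144),    # assumed 16:9 frame size
    "720p": (1280, 720),
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
}


def raw_rates(name, fps, bits_per_sample=8):
    """One ADC sample per pixel: return (samples/s, uncompressed bits/s)."""
    width, height = RESOLUTIONS[name]
    sps = width * height * fps
    return sps, sps * bits_per_sample


if __name__ == "__main__":
    for name, fps in [("144p", 10), ("720p", 10), ("1080p", 30), ("1440p", 30)]:
        sps, bps = raw_rates(name, fps)
        print(f"{name} @ {fps} fps: {sps / 1e6:.2f} MSPS, {bps / 1e6:.2f} Mbps")
```

At 1080p and 1440p the sample rate lands in the tens to over a hundred MSPS and the raw stream approaches 1 Gbps, which is the range the paper says is too expensive to digitize and compress on a harvesting budget.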

Using compression would require the device to be powered externally, of course, since compression hardware needs plenty of power for processing. And if you power it externally, you can just go to the shop and buy a damn GoPro.

You guys haven’t seen the cheap sunglasses you can buy from China on eBay that record HD video onto a microSD card? They obviously do compression, and they are powered by small lightweight batteries. They only weigh a few ounces. The argument that the weight of a battery is too much falls flat here. And the compromises they must make, being limited to 10 frames per second by the grossly inefficient way they encode the video, outweigh the issue of needing a tiny battery.

The point is, this whole thing looks like a solution in search of a problem!

Read the paper, not the botched summary from the HaD writer.

They just said their USB allowed 10FPS.

As the paper has been released for peer review, I suggest writing a review of their work raising your concerns. And if you find failings in their work, ask them to comment.

Or

You can just take cheap shots from the side lines.

Like, you *could* do raw PWM ADC of HD analogue video, but you’d need the comparator to switch very quickly between states, and very fast in general. That’d use a lot of power, and need an extremely high carrier frequency and bandwidth. And then that’s all supposed to work with backscattering, over a really decent range too.
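To put rough numbers on the switching-speed concern, here is a sketch. It assumes one triangle (PWM) period per pixel, no blanking, and that the receiver must distinguish 256 gray levels by pulse width; none of these assumptions is taken from the paper.

```python
def pwm_timing(width, height, fps, gray_levels=256):
    """Return (PWM period per pixel, pulse-width step per gray level), in seconds."""
    pixel_rate = width * height * fps   # pixels (PWM periods) per second
    period = 1.0 / pixel_rate
    return period, period / gray_levels


if __name__ == "__main__":
    for label, w, h, fps in [("720p @ 10 fps", 1280, 720, 10), ("1080p @ 60 fps", 1920, 1080, 60)]:
        period, step = pwm_timing(w, h, fps)
        print(f"{label}: {period * 1e9:.1f} ns per pixel, {step * 1e12:.0f} ps per gray level")
```

Under these assumptions that is about 108.5 ns per pixel and roughly 0.42 ns per gray level at 720p and 10 fps, shrinking to tens of picoseconds per level at 1080p and 60 fps.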

And yet the principle is completely utterly simple. The sort of thing a smart 12 year old might think up, if he’d grown up around all this information in the present day.

Yeah it does seem to have a massive ring of “BOLLOCKS!” to it.

Probably something that would benefit from a bit of an expert eye before posting it as a news item. I dunno enough to tell you conclusively, but it certainly doesn’t smell right.

They cut the power consumption at the camera, but not at the source they harvest the power from, right?

That’s right. But the idea wasn’t to use lower power overall. The idea was to get rid of weight and bulk of a power source where the camera is located, allowing them to put less mass on a pair of eyeglasses for example. Even increasing the power consumption at the receiver would be okay as long as it allowed for lower mass at the camera.

It’s right there in their demo video; the proposed use case is:

-wireless batteryless wearable camera on your face (GlassHole)

-phone transmitting continuous 1W

This way you are relieved of inconvenience associated with charging/swapping batteries in your wearable camera. You just have to recharge your phone every 4 hours, brilliant!!1

Consider it from another side: the phone can be in your backpack or on your body, so weight is no real issue (anything under 250 g is okay). The weight of the camera on the glasses IS an issue; heavy glasses are uncomfortable when worn for a long time.

A 250 g battery would give you from 25 Wh to 60 Wh, more than enough for such a device.
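That range works out if you assume a pack energy density of roughly 100 to 240 Wh/kg (our assumption; the comment gives only the end points). Runtime at the 1 W continuous transmit figure from the use case above is then just energy divided by load, ignoring conversion losses:

```python
def battery_energy_wh(mass_kg, wh_per_kg):
    """Stored energy for a given pack mass and (assumed) energy density."""
    return mass_kg * wh_per_kg


def runtime_hours(energy_wh, load_w):
    """Ideal runtime, ignoring converter losses and battery ageing."""
    return energy_wh / load_w


if __name__ == "__main__":
    for density in (100, 240):  # Wh/kg, assumed range
        energy = battery_energy_wh(0.250, density)
        print(f"{density} Wh/kg: {energy:.0f} Wh, {runtime_hours(energy, 1.0):.0f} h at 1 W")
```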

Can anybody explain in plain English why they can transmit information using next to no power? or is it BS? (it smells like BS).

Scattering is taking a signal and dispersing it in all directions. Backscattering is taking a signal and dispersing it mostly back to the transmitter. There doesn’t have to be much power on the dispersal end, a cleverly designed inanimate object would do. The transmitter on the other hand can be crazy powerful, think radar since that’s also using backscattering but will happily fry anything in front of the transmitter.

So, basically the receiver unit is also powering the camera by sending RF waves which the camera is harvesting? If so, might the overall power consumption be fairly high (assuming the power is radiated in all directions rather than aimed at the camera)? I can see how this might be beneficial if you really have to avoid a battery, although it might be a nuisance in terms of RF noise.

It’s not actually powering the camera (image detector), it’s powering the camera transmitter. The camera itself doesn’t need much power.

Think of it this way. Instead of using a modulated laser to transmit data to a distant receiver, the laser is mounted on the receiver and pointed at the transmitter unit where a mirror is used to modulate the reflected light on its way back to the receiver module.
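The mirror analogy can be made concrete with a toy baseband model (all numbers are arbitrary illustrations, not measurements): the reader’s own carrier leaks into its receiver as a constant offset, and the tag only switches its antenna between a strongly and a weakly reflecting state. The tag never generates a carrier; it just flips a switch, which is why it needs so little power.

```python
def backscatter_rx(bits, gamma_on=0.9, gamma_off=0.1, path_gain=1e-3,
                   leakage=0.5, samples_per_bit=8):
    """Amplitude seen at the reader: constant carrier leakage plus a tiny reflected part.

    gamma_on/gamma_off are the tag's two reflection coefficients; path_gain
    models the round-trip loss. All defaults are arbitrary toy values.
    """
    rx = []
    for b in bits:
        gamma = gamma_on if b else gamma_off
        rx.extend([leakage + path_gain * gamma] * samples_per_bit)
    return rx


def demodulate(rx, samples_per_bit=8):
    """Average each bit period and slice halfway between the two observed levels."""
    means = [sum(rx[i:i + samples_per_bit]) / samples_per_bit
             for i in range(0, len(rx), samples_per_bit)]
    threshold = (max(means) + min(means)) / 2  # assumes both symbols occur
    return [1 if m > threshold else 0 for m in means]


if __name__ == "__main__":
    data = [1, 0, 1, 1, 0, 0, 1, 0]
    print(demodulate(backscatter_rx(data)) == data)
```

The reflected part is a tiny fraction of the leaked carrier here; with real noise that is exactly the self-interference problem the article’s sub-carrier trick is meant to handle.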

> The transmitter on the other hand can be crazy powerful

Considering that in a practical application, the transmitter would be the cell phone in your pocket, that’s not really true.

For this application your cellphone _is_ crazy powerful. Think mW backscatter from a (multi) W transmitter.

Having a multi W transmitter on a phone would drain the battery in a couple of hours, so not really practical.

Is it a generally good idea to have a multi W transmitter operating continuously that close to a person? What kind of frequencies are we talking about here exactly?

It would be the same basic principle as “The Thing (listening device)”.

Call this technology ‘Carpenter’

Backscatter is not BS; that’s how the famous KGB Great Seal bug worked.

https://en.wikipedia.org/wiki/The_Thing_(listening_device)

The rest of the paper (the video part), however, is.

The Thing for video? Who let the “3letter” toys out?

For HD video it might be a slight bit unrealistic to expect the necessary bandwidth to be available, but for standard definition video or even just low framerate security cameras it would be perfect.

The short answer is no, because this would place no additional load on the radio transmitter. Having said that, I’m skeptical about the power claims.

Obvious spy tool is obvious :X

Just like https://en.wikipedia.org/wiki/The_Thing_(listening_device) it’s impressive and worrying.

Makes me think of the really battery-less ‘radio microphone’ that the Russians got into the US embassy in Moscow. Sound waves in the embassy room modified a resonator that modified the back (or forward?) scattered beam from a microwave illuminator across the road.

illuminator … illuminati … ooooooh o_O

This is pretty much like the RFID sensors that are in so many things.

Possible downside: it seems like encryption would not be possible with this technique. Maybe obscuring the signal, like old wireless phones did, but not actual keyed encryption.

You can get pretty close, though. You can pattern the PWM carrier in a way that only you know, and then recovering it would require knowing what you actually transmitted in the first place. Pretty much the equivalent of a one-time pad. You could still conceivably “spy” on something like this with the equivalent of multiple extremely sensitive directional antennas, but if the transmitting antenna is similarly directional it would be really, really hard to be able to get the “key”. Still might be able to recover *something*, though, so it’s definitely not a ‘secure’ link.
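One way to read this suggestion is to replace the periodic sub-carrier in the XOR stage with a secret pseudo-random bit sequence. A minimal sketch, using SHA-256 in counter mode as a hypothetical keystream generator: this is a toy for illustration, not a vetted cipher (no nonce, no authentication), and only a truly random key used once gives the actual one-time-pad guarantee.

```python
import hashlib


def keystream_bits(key: bytes, n: int) -> list:
    """Hypothetical keystream: SHA-256(key || counter), expanded MSB-first into bits."""
    bits = []
    counter = 0
    while len(bits) < n:
        block = hashlib.sha256(key + counter.to_bytes(8, "big")).digest()
        for byte in block:
            bits.extend((byte >> shift) & 1 for shift in range(7, -1, -1))
        counter += 1
    return bits[:n]


def scramble(bits, key: bytes):
    """XOR the PWM bit stream with the keystream; applying it twice restores the input."""
    return [b ^ k for b, k in zip(bits, keystream_bits(key, len(bits)))]


if __name__ == "__main__":
    pwm_bits = [1] * 50 + [0] * 50  # stand-in for one pixel's PWM period
    sent = scramble(pwm_bits, b"shared secret")
    print(sent[:16])
    print(scramble(sent, b"shared secret") == pwm_bits)
```

The receiver, knowing the key, XORs again before measuring pulse widths; without it, the pulse widths are hidden behind what looks like a random bit pattern.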

Why not? Take a digital signal, encrypt it, convert it to a suitable waveform, and send that to the output.

So, what happens when two of these are next to each other in the same room?

Correct me if I’m wrong but this backscatter concept only works with low bandwidth and low collision.

For low power consumption, it also requires very close proximity, no?

The RF source in the demo was attached to the person walking around and consuming more than 1W even though the ‘camera’ was < four feet away, right?